Preface

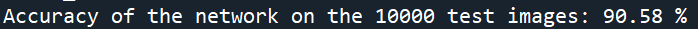

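This was a big assignment our teacher gave us a long time ago. It's an ancient model on a toy-sized dataset, so once I finished it I just left it alone. Later I read a few books on optimization and remembered this introductory experiment. My accuracy had never reached 90%, so I missed out on being praised in class, and that still bugged me a little. I went back and tweaked the code slightly, mainly letting the parameters train for more iterations. The accuracy went from 89.89% to 90.58%. Go figure.

Honestly, plenty of people have written this up already; it's everywhere. If you're after high accuracy, look elsewhere. There's a leaderboard, What is the class of this image ?, and if you're interested you can see how the papers there do it. Many beginners will jump straight to the code, or skim the post for the accuracy number and Ctrl + C the whole thing if it looks good enough. I've been there and I get it, but I'd advise against it. I'm writing this mainly to record my line of thinking, plus a few other takeaways.

The first part explains things step by step; the complete code is at the end.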

Overall approach

Data loading (augmentation, normalization)

from torch.utils.data import DataLoader
from torchvision import datasets, transforms

batch_size = 32  # same value as in the full train.py below

def transforms_RandomHorizontalFlip():
    # training set: random horizontal flip for augmentation, then ToTensor + Normalize
    transform_train = transforms.Compose([transforms.RandomHorizontalFlip(),
                                          transforms.ToTensor(),
                                          transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))])
    # test set: no augmentation, only ToTensor + Normalize
    transform = transforms.Compose([transforms.ToTensor(),
                                    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))])
    train_dataset = datasets.CIFAR10(root='../../data_hub/cifar10/data_1',
                                     train=True, transform=transform_train, download=True)
    test_dataset = datasets.CIFAR10(root='../../data_hub/cifar10/data_1',
                                    train=False, transform=transform, download=True)
    return train_dataset, test_dataset

# data augmentation: random flip
train_dataset, test_dataset = transforms_RandomHorizontalFlip()
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

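This part is mainly about the transforms chained together by transforms.Compose.

- RandomHorizontalFlip() is a data-augmentation transform built into torchvision. It flips the image horizontally at random, with probability 0.5 by default. There are others, for example:
  - RandomGrayscale() randomly converts the image to grayscale.
  - ColorJitter() randomly changes brightness, contrast and saturation.
  
  For more, see the post "Commonly used torchvision transforms functions in PyTorch".

- ToTensor() converts the image (a PIL image or an HWC uint8 array) into a CHW float tensor. This step includes a rescaling: pixel values go from 0 ~ 255 to 0 ~ 1. It must come before Normalize, because Normalize works on tensors.

- Normalize() standardizes each channel with the formula (x - mean) / std.
  - With mean = std = 0.5, it maps the [0, 1] output of ToTensor() onto [-1, 1].
  - With the ImageNet statistics used here, the range is instead roughly -2.1 to 2.6, depending on the channel.
  
  It is just a per-channel linear transform, so the image keeps all of its information and its relative contrast. It does not make the image invariant to translation, rotation, scaling or any other affine or geometric transform. What it does is center the inputs and put them on a comparable scale, which usually makes gradient descent converge faster.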
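The two steps above are easy to check numerically. Below is a framework-free sketch in numpy; `to_tensor_like` and `normalize_like` are hypothetical helpers that only mimic what ToTensor() and Normalize() do to the values. It pushes an all-black and an all-white pixel through both, first with the 0.5 parameters and then with the ImageNet ones.

```python
import numpy as np

# Per-channel ImageNet statistics, as passed to transforms.Normalize above
MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])

def to_tensor_like(img_uint8):
    """Mimic ToTensor(): HWC uint8 in [0, 255] -> CHW float in [0, 1]."""
    return img_uint8.astype(np.float32).transpose(2, 0, 1) / 255.0

def normalize_like(x, mean, std):
    """Mimic Normalize(): (x - mean) / std, applied channel by channel."""
    return (x - mean[:, None, None]) / std[:, None, None]

# Extreme pixel values: an all-black and an all-white 1x1 RGB image
black = to_tensor_like(np.zeros((1, 1, 3), dtype=np.uint8))
white = to_tensor_like(np.full((1, 1, 3), 255, dtype=np.uint8))

# mean = std = 0.5 maps [0, 1] exactly onto [-1, 1]
half = np.array([0.5, 0.5, 0.5])
print(normalize_like(black, half, half).ravel(), normalize_like(white, half, half).ravel())

# ImageNet statistics give a wider, channel-dependent range (about -2.1 to 2.6)
print(normalize_like(black, MEAN, STD).ravel().round(3))
print(normalize_like(white, MEAN, STD).ravel().round(3))
```

Neither transform is "lossy": both are invertible linear maps. That is why the displayed image can be recovered later by undoing them.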

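You may wonder why some blogs use transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)) while others use transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]). The values [0.485, 0.456, 0.406] and [0.229, 0.224, 0.225] are the per-channel mean and standard deviation computed on ImageNet. They were computed from millions of images, so using them is common practice, especially on widely used datasets.

This post uses the ImageNet values. If you train from scratch on your own dataset, you can compute that dataset's own mean and standard deviation instead. For the procedure, see "Computing the mean and standard deviation of an image dataset in PyTorch".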
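Here is a minimal sketch of that computation. It assumes the whole dataset fits in memory as one `(N, H, W, C)` uint8 numpy array. For torchvision's CIFAR10 dataset the raw training images are exposed as such an array in `train_dataset.data`. `channel_mean_std` is a hypothetical helper name. Statistics are taken on the [0, 1] scale that ToTensor() produces, so they can be passed straight to Normalize().

```python
import numpy as np

def channel_mean_std(images_uint8):
    """Per-channel mean and std of a stack of HWC uint8 images (N, H, W, C),
    computed on the [0, 1] scale that ToTensor() produces."""
    x = images_uint8.astype(np.float64) / 255.0
    # reduce over every axis except the last (channel) axis
    return x.mean(axis=(0, 1, 2)), x.std(axis=(0, 1, 2))

# Usage (assumption: the raw CIFAR-10 training images, not the transformed tensors)
# mean, std = channel_mean_std(train_dataset.data)
# transforms.Normalize(tuple(mean), tuple(std))
```

This takes the standard deviation over all pixels at once. Some scripts instead average per-image standard deviations, which gives slightly different numbers.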

Displaying images

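import numpy as np
from matplotlib import pyplot as plt
from torchvision.utils import make_grid

# show one batch (batch_size images) from the dataset together with its labels
classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

MEAN = np.array([0.485, 0.456, 0.406])  # must match the Normalize() parameters
STD = np.array([0.229, 0.224, 0.225])

def image_show(img):
    # undo Normalize (x * std + mean, per channel), CHW -> HWC, clip to [0, 1] for imshow
    npimg = np.transpose(img.numpy(), (1, 2, 0))
    plt.imshow(np.clip(npimg * STD + MEAN, 0, 1))
    plt.show()

def label_show(loader):
    dataiter = iter(loader)  # iterate over the images
    images, labels = next(dataiter)  # dataiter.next() no longer exists in recent PyTorch
    image_show(make_grid(images))
    print(' '.join('%5s' % classes[labels[j]] for j in range(len(labels))))
    return images, labels

#label_show(train_loader)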

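- This just displays images; it's the same snippet everyone uses. One caveat: the un-normalization has to match the Normalize() parameters. The widespread img / 2 + 0.5 only inverts Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)). With the ImageNet values the inverse is x * std + mean per channel; anything else shows wrong colors.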
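The displayed colors are only right if image_show inverts exactly the Normalize() that was applied. Below is a small numpy check on a fake 3×4×4 image. `unnormalize` is a hypothetical helper. It compares the exact inverse with the `/ 2 + 0.5` shortcut on data normalized with the ImageNet statistics.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406])[:, None, None]
STD = np.array([0.229, 0.224, 0.225])[:, None, None]

def unnormalize(x_chw):
    """Invert Normalize(MEAN, STD) and return an HWC array ready for plt.imshow."""
    img = x_chw * STD + MEAN
    return np.clip(img, 0.0, 1.0).transpose(1, 2, 0)

original = np.linspace(0.0, 1.0, 48).reshape(3, 4, 4)  # a fake CHW image in [0, 1]
normalized = (original - MEAN) / STD                    # what the DataLoader yields

restored = unnormalize(normalized)
naive = np.clip(normalized / 2 + 0.5, 0, 1).transpose(1, 2, 0)  # the "/ 2 + 0.5" shortcut

print(np.abs(restored - original.transpose(1, 2, 0)).max())  # essentially 0: exact inverse
print(np.abs(naive - original.transpose(1, 2, 0)).max())     # well above 0: colors are off
```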

Model and optimizer

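import torch
from torch import nn, optim

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # use the GPU if there is one, otherwise the CPU

from VggNet import *  # VggNet.py holds the model definition (see the full code below)

lr = 0.01        # learning rate
step_size = 10   # decay the learning rate every step_size epochs

model = Vgg16_Net().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=lr, momentum=0.8, weight_decay=0.001)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=step_size, gamma=0.5, last_epoch=-1)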

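- criterion: for multi-class classification, cross-entropy loss is the usual choice.

- optimizer: I use SGD with momentum, with these settings:
  - learning rate 0.01;
  - momentum 0.8 (the "best" value, found by trial-and-error tuning);
  - weight decay (the L2 penalty coefficient) 0.001.

  A quick explanation. The learning rate lr is the size of each gradient-descent step.
  - If the step is too large, the learning curve oscillates wildly. Near a minimum, the updates tend to bounce around it and fail to converge.
  - If the step is too small, learning is slow and the loss barely goes down.
  
  Common values are 0.1, 0.01 and 0.001.

  Momentum can be thought of as accelerating learning; I won't go into detail. Suppose SGD with momentum keeps seeing the same gradient g. Its step size then approaches $\frac{\epsilon \|g\|}{1-\alpha}$, where $\epsilon$ is the learning rate, g is the gradient and $\alpha$ is the momentum. So it helps to think of the momentum hyperparameter in terms of $\frac{1}{1-\alpha}$. For example, $\alpha = 0.9$ corresponds to a maximum speed 10 times that of plain gradient descent, and the 0.8 used here gives 5 times. Common values are 0.5, 0.9 and 0.99.

  $\alpha$ can also be adjusted over time, but tuning it is far less important than tuning the learning rate.

- scheduler: this adjusts the learning rate. I set the decay factor gamma to 0.5 and step_size to 10, so the learning rate is multiplied by 0.5 every 10 epochs.

  In plain terms, think of learning as walking down a mountain into a valley, with the learning rate as your stride. At the start you can take big strides. Keep the same stride near the bottom, though, and you will probably step right over the true bottom onto the opposite slope. The gradient then tells you to turn back, but with the same stride you will most likely just land on the slope you came from. So as you get close to the bottom (i.e. when the loss is already small), you need to shorten your stride.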
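The three effects described in this list can be checked numerically. Below is a framework-free sketch in plain Python; the function names are made up for illustration. It shows three things:

1. Gradient descent on f(x) = x² with a small, a larger and a too-large learning rate.
2. The step length of momentum SGD under a constant gradient, using the update form of `torch.optim.SGD` (v ← αv + g, step = lr·v). It settles at lr·g/(1 - α).
3. The StepLR schedule, assuming `scheduler.step()` runs once at the end of every epoch as in the training loop.

```python
import math

def gd_on_parabola(lr, x0=1.0, steps=20):
    """Plain gradient descent on f(x) = x**2 (gradient 2x); returns every iterate."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - lr * 2 * xs[-1])  # x <- x - lr * f'(x)
    return xs

def momentum_step_length(lr, alpha, g=1.0, steps=200):
    """Step length of momentum SGD after `steps` updates with a constant gradient g,
    using the update form of torch.optim.SGD: v <- alpha * v + g, x <- x - lr * v."""
    v = 0.0
    for _ in range(steps):
        v = alpha * v + g
    return lr * v

def step_lr(base_lr, epoch, step_size=10, gamma=0.5):
    """Learning rate used in the (0-based) `epoch` when StepLR.step() runs once per epoch."""
    return base_lr * gamma ** (epoch // step_size)

print(gd_on_parabola(0.1)[:4])  # small lr: x shrinks steadily towards 0
print(gd_on_parabola(0.9)[:4])  # larger lr: x flips sign every step, bouncing across the minimum
print(gd_on_parabola(1.1)[-1])  # too large: the bouncing grows and diverges
print(momentum_step_length(0.01, 0.9), momentum_step_length(0.01, 0.8))  # about 10x and 5x the lr
print([step_lr(0.01, e) for e in (0, 10, 20, 30, 40)])  # halves every 10 epochs
```

With epoch_num = 50, the last ten epochs therefore train with 0.01 × 0.5⁴ = 0.000625.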

Training

import time

loss_list = []  # per-batch losses, for plotting the loss curve later
start = time.time()

# train (epoch_num and num_print are defined in the full train.py below)
for epoch in range(epoch_num):
    model.train()  # training mode: Dropout active, BatchNorm uses batch statistics
    running_loss = 0.0
    for i, (inputs, labels) in enumerate(train_loader, 0):
        inputs, labels = inputs.to(device), labels.to(device)  # remember to move the data to the GPU
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)  # already on `device`, no .to(device) needed
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        loss_list.append(loss.item())
        if i % num_print == num_print - 1:
            print('[%d epoch, %d] loss: %.6f' % (epoch + 1, i + 1, running_loss / num_print))
            running_loss = 0.0
    lr_1 = optimizer.param_groups[0]['lr']
    print('learn_rate : %.15f' % lr_1)
    scheduler.step()  # once per epoch, after the batch loop

end = time.time()
print('time:{}'.format(end - start))

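- optimizer.zero_grad() resets the gradients to zero. PyTorch accumulates gradients, so the old ones must be cleared before computing new ones. Otherwise the gradients of successive batches add up.

- loss.backward() runs backpropagation. PyTorch's autograd automatically computes the gradient of the loss with respect to every parameter.

- optimizer.step() uses the gradients from backpropagation to update the model parameters.

- scheduler.step() updates the learning rate: every step_size epochs (the value set above), the learning rate is multiplied by gamma. Call it once per epoch, after the inner batch loop; otherwise the learning rate is updated one epoch too early.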
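In the loop above, the loss is printed when `i % num_print == num_print - 1`. Here is a small worked example of what that means for CIFAR-10's 50,000 training images and batch_size = 32. It assumes the DataLoader default `drop_last=False`, so the last, smaller batch is kept.

```python
import math

batch_size = 32
num_train = 50000                                      # CIFAR-10 training images
num_print = int(num_train // batch_size // 4)          # same formula as in train.py
batches_per_epoch = math.ceil(num_train / batch_size)  # last partial batch is kept (drop_last=False)

# 0-based batch indices i at which the loop prints the running loss
print_at = [i for i in range(batches_per_epoch) if i % num_print == num_print - 1]
print(num_print, batches_per_epoch, print_at)  # 390 1563 [389, 779, 1169, 1559]

total_steps = batches_per_epoch * 50  # optimizer steps in 50 epochs = len(loss_list)
print(total_steps)                    # 78150
```

So the loss is printed 4 times per epoch. The last 3 batches of each epoch are trained on, but their losses never appear in a printed average, because running_loss is reset at the start of the next epoch.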

Testing

# test
model.eval()  # inference mode: Dropout off, BatchNorm uses its running statistics
correct = 0.0
total = 0
with torch.no_grad():  # no gradients are needed at test time
    for inputs, labels in test_loader:
        inputs, labels = inputs.to(device), labels.to(device)  # move inputs and targets to the GPU at every step
        outputs = model(inputs)
        pred = outputs.argmax(dim=1)  # index of the largest value in each row = predicted class
        total += inputs.size(0)
        correct += torch.eq(pred, labels).sum().item()
print('Accuracy of the network on the 10000 test images: %.2f %%' % (100.0 * correct / total))

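- model.eval() switches the model to evaluation mode. It does not turn off gradient computation. Instead, it changes how certain layers behave:
  - Dropout is disabled.
  - BatchNorm uses the running mean and variance collected during training, instead of the current batch's statistics.
  
  Vgg16_Net contains both kinds of layer, so forgetting model.eval() can noticeably change the test results.

- with torch.no_grad() disables gradient tracking inside the block. It is independent of model.eval() and does not check for any "test mode". Since no backpropagation happens at test time, it simply saves the memory and computation that autograd would otherwise spend.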
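To see concretely why model.eval() matters for Dropout, here is a numpy sketch of inverted dropout, the scheme `nn.Dropout` uses. In training mode each element is zeroed with probability p and the survivors are scaled by 1/(1 - p), so the expected value is unchanged. In eval mode the layer is the identity. The `dropout` function is a hypothetical stand-in, not PyTorch code.

```python
import numpy as np

def dropout(x, p, training, rng):
    """Inverted dropout: random zeroing plus 1/(1-p) scaling in training, identity in eval."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p  # keep each element with probability 1 - p
    return np.where(keep, x / (1.0 - p), 0.0)

rng = np.random.default_rng(0)
x = np.ones(10_000)
train_out = dropout(x, 0.5, training=True, rng=rng)   # like model.train()
eval_out = dropout(x, 0.5, training=False, rng=rng)   # like model.eval()
print(np.unique(train_out), train_out.mean())  # values are 0 or 2; the mean stays close to 1
```

Without model.eval(), every test prediction would go through this random masking, so the same image could get different predictions on different runs.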

Prediction results

Loss curve

Overall accuracy and the accuracy of each class.
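Per-class accuracy is the number of correct predictions for a label divided by the number of test samples with that label. It has to count every sample of every batch. This matters if you copy a per-class loop from a tutorial written for batch_size = 4: make sure the inner loop runs over the whole batch. Below is a vectorized numpy sketch; `per_class_accuracy` is a hypothetical helper. To use it with the test loop, collect `pred.cpu().numpy()` and `labels.cpu().numpy()` from every batch and concatenate them.

```python
import numpy as np

def per_class_accuracy(preds, labels, num_classes=10):
    """Accuracy for each class: correct predictions / number of samples with that label."""
    preds, labels = np.asarray(preds), np.asarray(labels)
    total = np.bincount(labels, minlength=num_classes)
    correct = np.bincount(labels[preds == labels], minlength=num_classes)
    # classes that never appear get NaN instead of a division-by-zero warning
    return np.divide(correct, total, out=np.full(num_classes, np.nan), where=total > 0)

preds = [0, 1, 1, 2, 2, 2]
labels = [0, 1, 2, 2, 2, 0]
acc = per_class_accuracy(preds, labels, num_classes=3)
print(acc)                                               # per-class accuracy
print((np.asarray(preds) == np.asarray(labels)).mean())  # overall accuracy
```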

Visualizing feature maps

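import os
import torch
from matplotlib import pyplot as plt

os.makedirs('./show', exist_ok=True)  # savefig fails if the folder does not exist
a = 1  # index of the conv layer currently being visualized

def viz(module, input):
    # forward *pre*-hook: input is the tuple of tensors fed INTO this Conv2d
    global a
    x = input[0][0].cpu()  # first sample of the batch, shape (C, H, W)
    for i in range(x.size()[0]):  # one image per channel
        plt.figure(figsize=(20, 20))
        plt.xticks([])  # hide x ticks
        plt.yticks([])  # hide y ticks
        plt.axis('off')  # hide the axes
        plt.imshow(x[i])
        plt.tight_layout()
        plt.savefig('./show/conv' + str(a) + '_' + str(i) + '.jpg')
        plt.close()  # free the figure; otherwise hundreds of open figures pile up
    a += 1

# the whole model was pickled with torch.save(model, ...); PyTorch >= 2.6 needs weights_only=False to load it
model = torch.load('../../model_hub/cifar10/model_907.pkl', map_location=device, weights_only=False)

dataiter = iter(test_loader)  # iterate over the images
images, labels = next(dataiter)

for name, m in model.named_modules():
    if isinstance(m, torch.nn.Conv2d):
        m.register_forward_pre_hook(viz)

model.eval()
with torch.no_grad():
    model(images[2].unsqueeze(0).to(device))  # feed the 3rd test image as a batch of one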

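During tuning it is useful to check whether your feature maps look reasonable. Feature maps are the activations produced by the convolutional layers, not the kernels themselves. Looking directly at what happens inside the model while it runs also helps you understand how it performs.

For CIFAR-10 my feature maps look like this; this is only a part of them. I plot the features at every convolutional layer, and VGG16 has 13 such layers.

Note that viz is registered with register_forward_pre_hook, so each plot shows the input fed into a Conv2d. That is the output of the layer before it; for the first conv it is the normalized image itself. To plot each conv's own output, use register_forward_hook with a hook of the form (module, input, output).

For a detailed explanation, see "Using hooks in PyTorch to print intermediate feature maps, measure a network's computational cost, etc.".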
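The difference between the two hook types comes down to when they are called and with what arguments. A forward pre-hook is called as hook(module, input) before forward runs. A forward hook is called as hook(module, input, output) after it. In both, `input` is a tuple of the positional arguments, which is why viz reads `input[0]`. Below is a toy, framework-free mimic of that dispatch order. `ToyModule` is hypothetical and only illustrates the calling convention; it is not PyTorch's implementation.

```python
class ToyModule:
    """Hypothetical stand-in for nn.Module that only models hook dispatch order."""

    def __init__(self, fn):
        self.fn = fn
        self._pre_hooks, self._hooks = [], []

    def register_forward_pre_hook(self, hook):
        self._pre_hooks.append(hook)

    def register_forward_hook(self, hook):
        self._hooks.append(hook)

    def __call__(self, *inputs):
        for hook in self._pre_hooks:  # sees only the inputs, before forward runs
            hook(self, inputs)
        output = self.fn(*inputs)
        for hook in self._hooks:      # sees the inputs and the computed output
            hook(self, inputs, output)
        return output

seen = []
double = ToyModule(lambda x: 2 * x)
double.register_forward_pre_hook(lambda m, inp: seen.append(("pre", inp[0])))
double.register_forward_hook(lambda m, inp, out: seen.append(("post", out)))
result = double(3)
print(seen)  # [('pre', 3), ('post', 6)]
```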

Full code

train.py

import torch
from torch import nn, optim, tensor
from torch.utils.data import DataLoader
from torchvision.utils import make_grid
from torchvision import datasets, transforms
import numpy as np
from matplotlib import pyplot as plt
import time

# global settings
batch_size = 32                            # number of samples fed in per step
num_print = int(50000 // batch_size // 4)  # print the loss every num_print batches (4 times per epoch)
epoch_num = 50                             # total number of epochs
lr = 0.01                                  # learning rate
step_size = 10                             # update the learning rate every step_size epochs

def transforms_RandomHorizontalFlip():
    # training set: random horizontal flip for augmentation, then ToTensor + Normalize
    transform_train = transforms.Compose([transforms.RandomHorizontalFlip(),
                                          transforms.ToTensor(),
                                          transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))])
    # test set: no augmentation, only ToTensor + Normalize
    transform = transforms.Compose([transforms.ToTensor(),
                                    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))])
    train_dataset = datasets.CIFAR10(root='../../data_hub/cifar10/data_1',
                                     train=True, transform=transform_train, download=True)
    test_dataset = datasets.CIFAR10(root='../../data_hub/cifar10/data_1',
                                    train=False, transform=transform, download=True)
    return train_dataset, test_dataset

# data augmentation: random flip
train_dataset, test_dataset = transforms_RandomHorizontalFlip()
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

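# show one batch (batch_size images) from the dataset together with its labels
classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

MEAN = np.array([0.485, 0.456, 0.406])  # must match the Normalize() parameters
STD = np.array([0.229, 0.224, 0.225])

def image_show(img):
    # undo Normalize (x * std + mean, per channel), CHW -> HWC, clip to [0, 1] for imshow
    npimg = np.transpose(img.numpy(), (1, 2, 0))
    plt.imshow(np.clip(npimg * STD + MEAN, 0, 1))
    plt.show()

def label_show(loader):
    dataiter = iter(loader)  # iterate over the images
    images, labels = next(dataiter)  # dataiter.next() no longer exists in recent PyTorch
    image_show(make_grid(images))
    print(' '.join('%5s' % classes[labels[j]] for j in range(len(labels))))
    return images, labels

#label_show(train_loader)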

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
from VggNet import *  # VggNet is the name of the .py file that holds the VGG model code
model = Vgg16_Net().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=lr, momentum=0.8, weight_decay=0.001)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=step_size, gamma=0.5, last_epoch=-1)

loss_list = []
start = time.time()

# train
for epoch in range(epoch_num):
    model.train()  # training mode: Dropout active, BatchNorm uses batch statistics
    running_loss = 0.0
    for i, (inputs, labels) in enumerate(train_loader, 0):
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)  # already on `device`
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        loss_list.append(loss.item())
        if i % num_print == num_print - 1:
            print('[%d epoch, %d] loss: %.6f' % (epoch + 1, i + 1, running_loss / num_print))
            running_loss = 0.0
    lr_1 = optimizer.param_groups[0]['lr']
    print('learn_rate : %.15f' % lr_1)
    scheduler.step()  # once per epoch, after the batch loop

end = time.time()
# print('time:{}'.format(end - start))

#torch.save(model, './model.pkl')  # save the whole model
#model = torch.load('./model.pkl', weights_only=False)  # load it (PyTorch >= 2.6 needs weights_only=False for a pickled model)

# loss images show
plt.plot(loss_list, label='Minibatch cost')
plt.plot(np.convolve(loss_list, np.ones(200,) / 200, mode='valid'), label='Running average')  # 200-batch moving average
plt.ylabel('Cross Entropy')
plt.xlabel('Iteration')
plt.legend()
plt.show()

'''
images, labels = label_show(test_loader)
# print the model's predictions for this test batch
images, labels = images.to(device), labels.to(device)
outputs = model(images)
predicted = outputs.argmax(dim=1)
print('Predicted: ', ' '.join('%5s' % classes[predicted[j]] for j in range(len(predicted))))
'''

# test
model.eval()  # inference mode: Dropout off, BatchNorm uses its running statistics
correct = 0.0
total = 0
with torch.no_grad():  # no gradients are needed at test time
    for inputs, labels in test_loader:
        inputs, labels = inputs.to(device), labels.to(device)  # move inputs and targets to the GPU at every step
        outputs = model(inputs)
        pred = outputs.argmax(dim=1)  # index of the largest value in each row = predicted class
        total += inputs.size(0)
        correct += torch.eq(pred, labels).sum().item()
print('Accuracy of the network on the 10000 test images: %.2f %%' % (100.0 * correct / total))

class_correct = list(0. for i in range(10))
class_total = list(0. for i in range(10))
with torch.no_grad():
    for inputs, labels in test_loader:
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = model(inputs)
        pred = outputs.argmax(dim=1)  # index of the largest value in each row
        c = (pred == labels)
        for i in range(labels.size(0)):  # every sample in the batch
            label = labels[i].item()
            class_correct[label] += float(c[i])
            class_total[label] += 1

# accuracy of each class
for i in range(10):
    print('Accuracy of %5s : %.2f %%' % (classes[i], 100 * class_correct[i] / class_total[i]))

# show feature maps
a = 0

def viz(module, input):
    # forward pre-hook: input[0] is the tensor fed into this Conv2d
    global a
    x = input[0][0].cpu()  # first sample of the batch, shape (C, H, W)
    # print(x.device)
    min_num = min(4, x.size()[0])  # show at most this many channels
    plt.figure(figsize=(5 * min_num, 5))
    for i in range(min_num):
        plt.subplot(1, min_num, i + 1)
        plt.xticks([])  # hide x ticks
        plt.yticks([])  # hide y ticks
        plt.axis('off')  # hide the axes
        plt.imshow(x[i])
    plt.savefig('./' + str(a) + '.jpg', dpi=240)
    a += 1
    plt.show()

# the whole model was pickled with torch.save(model, ...); PyTorch >= 2.6 needs weights_only=False to load it
model = torch.load('../../model_hub/cifar10/model_907.pkl', map_location=device, weights_only=False)
dataiter = iter(test_loader)  # iterate over the images
images, labels = next(dataiter)
for name, m in model.named_modules():
    if isinstance(m, torch.nn.Conv2d):
        m.register_forward_pre_hook(viz)
model.eval()
with torch.no_grad():
    model(images[2].unsqueeze(0).to(device))  # feed the 3rd test image as a batch of one

VggNet.py

from torch import nn

class Vgg16_Net(nn.Module):
    def __init__(self):
        super(Vgg16_Net, self).__init__()
        # 2 conv layers and 1 max-pooling layer
        self.layer1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),  # (32-3+2)/1+1 = 32  32*32*64
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),  # (32-3+2)/1+1 = 32  32*32*64
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2)  # (32-2)/2+1 = 16  16*16*64
        )
        # 2 conv layers and 1 max-pooling layer
        self.layer2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),  # (16-3+2)/1+1 = 16  16*16*128
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # (16-3+2)/1+1 = 16  16*16*128
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2)  # (16-2)/2+1 = 8  8*8*128
        )
        # 3 conv layers and 1 max-pooling layer
        self.layer3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),  # (8-3+2)/1+1 = 8  8*8*256
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # (8-3+2)/1+1 = 8  8*8*256
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # (8-3+2)/1+1 = 8  8*8*256
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),  # (8-2)/2+1 = 4  4*4*256
        )
        # 3 conv layers and 1 max-pooling layer
        self.layer4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),  # (4-3+2)/1+1 = 4  4*4*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # (4-3+2)/1+1 = 4  4*4*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # (4-3+2)/1+1 = 4  4*4*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2)  # (4-2)/2+1 = 2  2*2*512
        )
        # 3 conv layers and 1 max-pooling layer
        self.layer5 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # (2-3+2)/1+1 = 2  2*2*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # (2-3+2)/1+1 = 2  2*2*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # (2-3+2)/1+1 = 2  2*2*512
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2)  # (2-2)/2+1 = 1  1*1*512
        )
        self.conv = nn.Sequential(
            self.layer1,
            self.layer2,
            self.layer3,
            self.layer4,
            self.layer5
        )
        self.fc = nn.Sequential(
            nn.Linear(512, 512),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(512, 256),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(256, 10)  # 10 CIFAR-10 classes
        )

    def forward(self, x):
        x = self.conv(x)
        x = x.view(-1, 512)  # flatten (N, 512, 1, 1) -> (N, 512)
        x = self.fc(x)
        return x
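The shape comments in VggNet.py apply the output-size formula (W - K + 2P) / S + 1, rounded down. Here is a pure-Python sketch that traces the spatial size through the five blocks. The 3×3 convs with padding 1 keep the size, and each 2×2 max pool with stride 2 halves it. For the 32×32 CIFAR-10 input the last block outputs 1×1×512, which is why `x.view(-1, 512)` and `nn.Linear(512, 512)` fit. The function names are illustrative only.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Spatial output size of Conv2d / MaxPool2d: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1

def vgg16_feature_size(input_size, num_blocks=5):
    """Height/width after the 5 VGG blocks (a block's convs all keep the size, so one call stands for them)."""
    size = input_size
    for _ in range(num_blocks):
        size = conv_out(size)                                 # 3x3 conv, stride 1, padding 1: unchanged
        size = conv_out(size, kernel=2, stride=2, padding=0)  # MaxPool2d(2, 2): halved
    return size

for w in (32, 64):
    s = vgg16_feature_size(w)
    print(w, s, 512 * s * s)  # input size, final feature-map size, flattened length
# 32 1 512
# 64 2 2048
```

A 64×64 input would leave 2×2×512 = 2048 features, so the first Linear layer would have to change accordingly.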

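There's actually a more concise way to write the network, but I was afraid beginners wouldn't follow it, so I left it out...

Oh well, here it is anyway. Before using this network, first change the model instantiation part of train.py: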

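conv_arch = ((2, 3, 64), (2, 64, 128), (3, 128, 256), (3, 256, 512), (3, 512, 512))  # (num_convs, in_channels, out_channels) per block
fc_features = 512  # 512 channels * 1 * 1 after five poolings of a 32x32 input
fc_hidden_units = 512
model = Vgg16_Net(conv_arch, fc_features, fc_hidden_units).to(device)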

Then change the contents of VggNet.py to:

# conv_arch = ((2,3,64),(2,64,128),(3,128,256),(3,256,512),(3,512,512))
# fc_features = 512  (512*1*1 for 32x32 CIFAR-10 inputs; 512*2*2 would be for 64x64 inputs)
# fc_hidden_units = 512
from torch import nn

def vgg_block(num_convs, in_channels, out_channels):
    # num_convs x (3x3 conv + BatchNorm + ReLU), followed by one 2x2 max pool that halves H and W
    blk = []
    for i in range(num_convs):
        if i == 0:
            blk.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=1, padding=1))
        else:
            blk.append(nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1))
        blk.append(nn.BatchNorm2d(out_channels))
        blk.append(nn.ReLU(inplace=True))
    blk.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*blk)

class Vgg16_Net(nn.Module):
    def __init__(self, conv_arch, fc_features, fc_hidden_units, num_classes=10):
        super(Vgg16_Net, self).__init__()
        self.conv_arch = conv_arch
        self.fc_features = fc_features
        self.fc_hidden_units = fc_hidden_units
        self.conv_layer = nn.Sequential()
        for i, (num_convs, in_channels, out_channels) in enumerate(self.conv_arch):
            self.conv_layer.add_module('vgg_block_' + str(i + 1), vgg_block(num_convs, in_channels, out_channels))
        self.fc_layer = nn.Sequential(
            nn.Linear(self.fc_features, self.fc_features),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(self.fc_features, self.fc_hidden_units),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(self.fc_hidden_units, num_classes)  # CIFAR-10 has 10 classes
        )

    def forward(self, x):
        x = self.conv_layer(x)
        x = x.view(-1, self.fc_features)
        x = self.fc_layer(x)
        return x
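To check that this compact version builds the same convolutional stack as the explicit one, you can expand conv_arch by hand. Below is a pure-Python sketch; `expand_conv_arch` is a hypothetical helper that lists the (in_channels, out_channels) of every Conv2d that vgg_block would create. It confirms 13 convolutional layers, which together with the 3 fully connected layers make up the "16" in VGG16. It also counts their weights and biases (BatchNorm and FC parameters are left out).

```python
conv_arch = ((2, 3, 64), (2, 64, 128), (3, 128, 256), (3, 256, 512), (3, 512, 512))

def expand_conv_arch(arch):
    """List the (in_channels, out_channels) of every Conv2d that vgg_block would create."""
    convs = []
    for num_convs, in_channels, out_channels in arch:
        for i in range(num_convs):
            # the first conv of a block changes the channel count, the rest keep it
            convs.append((in_channels if i == 0 else out_channels, out_channels))
    return convs

convs = expand_conv_arch(conv_arch)
print(len(convs))  # 13
# 3x3 conv weights plus biases only
print(sum(cin * cout * 3 * 3 + cout for cin, cout in convs))  # 14714688
```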

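Congratulations on having the patience to read this far. I've actually put a model with 90.7% accuracy here. Only halfway through writing this post did I discover that I had already passed 90% earlier and simply forgotten... That had me tweaking parameters and retraining all over again, although the new result wasn't bad either.

Help yourself to the model. I used to share it for free on CSDN, but the system kept raising its price, from 0 points all the way to 48. Some kids messaged me about it, and I saw the messages a bit late. So, as people asked, I've put it on Baidu Netdisk:

Link: https://pan.baidu.com/s/14gWN3V3KSUeD–2Vmo9AqA

Extraction code: 2333

The code for loading it is already in train.py.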
