Concise Implementation of Linear Regression

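In each epoch, we make one complete pass over the dataset (train_data), repeatedly drawing a minibatch of inputs and the corresponding labels. For each minibatch, we perform the following steps:

- Call net(X) to generate predictions and compute the loss l (forward propagation).

- Run backpropagation to compute the gradients.

- Call the optimizer to update the model parameters.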
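The three steps above can be sketched on a toy problem that needs nothing beyond PyTorch. The data (y = 3x + 1 plus small noise), the batch size of 20, and the learning rate are assumptions chosen only for illustration; they are not taken from the original post.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy data: y = 3x + 1 plus a little Gaussian noise
X_all = torch.randn(200, 1)
y_all = 3 * X_all + 1 + 0.01 * torch.randn(200, 1)

net = nn.Linear(1, 1)
loss = nn.MSELoss()
trainer = torch.optim.SGD(net.parameters(), lr=0.1)

for epoch in range(20):
    for i in range(0, 200, 20):          # fixed minibatches of 20 examples
        X, y = X_all[i:i + 20], y_all[i:i + 20]
        l = loss(net(X), y)              # 1. forward pass and loss
        trainer.zero_grad()              # clear gradients left from the last step
        l.backward()                     # 2. backpropagation
        trainer.step()                   # 3. parameter update

final_loss = loss(net(X_all), y_all).item()
print(f'final loss {final_loss:f}')
```

Calling zero_grad() before backward() matters because PyTorch accumulates gradients across backward() calls by default.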

import torch
from torch import nn
from torch.utils import data
from d2l import torch as d2l

# Read the data
def load_array(data_arrays, batch_size, is_train=True):
    """Construct a PyTorch data iterator."""
    dataset = data.TensorDataset(*data_arrays)
    return data.DataLoader(dataset, batch_size, shuffle=is_train)

if __name__ == "__main__":
    # Generate the dataset
    true_w = torch.tensor([2, -3.4])
    true_b = 4.2
    features, labels = d2l.synthetic_data(true_w, true_b, 1000)  # randomly generate 1000 examples
    batch_size = 10
    data_iter = load_array((features, labels), batch_size)
    # Use iter to construct a Python iterator and next to fetch its first item.
    print(next(iter(data_iter)))

    # Define the model
    net = nn.Sequential(nn.Linear(2, 1))

    # Initialize the model parameters.
    # Deep learning frameworks usually provide predefined ways to initialize parameters.
    # Here each weight is sampled from a normal distribution with mean 0 and
    # standard deviation 0.01, and the bias is initialized to zero.
    net[0].weight.data.normal_(0, 0.01)
    net[0].bias.data.fill_(0)
    print("initial w:", net[0].weight.data)
    print("initial b:", net[0].bias.data)

    # Define the loss function.
    # The MSELoss class computes the mean squared error (the squared L2 norm).
    # By default it returns the average loss over all examples.
    loss = nn.MSELoss()

    # Define the optimization algorithm
    trainer = torch.optim.SGD(net.parameters(), lr=0.03)

    # Train
    num_epochs = 3
    for epoch in range(num_epochs):
        for X, y in data_iter:
            l = loss(net(X), y)   # loss on the current minibatch
            trainer.zero_grad()   # reset the parameter gradients to zero
            l.backward()          # backpropagate to compute the gradients
            trainer.step()        # update all parameters
        l = loss(net(features), labels)  # loss on the full dataset after each epoch
        print(f'epoch {epoch + 1}, loss {l:f}')

    w = net[0].weight.data
    print("estimation error of w:", true_w - w.reshape(true_w.shape))
    b = net[0].bias.data
    print("estimation error of b:", true_b - b)
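The listing above relies on d2l.synthetic_data, whose body is not shown in this post. The following is only a sketch of what such a helper plausibly does, assuming it draws standard-normal features and adds Gaussian noise with standard deviation 0.01 to y = Xw + b. The name synthetic_data_sketch and the noise_std parameter are hypothetical, and the actual d2l implementation may differ between versions.

```python
import torch

def synthetic_data_sketch(w, b, num_examples, noise_std=0.01):
    """Hypothetical stand-in for d2l.synthetic_data: y = Xw + b + noise."""
    X = torch.normal(0, 1, (num_examples, len(w)))   # standard-normal features
    y = torch.matmul(X, w) + b                        # noiseless linear labels
    y += torch.normal(0, noise_std, y.shape)          # additive Gaussian noise
    return X, y.reshape((-1, 1))                      # labels as a column vector

torch.manual_seed(0)
true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data_sketch(true_w, true_b, 1000)
print(features.shape, labels.shape)
```

Returning the labels with shape (n, 1) keeps them aligned with the (n, 1) output of nn.Linear(2, 1), so nn.MSELoss compares matching shapes without broadcasting.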

Implementation of Softmax Regression from Scratch

import torch

from torch import nn

from d2l import torch as d2l

from IPython import display

# Define softmax
# X = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
# print("sum of X along dim 0:", X.sum(0, keepdim=True))
# print("sum of X along dim 1:", X.sum(1, keepdim=True))

def softmax(X):
    X_exp = torch.exp(X)
    partition = X_exp.sum(1, keepdim=True)
    return X_exp / partition  # broadcasting divides each row by its own sum

# X = torch.normal(0, 1, (2, 5))  # draw a (2, 5) tensor from a normal distribution with mean 0 and standard deviation 1
# X_prob = softmax(X)
# print("X_prob:", X_prob)
# print("row sums of X_prob:", X_prob.sum(1))

# Define the model
def net(X):
    # Note: before passing data to the model, we use reshape to flatten each original image into a vector.
    return softmax(torch.matmul(X.reshape((-1, W.shape[0])), W) + b)

# Define the loss function
# y = torch.tensor([0, 2])
# y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])
# print("y_hat", y_hat[[0, 1], y])

def cross_entropy(y_hat, y):
    # Pick the predicted probability of the true class for each example
    return -torch.log(y_hat[range(len(y_hat)), y])

# cross_entropy(y_hat, y)

# Classification accuracy
def accuracy(y_hat, y):
    """Compute the number of correct predictions."""
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        y_hat = y_hat.argmax(axis=1)
    cmp = y_hat.type(y.dtype) == y
    return float(cmp.type(y.dtype).sum())

# print("accuracy:", accuracy(y_hat, y) / len(y))

def evaluate_accuracy(net, data_iter):
    """Compute the accuracy of a model on the given dataset."""
    if isinstance(net, torch.nn.Module):
        net.eval()  # set the model to evaluation mode
    metric = Accumulator(2)  # number of correct predictions, number of predictions
    with torch.no_grad():
        for X, y in data_iter:
            metric.add(accuracy(net(X), y), y.numel())
    return metric[0] / metric[1]

class Accumulator:
    """Accumulate sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]

# print("evaluate:", evaluate_accuracy(net, test_iter))

def train_epoch_ch3(net, train_iter, loss, updater):
    """Train a model for one epoch."""
    if isinstance(net, torch.nn.Module):
        net.train()  # set the model to training mode
    metric = Accumulator(3)  # sum of training loss, number of correct predictions, number of examples
    for X, y in train_iter:
        y_hat = net(X)
        l = loss(y_hat, y)
        if isinstance(updater, torch.optim.Optimizer):
            # Using PyTorch's built-in optimizer and loss function
            updater.zero_grad()
            l.backward()
            updater.step()
            metric.add(float(l) * len(y), accuracy(y_hat, y), y.numel())
        else:
            # Using a custom optimizer and loss function
            l.sum().backward()
            updater(X.shape[0])
            metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())
    # Return the training loss and training accuracy
    return metric[0] / metric[2], metric[1] / metric[2]

class Animator:
    """Plot data in an animation."""
    def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,
                 ylim=None, xscale='linear', yscale='linear',
                 fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,
                 figsize=(3.5, 2.5)):
        # Incrementally plot multiple lines
        if legend is None:
            legend = []
        d2l.use_svg_display()
        self.fig, self.axes = d2l.plt.subplots(nrows, ncols, figsize=figsize)
        if nrows * ncols == 1:
            self.axes = [self.axes, ]
        # Use a lambda to capture the arguments
        self.config_axes = lambda: d2l.set_axes(
            self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)
        self.X, self.Y, self.fmts = None, None, fmts

    def add(self, x, y):
        # Add multiple data points to the figure
        if not hasattr(y, "__len__"):
            y = [y]
        n = len(y)
        if not hasattr(x, "__len__"):
            x = [x] * n
        if not self.X:
            self.X = [[] for _ in range(n)]
        if not self.Y:
            self.Y = [[] for _ in range(n)]
        for i, (a, b) in enumerate(zip(x, y)):
            if a is not None and b is not None:
                self.X[i].append(a)
                self.Y[i].append(b)
        self.axes[0].cla()
        for x, y, fmt in zip(self.X, self.Y, self.fmts):
            self.axes[0].plot(x, y, fmt)
        self.config_axes()
        display.display(self.fig)
        display.clear_output(wait=True)

def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater):
    """Train a model (defined in Chapter 3)."""
    animator = Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0.3, 0.9],
                        legend=['train loss', 'train acc', 'test acc'])
    for epoch in range(num_epochs):
        train_metrics = train_epoch_ch3(net, train_iter, loss, updater)
        test_acc = evaluate_accuracy(net, test_iter)
        animator.add(epoch + 1, train_metrics + (test_acc, ))
    # Sanity checks on the metrics of the final epoch
    train_loss, train_acc = train_metrics
    assert train_loss < 0.5, train_loss
    assert train_acc <= 1 and train_acc > 0.7, train_acc
    assert test_acc <= 1 and test_acc > 0.7, test_acc

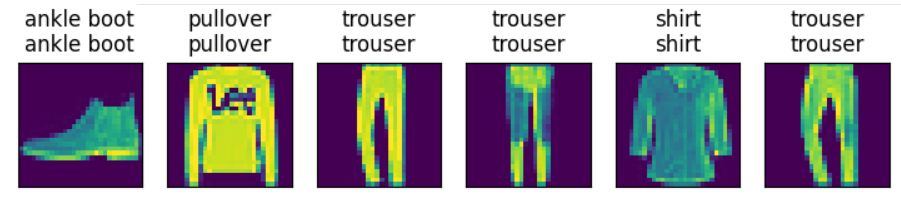

def predict_ch3(net, test_iter, n=6):
    """Predict labels."""
    # Take only the first minibatch from the test iterator
    for X, y in test_iter:
        break
    trues = d2l.get_fashion_mnist_labels(y)
    preds = d2l.get_fashion_mnist_labels(net(X).argmax(axis=1))
    titles = [true + "\n" + pred for true, pred in zip(trues, preds)]
    d2l.show_images(X[0:n].reshape((n, 28, 28)), 1, n, titles=titles[0:n])
    d2l.plt.show()

# Use the Fashion-MNIST dataset
batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# Initialize the model parameters
num_inputs = 784
num_outputs = 10
W = torch.normal(0, 0.01, size=(num_inputs, num_outputs), requires_grad=True)
b = torch.zeros(num_outputs, requires_grad=True)

lr = 0.1

def updater(batch_size):
    return d2l.sgd([W, b], lr, batch_size)

num_epochs = 10
train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, updater)  # train the model
d2l.plt.show()
predict_ch3(net, test_iter)  # predict

Screenshot of the predictions
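One caveat about the hand-written softmax is that torch.exp overflows for large inputs. The sketch below is an aside rather than part of the original code: it compares the naive version with a variant that subtracts the row-wise maximum first (a common stabilization trick), and checks the result against PyTorch's built-in F.cross_entropy. The function names softmax_naive and softmax_stable and the example logits are made up for illustration.

```python
import torch
import torch.nn.functional as F

def softmax_naive(X):
    X_exp = torch.exp(X)
    return X_exp / X_exp.sum(1, keepdim=True)

def softmax_stable(X):
    # Subtracting the row-wise maximum does not change the result
    # mathematically, but it keeps exp() from overflowing.
    X_shift = X - X.max(dim=1, keepdim=True).values
    X_exp = torch.exp(X_shift)
    return X_exp / X_exp.sum(1, keepdim=True)

def cross_entropy(y_hat, y):
    return -torch.log(y_hat[range(len(y_hat)), y])

# Hypothetical logits; the second row is large enough to overflow exp()
logits = torch.tensor([[1.0, 2.0, 3.0], [1000.0, 0.0, -1000.0]])
y = torch.tensor([2, 0])

naive = softmax_naive(logits)      # second row contains nan (inf / inf)
stable = softmax_stable(logits)    # second row is a valid distribution

loss_manual = cross_entropy(stable, y)
loss_builtin = F.cross_entropy(logits, y, reduction='none')
print(naive[1], stable[1])
print(loss_manual, loss_builtin)
```

The built-in loss takes raw logits rather than probabilities, which is why it can handle such inputs internally without a separate softmax call.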

Concise Implementation of Softmax Regression

import torch

from torch import nn

from d2l import torch as d2l

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# Initialize the model parameters.
# PyTorch does not implicitly reshape its inputs, so we place a flatten layer
# (nn.Flatten) before the linear layer to reshape the network input.
# nn.Sequential is not strictly necessary here.
net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))

def init_weights(m):
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)  # in-place initializer; nn.init.normal is deprecated

net.apply(init_weights)

loss = nn.CrossEntropyLoss()  # loss function
trainer = torch.optim.SGD(net.parameters(), lr=0.1)

# Train
num_epochs = 10
d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
d2l.plt.show()
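To see what the flatten layer does to the input shape, here is a small check that uses a random tensor as a stand-in for one Fashion-MNIST minibatch. The batch size of 4 and the random input are assumptions for illustration; no dataset is downloaded.

```python
import torch
from torch import nn

net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))

# Hypothetical stand-in for a minibatch of 4 grayscale 28x28 images
X = torch.rand(4, 1, 28, 28)

flat = net[0](X)      # nn.Flatten keeps dim 0 and merges the remaining dims
logits = net(X)       # one row of 10 class scores per image
print(flat.shape, logits.shape)
```

Without nn.Flatten, nn.Linear(784, 10) would be applied to the last dimension of size 28 and raise a shape mismatch error.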

Perceptron

ReLU Activation Function

import torch

from d2l import torch as d2l

d2l.plt.rcParams["font.sans-serif"] = "SimHei"  # SimHei font; only needed when plot text contains Chinese characters
d2l.plt.rcParams['axes.unicode_minus'] = False  # display minus signs correctly with that font

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
y = torch.relu(x)
# detach() returns a tensor that shares the same data but is cut off from the
# computation graph; a tensor that requires grad cannot be converted to NumPy for plotting.
d2l.plot(x.detach(), y.detach(), 'x', 'relu(x)', figsize=(5, 4))
d2l.plt.title("ReLU activation function")

# Derivative of the ReLU activation function
y.backward(torch.ones_like(x), retain_graph=True)
d2l.plt.figure()
d2l.plot(x.detach(), x.grad, 'x', 'grad of relu', figsize=(5, 4))
d2l.plt.title("Derivative of the ReLU activation function")

# Sigmoid activation function
y = torch.sigmoid(x)
d2l.plt.figure()  # create a new figure window
d2l.plot(x.detach(), y.detach(), 'x', 'sigmoid(x)', figsize=(5, 4))
d2l.plt.title("Sigmoid activation function")

d2l.plt.figure(figsize=(5, 4))  # create a new figure window
# Clear the previous gradients
x.grad.data.zero_()
y.backward(torch.ones_like(x), retain_graph=True)
d2l.plot(x.detach(), x.grad, 'x', 'grad of sigmoid')
d2l.plt.title("Derivative of the sigmoid activation function")

# Tanh activation function
d2l.plt.figure(figsize=(5, 4))  # create a new figure window
y = torch.tanh(x)
d2l.plot(x.detach(), y.detach(), 'x', 'tanh(x)')
d2l.plt.title("Tanh activation function")

# Clear the previous gradients
x.grad.data.zero_()
y.backward(torch.ones_like(x), retain_graph=True)
d2l.plt.figure(figsize=(5, 4))  # create a new figure window
d2l.plot(x.detach(), x.grad, 'x', 'grad of tanh')
d2l.plt.title("Derivative of the tanh activation function")

d2l.plt.show()

Screenshot of the run
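The gradients plotted above can be checked against the closed-form derivatives: relu'(x) is 1 for x > 0 and 0 otherwise, sigmoid'(x) = sigmoid(x)(1 - sigmoid(x)), and tanh'(x) = 1 - tanh(x)^2. The sketch below does this without any plotting; the helper grad_of is a hypothetical name introduced only for this check.

```python
import torch

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)

def grad_of(fn):
    """Return the elementwise derivative of fn evaluated at x (via autograd)."""
    if x.grad is not None:
        x.grad.zero_()                      # clear gradients from earlier calls
    fn(x).backward(torch.ones_like(x))      # same pattern as in the plots above
    return x.grad.clone()

relu_grad = grad_of(torch.relu)
sigmoid_grad = grad_of(torch.sigmoid)
tanh_grad = grad_of(torch.tanh)

xd = x.detach()
s = torch.sigmoid(xd)
print(torch.equal(relu_grad, (xd > 0).float()))
print(torch.allclose(sigmoid_grad, s * (1 - s), atol=1e-6))
print(torch.allclose(tanh_grad, 1 - torch.tanh(xd) ** 2, atol=1e-6))
```

Zeroing x.grad between calls is what keeps the three results independent, since backward() otherwise adds to the existing gradient.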

Implementation of a Multilayer Perceptron from Scratch

import torch
from torch import nn
from d2l import torch as d2l

# Multilayer perceptron implemented from scratch

def relu(X):
    """Activation function."""
    a = torch.zeros_like(X)  # an all-zero tensor with the same shape as X
    return torch.max(X, a)

def net(X):
    """Model."""
    X = X.reshape((-1, num_inputs))
    H = relu(X @ W1 + b1)  # "@" denotes matrix multiplication
    return (H @ W2 + b2)

if __name__ == "__main__":
    batch_size = 256
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
    # for i, (data, label) in enumerate(train_iter):
    #     print(i)
    #     print("data:", data)
    #     print("label", label)
    #     continue

    # Initialize the model parameters
    num_inputs, num_outputs, num_hiddens = 784, 10, 256
    W1 = nn.Parameter(torch.randn(
        num_inputs, num_hiddens, requires_grad=True) * 0.01)
    b1 = nn.Parameter(torch.zeros(num_hiddens, requires_grad=True))
    W2 = nn.Parameter(torch.randn(
        num_hiddens, num_outputs, requires_grad=True) * 0.01)
    b2 = nn.Parameter(torch.zeros(num_outputs, requires_grad=True))
    params = [W1, b1, W2, b2]

    # Loss function
    loss = nn.CrossEntropyLoss()

    num_epochs, lr = 5, 0.1
    updater = torch.optim.SGD(params, lr=lr)  # the optimizer is stochastic gradient descent
    d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)

    # Predict
    d2l.predict_ch3(net, test_iter)
    d2l.plt.show()

Screenshot of the run
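A quick way to sanity-check the from-scratch model before training is to push a random batch through it and compare the hand-written relu with torch.relu. This is a minimal sketch that uses plain tensors instead of nn.Parameter and a random tensor as a stand-in for real images; both choices are assumptions made to keep the check free of the d2l dependency.

```python
import torch

def relu(X):
    a = torch.zeros_like(X)
    return torch.max(X, a)

torch.manual_seed(0)
num_inputs, num_outputs, num_hiddens = 784, 10, 256
W1 = torch.randn(num_inputs, num_hiddens) * 0.01
b1 = torch.zeros(num_hiddens)
W2 = torch.randn(num_hiddens, num_outputs) * 0.01
b2 = torch.zeros(num_outputs)

def net(X):
    X = X.reshape((-1, num_inputs))   # flatten each image into a 784-vector
    H = relu(X @ W1 + b1)             # hidden layer with 256 units
    return H @ W2 + b2                # 10 raw class scores (logits)

X = torch.rand(2, 1, 28, 28)          # hypothetical stand-in for two images
out = net(X)
sample = torch.tensor([-1.0, 0.0, 2.0])
print(out.shape, relu(sample), torch.relu(sample))
```

The network returns logits rather than probabilities, which matches what nn.CrossEntropyLoss expects as input.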

Concise Implementation of a Multilayer Perceptron

import torch

from torch import nn

from d2l import torch as d2l

def init_weights(m):
    """Initialize the network weights."""
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)

if __name__ == "__main__":
    # Concise implementation of a multilayer perceptron
    # Define the network
    net = nn.Sequential(nn.Flatten(),
                        nn.Linear(784, 256),
                        nn.ReLU(),
                        nn.Linear(256, 10))
    net.apply(init_weights)  # initialize the network weights

    batch_size, lr, num_epochs = 256, 0.1, 10
    loss = nn.CrossEntropyLoss()
    trainer = torch.optim.SGD(net.parameters(), lr=lr)

    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
    d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
    d2l.plt.show()
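As a small illustration of what this network contains, the sketch below lists its parameter tensors and counts the trainable values. It rebuilds the same nn.Sequential so that it runs on its own; nothing here depends on d2l or on the dataset.

```python
from torch import nn

net = nn.Sequential(nn.Flatten(),
                    nn.Linear(784, 256),
                    nn.ReLU(),
                    nn.Linear(256, 10))

# nn.Flatten and nn.ReLU have no parameters; only the two linear layers do
for name, p in net.named_parameters():
    print(name, tuple(p.shape))

num_params = sum(p.numel() for p in net.parameters())
print(num_params)
```

The total is 784 * 256 + 256 + 256 * 10 + 10 = 203530, almost all of it in the first layer's weight matrix.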

Implementation of Dropout from Scratch

from torch import nn

from d2l import torch as d2l

import torch

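def dropout_layer(X, dropout):
    assert 0 <= dropout <= 1
    # In this case, all elements are dropped
    if dropout == 1:
        return torch.zeros_like(X)
    # In this case, all elements are kept
    if dropout == 0:
        return X
    # Keep each element with probability 1 - dropout, then rescale the
    # survivors so that the expected value of the output equals X
    mask = (torch.rand(X.shape) > dropout).float()
    return mask * X / (1.0 - dropout)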

class Net(nn.Module):
    def __init__(self, num_inputs, num_outputs, num_hiddens1, num_hiddens2, is_training=True):
        super(Net, self).__init__()
        self.num_inputs = num_inputs
        self.training = is_training
        self.lin1 = nn.Linear(num_inputs, num_hiddens1)
        self.lin2 = nn.Linear(num_hiddens1, num_hiddens2)
        self.lin3 = nn.Linear(num_hiddens2, num_outputs)
        self.relu = nn.ReLU()

    def forward(self, X):
        H1 = self.relu(self.lin1(X.reshape((-1, self.num_inputs))))
        # Use dropout only when training the model
        if self.training:
            # Add a dropout layer after the first fully connected layer
            H1 = dropout_layer(H1, dropout1)
        H2 = self.relu(self.lin2(H1))
        if self.training:
            # Add a dropout layer after the second fully connected layer
            H2 = dropout_layer(H2, dropout2)
        out = self.lin3(H2)
        return out

if __name__ == "__main__":
    # Dropout implemented from scratch
    dropout1, dropout2 = 0.2, 0.5
    num_inputs, num_outputs, num_hiddens1, num_hiddens2 = 784, 10, 256, 256
    net = Net(num_inputs, num_outputs, num_hiddens1, num_hiddens2)

    num_epochs, lr, batch_size = 10, 0.5, 256
    loss = nn.CrossEntropyLoss()
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
    trainer = torch.optim.SGD(net.parameters(), lr)
    d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
    d2l.plt.show()

Screenshot of the run
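The division by 1 - dropout in dropout_layer is what keeps the expected activation unchanged. A minimal check of that property, assuming an all-ones input of size 1000 x 1000 and a drop probability of 0.5 (both chosen only for illustration), could look like this:

```python
import torch

def dropout_layer(X, dropout):
    assert 0 <= dropout <= 1
    if dropout == 1:
        return torch.zeros_like(X)
    if dropout == 0:
        return X
    mask = (torch.rand(X.shape) > dropout).float()
    return mask * X / (1.0 - dropout)

torch.manual_seed(0)
X = torch.ones(1000, 1000)
out = dropout_layer(X, 0.5)

zero_fraction = (out == 0).float().mean().item()   # share of dropped elements
mean_after = out.mean().item()                     # should stay close to X.mean() == 1
print(zero_fraction, mean_after)
```

Roughly half of the entries become 0 and the survivors become 2, so the mean stays near 1 even though individual activations change.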

Concise Implementation of Dropout

from torch import nn

from d2l import torch as d2l

import torch

def init_weights(m):
    if type(m) == nn.Linear:
        nn.init.normal_(m.weight, std=0.01)  # the attribute is `weight`, not `weights`

if __name__ == "__main__":
    # Concise implementation of dropout
    dropout1, dropout2 = 0.2, 0.5
    num_epochs, lr, batch_size = 10, 0.5, 256
    loss = nn.CrossEntropyLoss()
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

    net = nn.Sequential(nn.Flatten(),
                        nn.Linear(784, 256),
                        nn.ReLU(),
                        # Add a dropout layer after the first fully connected layer
                        nn.Dropout(dropout1),
                        nn.Linear(256, 256),
                        nn.ReLU(),
                        # Add a dropout layer after the second fully connected layer
                        nn.Dropout(dropout2),
                        nn.Linear(256, 10))
    net.apply(init_weights)

    trainer = torch.optim.SGD(net.parameters(), lr)
    d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
    d2l.plt.show()
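Unlike the from-scratch version, nn.Dropout switches itself off based on the module's mode, so no explicit training flag is needed. The following sketch shows the difference between train() and eval() on a small all-ones tensor; the tensor shape and the drop probability of 0.5 are assumptions for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Dropout(0.5)
X = torch.ones(4, 6)

layer.train()
train_out = layer(X)   # each entry is either 0 or 1 / (1 - 0.5) = 2

layer.eval()
eval_out = layer(X)    # dropout is disabled: the output equals the input

print(train_out)
print(eval_out)
```

This is why evaluation code should call net.eval() before computing test accuracy, and training code should call net.train() again afterwards.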
