- 讨论最优化算法的部分没看懂

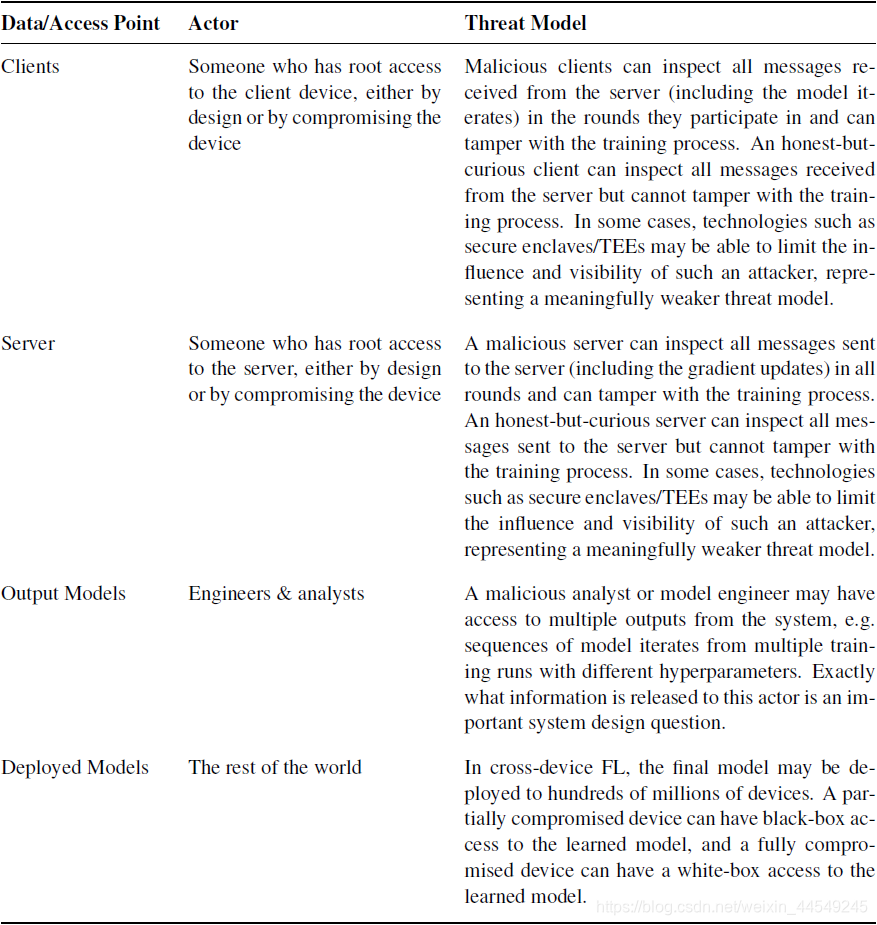

4.1 \, Actors, Threat Models, and Privacy in Depth

Various threat models for different adversarial actors (malicious / honest-but-curious) :

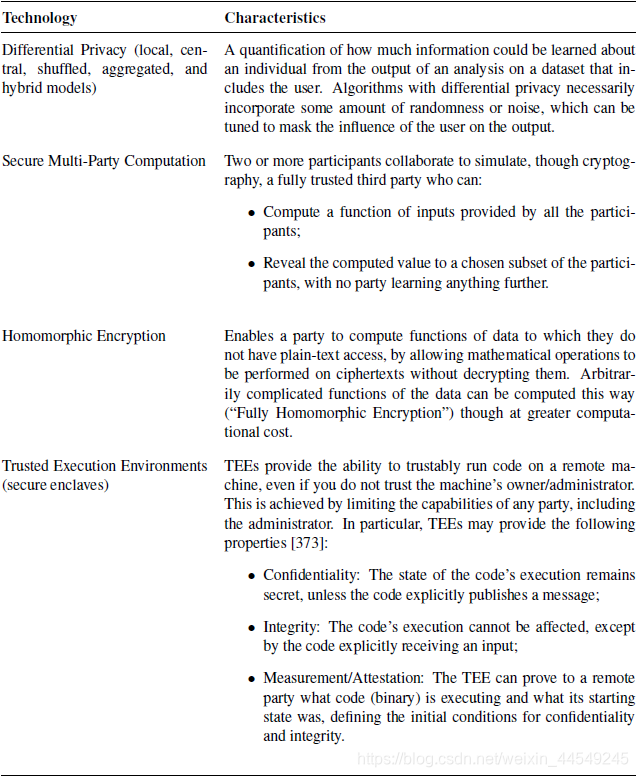

4.2 \, Tools and Technologies

Various technologies along with their characteristics :

- Secure multi-party computation

- Trusted execution environments

While secure multi-party computation and trusted execution environments offer general solutions to the problem of privately computing any function on distributed private data, many optimizations are possible when focusing on specific functionalities. (e.g. Secure aggregation, Secure shuffling, Private information retrieval)

- Differential privacy

\quad 在FL中考虑的DP,与传统的DP有一个区别是:相邻数据集的定义不同。FL中的要求更强 :In the context of FL, D and D’ correspond to decentralized datasets that are adjacent if D’ can be obtained from D by adding or subtracting all the records of a single client (user).

\quad 传统的差分隐私需要(假设)有一个可信的第三方。FL中应用DP则需要减少对trusted data curator的需求或依赖,具体方法有:-

Local differential privacy

在每个client把数据共享给服务器之前就对各自的数据应用差分隐私的处理。但由于对每个client的数据都进行了加噪,尽管很好的保护了隐私,但很大地影响了服务器收集到的数据集的utility。 -

Distributed differential privacy

每个client首先计算和编码一个minimal (application specific) focused report,然后把encoded reports发送给secure computation function,它的输出满足differential privacy。选择不同的secure computation function可以应对不同的threat models。Distributed differential privacy比Local differential privacy提供更好的utility,但它依赖于不同的setups和更强的假设。

Distributed differential privacy 模型举例 : Distributed DP via secure aggregation (通过安全聚合来确保central server获得聚合的结果,同时确保不会将各设备和参与者的参数暴露给central server)、Distributed DP via secure shuffling(由secure shuffler把每个client从LDP协议得到的数据进行随机化,最后再发送给central server)。 -

Hybrid differential privacy

根据用户不同的信任偏好对他们进行分类,再对不同的分组应用不同的模型。

-

4.3 \, Protections Against External Malicious Actors

-

Central Differential Privacy

user-level differential privacy used in FL’s iterative training process.

具体过程类似于之前看过的"-2- Deep Learning with Differential Privacy".

To limit or eliminate the information that could be learned about an individual from the iterates. -

Concealing the Iterates

在TEE模型下可以对参与者隐藏模型的 iterates (the newly updated versions of the model after each round of training) -

Repeated Analyses over Evolving Data , Preventing Model Theft and Misuse

4.4 \, Protections Against an Adversarial Server

- 依然应用Local differential privacy, Distributed differential privacy, Hybrid differential privacy,主要运用Distributed differential privacy

4.5 \, User Perception

- the Pufferfish framework of privacy [235]

该框架允许各个用户指定自己的隐私需求,允许analyst指定一类受保护的 predicates,对这些predicates应用差分隐私的处理,而其他的predicates可以在没有差分隐私或隐私预算较小的情况下进行学习。

Ref

Kairouz, P., McMahan, H. B., Avent, B., Bellet, A., Bennis, M., Bhagoji, A. N., … & d’Oliveira, R. G. (2019). Advances and open problems in federated learning. arXiv preprint arXiv:1912.04977.

4035

4035

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?