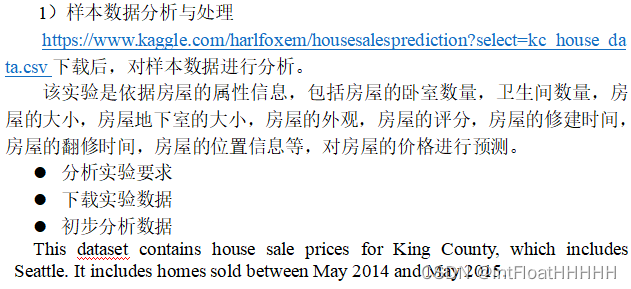

Ex2 实现房价预测的线性回归算法

Ex3 通过设置不同较大、合适和较小的学习率来对比不同的实验结果。

实验一代码:

# -*- coding: utf-8 -*-

import numpy as np

from math import e

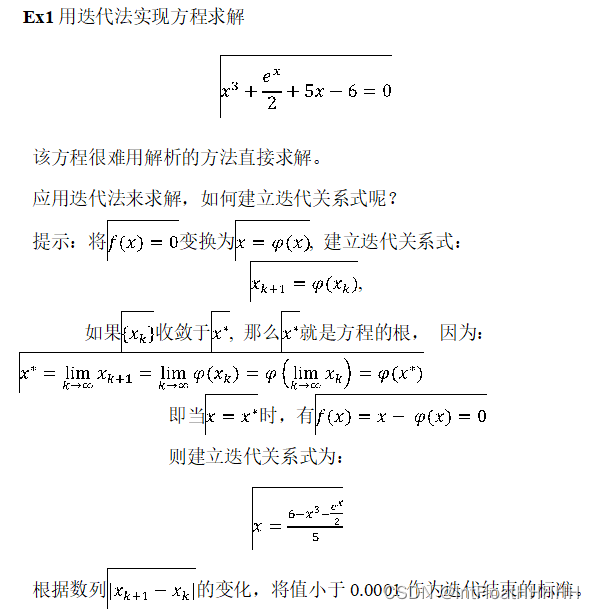

# 迭代函数. 收敛性由定理2保证.

def Phi(x):

return (6-x**3-e**x/2.0)/5.0

def main():

x = np.array([1]) #x0

x = np.append( x, Phi(x[0]) ) #x1

print(f"x[0] = {x[0]:.8f}")

print(f"x[1] = {x[1]:.8f}, 误差为{np.abs(x[1]-x[0]):.8f}")

k=1

while( np.abs(x[k]-x[k-1]) > 1e-4):

x = np.append( x, Phi(x[k]) ) #x_k+1

print(f"x[{k+1:d}] = {x[k+1]:.8f}, 误差为{np.abs(x[k+1]-x[k]):.8f}")

k+=1;

print(f"根为x[{k:d}] = {x[k]:.8f}")

if __name__ == "__main__":

main()

实验二代码:

import pandas as pd

import matplotlib.pyplot as plt

raw_data = pd.read_csv(r'D:\研一\机器学习\kc_house_data.csv')

raw_data #查看原数据

raw_data.info() #查看数据特征类型

raw_data.duplicated().sum() #检查是否有重复特征数据

X = raw_data.drop(['id', 'date', 'price'], axis=1)

y = raw_data['price']

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1026)

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

sc.fit(X_train)

X_train= sc.transform(X_train)

X_test = sc.transform(X_test)

import numpy as np

# 计算损失函数值

def J(X_b,y,theta):

try:

return np.sum((X_b.dot(theta) - y) ** 2) / 2 * len(y)

except:

return float('inf')

# 计算梯度(针对一组theta参数的偏导数)

def dJ(X_b,y,theta):

return X_b.T.dot(X_b.dot(theta) - y) / len(y)

# 以批量梯度下降法为例

def BGD(X_b,y,initial_theta,eta=0.01,iters=1e4,epsilon=1e-4):

theta = initial_theta # 将参数的初始值赋给theta变量

curr_iter = 1

while curr_iter < iters: # 判断迭代次数

gradient = dJ(X_b,y,theta) # 计算当前theta对应的梯度

last_theta = theta # 先保存theta的旧值

theta = theta - eta * gradient # 更新theta

cost_value = J(X_b,y,theta) # 计算更新后的theta对应的损失函数值

last_cost_value = J(X_b,y,last_theta) # 计算更新前的theta对应的损失函数值

# 如果两次损失函数的值相差的非常小,则认为损失函数已经最小了

if abs(cost_value - last_cost_value) < epsilon:

break

curr_iter += 1 # 每次迭代完毕,迭代次数加1

return theta,cost_value # 返回最佳的theta和对应的损失函数值

# 拼接训练样本的X_b

X_b = np.hstack([np.ones((len(X_train),1)),X_train])

# 初始化theta

initial_theta = np.random.random(size=(X_b.shape[1]))

theta,cost_value = BGD(X_b,y_train,initial_theta,iters=1e5)

# 拼接测试样本的X_b

X_b_test = np.hstack([np.ones((len(X_test),1)),X_test])

# 在测试集上预测

y_predict = X_b_test.dot(theta)

from sklearn.metrics import mean_squared_error

r2 = 1 - mean_squared_error(y_test,y_predict) / np.var(y_test)

print("在测试集上的R^2为 ",r2)

from sklearn.linear_model import LinearRegression

linreg=LinearRegression()

linreg.fit(X_train,y_train)

from sklearn import metrics

y_train_pred = linreg.predict(X_train)

y_test_pred = linreg.predict(X_test)

train_err = metrics.mean_squared_error(y_train, y_train_pred)

test_err = metrics.mean_squared_error(y_test, y_test_pred)

print( 'Train_RMSE分别是: {:.2f}'.format(train_err) )

print( 'Test_RMSE分别是: {:.2f}'.format(test_err) )

predict_score = linreg.score(X_test,y_test)

train_score = linreg.score(X_train,y_train)

print('训练集上的正确率为:{:.2f} '.format(train_score) )

print('测试集上的正确率为:{:.2f} '.format(predict_score) )

# 输出模型测试集上的效果

%matplotlib inline

import matplotlib.pyplot as plt

fig = plt.figure(figsize=(8,5))

plt.plot(y_test, label='real')

plt.plot(y_test_pred, label='predict')

plt.legend()

plt.show()

new_X = np.array([[3,1,1110,5550,1,1,0,3,8,1110,0,1888,0,98178,47,-122,1555,8888]])

print("自己希望购买的房屋数据:",new_X)

new_X = sc.transform(new_X)

print("预测希望购买的价格为:{}".format(linreg.predict(new_X)))

from sklearn.model_selection import GridSearchCV

model_select=LinearRegression()

parameters={'fit_intercept':['True','False'],'copy_X':['True','False'],'n_jobs':[1,2,3]}

model_gs=GridSearchCV(estimator=model_select,param_grid=parameters,verbose=3)

model_gs.fit(X_train,y_train)

print("最优参数:",model_gs.best_estimator_.get_params())

print("相关系数矩阵为:\n")

print(theta)实验3代码:

X=raw_data.drop(['id','date','price','sqft_lot15','sqft_living15','long','lat','zipcode','yr_built','sqft_basement'],axis=1)

y=raw_data['price']

X_train,X_test,y_train,y_test=train_test_split(X,y,test_size=0.3,random_state=1026)

sc.fit(X_train)

X_train=sc.transform(X_train)

X_test=sc.transform(X_test)

# 拼接训练样本的X_b

X_b = np.hstack([np.ones((len(X_train),1)),X_train])

# 初始化theta

initial_theta = np.random.random(size=(X_b.shape[1]))

# 学习率为0.1

theta,cost_value = BGD(X_b,y_train,initial_theta,eta=0.1,iters=1e5)

# 拼接测试样本的X_b

X_b_test = np.hstack([np.ones((len(X_test),1)),X_test])

# 在测试集上预测

y_predict = X_b_test.dot(theta)

from sklearn.metrics import mean_squared_error

r2 = 1 - mean_squared_error(y_test,y_predict) / np.var(y_test)

print("在测试集上的R^2为:",r2)

# 拼接训练样本的X_b

X_b = np.hstack([np.ones((len(X_train),1)),X_train])

# 初始化theta

initial_theta = np.random.random(size=(X_b.shape[1]))

# 学习率为0.01

theta,cost_value = BGD(X_b,y_train,initial_theta,eta=0.01,iters=1e5)

# 拼接测试样本的X_b

X_b_test = np.hstack([np.ones((len(X_test),1)),X_test])

# 在测试集上预测

y_predict = X_b_test.dot(theta)

from sklearn.metrics import mean_squared_error

r2 = 1 - mean_squared_error(y_test,y_predict) / np.var(y_test)

print("在测试集上的R^2为:",r2)总结:

通过本次实验,我深入学习了如何用迭代法去求解方程式,并且实现了房价预测的线性回归方程,实践了样本数据分析与处理、划分训练集和验证集、对特征进行归一化、线性回归算法建模及分析和特征选择等过程。

1534

1534

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?