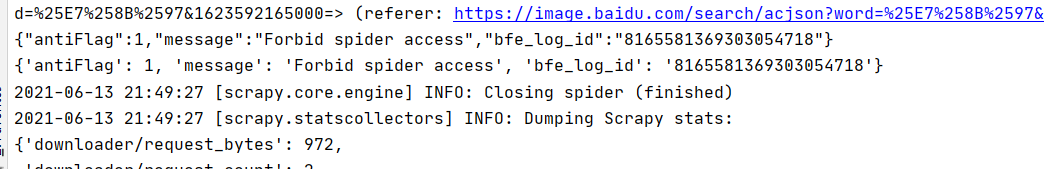

Baidu's anti-scraping measures are blocking your requests. The code below can be used as a reference.

baidu.py

import json
import re
import time
from urllib.parse import quote, urlencode

import scrapy
from fake_useragent import UserAgent
from scrapy.http import Request

from baiduimg.items import BaiduimgItem


class BaiduSpider(scrapy.Spider):
    name = 'baidu'
    # allowed_domains = ['www.xxx.com']
    # start_urls = ['http://www.xxx.com/']

    # A random User-Agent is picked once, when the class is defined.
    ua = UserAgent()
    header = {
        'User-Agent': ua.random
    }

    def start_requests(self):
        # Commented-out variant that targets the acjson endpoint:
        # base_url = "https://image.baidu.com/search/acjson?"
        # words = quote(input("Enter a keyword: "))
        # parm = {'word': words}
        # for page in range(1, self.settings.get("MAX_PAGE") + 1):
        #     parm['pn'] = 30 * page
        #     parm['tn'] = 'resultjson_com'
        #     parm['ipn'] = 'rj'
        #     parm['gsm'] = hex(30 * page)
        #     parm['queryWord'] = words
        #     timestamp = int(time.time()) * 1000
        #     parm[timestamp] = ''
        #     url = base_url + urlencode(parm)
        #     # print(timestamp)
        #     yield Request(url=url, headers=self.header, callback=self.parse)

        # Percent-encode the keyword so spaces and non-ASCII input stay valid in the URL.
        keywords = quote(input("Enter a keyword: "))
        for page in range(1, self.settings.getint("MAX_PAGE") + 1):
            url = ("http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=" + keywords
                   + "&pn=" + str(page * 20) + "&gsm=140&ct=&ic=0&lm=-1&width=0&height=0")
            yield Request(url=url, headers=self.header, callback=self.parse)

    def parse(self, response):
        # print(response.text)
        data = response.text
        # data=str(data).replace("")
        # Raw strings avoid invalid escape sequences such as "\,".
        er = r'"objURL":"(.*?)",'
        er1 = r'"fromPageTitle":"(.*?)",'
        objURL = re.compile(er, re.S).findall(data)
        fromPageTitle = re.compile(er1, re.S).findall(data)
        # image_list = re.findall(er, data, re.S)
        # print(d.encode())
        # zip() pairs each title with its URL and stops at the shorter list,
        # so a length mismatch cannot raise IndexError.
        for title, url in zip(fromPageTitle, objURL):
            item = BaiduimgItem()
            item['fromPageTitle'] = title.replace("<strong>", '').replace("<\\/strong>", '')
            item['objURL'] = url
            yield item
        # json_data=json.loads(response.text)
        # print(json_data['data'])
        # for data in json_data['data']:
        #     print(data['thumbURL'])
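The two steps the spider performs (building the page URL and pulling `objURL` / `fromPageTitle` out of the response text) can be tried outside Scrapy. This is only a minimal sketch: the helper names `build_flip_url` and `extract_images` and the `sample` string are made up for illustration, and the query parameters are simply copied from the URL in the spider above. Whether Baidu still returns the data in this shape is not something the sketch can guarantee.

```python
import re
from urllib.parse import urlencode

FLIP_URL = "http://image.baidu.com/search/flip"

# Same patterns as in the spider, written as raw strings.
OBJ_URL_RE = re.compile(r'"objURL":"(.*?)",', re.S)
TITLE_RE = re.compile(r'"fromPageTitle":"(.*?)",', re.S)


def build_flip_url(keyword, page, page_size=20):
    """Build one result-page URL; urlencode() percent-encodes the keyword."""
    params = {
        "tn": "baiduimage",
        "ie": "utf-8",
        "word": keyword,
        "pn": page * page_size,
        "gsm": "140",
        "ct": "",
        "ic": 0,
        "lm": -1,
        "width": 0,
        "height": 0,
    }
    return FLIP_URL + "?" + urlencode(params)


def extract_images(text):
    """Pair every fromPageTitle with its objURL and strip the <strong> markup."""
    results = []
    for title, url in zip(TITLE_RE.findall(text), OBJ_URL_RE.findall(text)):
        clean = title.replace("<strong>", "").replace("<\\/strong>", "")
        results.append({"fromPageTitle": clean, "objURL": url})
    return results


# Hypothetical fragment shaped like the text the regexes expect.
sample = (
    '{"objURL":"http://example.com/a.jpg",'
    '"fromPageTitle":"<strong>dog<\\/strong> photo",}'
)

if __name__ == "__main__":
    print(build_flip_url("狗", 1))
    print(extract_images(sample))
```

Using `urlencode()` for the whole query string keeps the keyword and the other parameters encoded consistently, which is easier to get right than concatenating strings by hand.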

items.py

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class BaiduimgItem(scrapy.Item):
    # define the fields for your item here like:
    fromPageTitle = scrapy.Field()
    objURL = scrapy.Field()

middlewares.py

# Define here the models for your spider middleware

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

# useful for handling different item types with a single interface
from itemadapter import is_item, ItemAdapter


class BaiduimgSpiderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, or item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Request or item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn't have a response associated.

        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class BaiduimgDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either:
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

pipelines.py

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import pymongo
from scrapy.exceptions import DropItem
from scrapy.http import Request
from scrapy.pipelines.images import ImagesPipeline


class BaiduimgmongoPipeline(object):
    def __init__(self, mongo_url, mongo_db):
        self.mongo_url = mongo_url
        self.mongo_db = mongo_db

    @classmethod
    def from_crawler(cls, crawler):
        # Read the MongoDB settings defined in settings.py.
        return cls(
            mongo_url=crawler.settings.get("MONGO_URL"),
            mongo_db=crawler.settings.get("MONGO_DB")
        )

    def open_spider(self, spider):
        self.client = pymongo.MongoClient(self.mongo_url)
        self.db = self.client[self.mongo_db]

    def process_item(self, item, spider):
        # Collection.insert() was removed in PyMongo 4; insert_one() replaces it.
        self.db[spider.name].insert_one(dict(item))
        print("Collection name in the database: " + spider.name)
        return item

    def close_spider(self, spider):
        self.client.close()


class BaiduimgPipeline(ImagesPipeline):
    def file_path(self, request, response=None, info=None, *, item=None):
        # Name the file after the last segment of the image URL.
        url = request.url
        file_name = url.split('/')[-1]
        return file_name

    def item_completed(self, results, item, info):
        # Keep the paths of the images that downloaded successfully.
        image_paths = [x['path'] for ok, x in results if ok]
        print(str(image_paths))
        if not image_paths:
            raise DropItem("Image download failed")
        return item

    def get_media_requests(self, item, info):
        yield Request(url=item['objURL'], dont_filter=False)
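One thing to watch in `file_path`: taking the last URL segment can keep a query string in the file name, and two different images that both end in, say, `1.jpg` would overwrite each other. Below is a rough sketch of an alternative naming helper. `image_file_name` is a hypothetical function, not part of Scrapy, and the `.jpg` fallback is an assumption. It hashes the full URL with SHA-1, similar in spirit to the names the stock `ImagesPipeline` generates.

```python
import hashlib
import os
from urllib.parse import urlparse


def image_file_name(url, default_ext=".jpg"):
    """Return a file name derived from the whole URL rather than its last segment."""
    # Take the extension from the URL path only, so query strings are ignored.
    path = urlparse(url).path
    ext = os.path.splitext(path)[1].lower()
    # Fall back to a default when the extension is missing or implausibly long.
    if not ext or len(ext) > 5:
        ext = default_ext
    # Hash the full URL so different images never share a name.
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()
    return digest + ext


if __name__ == "__main__":
    print(image_file_name("http://example.com/a/1.jpg?size=large"))
    print(image_file_name("http://example.com/b/1.jpg"))
```

Inside the pipeline this would be called as `return image_file_name(request.url)` from `file_path`. The trade-off is that the stored names are no longer human-readable.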

settings.py

# Scrapy settings for baiduimg project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

import os

project_dir=os.path.abspath(os.path.dirname(__file__))

IMAGES_STORE=os.path.join(project_dir,'images')

BOT_NAME = 'baiduimg'

# Number of result pages to crawl

MAX_PAGE = 2

# MongoDB connection settings

MONGO_URL = 'localhost'

MONGO_DB = 'test_image'

SPIDER_MODULES = ['baiduimg.spiders']

NEWSPIDER_MODULE = 'baiduimg.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

DEFAULT_REQUEST_HEADERS = {
    # 'referer':'https://image.baidu.com/search/acjson?word=%25E7%258B%2597&pn=30&tn=resultjson_com&ipn=rj&gsm=0x1e&queryWor',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
}

# # Only show log messages at the given level or above

# LOG_LEVEL='ERROR'

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'baiduimg.middlewares.BaiduimgSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'baiduimg.middlewares.BaiduimgDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {
    'baiduimg.pipelines.BaiduimgPipeline': 300,
    'baiduimg.pipelines.BaiduimgmongoPipeline': 301,
}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

start.py

from scrapy import cmdline

# Run the crawl command from Python instead of the shell

cmdline.execute("scrapy crawl baidu".split())
