11 高斯混合模型:GMM

这一章开始,我们将进入到Guassian Mixture Model (GMM) 的学习。而为什么要学习GMM 呢?这是因为单峰分布已经不能准备的反映数据的分布了。正如下面的一个分布:

对于如上的数据分布来说,如果强行用单峰的 Guassian Distribution 来表示这个分布,显然是可以的。但是,很明显是不合适的。会造成较大的误差,不能较好的表示整个数据的分布特征。

1 模型介绍

1.1 从几何的角度看

从几何角度来看比较的简单,也就是多个高斯分布来取加权平均值。也就是一个混合高斯分布就是多个高斯分布叠加而成的。那么,概率密度函数,可以被我们写成: p ( x ) = ∑ k = 1 K α k N ( μ k , Σ k ) , ∑ k = 1 K α k = 1 p(x)=\sum_{k=1}^{K} \alpha_{k} \mathcal{N}\left(\mu_{k}, \Sigma_{k}\right), \quad \sum_{k=1}^{K} \alpha_{k}=1 p(x)=∑k=1KαkN(μk,Σk),∑k=1Kαk=1

1.2 从混合模型角度来看(生成模型)

如果当输入变量的维度高于一维的时候,我们就不能使用简单的加权来看了。因为,这时,我们已经无法简单的用加权平均来计算了,正如下图所示。其中,X 是Observable Variable,Z 是Latent Variable。这个 Z 是个什么意思呢?我们先举一个小例子。看到图2 中那个打了红圈圈的数据点。它既属于C1 的分布,并且也属于C2 的分布,我们可以写作:

{

X

∼

C

1

X

∼

C

2

\left\{\begin{array}{l} X \sim C_{1} \\ X \sim C_{2} \end{array}\right.

{X∼C1X∼C2这样写太麻烦了,我们可以直接写成

X

∼

Z

X \sim Z

X∼Z,这里的 Z 就是一个离散的随机变量,它包含了

C

1

,

C

2

,

⋯

,

C

N

C1,C2, \cdots,C_N

C1,C2,⋯,CN 的概率分布。Z 其实就是看对应的样本X 是属于哪一个高斯分布的概率。可以被我们写成:

{

X

∼

C

1

X

∼

C

2

\left\{\begin{array}{l} X \sim C_{1} \\ X \sim C_{2} \end{array}\right.

{X∼C1X∼C2这样写太麻烦了,我们可以直接写成

X

∼

Z

X \sim Z

X∼Z,这里的 Z 就是一个离散的随机变量,它包含了

C

1

,

C

2

,

⋯

,

C

N

C1,C2, \cdots,C_N

C1,C2,⋯,CN 的概率分布。Z 其实就是看对应的样本X 是属于哪一个高斯分布的概率。可以被我们写成:

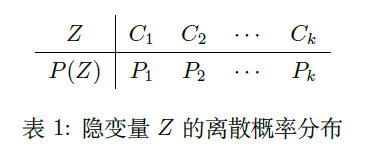

并且,

∑

k

P

k

=

1

\sum_kP_k=1

∑kPk=1。接下来,我们来说一说,如何来生成 N 个样本点

C

1

,

C

2

,

⋯

,

C

N

C1,C2, \cdots,C_N

C1,C2,⋯,CN。

我们假设有一个骰子,有 K 个面,每个面都是不均匀的,假设我们可以控制每一个面的质量,这个骰子的面出现的概率会符合

P

(

Z

)

P(Z)

P(Z)。有 K 个面,就有 K 个高斯分布。那么每次我们就投一下这个骰子,根据出现的面 K,选择在第 K 个高斯分布中进行采样,生成一个样本点

x

i

x_i

xi。

概率图可以被我们描述为如下形式:

P

(

x

)

=

∑

Z

P

(

X

,

Z

)

=

∑

k

=

1

K

P

(

X

,

z

=

C

k

)

=

∑

k

=

1

K

P

(

z

=

C

k

)

⋅

P

(

X

∣

z

=

C

k

)

=

∑

k

=

1

K

P

k

⋅

N

(

X

∣

μ

k

,

Σ

k

)

\begin{aligned} P(x) &=\sum_{Z} P(X, Z) \\ &=\sum_{k=1}^{K} P\left(X, z=C_{k}\right) \\ &=\sum_{k=1}^{K} P\left(z=C_{k}\right) \cdot P\left(X | z=C_{k}\right) \\ &=\sum_{k=1}^{K} P_{k} \cdot \mathcal{N}\left(X | \mu_{k}, \Sigma_{k}\right) \end{aligned}

P(x)=Z∑P(X,Z)=k=1∑KP(X,z=Ck)=k=1∑KP(z=Ck)⋅P(X∣z=Ck)=k=1∑KPk⋅N(X∣μk,Σk)我们根据一个离散的随机变量 Z 来选择是选取那个高斯分布,利用这个高斯分布

N

(

μ

,

Σ

)

\mathcal{N}\left(\mu_{}, \Sigma_{}\right)

N(μ,Σ) 来采样得到我们想要的样本点。而且,离散随机变量 Z 符合一个离散分布

p

=

(

p

1

,

p

2

,

⋯

p

k

)

p = (p_1, p_2, \cdots p_k)

p=(p1,p2,⋯pk)。

2 极大似然估计:Maximum Likelihood Estimation

本节我们想使用极大似然估计来求解Gaussian Mixture Model (GMM) 的最优参数结果。首先,

我们明确一下参数的意义:

X

:

O

b

s

e

r

v

e

d

d

a

t

a

,

X

=

(

x

1

,

x

2

,

⋯

,

x

N

)

X: Observed data, X=\left(x_{1}, x_{2}, \cdots, x_{N}\right)

X:Observeddata,X=(x1,x2,⋯,xN)

C

o

m

p

l

e

t

e

d

a

t

a

,

(

X

,

Z

)

=

{

(

x

1

,

z

1

)

,

(

x

2

,

z

2

)

,

⋯

,

(

x

N

,

z

N

)

}

Complete data , (X, Z)=\left\{\left(x_{1}, z_{1}\right),\left(x_{2}, z_{2}\right), \cdots,\left(x_{N}, z_{N}\right)\right\}

Completedata,(X,Z)={(x1,z1),(x2,z2),⋯,(xN,zN)}

θ

=

{

P

1

,

⋯

,

P

k

,

μ

1

,

⋯

,

μ

k

,

Σ

1

,

⋯

,

Σ

k

}

\theta=\left\{P_{1}, \cdots, P_{k}, \mu_{1}, \cdots, \mu_{k}, \Sigma_{1}, \cdots, \Sigma_{k}\right\}

θ={P1,⋯,Pk,μ1,⋯,μk,Σ1,⋯,Σk}

2.1 Maximum Likelihood Estimation 求解参数

P

(

x

)

=

∑

Z

P

(

X

,

Z

)

=

∑

k

=

1

K

P

(

X

,

z

=

C

k

)

=

∑

k

=

1

K

P

(

z

=

C

k

)

⋅

P

(

X

∣

z

=

C

k

)

=

∑

k

=

1

K

P

k

⋅

N

(

X

∣

μ

k

,

Σ

k

)

\begin{aligned} P(x) &=\sum_{Z} P(X, Z) \\ &=\sum_{k=1}^{K} P\left(X, z=C_{k}\right) \\ &=\sum_{k=1}^{K} P\left(z=C_{k}\right) \cdot P\left(X | z=C_{k}\right) \\ &=\sum_{k=1}^{K} P_{k} \cdot \mathcal{N}\left(X | \mu_{k}, \Sigma_{k}\right) \end{aligned}

P(x)=Z∑P(X,Z)=k=1∑KP(X,z=Ck)=k=1∑KP(z=Ck)⋅P(X∣z=Ck)=k=1∑KPk⋅N(X∣μk,Σk)其中,

P

k

P_k

Pk 也就是数据点去第

k

k

k 个高斯分布的概率。下面我们开始使用MLE 来求解

θ

\theta

θ:

θ

^

M

L

E

=

arg

max

θ

log

P

(

X

)

=

arg

max

θ

log

∏

i

=

1

N

P

(

x

i

)

=

arg

max

θ

∑

i

=

1

N

log

P

(

x

i

)

=

arg

max

θ

∑

i

=

1

N

log

∑

k

=

1

K

P

k

⋅

N

(

x

i

∣

μ

k

,

Σ

k

)

\begin{aligned} \hat{\theta}_{M L E} &=\arg \max _{\theta} \log P(X) \\ &=\arg \max _{\theta} \log \prod_{i=1}^{N} P\left(x_{i}\right) \\ &=\arg \max _{\theta} \sum_{i=1}^{N} \log P\left(x_{i}\right) \\ &=\arg \max _{\theta} \sum_{i=1}^{N} \log \sum_{k=1}^{K} P_{k} \cdot \mathcal{N}\left(x_{i} | \mu_{k}, \Sigma_{k}\right) \end{aligned}

θ^MLE=argθmaxlogP(X)=argθmaxlogi=1∏NP(xi)=argθmaxi=1∑NlogP(xi)=argθmaxi=1∑Nlogk=1∑KPk⋅N(xi∣μk,Σk)我们想要求的

θ

\theta

θ 包括

θ

=

{

P

1

,

⋯

,

P

k

,

μ

1

,

⋯

,

μ

k

,

Σ

1

,

⋯

,

Σ

k

}

\theta=\left\{P_{1}, \cdots, P_{k}, \mu_{1}, \cdots, \mu_{k}, \Sigma_{1}, \cdots, \Sigma_{k}\right\}

θ={P1,⋯,Pk,μ1,⋯,μk,Σ1,⋯,Σk}。

2.2 MLE 的问题

按照之前的思路,我们就要分别对每个参数进行求偏导来计算最终的结果。但是问题马上就来了,大家有没有看到 log 函数里面是一个求和的形式,而不是一个求积的形式。这意味着计算非常的困难。甚至可以说,我们根本就求不出解析解。如果是单一的Gaussian Distribution:

log

P

(

x

i

)

=

log

1

2

π

σ

exp

{

−

(

x

i

−

μ

)

2

2

σ

}

\log P\left(x_{i}\right)=\log \frac{1}{\sqrt{2 \pi} \sigma} \exp \left\{-\frac{\left(x_{i}-\mu\right)^{2}}{2 \sigma}\right\}

logP(xi)=log2πσ1exp{−2σ(xi−μ)2}根据 log 函数优秀的性质,这个问题是可以解的。但是,很不幸后面是一个求和的形式。所以,直接使用MLE 求解GMM,无法得到解析解。故⽽引出下⾯两节的内容: ⽤EM 对GMM 求解:

3 EM进行求解

上一小节中,我们看到了使用极大似然估计的方法,我们根本就求不出最优参数的解析解。所以,我们使用迭代的方法来求近似解。

EM 算法的表达式,可以被我们写为:

θ

(

t

+

1

)

=

arg

max

θ

E

P

(

Z

∣

X

,

θ

(

t

)

)

[

log

P

(

X

,

Z

∣

θ

)

]

⏟

Q

(

θ

,

θ

(

t

)

)

\theta^{(t+1)}=\arg \max _{\theta} \underbrace{\mathbb{E}_{P\left(Z | X, \theta^{(t)}\right)}[\log P(X, Z | \theta)]}_{Q\left(\theta, \theta^{(t)}\right)}

θ(t+1)=argθmaxQ(θ,θ(t))

EP(Z∣X,θ(t))[logP(X,Z∣θ)]经过一系列的迭代,我们可以得到

θ

0

,

θ

1

⋯

,

θ

t

\theta^0,\theta^1\cdots,\theta^{t}

θ0,θ1⋯,θt 迭代到一定次数以后我们得到的

θ

N

\theta^N

θN 就是我们想要得到的结果。EM 算法大体上可以分成两个部分,E-step 和M-step,

3.1 E-Step

Q

(

θ

,

θ

(

t

)

)

=

∫

Z

log

P

(

X

,

Z

∣

θ

)

⋅

P

(

Z

∣

X

,

θ

(

t

)

)

d

Z

=

∑

Z

log

∏

i

=

1

N

P

(

x

i

,

z

i

∣

θ

)

⋅

∏

i

=

1

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

=

∑

z

1

,

⋯

,

z

N

∑

i

=

1

N

log

P

(

x

i

,

z

i

∣

θ

)

⋅

∏

i

=

1

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

=

∑

z

1

,

⋯

,

z

N

[

log

P

(

x

1

,

z

1

∣

θ

)

+

log

P

(

x

2

,

z

2

∣

θ

)

+

⋯

log

P

(

x

N

,

z

N

∣

θ

)

]

⋅

∏

i

=

1

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

\begin{aligned} Q\left(\theta, \theta^{(t)}\right) &=\int_{Z} \log P(X, Z | \theta) \cdot P\left(Z | X, \theta^{(t)}\right) d Z \\ &=\sum_{Z} \log \prod_{i=1}^{N} P\left(x_{i}, z_{i} | \theta\right) \cdot \prod_{i=1}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \\ &=\sum_{z_{1}, \cdots, z_{N}} \sum_{i=1}^{N} \log P\left(x_{i}, z_{i} | \theta\right) \cdot \prod_{i=1}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \\ &=\sum_{z_{1}, \cdots, z_{N}}\left[\log P\left(x_{1}, z_{1} | \theta\right)+\log P\left(x_{2}, z_{2} | \theta\right)+\cdots \log P\left(x_{N}, z_{N} | \theta\right)\right] \cdot \prod_{i=1}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \end{aligned}

Q(θ,θ(t))=∫ZlogP(X,Z∣θ)⋅P(Z∣X,θ(t))dZ=Z∑logi=1∏NP(xi,zi∣θ)⋅i=1∏NP(zi∣xi,θ(t))dZ=z1,⋯,zN∑i=1∑NlogP(xi,zi∣θ)⋅i=1∏NP(zi∣xi,θ(t))dZ=z1,⋯,zN∑[logP(x1,z1∣θ)+logP(x2,z2∣θ)+⋯logP(xN,zN∣θ)]⋅i=1∏NP(zi∣xi,θ(t))dZ

为了简化推导,我们首先只取第一项来化简一下,

∑

z

1

,

⋯

,

z

N

log

P

(

x

1

,

z

1

∣

θ

)

⋅

∏

i

=

1

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

=

∑

z

1

,

⋯

,

z

N

log

P

(

x

1

,

z

1

∣

θ

)

⋅

P

(

z

1

∣

x

1

,

θ

(

t

)

)

⋅

∏

i

=

2

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

=

∑

z

1

log

P

(

x

1

,

z

1

∣

θ

)

⋅

P

(

z

1

∣

x

1

,

θ

(

t

)

)

⋅

∑

z

2

,

⋯

,

z

N

∏

i

=

2

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

\begin{array}{l} \sum_{z_{1}, \cdots, z_{N}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot \prod_{i=1}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \\ \\ =\sum_{z_{1}, \cdots, z_{N}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot P\left(z_{1} | x_{1}, \theta^{(t)}\right) \cdot \prod_{i=2}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \\ \\ =\sum_{z_{1}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot P\left(z_{1} | x_{1}, \theta^{(t)}\right) \cdot \sum_{z_{2}, \cdots, z_{N}} \prod_{i=2}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z \end{array}

∑z1,⋯,zNlogP(x1,z1∣θ)⋅∏i=1NP(zi∣xi,θ(t))dZ=∑z1,⋯,zNlogP(x1,z1∣θ)⋅P(z1∣x1,θ(t))⋅∏i=2NP(zi∣xi,θ(t))dZ=∑z1logP(x1,z1∣θ)⋅P(z1∣x1,θ(t))⋅∑z2,⋯,zN∏i=2NP(zi∣xi,θ(t))dZ而:

∑

z

2

,

⋯

,

z

N

∏

i

=

2

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

=

∑

z

2

,

⋯

,

z

N

P

(

z

2

∣

x

2

,

θ

(

t

)

)

⋅

P

(

z

3

∣

x

3

,

θ

(

t

)

)

⋯

P

(

z

N

∣

x

N

,

θ

(

t

)

)

=

∑

z

2

P

(

z

2

∣

x

2

,

θ

(

t

)

)

⋅

∑

z

3

P

(

z

3

∣

x

3

,

θ

(

t

)

)

⋯

∑

z

N

P

(

z

N

∣

x

N

,

θ

(

t

)

)

=

1

⋅

1

⋯

1

=

1

\begin{aligned} \sum_{z_{2}, \cdots, z_{N}} \prod_{i=2}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) &=\sum_{z_{2}, \cdots, z_{N}} P\left(z_{2} | x_{2}, \theta^{(t)}\right) \cdot P\left(z_{3} | x_{3}, \theta^{(t)}\right) \cdots P\left(z_{N} | x_{N}, \theta^{(t)}\right) \\ &=\sum_{z_{2}} P\left(z_{2} | x_{2}, \theta^{(t)}\right) \cdot \sum_{z_{3}} P\left(z_{3} | x_{3}, \theta^{(t)}\right) \cdots \sum_{z_{N}} P\left(z_{N} | x_{N}, \theta^{(t)}\right) \\ &=1 \cdot 1 \cdots 1 \\ &=1 \end{aligned}

z2,⋯,zN∑i=2∏NP(zi∣xi,θ(t))=z2,⋯,zN∑P(z2∣x2,θ(t))⋅P(z3∣x3,θ(t))⋯P(zN∣xN,θ(t))=z2∑P(z2∣x2,θ(t))⋅z3∑P(z3∣x3,θ(t))⋯zN∑P(zN∣xN,θ(t))=1⋅1⋯1=1

所以,

∑

z

1

,

⋯

,

z

N

log

P

(

x

1

,

z

1

∣

θ

)

⋅

∏

i

=

1

N

P

(

z

i

∣

x

i

,

θ

(

t

)

)

d

Z

=

∑

z

1

log

P

(

x

1

,

z

1

∣

θ

)

⋅

P

(

z

1

∣

x

1

,

θ

(

t

)

)

\sum_{z_{1}, \cdots, z_{N}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot \prod_{i=1}^{N} P\left(z_{i} | x_{i}, \theta^{(t)}\right) d Z=\sum_{z_{1}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot P\left(z_{1} | x_{1}, \theta^{(t)}\right)

z1,⋯,zN∑logP(x1,z1∣θ)⋅i=1∏NP(zi∣xi,θ(t))dZ=z1∑logP(x1,z1∣θ)⋅P(z1∣x1,θ(t))

将上面得到的结果,代入到初始的推导式 中,我们就可以得到:

Q

(

θ

,

θ

(

t

)

)

=

∑

z

1

log

P

(

x

1

,

z

1

∣

θ

)

⋅

P

(

z

1

∣

x

1

,

θ

(

t

)

)

+

⋯

+

∑

z

N

log

P

(

x

N

,

z

N

∣

θ

)

⋅

P

(

z

N

∣

x

N

,

θ

(

t

)

)

=

∑

i

=

1

N

∑

Z

i

log

P

(

x

i

,

z

i

∣

θ

)

⋅

P

(

z

i

∣

x

i

,

θ

(

t

)

)

\begin{aligned} Q\left(\theta, \theta^{(t)}\right) &=\sum_{z_{1}} \log P\left(x_{1}, z_{1} | \theta\right) \cdot P\left(z_{1} | x_{1}, \theta^{(t)}\right)+\cdots+\sum_{z_{N}} \log P\left(x_{N}, z_{N} | \theta\right) \cdot P\left(z_{N} | x_{N}, \theta^{(t)}\right) \\ &=\sum_{i=1}^{N} \sum_{Z_{i}} \log P\left(x_{i}, z_{i} | \theta\right) \cdot P\left(z_{i} | x_{i}, \theta^{(t)}\right) \end{aligned}

Q(θ,θ(t))=z1∑logP(x1,z1∣θ)⋅P(z1∣x1,θ(t))+⋯+zN∑logP(xN,zN∣θ)⋅P(zN∣xN,θ(t))=i=1∑NZi∑logP(xi,zi∣θ)⋅P(zi∣xi,θ(t))

那么,下一步我们就是要找到,

log

P

(

x

i

,

z

i

∣

θ

)

和

P

(

z

i

∣

x

i

,

θ

(

t

)

)

\log P\left(x_{i}, z_{i} | \theta\right) 和 P\left(z_{i} | x_{i}, \theta^{(t)}\right)

logP(xi,zi∣θ)和P(zi∣xi,θ(t)) 的表达方式了。其中:

P

(

X

,

Z

)

=

P

(

Z

)

P

(

X

∣

Z

)

=

P

Z

⋅

N

(

X

∣

μ

Z

,

Σ

Z

)

P

(

Z

∣

X

)

=

P

(

X

,

Z

)

P

(

X

)

=

P

Z

⋅

N

(

X

∣

μ

Z

,

Σ

Z

)

∑

i

=

1

K

P

Z

i

⋅

N

(

X

∣

μ

Z

i

,

Σ

Z

i

)

\begin{array}{c} P(X, Z)=P(Z) P(X | Z)=P_{Z} \cdot \mathcal{N}\left(X | \mu_{Z}, \Sigma_{Z}\right) \\ \\ P(Z | X)=\frac{P(X, Z)}{P(X)}=\frac{P_{Z} \cdot \mathcal{N}\left(X | \mu_{Z}, \Sigma_{Z}\right)}{\sum_{i=1}^{K} P_{Z i} \cdot \mathcal{N}\left(X | \mu_{Z i}, \Sigma_{Z i}\right)} \end{array}

P(X,Z)=P(Z)P(X∣Z)=PZ⋅N(X∣μZ,ΣZ)P(Z∣X)=P(X)P(X,Z)=∑i=1KPZi⋅N(X∣μZi,ΣZi)PZ⋅N(X∣μZ,ΣZ)所以,我们将上式代入,就可以得到:

Q

(

θ

,

θ

(

t

)

)

=

∑

i

=

1

N

∑

Z

i

log

P

Z

i

⋅

N

(

X

∣

μ

Z

i

,

Σ

Z

i

)

⋅

P

Z

i

θ

(

t

)

⋅

N

(

x

i

∣

μ

Z

i

θ

(

t

)

,

Σ

Z

i

θ

(

t

)

)

∑

k

=

1

K

P

k

θ

(

t

)

⋅

N

(

x

i

∣

μ

k

θ

(

t

)

,

Σ

k

θ

(

t

)

)

Q\left(\theta, \theta^{(t)}\right)=\sum_{i=1}^{N} \sum_{Z_{i}} \log P_{Z_{i}} \cdot \mathcal{N}\left(X | \mu_{Z_{i}}, \Sigma_{Z_{i}}\right) \cdot \frac{P_{Z_{i}}^{\theta(t)} \cdot \mathcal{N}\left(x_{i} | \mu_{Z_{i}}^{\theta(t)}, \Sigma_{Z_{i}}^{\theta(t)}\right)}{\sum_{k=1}^{K} P_{k}^{\theta(t)} \cdot \mathcal{N}\left(x_{i} | \mu_{k}^{\theta(t)}, \Sigma_{k}^{\theta(t)}\right)}

Q(θ,θ(t))=i=1∑NZi∑logPZi⋅N(X∣μZi,ΣZi)⋅∑k=1KPkθ(t)⋅N(xi∣μkθ(t),Σkθ(t))PZiθ(t)⋅N(xi∣μZiθ(t),ΣZiθ(t))

3.2 M-Step

根据我们在E-Step 中的推导,我们可以得到:

Q

(

θ

,

θ

(

t

)

)

=

∑

i

=

1

N

∑

Z

i

log

P

Z

i

⋅

N

(

X

∣

μ

Z

i

,

Σ

Z

i

)

⋅

P

Z

i

θ

(

t

)

⋅

N

(

x

i

∣

μ

Z

i

θ

(

t

)

,

Σ

Z

i

θ

(

t

)

)

∑

k

=

1

K

P

k

θ

(

t

)

⋅

N

(

x

i

∣

μ

k

θ

(

t

)

,

Σ

k

θ

(

t

)

)

⏟

=

∑

Z

i

∑

i

=

1

N

log

(

P

Z

i

⋅

N

(

X

∣

μ

Z

i

,

Σ

Z

i

)

)

⋅

P

(

Z

i

∣

X

i

,

θ

(

t

)

)

=

∑

k

=

1

K

∑

i

=

1

N

log

(

P

k

⋅

N

(

X

∣

μ

k

,

Σ

k

)

)

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

(

Z

i

=

C

k

)

=

∑

k

=

1

K

∑

i

=

1

N

(

log

P

k

+

log

N

(

X

i

∣

μ

k

,

Σ

k

)

)

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

\begin{aligned} Q\left(\theta, \theta^{(t)}\right) &=\sum_{i=1}^{N} \sum_{Z_{i}} \log P_{Z_{i}} \cdot \mathcal{N}\left(X | \mu_{Z_{i}}, \Sigma_{Z_{i}}\right) \cdot \frac{P_{Z_{i}}^{\theta(t)} \cdot \mathcal{N}\left(x_{i} | \mu_{Z_{i}}^{\theta(t)}, \Sigma_{Z_{i}}^{\theta(t)}\right)}{\underbrace{\sum_{k=1}^{K} P_{k}^{\theta(t)} \cdot \mathcal{N}\left(x_{i} | \mu_{k}^{\theta(t)}, \Sigma_{k}^{\theta(t)}\right)}} \\ &=\sum_{Z_{i}} \sum_{i=1}^{N} \log \left(P_{Z_{i}} \cdot \mathcal{N}\left(X | \mu_{Z_{i}}, \Sigma_{Z_{i}}\right)\right) \cdot P\left(Z_{i} | X_{i}, \theta^{(t)}\right) \\ &=\sum_{k=1}^{K} \sum_{i=1}^{N} \log \left(P_{k} \cdot \mathcal{N}\left(X | \mu_{k}, \Sigma_{k}\right)\right) \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right) \quad\left(Z_{i}=C_{k}\right) \\ &=\sum_{k=1}^{K} \sum_{i=1}^{N}\left(\log P_{k}+\log \mathcal{N}\left(X_{i} | \mu_{k}, \Sigma_{k}\right)\right) \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right) \end{aligned}

Q(θ,θ(t))=i=1∑NZi∑logPZi⋅N(X∣μZi,ΣZi)⋅

k=1∑KPkθ(t)⋅N(xi∣μkθ(t),Σkθ(t))PZiθ(t)⋅N(xi∣μZiθ(t),ΣZiθ(t))=Zi∑i=1∑Nlog(PZi⋅N(X∣μZi,ΣZi))⋅P(Zi∣Xi,θ(t))=k=1∑Ki=1∑Nlog(Pk⋅N(X∣μk,Σk))⋅P(Zi=Ck∣Xi,θ(t))(Zi=Ck)=k=1∑Ki=1∑N(logPk+logN(Xi∣μk,Σk))⋅P(Zi=Ck∣Xi,θ(t))我们的目的也就是进行不断迭代,从而得出最终的解,用公式表达也就是:

θ

(

t

+

1

)

=

arg

max

θ

Q

(

θ

,

θ

(

t

)

)

\theta^{(t+1)}=\arg \max _{\theta} Q\left(\theta, \theta^{(t)}\right)

θ(t+1)=argθmaxQ(θ,θ(t))我们需要求解的参数也就是:

θ

(

t

+

1

)

=

{

P

1

(

t

+

1

)

,

⋯

,

P

k

(

t

+

1

)

,

μ

1

(

t

+

1

)

,

⋯

,

μ

k

(

t

+

1

)

,

Σ

1

(

t

+

1

)

,

⋯

,

Σ

k

(

t

+

1

)

}

\theta^{(t+1)}=\left\{P_{1}^{(t+1)}, \cdots, P_{k}^{(t+1)}, \mu_{1}^{(t+1)}, \cdots, \mu_{k}^{(t+1)}, \Sigma_{1}^{(t+1)}, \cdots, \Sigma_{k}^{(t+1)}\right\}

θ(t+1)={P1(t+1),⋯,Pk(t+1),μ1(t+1),⋯,μk(t+1),Σ1(t+1),⋯,Σk(t+1)}

首先,我们来展示一下怎么求解

P

k

(

t

+

1

)

P_{k}^{(t+1)}

Pk(t+1)。

由于在前面的推导结果

∑

k

=

1

K

∑

i

=

1

N

(

log

P

k

+

log

N

(

X

i

∣

μ

k

,

Σ

k

)

)

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

\sum_{k=1}^{K} \sum_{i=1}^{N}\left(\log P_{k}+\log \mathcal{N}\left(X_{i} | \mu_{k}, \Sigma_{k}\right)\right) \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)

∑k=1K∑i=1N(logPk+logN(Xi∣μk,Σk))⋅P(Zi=Ck∣Xi,θ(t))中的

log

N

(

X

i

∣

μ

k

,

Σ

k

)

\log \mathcal{N}\left(X_{i} | \mu_{k}, \Sigma_{k}\right)

logN(Xi∣μk,Σk)

部分和

P

k

P_k

Pk 并没有什么关系。所以,可以被我们直接忽略掉。所以,求解问题,可以被我们描述为M-step:

{

arg

max

P

k

∑

k

=

1

K

∑

i

=

1

N

log

P

k

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

s.t.

∑

k

=

1

K

P

k

=

1

\left\{\begin{array}{l} \arg \max _{P_{k}} \sum_{k=1}^{K} \sum_{i=1}^{N} \log P_{k} \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right) \\ \\ \text { s.t. } \quad \sum_{k=1}^{K} P_{k}=1 \end{array}\right.

⎩⎨⎧argmaxPk∑k=1K∑i=1NlogPk⋅P(Zi=Ck∣Xi,θ(t)) s.t. ∑k=1KPk=1

使用拉格朗日算子法,我们可以写成:

L

(

P

,

λ

)

=

∑

k

=

1

K

∑

i

=

1

N

log

P

k

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

+

λ

(

∑

k

=

1

K

P

k

−

1

)

∂

L

(

P

,

λ

)

∂

P

k

=

∑

i

=

1

N

1

P

k

⋅

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

+

λ

=

0

⇒

∑

i

=

1

N

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

+

P

k

λ

=

0

⇒

∑

i

=

1

N

∑

k

=

1

K

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

⏟

1

+

∑

k

=

1

K

P

k

λ

⏟

1

=

0

⇒

N

+

λ

=

0

\begin{array}{c} \mathcal{L}(P, \lambda)=\sum_{k=1}^{K} \sum_{i=1}^{N} \log P_{k} \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)+\lambda\left(\sum_{k=1}^{K} P_{k}-1\right) \\ \qquad \begin{aligned} \frac{\partial \mathcal{L}(P, \lambda)}{\partial P_{k}} &=\sum_{i=1}^{N} \frac{1}{P_{k}} \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)+\lambda=0 \\ & \Rightarrow \sum_{i=1}^{N} P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)+P_{k} \lambda=0 \end{aligned} \\ \Rightarrow \sum_{i=1}^{N} \underbrace{\sum_{k=1}^{K} P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)}_{1}+\underbrace{\sum_{k=1}^{K} P_{k} \lambda}_{1}=0 \\ \Rightarrow N+\lambda=0 \end{array}

L(P,λ)=∑k=1K∑i=1NlogPk⋅P(Zi=Ck∣Xi,θ(t))+λ(∑k=1KPk−1)∂Pk∂L(P,λ)=i=1∑NPk1⋅P(Zi=Ck∣Xi,θ(t))+λ=0⇒i=1∑NP(Zi=Ck∣Xi,θ(t))+Pkλ=0⇒∑i=1N1

k=1∑KP(Zi=Ck∣Xi,θ(t))+1

k=1∑KPkλ=0⇒N+λ=0所以,我们可以轻易的得到

λ

=

−

N

\lambda =- N

λ=−N,所以有

P

K

(

t

+

1

)

=

1

N

∑

i

=

1

N

P

(

Z

i

=

C

k

∣

X

i

,

θ

(

t

)

)

P_{K}^{(t+1)}=\frac{1}{N} \sum_{i=1}^{N} P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right)

PK(t+1)=N1i=1∑NP(Zi=Ck∣Xi,θ(t))那么,我们所有想要求的参数也就是

P

(

t

+

1

)

=

(

P

1

(

t

+

1

)

,

P

2

(

t

+

1

)

,

⋯

,

P

k

(

t

+

1

)

)

P^{(t+1)}=\left(P_{1}^{(t+1)}, P_{2}^{(t+1)}, \cdots, P_{k}^{(t+1)}\right)

P(t+1)=(P1(t+1),P2(t+1),⋯,Pk(t+1))求解

P

k

(

t

+

1

)

P_{k}^{(t+1)}

Pk(t+1) 是一个有约束的求最大值问题,由于带约束所以我们要使用拉格朗日乘子法。而且这里使用到了一个track,也就是将从1 到 k,所有的数据集做一个整合,非常的精彩,这样就直接消掉了

P

k

P_k

Pk 无法计算的问题。而至于

θ

\theta

θ 的其他部分,也就是关于

{

μ

1

(

t

+

1

)

,

⋯

,

μ

k

(

t

+

1

)

,

Σ

1

(

t

+

1

)

,

⋯

,

Σ

k

(

t

+

1

)

}

\left\{\mu_{1}^{(t+1)}, \cdots, \mu_{k}^{(t+1)}, \Sigma_{1}^{(t+1)}, \cdots, \Sigma_{k}^{(t+1)}\right\}

{μ1(t+1),⋯,μk(t+1),Σ1(t+1),⋯,Σk(t+1)} 的计算,使用的方法也是一样的.

为什么极大似然估计搞不定的问题,放在EM 算法里面我们就可以搞定了呢?我们来对比一下两个方法中,需要计算极值的公式。

∑ k = 1 K ∑ i = 1 N ( log P k + log N ( X i ∣ μ k , Σ k ) ) ⋅ P ( Z i = C k ∣ X i , θ ( t ) ) \begin{array}{c} \sum_{k=1}^{K} \sum_{i=1}^{N}\left(\log P_{k}+\log \mathcal{N}\left(X_{i} | \mu_{k}, \Sigma_{k}\right)\right) \cdot P\left(Z_{i}=C_{k} | X_{i}, \theta^{(t)}\right) \end{array} ∑k=1K∑i=1N(logPk+logN(Xi∣μk,Σk))⋅P(Zi=Ck∣Xi,θ(t)) arg max θ ∑ i = 1 N log ∑ k = 1 K P k ⋅ N ( x i ∣ μ k , Σ k ) \begin{array}{c} \arg \max _{\theta} \sum_{i=1}^{N} \log \sum_{k=1}^{K} P_{k} \cdot \mathcal{N}\left(x_{i} | \mu_{k}, \Sigma_{k}\right) \end{array} argmaxθ∑i=1Nlog∑k=1KPk⋅N(xi∣μk,Σk)极大似然估计一开始计算的就是 P ( X ) P(X) P(X),而EM 算法中并没有出现有关 P ( X ) P(X) P(X) 的计算,而是全程计算都是 P ( X , Z ) P(X,Z) P(X,Z) 。而 P ( X ) P(X) P(X) 实际上就是 P ( X , Z ) P(X,Z) P(X,Z) 的求和形式。所以,每次单独的考虑 P ( X , Z ) P(X,Z) P(X,Z) 就避免了在log 函数中出现求和操作。

版权声明:本文为博主原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。

本文链接:https://blog.csdn.net/cengjing12/article/details/106535515

————————————————

版权声明:本文为CSDN博主「AI路上的小白」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/cengjing12/article/details/106535515

1490

1490

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?