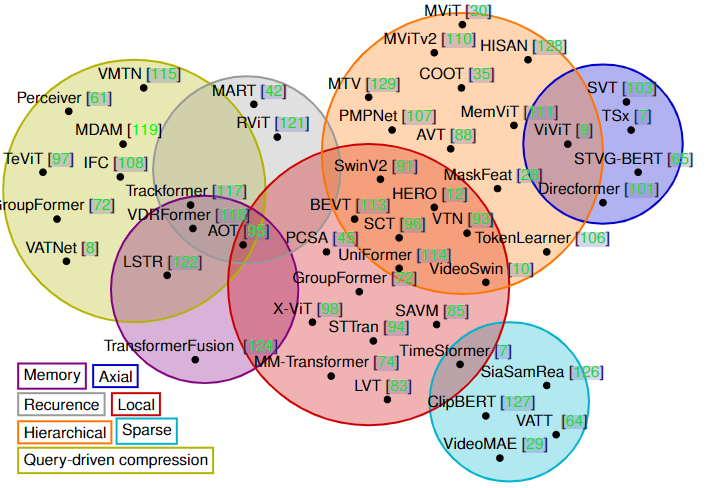

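Awesome Video Transformer focuses solely on video data. It traces how Transformer architectures have evolved in recent years and covers their implementation details.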

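Characteristics of the Transformer architecture: Transformers lack inductive biases and scale quadratically with input length. The computational complexity of a Transformer is quadratic in the input sequence length $N$, i.e. $O(N^2)$. Video makes this worse because its temporal dimension adds many more tokens to the sequence. In addition, the lack of inductive biases means that a Transformer needs large amounts of data or architectural modifications to handle the highly redundant spatiotemporal structure of video.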
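The following back-of-the-envelope sketch makes the quadratic cost concrete. It assumes ViT-style non-overlapping 16x16 patches on 224x224 frames and an 8-frame clip with one token per patch per frame. These settings are illustrative assumptions and are not taken from any particular model listed here.

```python
# Illustrative settings (assumptions): 224x224 frames, 16x16 patches, 8-frame clip.

def num_tokens(frames: int, height: int, width: int, patch: int) -> int:
    """Number of patch tokens when each frame is tiled into patch x patch squares."""
    return frames * (height // patch) * (width // patch)


def attention_entries(n_tokens: int) -> int:
    """Size of the full N x N self-attention score matrix (one head, one layer)."""
    return n_tokens * n_tokens


image_tokens = num_tokens(frames=1, height=224, width=224, patch=16)
video_tokens = num_tokens(frames=8, height=224, width=224, patch=16)

print(image_tokens, attention_entries(image_tokens))  # 196 38416
print(video_tokens, attention_entries(video_tokens))  # 1568 2458624
# Ratio of attention entries, video vs. single image:
print(attention_entries(video_tokens) // attention_entries(image_tokens))  # 64
```

With 8 frames there are 8x as many tokens but 64x as many pairwise attention scores, per head and per layer.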

TimeSformer

TimeSformer: “Is Space-Time Attention All You Need for Video Understanding?”, ICML, 2021 (Facebook). [Paper][PyTorch (lucidrains)]

MViT

MViT: “Multiscale Vision Transformers”, ICCV, 2021 (Facebook). [Paper][PyTorch]

VidTr

VidTr: “VidTr: Video Transformer Without Convolutions”, ICCV, 2021 (Amazon). [Paper][PyTorch]

ViViT

ViViT: “ViViT: A Video Vision Transformer”, ICCV, 2021 (Google). [Paper][PyTorch (rishikksh20)]

VTN

VTN: “Video Transformer Network”, ICCVW, 2021 (Theator). [Paper][PyTorch]

TokShift: “Token Shift Transformer for Video Classification”, ACMMM, 2021 (CUHK). [Paper][PyTorch]

Motionformer: “Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers”, NeurIPS, 2021 (Facebook). [Paper][PyTorch][Website]

X-ViT: “Space-time Mixing Attention for Video Transformer”, NeurIPS, 2021 (Samsung). [Paper][PyTorch]

SCT: “Shifted Chunk Transformer for Spatio-Temporal Representational Learning”, NeurIPS, 2021 (Kuaishou). [Paper]

RSANet: “Relational Self-Attention: What’s Missing in Attention for Video Understanding”, NeurIPS, 2021 (POSTECH). [Paper][PyTorch][Website]

STAM: “An Image is Worth 16x16 Words, What is a Video Worth?”, arXiv, 2021 (Alibaba). [Paper][Code]

GAT: “Enhancing Transformer for Video Understanding Using Gated Multi-Level Attention and Temporal Adversarial Training”, arXiv, 2021 (Samsung). [Paper]

TokenLearner: “TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?”, arXiv, 2021 (Google). [Paper]

VLF: “VideoLightFormer: Lightweight Action Recognition using Transformers”, arXiv, 2021 (The University of Sheffield). [Paper]

UniFormer: “UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning”, ICLR, 2022 (CAS + SenseTime). [Paper][PyTorch]

Video-Swin: “Video Swin Transformer”, CVPR, 2022 (Microsoft). [Paper][PyTorch]

DirecFormer: “DirecFormer: A Directed Attention in Transformer Approach to Robust Action Recognition”, CVPR, 2022 (University of Arkansas). [Paper][Code (in construction)]

DVT: “Deformable Video Transformer”, CVPR, 2022 (Meta). [Paper]

MeMViT: “MeMViT: Memory-Augmented Multiscale Vision Transformer for Efficient Long-Term Video Recognition”, CVPR, 2022 (Meta). [Paper]

MLP-3D: “MLP-3D: A MLP-like 3D Architecture with Grouped Time Mixing”, CVPR, 2022 (JD). [Paper][PyTorch (in construction)]

RViT: “Recurring the Transformer for Video Action Recognition”, CVPR, 2022 (TCL Corporate Research, HK). [Paper]

SIFA: “Stand-Alone Inter-Frame Attention in Video Models”, CVPR, 2022 (JD). [Paper][PyTorch]

MViTv2: “MViTv2: Improved Multiscale Vision Transformers for Classification and Detection”, CVPR, 2022 (Meta). [Paper][PyTorch]

MTV: “Multiview Transformers for Video Recognition”, CVPR, 2022 (Google). [Paper][Tensorflow]

ORViT: “Object-Region Video Transformers”, CVPR, 2022 (Tel Aviv). [Paper][Website]

TIME: “Time Is MattEr: Temporal Self-supervision for Video Transformers”, ICML, 2022 (KAIST). [Paper][PyTorch]

TPS: “Spatiotemporal Self-attention Modeling with Temporal Patch Shift for Action Recognition”, ECCV, 2022 (Alibaba). [Paper][PyTorch]

DualFormer: “DualFormer: Local-Global Stratified Transformer for Efficient Video Recognition”, ECCV, 2022 (Sea AI Lab). [Paper][PyTorch]

STTS: “Efficient Video Transformers with Spatial-Temporal Token Selection”, ECCV, 2022 (Fudan University). [Paper][PyTorch]

Turbo: “Turbo Training with Token Dropout”, BMVC, 2022 (Oxford). [Paper]

MultiTrain: “Multi-dataset Training of Transformers for Robust Action Recognition”, NeurIPS, 2022 (Tencent). [Paper][Code (in construction)]

SViT: “Bringing Image Scene Structure to Video via Frame-Clip Consistency of Object Tokens”, NeurIPS, 2022 (Tel Aviv). [Paper][Website]

ST-Adapter: “ST-Adapter: Parameter-Efficient Image-to-Video Transfer Learning”, NeurIPS, 2022 (CUHK). [Paper][Code (in construction)]

ATA: “Alignment-guided Temporal Attention for Video Action Recognition”, NeurIPS, 2022 (Microsoft). [Paper]

AIA: “Attention in Attention: Modeling Context Correlation for Efficient Video Classification”, TCSVT, 2022 (University of Science and Technology of China). [Paper][PyTorch]

MSCA: “Vision Transformer with Cross-attention by Temporal Shift for Efficient Action Recognition”, arXiv, 2022 (Nagoya Institute of Technology). [Paper]

VAST: “Efficient Attention-free Video Shift Transformers”, arXiv, 2022 (Samsung). [Paper]

Video-MobileFormer: “Video Mobile-Former: Video Recognition with Efficient Global Spatial-temporal Modeling”, arXiv, 2022 (Microsoft). [Paper]

MAM2: “It Takes Two: Masked Appearance-Motion Modeling for Self-supervised Video Transformer Pre-training”, arXiv, 2022 (Baidu). [Paper]

?: “Linear Video Transformer with Feature Fixation”, arXiv, 2022 (SenseTime). [Paper]

STAN: “Two-Stream Transformer Architecture for Long Video Understanding”, arXiv, 2022 (The University of Surrey, UK). [Paper]

PatchBlender: “PatchBlender: A Motion Prior for Video Transformers”, arXiv, 2022 (Mila). [Paper]

DualPath: “Dual-path Adaptation from Image to Video Transformers”, CVPR, 2023 (Yonsei University). [Paper][PyTorch (in construction)]

S-ViT: “Streaming Video Model”, CVPR, 2023 (Microsoft). [Paper][Code (in construction)]

TubeViT: “Rethinking Video ViTs: Sparse Video Tubes for Joint Image and Video Learning”, CVPR, 2023 (Google). [Paper]

AdaMAE: “AdaMAE: Adaptive Masking for Efficient Spatiotemporal Learning with Masked Autoencoders”, CVPR, 2023 (JHU). [Paper][PyTorch]

ObjectViViT: “How can objects help action recognition?”, CVPR, 2023 (Google). [Paper]

SMViT: “Simple MViT: A Hierarchical Vision Transformer without the Bells-and-Whistles”, ICML, 2023 (Meta). [Paper]

Hiera: “Hiera: A Hierarchical Vision Transformer without the Bells-and-Whistles”, ICML, 2023 (Meta). [Paper][PyTorch]

Video-FocalNet: “Video-FocalNets: Spatio-Temporal Focal Modulation for Video Action Recognition”, ICCV, 2023 (MBZUAI). [Paper][PyTorch][Website]

ATM: “What Can Simple Arithmetic Operations Do for Temporal Modeling?”, ICCV, 2023 (Baidu). [Paper][Code (in construction)]

STA: “Prune Spatio-temporal Tokens by Semantic-aware Temporal Accumulation”, ICCV, 2023 (Huawei). [Paper]

Helping-Hands: “Helping Hands: An Object-Aware Ego-Centric Video Recognition Model”, ICCV, 2023 (Oxford). [Paper][PyTorch]

SUM-L: “Learning from Semantic Alignment between Unpaired Multiviews for Egocentric Video Recognition”, ICCV, 2023 (University of Delaware, Delaware). [Paper][Code (in construction)]

BEAR: “A Large-scale Study of Spatiotemporal Representation Learning with a New Benchmark on Action Recognition”, ICCV, 2023 (UCF). [Paper][GitHub]

UniFormerV2: “UniFormerV2: Spatiotemporal Learning by Arming Image ViTs with Video UniFormer”, ICCV, 2023 (CAS). [Paper][PyTorch]

CAST: “CAST: Cross-Attention in Space and Time for Video Action Recognition”, NeurIPS, 2023 (Kyung Hee University). [Paper][PyTorch][Website]

PPMA: “Learning Human Action Recognition Representations Without Real Humans”, NeurIPS (Datasets and Benchmarks), 2023 (IBM). [Paper][PyTorch]

SVT: “SVT: Supertoken Video Transformer for Efficient Video Understanding”, arXiv, 2023 (Meta). [Paper]

PLAR: “Prompt Learning for Action Recognition”, arXiv, 2023 (Maryland). [Paper]

SFA-ViViT: “Optimizing ViViT Training: Time and Memory Reduction for Action Recognition”, arXiv, 2023 (Google). [Paper]

TAdaConv: “Temporally-Adaptive Models for Efficient Video Understanding”, arXiv, 2023 (NUS). [Paper][PyTorch]

ZeroI2V: “ZeroI2V: Zero-Cost Adaptation of Pre-trained Transformers from Image to Video”, arXiv, 2023 (Nanjing University). [Paper]

MV-Former: “Multi-entity Video Transformers for Fine-Grained Video Representation Learning”, arXiv, 2023 (Meta). [Paper][PyTorch]

GeoDeformer: “GeoDeformer: Geometric Deformable Transformer for Action Recognition”, arXiv, 2023 (HKUST). [Paper]

Early-ViT: “Early Action Recognition with Action Prototypes”, arXiv, 2023 (Amazon). [Paper]

MCA: “Don’t Judge by the Look: A Motion Coherent Augmentation for Video Recognition”, ICLR, 2024 (Northeastern University). [Paper][PyTorch]

VideoMamba: “VideoMamba: State Space Model for Efficient Video Understanding”, arXiv, 2024 (Shanghai AI Lab). [Paper][PyTorch]

Video-Mamba-Suite: “Video Mamba Suite: State Space Model as a Versatile Alternative for Video Understanding”, arXiv, 2024 (Shanghai AI Lab). [Paper][PyTorch]
