source: https://docs.docker.com/get-started

1 Docker Overview

1.1 Docker Architecture

Docker is an open container platform for developing, shipping, and running applications.

1.2 Docker Components

- Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

- Docker client (docker) is the primary way that many Docker users interact with Docker. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.

- Docker registries store Docker images. Docker Hub is a public registry and Docker is configured to look for images on Docker Hub by default.

1.3 Docker objects

Docker objects include images, containers, networks, volumes, plugins, and other objects.

- Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you may build an image which is based on the ubuntu image, but installs the Apache web server and your application, as well as the configuration details needed to make your application run.

To build your own image, you create a Dockerfile with a simple syntax for defining the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, only those layers which have changed are rebuilt. This is part of what makes images so lightweight, small, and fast.

- Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

When a container is removed, any changes to its state that are not stored in persistent storage disappear.

1.4 Example docker run command

The following command runs an ubuntu container, attaches interactively to your local command-line session, and runs /bin/bash.

docker run -i -t ubuntu /bin/bash

When you run this command, the following happens (assuming you are using the default registry configuration):

- If you do not have the ubuntu image locally, Docker pulls it from your configured registry, as though you had run docker pull ubuntu manually.

- Docker creates a new container, as though you had run a docker container create command manually.

- Docker allocates a read-write filesystem to the container, as its final layer. This allows a running container to create or modify files and directories in its local filesystem.

- Docker creates a network interface to connect the container to the default network, since you did not specify any networking options. This includes assigning an IP address to the container. By default, containers can connect to external networks using the host machine’s network connection.

- Docker starts the container and executes /bin/bash. Because the container is running interactively and attached to your terminal (due to the -i and -t flags), you can provide input using your keyboard while the output is logged to your terminal.

- When you type exit to terminate the /bin/bash command, the container stops but is not removed. You can start it again or remove it.
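The steps above can be sketched as separate commands. This is only a rough equivalent, not what Docker runs internally: the function name run_step_by_step and the DOCKER variable (which lets you swap in another binary for a dry run) are made up for illustration, and the filesystem and network setup happen inside the daemon during create and start, so they have no command of their own here.

```shell
# Binary to invoke; override (e.g. DOCKER=echo) for a dry run. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# run_step_by_step IMAGE [COMMAND...]
# Roughly what `docker run -i -t IMAGE COMMAND` does, one step at a time.
run_step_by_step() {
  image="$1"
  shift
  # Step 1: pull the image only if it is not available locally.
  if ! "$DOCKER" image inspect "$image" >/dev/null 2>&1; then
    "$DOCKER" pull "$image" || return 1
  fi
  # Step 2: create the container; the command prints the new container ID.
  cid="$("$DOCKER" container create -i -t "$image" "$@")" || return 1
  # Step 3: start it, attached to the terminal with stdin kept open.
  "$DOCKER" container start -a -i "$cid"
}
```

Usage would be run_step_by_step ubuntu /bin/bash. After typing exit, the container is stopped but still listed by docker ps -a.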

2 Get Docker

2.1 Docker Desktop and Docker Engine

- Docker Desktop is an easy-to-install application for your Mac or Windows environment that enables you to build and share containerized applications and microservices. Docker Desktop includes Docker Engine, Docker CLI client, Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper.

- Docker Engine is an open source containerization technology for building and containerizing your applications. Docker Engine acts as a client-server application with:

- A server with a long-running daemon process dockerd. The daemon creates and manages Docker objects, such as images, containers, networks, and volumes.

- APIs which specify interfaces that programs can use to talk to and instruct the Docker daemon.

- A command line interface (CLI) client docker. The CLI uses Docker APIs to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI.

2.2 Install Docker Engine on Ubuntu

2.2.1 Prerequisites

- OS requirements: the 64-bit version of one of these Ubuntu versions:

Ubuntu Hirsute 21.04

Ubuntu Focal 20.04 (LTS)

Ubuntu Bionic 18.04 (LTS)

Docker Engine is supported on x86_64 (or amd64), armhf, arm64, and s390x architectures.

- Uninstall old versions

Older versions of Docker were called docker, docker.io, or docker-engine. If these are installed, uninstall them:

sudo apt-get remove docker docker-engine docker.io containerd runc

It’s OK if apt-get reports that none of these packages are installed.

The contents of /var/lib/docker/, including images, containers, volumes, and networks, are preserved.

- Supported storage drivers

Docker Engine on Ubuntu supports overlay2 (default), aufs and btrfs storage drivers.

2.2.2 Install using the repository

- Set up the repository

(1) Update the apt package index and install packages to allow apt to use a repository over HTTPS:

sudo apt-get update

sudo apt-get install \

apt-transport-https \

ca-certificates \

curl \

gnupg \

lsb-release

(2) Add Docker’s official GPG key:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

(3) Set up the stable repository.

Note: The lsb_release -cs sub-command below returns the codename of your Ubuntu release, such as focal.

echo \

"deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- Install Docker Engine

(1) Update the apt package index, and install the latest version of Docker Engine and containerd, or go to the next step to install a specific version:

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io

(2) (optional) To install a specific version of Docker Engine, list the available versions in the repo, then select and install:

a. List the versions available in your repo:

apt-cache madison docker-ce

b. Install a specific version using the version string from the second column, for example, 5:18.09.1~3-0~ubuntu-xenial.

sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io

(3) Verify that Docker Engine is installed correctly by running the hello-world image.

sudo docker run hello-world

Docker Engine is installed and running. The docker group is created but no users are added to it. You need to use sudo to run Docker commands. Continue to Linux postinstall to allow non-privileged users to run Docker commands and for other optional configuration steps.

- Upgrade Docker Engine

To upgrade Docker Engine, first run sudo apt-get update, then follow the installation instructions, choosing the new version you want to install.

2.2.3 Uninstall Docker Engine

Uninstall the Docker Engine, CLI, and containerd packages:

sudo apt-get purge docker-ce docker-ce-cli containerd.io

Images, containers, volumes, or customized configuration files on your host are not automatically removed. To delete all images, containers, and volumes:

sudo rm -rf /var/lib/docker

sudo rm -rf /var/lib/containerd

You must delete any edited configuration files manually.

3 Get Docker Started

3.1 Getting Started

Start the container for this tutorial:

docker run -d -p 80:80 docker/getting-started

// -d : run the container in detached mode (in the background)

// -p 80:80 : map port 80 of the host to port 80 in the container

// docker/getting-started : the image to use

3.2 Demo Application

3.2.1 Getting App

- For the rest of this tutorial, we will be working with a simple todo list manager that is running in Node.js.

- First we need to get the application source code. For real projects, you will typically clone the repo. But for this tutorial we just download a ZIP file and extract the files to ~/docker/app. Open the folder in VS Code.

- (todo) How to clone the repo directory and files?

3.2.2 Building Container Image

In order to build the application, we need to use a Dockerfile. A Dockerfile is simply a text-based script of instructions that is used to create a container image.

vim docker/app/Dockerfile

FROM node:12-alpine

RUN apk add --no-cache python g++ make

WORKDIR /app

COPY . .

RUN yarn install --production

CMD ["node", "src/index.js"]

Please check that the file Dockerfile has no file extension like .txt.

docker build -t getting-started .

// -t : tags the final image with a human-readable name.

// . : tells Docker to look for the Dockerfile in the current directory.

- First a lot of “layers” were downloaded one by one, because we instructed the builder that we wanted to start from the node:12-alpine image.

- Then we copied our application into the image.

- We used yarn to install app dependencies.

- CMD specifies the default command to run when starting a container from this image.

3.2.3 Starting App Container

docker run -dp 3000:3000 getting-started

Open a web browser to http://localhost:3000.

3.2.4 Updating App

- Update source code

In the src/static/js/app.js file, update line 56 so that the empty-list message reads: You have no todo items yet! Add one above!

- build new image

docker build -t getting-started .

- start container

docker run -dp 3000:3000 getting-started

- Error - port is allocated

docker: Error response from daemon: driver failed programming external connectivity on endpoint laughing_burnell (bb242b2ca4d67eba76e79474fb36bb5125708ebdabd7f45c8eaf16caaabde9dd): Bind for 0.0.0.0:3000 failed: port is already allocated.

We aren’t able to start the new container because our old container is still running. This is because the container is using the host’s port 3000 and only one process on the machine (containers included) can listen to a specific port. To fix this, we need to remove the old container.

- Replacing Old Container (todo: why doesn’t changing the port work?)

docker ps    # show the container ID

docker stop <the-container-id>

docker rm <the-container-id>

docker rm -f <the-container-id>    # or use this single command instead of the previous two
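The lookup-and-remove steps can be combined into one helper. This is a minimal sketch under assumptions: free_port and the DOCKER override variable are hypothetical names, and it relies on the publish filter of docker ps to find containers that publish the given port.

```shell
# Binary to invoke; override for testing. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# free_port PORT: force-remove every running container that publishes PORT.
free_port() {
  port="$1"
  # -q prints only container IDs; the publish filter matches published ports.
  ids="$("$DOCKER" ps -q --filter "publish=$port")" || return 1
  if [ -z "$ids" ]; then
    echo "no running container publishes port $port"
    return 0
  fi
  for id in $ids; do
    "$DOCKER" rm -f "$id"
  done
}
```

After free_port 3000, the docker run -dp 3000:3000 getting-started command should no longer hit the port conflict. Mapping a different host port (for example -p 3001:3000) would also avoid the error, but it leaves the old container running.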

4 Persisting Data

4.1 Container’s Filesystem

- Look at an example:

docker run -d ubuntu bash -c "shuf -i 1-10000 -n 1 -o /data.txt && tail -f /dev/null"

// "shuf ..." picks a single random number and writes it to /data.txt

// "tail ..." watches a file to keep the container running.

docker exec <container-id> cat /data.txt

We can see a random number.

But when we run another ubuntu container, there is no /data.txt file, because each container gets its own filesystem.

docker run -it ubuntu ls /

todo: this container is not running: “docker ps” does not show it, but “docker ps -a” does. What is the difference? How do I start a container again? Is the “run” command not enough?
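On the docker ps question: docker ps lists only running containers, while docker ps -a also lists stopped ones, and a container runs only as long as its main process does (ls / finishes immediately). A small sketch for checking and restarting a container follows; container_status, ensure_running, and the DOCKER override variable are hypothetical names.

```shell
# Binary to invoke; override for testing. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# container_status ID: print the container state (for example running or exited).
container_status() {
  "$DOCKER" inspect -f '{{.State.Status}}' "$1"
}

# ensure_running ID: start the container again if it is not running.
ensure_running() {
  status="$(container_status "$1")" || return 1
  if [ "$status" = "running" ]; then
    echo "$1 is already running"
  else
    "$DOCKER" start "$1"
  fi
}
```

Note that docker start re-runs the container's original command, so a container created with ls / will simply list the directory and exit again.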

4.2 Persisting Data with Named Volumes

- Create a named volume

docker volume create todo-db

// Where is the data stored? Under /var/lib/docker/volumes/todo-db/_data on the host; the directory is not accessible without root.

- Stop the todo app container

- Start the todo app container with -v flag to specify a volume mount, and mount it to /etc/todos.

docker run -dp 3000:3000 -v todo-db:/etc/todos getting-started

- Open the app and add a few items.

- Remove the container.

- Run a new container; the items you added are still there.

4.3 Where is the Volume and Data?

docker volume inspect todo-db

[
    {
        "CreatedAt": "2021-10-05T19:07:33+08:00",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/todo-db/_data",
        "Name": "todo-db",
        "Options": {},
        "Scope": "local"
    }
]
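If only the location is needed, the Mountpoint field can be extracted directly with the --format (-f) option of docker volume inspect. A minimal sketch, where volume_mountpoint and the DOCKER override variable are hypothetical names:

```shell
# Binary to invoke; override for testing. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# volume_mountpoint NAME: print only the Mountpoint field of a volume.
volume_mountpoint() {
  "$DOCKER" volume inspect -f '{{ .Mountpoint }}' "$1"
}
```

For example, sudo ls "$(volume_mountpoint todo-db)" lists the volume's files on a Linux host. On Docker Desktop the path lives inside the virtual machine rather than on the host filesystem.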

5 Using Bind Mounts

5.1 Using Bind Mounts

Named volumes are great if we simply want to store data, as we don’t have to worry about where the data is stored.

With bind mounts, we control the exact mountpoint on the host. They are often used to provide additional data to containers. When working on an application, we can use a bind mount to mount our source code into the container to let it see code changes, respond, and let us see the changes right away.

For Node-based applications, nodemon is a great tool to watch for file changes and then restart the application. There are equivalent tools in most other languages and frameworks.

5.2 Quick Comparison

Bind mounts and named volumes are the two main types of volumes that come with the Docker engine. However, additional volume drivers are available to support other use cases (SFTP, Ceph, NetApp, S3, and more).

| Items | Named Volumes | Bind Mounts |
|---|---|---|
| Host Location | Docker Chooses | You Control |
| Mount example | my-volume:/usr/local/data | /path/to/data:/usr/local/data |
| Populates new volume with container contents | Yes | No |
| Supports Volume Drivers | Yes | No |
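As a small illustration of the “Mount example” row, the sketch below guesses the mount type from a -v argument by looking at the part before the first colon. The function name mount_kind is made up, and the rule is deliberately simplified: an absolute path means a bind mount, anything else is treated as a volume name. It ignores Windows paths and relative paths.

```shell
# mount_kind SPEC: classify a -v argument such as my-volume:/usr/local/data.
mount_kind() {
  spec="$1"
  # Keep everything before the first colon (the host side of the mapping).
  src="${spec%%:*}"
  case "$src" in
    /*) echo "bind mount" ;;
    *)  echo "named volume" ;;
  esac
}
```

With the table's examples, mount_kind my-volume:/usr/local/data reports a named volume and mount_kind /path/to/data:/usr/local/data reports a bind mount.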

5.3 Starting a Dev-Mode Container

5.3.1 Steps to support Dev Workflow

- Mount source code into the container

- Install all dependencies, including the “dev” dependencies

- Start nodemon to watch for filesystem changes

5.3.2 Example

- Make sure you don’t have any previous getting-started containers running.

- Run the following command.

docker run -dp 3000:3000 \

-w /app -v "$(pwd):/app" \

node:12-alpine \

sh -c "yarn install && yarn run dev"

-w /app - sets the "working directory" or the current directory in container that the command will run from

-v "$(pwd):/app" - bind mount the current directory from the host into the /app directory in container

node:12-alpine - the base image to use for our app from the Dockerfile

sh -c "yarn install && yarn run dev" - We're starting a shell using sh (alpine doesn't have bash) and running yarn install to install all dependencies and then running yarn run dev.

If we look in the package.json, we’ll see that the dev script is starting nodemon.

- Watch the logs using docker logs -f <container-id>.

$ sudo docker logs -f <container-id>

$ nodemon src/index.js

[nodemon] 1.19.2

[nodemon] to restart at any time, enter `rs`

[nodemon] watching dir(s): *.*

[nodemon] starting `node src/index.js`

Using sqlite database at /etc/todos/todo.db

Listening on port 3000

When I changed the app.js file and saved it, the logs showed that a change was detected.

(Exit by pressing Ctrl+C.)

- Make a change to the app. In the src/static/js/app.js file (line 109), change the “Add Item” button to “Add”.

- {submitting ? 'Adding...' : 'Add Item'}

+ {submitting ? 'Adding...' : 'Add'}

- Simply refresh the page (localhost:3000) and you should see the change reflected in the browser almost immediately.

- Feel free to make any other changes you’d like to make. When you’re done, stop the container and build your new image using docker build -t getting-started …

Using bind mounts is very common for local development setups. The advantage is that the dev machine doesn’t need to have all of the build tools and environments installed. With a single docker run command, the dev environment is pulled and ready to go.

6 Multi Container Apps

6.1 Start MySQL

- Create the network.

docker network create todo-app

- Start a MySQL container and attach it to the network.

We’re also going to define a few environment variables that the database will use to initialize the database.

docker run -d \

--network todo-app --network-alias mysql \

-v todo-mysql-data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=secret \

-e MYSQL_DATABASE=todos \

mysql:5.7

We’re using a volume named todo-mysql-data here, but we never ran a docker volume create command. Docker recognizes that we want to use a named volume and creates one automatically for us.

- To confirm we have the database up and running, connect to the database and verify it connects.

docker exec -it <mysql-container-id> mysql -u root -p
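Because the database needs some time to initialize, a scripted check can poll it before connecting. This is a rough readiness sketch, not part of the tutorial: wait_for_mysql and the DOCKER override variable are hypothetical names, and it assumes the mysqladmin client is available inside the container (it ships with the MySQL server packages). mysqladmin ping exits with 0 as soon as a server answers, even if the login itself is refused.

```shell
# Binary to invoke; override for testing. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# wait_for_mysql CONTAINER [TRIES]: poll once per second until the server answers a ping.
wait_for_mysql() {
  container="$1"
  tries="${2:-30}"
  i=1
  while [ "$i" -le "$tries" ]; do
    if "$DOCKER" exec "$container" mysqladmin ping --silent >/dev/null 2>&1; then
      echo "mysql is up after $i attempt(s)"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "mysql did not respond after $tries attempts" >&2
  return 1
}
```

A typical use would be wait_for_mysql <mysql-container-id> followed by the docker exec command above.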

6.2 Connect to MySQL

- Start a new container using the nicolaka/netshoot image (an image that ships with networking tools), and connect it to the same network.

docker run -it --network todo-app nicolaka/netshoot

- Inside the netshoot container, use the dig command to look up the IP for the hostname mysql.

dig mysql

In the “ANSWER SECTION” of the dig output, you will see an A record for mysql that resolves to an IP address on the todo-app network (172.18.0.2 in this run). While mysql isn’t normally a valid hostname, Docker was able to resolve it to the IP address of the container that had that network alias.

What this means is that our app simply needs to connect to a host named mysql and it’ll talk to the database! It doesn’t get much simpler than that!

6.3 Run your app with MySQL

While using environment variables to set connection settings is generally ok for development, it is HIGHLY DISCOURAGED when running applications in production. Diogo Monica, the former lead of security at Docker, wrote a fantastic blog post explaining why. https://diogomonica.com/2017/03/27/why-you-shouldnt-use-env-variables-for-secret-data/

A more secure mechanism is to use the secret support provided by your container orchestration framework. In most cases, these secrets are mounted as files in the running container. You’ll see many apps (including the MySQL image and the todo app) also support env vars with a _FILE suffix to point to a file containing the variable.
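The _FILE convention can be sketched in a few lines of shell. This is an illustration of the idea, not the code used by the MySQL image or the todo app: resolve_secret is a hypothetical helper that prints the value of a variable, or the contents of the file named by its _FILE counterpart, and refuses to continue if both are set.

```shell
# resolve_secret NAME: print $NAME, or the contents of the file named by $NAME_FILE.
resolve_secret() {
  name="$1"
  # Indirect lookups: read the variables whose names are built from NAME.
  eval "value=\${$name:-}"
  eval "file=\${${name}_FILE:-}"
  if [ -n "$value" ] && [ -n "$file" ]; then
    echo "both $name and ${name}_FILE are set" >&2
    return 1
  fi
  if [ -n "$file" ]; then
    value="$(cat "$file")"
  fi
  printf '%s\n' "$value"
}
```

With MYSQL_PASSWORD_FILE pointing at a mounted secret file (the path is up to the orchestrator), resolve_secret MYSQL_PASSWORD prints the password without it ever appearing in the container's environment.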

- Specify the environment variables and connect the container to our app network.

docker run -dp 3000:3000 \

-w /app -v "$(pwd):/app" \

--network todo-app \

-e MYSQL_HOST=mysql \

-e MYSQL_USER=root \

-e MYSQL_PASSWORD=secret \

-e MYSQL_DB=todos \

node:12-alpine \

sh -c "yarn install && yarn run dev"

- Look at the logs for the container (docker logs <container-id>); you should see a message indicating it’s using the mysql database.

- Open the app in browser and add a few items to your todo list.

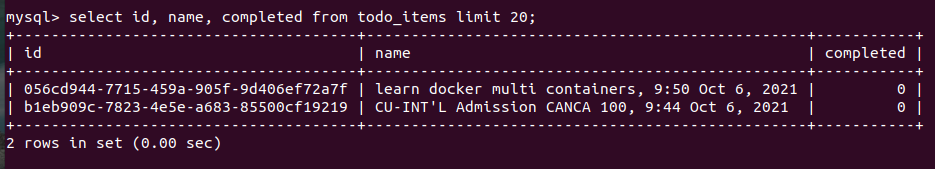

- Connect to the mysql database and prove that the items are being written to the database.

docker exec -it <mysql-container-id> mysql -u root -p

7 Use Docker Compose

7.1 Install Docker Compose

sudo apt install docker-compose    # version 1.25.0

7.2 Create Compose File

- At the root of the app project, create a file named docker-compose.yml.

- In the compose file, start off by defining the schema version.

In most cases, it’s best to use the latest supported version. You can look at the Compose file reference for the current schema versions and the compatibility matrix.

- Define the list of services (or containers) we want to run as part of our application.

7.3 Define App Service

version: "3.7"

services:
  app:
    image: node:12-alpine
    command: sh -c "yarn install && yarn run dev"
    ports:
      - 3000:3000
    working_dir: /app
    volumes:
      - ./:/app
    environment:
      MYSQL_HOST: mysql
      MYSQL_USER: root
      MYSQL_PASSWORD: secret
      MYSQL_DB: todos

7.4 Define MySQL service

version: "3.7"

services:
  app:
    ...
  mysql:
    image: mysql:5.7
    volumes:
      - todo-mysql-data:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: secret
      MYSQL_DATABASE: todos

volumes:
  todo-mysql-data:

// When we ran the container with docker run, the named volume was created automatically.

// However, that doesn’t happen when running with Docker Compose.

7.5 Run the app stack with a single command

- Make sure no other copies of the app/db are running first (docker ps and docker rm -f <ids>).

- Start up the application stack using the docker-compose up command. We’ll add the -d flag to run everything in the background.

docker-compose up -d

- Let’s look at the logs using the docker-compose logs -f command. You’ll see the logs from each of the services interleaved into a single stream. This is incredibly useful when you want to watch for timing-related issues. The -f flag “follows” the log, so will give you live output as it’s generated.

- At this point, you should be able to open your app and see it running. And hey! We’re down to a single command!

7.6 Tear it all down

docker-compose down --volumes

// with --volumes, docker-compose also removes the named volumes declared in the compose file

8 Image Building Best Practices

8.1 Security Scanning

After building an image, always scan it for security vulnerabilities.

Docker has partnered with Snyk (snyk.io) to provide the vulnerability scanning service.

You must be logged in to Docker Hub to scan your images. Run the command docker login, and then scan your images using docker scan <image-name>.

8.2 Image layering

- Use docker image history <image-name> to see the layers that make up an image.

- Add the --no-trunc flag (docker image history --no-trunc <image-name>) to show the full output without truncation.

8.3 Layer caching

- Once a layer changes, all downstream layers have to be recreated as well.

- The previous Dockerfile is as follows:

FROM node:12-alpine

WORKDIR /app

COPY . .

RUN yarn install --production

CMD ["node", "src/index.js"]

It can be improved if we restructure the file to help support the caching of dependencies.

- For Node-based applications, those dependencies are defined in the package.json file. So, what if we copied only that file in first, installed the dependencies, and then copied in everything else? Then, we only recreate the yarn dependencies if there was a change to the package.json.
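Why a change invalidates everything after it can be shown with a toy model in which each layer's cache key depends on the previous key plus the instruction text. This is a simplified illustration, not Docker's actual cache implementation (the real builder also takes the contents of copied files into account); layer_keys is a hypothetical helper built on the standard cksum utility.

```shell
# layer_keys: read Dockerfile instructions on stdin and print "<key>  <instruction>" per line.
# Each key is a checksum over the previous key and the current instruction text.
layer_keys() {
  parent=""
  while IFS= read -r instruction || [ -n "$instruction" ]; do
    parent="$(printf '%s\n%s' "$parent" "$instruction" | cksum | cut -d' ' -f1)"
    printf '%s  %s\n' "$parent" "$instruction"
  done
}
```

Feeding two Dockerfiles that differ only in the third instruction gives identical keys for the first two layers and different keys from the third onward, including for a later RUN yarn install --production line whose text did not change. That is the reason for moving the rarely changing package.json step ahead of the frequently changing source copy.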

(1) Update the Dockerfile to copy in the package.json first, install dependencies, and then copy everything else in.

FROM node:12-alpine

WORKDIR /app

COPY package.json yarn.lock ./

RUN yarn install --production

COPY . .

CMD ["node", "src/index.js"]

(2) Create a file named .dockerignore in the same folder as the Dockerfile with the following contents: node_modules

(3) Build a new image

docker build -t getting-started .

You’ll see that all layers were rebuilt. Perfectly fine since we changed the Dockerfile quite a bit.

(4)Now, make a change to the src/static/index.html file (like change the

8.4 Multi-stage builds

8.4.1 Maven/Tomcat example

# syntax=docker/dockerfile:1

FROM maven AS build

WORKDIR /app

COPY . .

RUN mvn package

FROM tomcat

COPY --from=build /app/target/file.war /usr/local/tomcat/webapps

- Use one stage (called build) to perform the actual Java build using Maven.

- In the second stage (starting at FROM tomcat), we copy in files from the build stage.

- The final image is only the last stage being created (which can be overridden using the --target flag).
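To stop at an intermediate stage, for example to inspect the Maven build output, the --target flag mentioned above can be wrapped in a small helper. A minimal sketch; build_stage, the DOCKER override variable, and the myapp:build tag in the usage note are hypothetical names.

```shell
# Binary to invoke; override (e.g. DOCKER=echo) for a dry run. Hypothetical convention.
DOCKER="${DOCKER:-docker}"

# build_stage STAGE TAG [CONTEXT]: build the Dockerfile only up to the named stage.
build_stage() {
  "$DOCKER" build --target "$1" -t "$2" "${3:-.}"
}
```

For the Dockerfile above, build_stage build myapp:build would produce an image containing the Maven toolchain and the compiled artifact, while a plain docker build yields only the Tomcat-based final stage.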

8.4.2 React example

# syntax=docker/dockerfile:1

FROM node:12 AS build

WORKDIR /app

COPY package* yarn.lock ./

RUN yarn install

COPY public ./public

COPY src ./src

RUN yarn run build

FROM nginx:alpine

COPY --from=build /app/build /usr/share/nginx/html

- We are using a node:12 image to perform the build (maximizing layer caching)

- Then copying the output into an nginx container.
