1. First, create a model.py file that defines the network. The code is as follows:

# model.py
import torch
import torch.nn as nn
import torch.nn.functional as F


class Conv(nn.Module):
    """Conv2d -> BatchNorm2d -> optional ReLU."""

    def __init__(self, in_channels, out_channels, kernel_size=1, stride=1,
                 padding=None, groups=1, activation=True):
        super(Conv, self).__init__()
        # "same"-style padding for odd kernel sizes unless padding is given explicitly
        padding = kernel_size // 2 if padding is None else padding
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride,
                              padding, groups=groups, bias=False)
        self.bn = nn.BatchNorm2d(out_channels)
        self.act = nn.ReLU(inplace=True) if activation else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))


class Bottleneck(nn.Module):
    """1x1 reduce -> 3x3 -> 1x1 expand, plus a residual shortcut."""

    def __init__(self, in_channels, out_channels, down_sample=False, groups=1):
        super(Bottleneck, self).__init__()
        stride = 2 if down_sample else 1
        mid_channels = out_channels // 4
        # Projection shortcut whenever the output shape differs from the input
        # (more channels or lower resolution); otherwise pass x through unchanged
        if in_channels != out_channels or down_sample:
            self.shortcut = Conv(in_channels, out_channels, kernel_size=1,
                                 stride=stride, activation=False)
        else:
            self.shortcut = nn.Identity()
        self.conv = nn.Sequential(
            Conv(in_channels, mid_channels, kernel_size=1, stride=1),
            Conv(mid_channels, mid_channels, kernel_size=3, stride=stride, groups=groups),
            Conv(mid_channels, out_channels, kernel_size=1, stride=1, activation=False),
        )

    def forward(self, x):
        y = self.conv(x) + self.shortcut(x)
        return F.relu(y, inplace=True)


class ResNet50(nn.Module):
    def __init__(self, num_classes):
        super(ResNet50, self).__init__()
        self.stem = nn.Sequential(
            Conv(3, 64, kernel_size=7, stride=2),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        # 3 + 4 + 6 + 3 bottleneck blocks
        self.stages = nn.Sequential(
            self._make_stage(64, 256, down_sample=False, num_blocks=3),
            self._make_stage(256, 512, down_sample=True, num_blocks=4),
            self._make_stage(512, 1024, down_sample=True, num_blocks=6),
            self._make_stage(1024, 2048, down_sample=True, num_blocks=3),
        )
        # The 7x7 window assumes 224x224 inputs (final feature map is 7x7 -> 1x1);
        # other input sizes would need nn.AdaptiveAvgPool2d(1) instead
        self.head = nn.Sequential(
            nn.AvgPool2d(kernel_size=7, stride=1, padding=0),
            nn.Flatten(start_dim=1, end_dim=-1),
            nn.Linear(2048, num_classes),
        )

    @staticmethod
    def _make_stage(in_channels, out_channels, down_sample, num_blocks):
        # Only the first block of a stage changes the channel count / resolution
        layers = [Bottleneck(in_channels, out_channels, down_sample=down_sample)]
        for _ in range(1, num_blocks):
            layers.append(Bottleneck(out_channels, out_channels, down_sample=False))
        return nn.Sequential(*layers)

    def forward(self, x):
        return self.head(self.stages(self.stem(x)))


# Quick shape check (uncomment to run; drop .cuda() on a CPU-only machine):
# if __name__ == "__main__":
#     inputs = torch.rand((8, 3, 224, 224)).cuda()
#     model = ResNet50(num_classes=1000).cuda().train()
#     outputs = model(inputs)
#     print(outputs.shape)  # torch.Size([8, 1000])
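Why a 7x7 pooling window fits 224x224 inputs can be checked by hand. The sketch below applies PyTorch's documented output-size rule for Conv2d, MaxPool2d and AvgPool2d (dilation 1, floor mode), `(size + 2*padding - kernel) // stride + 1`, to each step of the ResNet50 above. The helper names `conv_out` and `trace_resnet50` are hypothetical; only the spatial size is tracked, not channels.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet50(size: int = 224) -> list:
    """Spatial size after each step of the ResNet50 in model.py (square inputs)."""
    sizes = [("input", size)]
    size = conv_out(size, kernel=7, stride=2, padding=3)  # stem Conv, padding = 7 // 2
    sizes.append(("stem conv", size))
    size = conv_out(size, kernel=3, stride=2, padding=1)  # stem MaxPool2d
    sizes.append(("stem maxpool", size))
    for stage, down_sample in enumerate([False, True, True, True], start=1):
        if down_sample:
            # only the 3x3 conv in the first block of the stage has stride 2
            size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append((f"stage {stage}", size))
    size = conv_out(size, kernel=7, stride=1, padding=0)  # head AvgPool2d(7)
    sizes.append(("head avgpool", size))
    return sizes


for name, s in trace_resnet50(224):
    print(f"{name:>13}: {s}x{s}")
```

For a 224x224 input this prints 224, 112, 56, 56, 28, 14, 7 and finally 1, so the flattened vector has exactly 2048 features for `nn.Linear(2048, num_classes)`. A 256x256 input would end at 2x2 and the Linear layer would receive 8192 features instead. The 1x1 stride-2 shortcut lands on the same size as the 3x3 branch, e.g. (56 - 1) // 2 + 1 = 28, which is what makes the residual addition valid.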

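2. Download the dataset

DATA_URL = 'http://download.tensorflow.org/example_images/flower_photos.tgz'

Extract the archive into a flower_data folder next to the scripts, so that the class folders end up under flower_data/flower_photos/ (the path the split script in step 3 reads).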
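The download and extraction can also be scripted with the standard library. This is a sketch, assuming the archive's top-level folder is `flower_photos/` and that it should be unpacked into `flower_data/`; the helper name `download_and_extract` is hypothetical. The `filter="data"` argument is only passed when the running Python's `tarfile` supports extraction filters.

```python
import os
import tarfile
import urllib.request

DATA_URL = 'http://download.tensorflow.org/example_images/flower_photos.tgz'


def download_and_extract(url: str, dest_dir: str = "flower_data") -> str:
    """Download the .tgz (unless already present) and unpack it into dest_dir."""
    os.makedirs(dest_dir, exist_ok=True)
    archive_path = os.path.join(dest_dir, os.path.basename(url))
    if not os.path.exists(archive_path):
        urllib.request.urlretrieve(url, archive_path)
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # safer extraction on Python versions that support tar filters
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    # assumption: the archive contains a single top-level flower_photos/ folder
    return os.path.join(dest_dir, "flower_photos")
```

Calling `download_and_extract(DATA_URL)` once from the project directory should leave the images under `flower_data/flower_photos/<class>/`; a second call reuses the already downloaded archive.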

3. Once the download has finished, write a split_data.py file that splits the dataset into a training set and a validation set (10% of the images of each class go to validation):

# split_data.py
import os
import random
from shutil import copy


def mkfile(file):
    # Create the directory (and any missing parents) if it does not exist yet
    if not os.path.exists(file):
        os.makedirs(file)


file = 'flower_data/flower_photos'
# Every sub-folder is one class (this also skips LICENSE.txt)
flower_class = [cla for cla in os.listdir(file) if os.path.isdir(os.path.join(file, cla))]

mkfile('flower_data/train')
for cla in flower_class:
    mkfile('flower_data/train/' + cla)

mkfile('flower_data/val')
for cla in flower_class:
    mkfile('flower_data/val/' + cla)

split_rate = 0.1  # fraction of each class copied to the validation set
for cla in flower_class:
    cla_path = file + '/' + cla + '/'
    images = os.listdir(cla_path)
    num = len(images)
    # Randomly choose int(num * split_rate) images of this class for validation
    eval_index = set(random.sample(images, k=int(num * split_rate)))
    for index, image in enumerate(images):
        image_path = cla_path + image
        if image in eval_index:
            new_path = 'flower_data/val/' + cla
        else:
            new_path = 'flower_data/train/' + cla
        copy(image_path, new_path)
        print("\r[{}] processing [{}/{}]".format(cla, index + 1, num), end="")  # progress bar
    print()

print("processing done!")
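The split script above calls `random.sample` without a seed, so every run produces a different split, and because it only copies files, re-running it over existing `train`/`val` folders can leave the same image in both sets (delete those two folders before re-running). A reproducible variant of the same sampling idea is sketched below; the function name `split_names` and the seed value are assumptions for illustration.

```python
import random


def split_names(names, split_rate=0.1, seed=0):
    """Return (train, val); val holds int(len(names) * split_rate) names.

    Same random.sample approach as the split script, but with a fixed seed
    (an arbitrary choice) so the split is identical on every run.
    """
    names = sorted(names)  # os.listdir order is arbitrary; sort for reproducibility
    rng = random.Random(seed)
    val = set(rng.sample(names, k=int(len(names) * split_rate)))
    train = [n for n in names if n not in val]
    return train, sorted(val)


train, val = split_names([f"img_{i:03d}.jpg" for i in range(50)])
print(len(train), len(val))  # 45 5
```

Inside the script's class loop, `images` could be passed through `split_names` and the two returned lists copied to `flower_data/train/<class>` and `flower_data/val/<class>` respectively.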

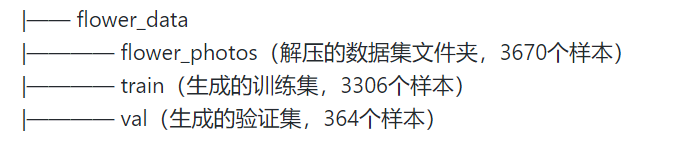

之后应该是这样:
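`datasets.ImageFolder` in the training step expects exactly this one-folder-per-class layout. A small sketch for checking the result from a script instead of a file browser; `count_images` is a hypothetical helper and the paths assume it runs from the project directory.

```python
import os


def count_images(split_dir):
    """Map each class sub-folder of split_dir to the number of files it holds."""
    return {
        cla: len(os.listdir(os.path.join(split_dir, cla)))
        for cla in sorted(os.listdir(split_dir))
        if os.path.isdir(os.path.join(split_dir, cla))
    }


for split in ("train", "val"):
    path = os.path.join("flower_data", split)
    if os.path.isdir(path):
        print(split, count_images(path))
```

Each class in `val` should hold roughly one tenth as many images as the same class in `flower_photos`.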

4. Next, write a train.py file to train the model:

# train.py
import json
import os

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets

from model import ResNet50

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# ImageNet mean/std normalization; random crop + horizontal flip augment the training set
data_transform = {
    "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                 transforms.RandomHorizontalFlip(),
                                 transforms.ToTensor(),
                                 transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
    "val": transforms.Compose([transforms.Resize(256),
                               transforms.CenterCrop(224),
                               transforms.ToTensor(),
                               transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

data_root = os.getcwd()  # get data root path
image_path = os.path.join(data_root, "flower_data")  # flower data set path

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                     transform=data_transform["train"])
train_num = len(train_dataset)

# {'daisy': 0, 'dandelion': 1, 'roses': 2, 'sunflowers': 3, 'tulips': 4}
flower_list = train_dataset.class_to_idx
cla_dict = dict((val, key) for key, val in flower_list.items())

# write the index -> class name dict into a json file
json_str = json.dumps(cla_dict, indent=4)
with open('class_indices.json', 'w') as json_file:
    json_file.write(json_str)

batch_size = 16
train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=batch_size, shuffle=True,
                                           num_workers=0)

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                        transform=data_transform["val"])
val_num = len(validate_dataset)
validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                              batch_size=batch_size, shuffle=False,
                                              num_workers=0)

net = ResNet50(num_classes=5)

# load pretrained weights (skipped if the file is not there)
model_weight_path = "./resnet50.pth"
if os.path.exists(model_weight_path):
    pre_weights = torch.load(model_weight_path, map_location="cpu")
    # Keep only tensors whose name and shape match this model, which drops a
    # classifier trained for a different number of classes. A checkpoint saved
    # from torchvision's resnet50 uses other key names (conv1, layer1, fc, ...),
    # so none of its tensors would match model.py: check the printed counts.
    model_dict = net.state_dict()
    pre_dict = {k: v for k, v in pre_weights.items()
                if k in model_dict and v.shape == model_dict[k].shape}
    missing_keys, unexpected_keys = net.load_state_dict(pre_dict, strict=False)
    print("loaded {} tensors, {} missing".format(len(pre_dict), len(missing_keys)))
net.to(device)

loss_function = nn.CrossEntropyLoss()
optimizer = optim.Adam(net.parameters(), lr=0.0001)

best_acc = 0.0
save_path = './resnet50_train.pth'
for epoch in range(5):
    # train
    net.train()
    running_loss = 0.0
    for step, data in enumerate(train_loader, start=0):
        images, labels = data
        optimizer.zero_grad()
        logits = net(images.to(device))
        loss = loss_function(logits, labels.to(device))
        loss.backward()
        optimizer.step()

        # print statistics
        running_loss += loss.item()
        # print train process
        rate = (step + 1) / len(train_loader)
        a = "*" * int(rate * 50)
        b = "." * int((1 - rate) * 50)
        print("\rtrain loss: {:^3.0f}%[{}->{}]{:.4f}".format(int(rate * 100), a, b, loss.item()), end="")
    print()

    # validate
    net.eval()
    acc = 0.0  # number of correct predictions in this epoch
    with torch.no_grad():
        for val_data in validate_loader:
            val_images, val_labels = val_data
            outputs = net(val_images.to(device))
            predict_y = torch.max(outputs, dim=1)[1]
            acc += (predict_y == val_labels.to(device)).sum().item()
    val_accurate = acc / val_num
    # keep the weights of the best epoch so far
    if val_accurate > best_acc:
        best_acc = val_accurate
        torch.save(net.state_dict(), save_path)
    print('[epoch %d] train_loss: %.3f  test_accuracy: %.3f' %
          (epoch + 1, running_loss / len(train_loader), val_accurate))

print('Finished Training')
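The class_indices.json file written by train.py is what a later prediction step would read to turn a predicted index back into a flower name. One detail is easy to miss: `json.dumps` turns the integer keys of `cla_dict` into strings, so the lookup needs `str(index)`. A minimal plain-Python sketch; the helper names are hypothetical and `scores` stands in for one row of the network's output.

```python
import json


def round_trip(class_to_idx):
    """Build cla_dict as train.py does, then serialize and reload it."""
    cla_dict = dict((val, key) for key, val in class_to_idx.items())  # {0: 'daisy', ...}
    return json.loads(json.dumps(cla_dict, indent=4))  # keys come back as '0', '1', ...


def predicted_name(scores, class_indict):
    """Take the index of the highest score (like torch.max(outputs, dim=1)[1])."""
    idx = max(range(len(scores)), key=lambda i: scores[i])
    return class_indict[str(idx)]  # str(): json stored the keys as strings


class_indict = round_trip({'daisy': 0, 'dandelion': 1, 'roses': 2, 'sunflowers': 3, 'tulips': 4})
print(predicted_name([0.1, 2.3, -1.0, 0.5, 0.0], class_indict))  # dandelion
```

Looking up `class_indict[1]` instead of `class_indict["1"]` would raise a `KeyError`.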
