Note: study notes on the video series by 刘二大人 (Liu Er).


Chapter 1 GoogLeNet

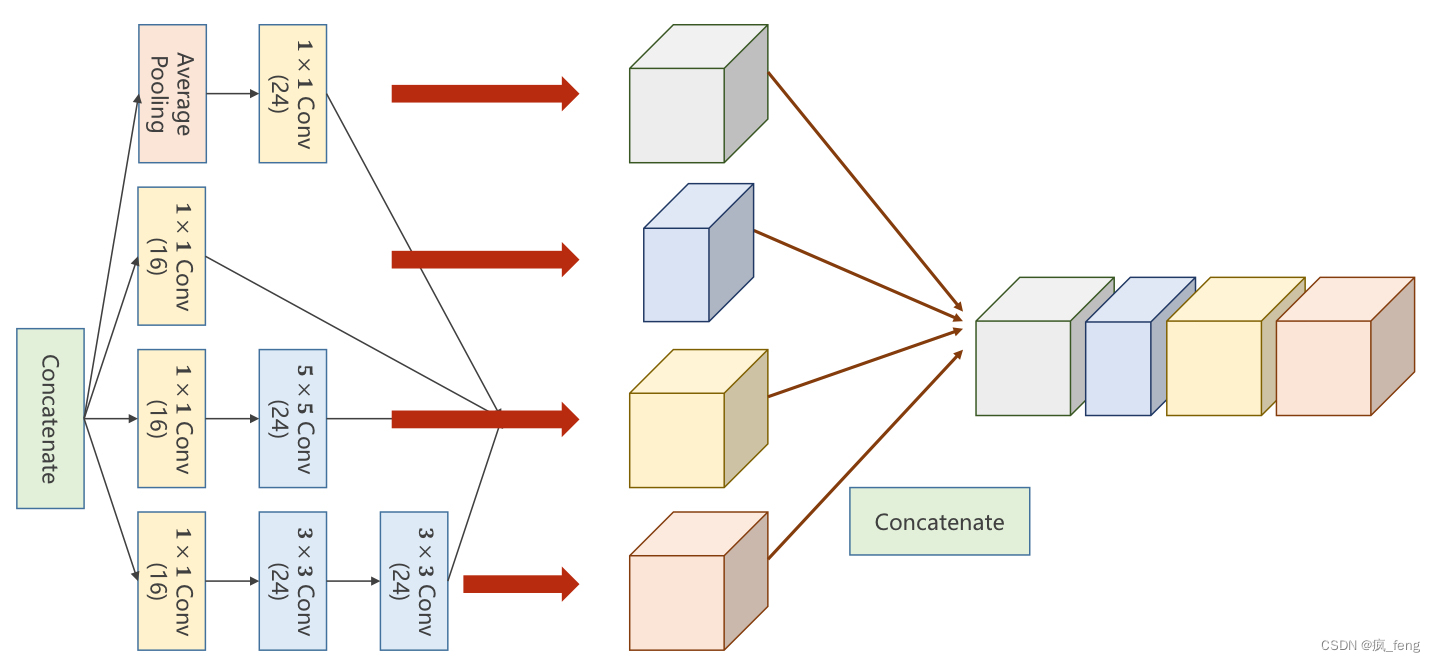

1.1 Implementation of Inception Module

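- Concatenate: the outputs of the four branches are joined along the channel dimension (`dim=1`), so every branch must produce feature maps with the same height and width.

```python
...
outputs = [branch1x1, branch5x5, branch3x3, branch_pool]
return torch.cat(outputs, dim=1)  # concatenate
```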

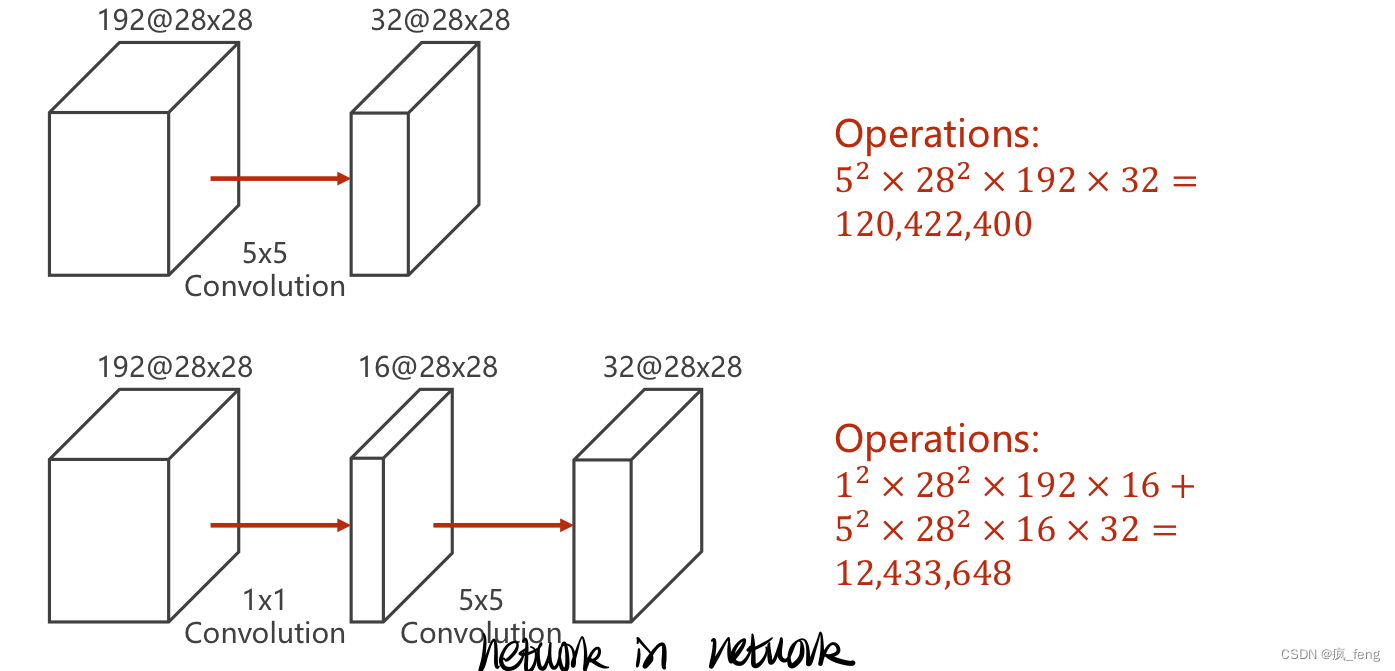
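The concatenation in the snippet above can be checked in isolation. This is a minimal sketch using random dummy tensors in place of real branch outputs; the batch size of 2 and the 12×12 spatial size are assumptions chosen for illustration, while the channel counts (16, 24, 24, 24) mirror `InceptionA`.

```python
import torch

# Dummy branch outputs: batch of 2, 12x12 feature maps (illustrative sizes).
# Channel counts follow InceptionA: 16 + 24 + 24 + 24.
branch1x1 = torch.randn(2, 16, 12, 12)
branch5x5 = torch.randn(2, 24, 12, 12)
branch3x3 = torch.randn(2, 24, 12, 12)
branch_pool = torch.randn(2, 24, 12, 12)

outputs = [branch1x1, branch5x5, branch3x3, branch_pool]
merged = torch.cat(outputs, dim=1)  # dim=1 is the channel axis in (B, C, H, W)
print(merged.shape)  # torch.Size([2, 88, 12, 12])
```

Only the channel dimension grows (16 + 24 × 3 = 88); batch, height and width must already agree, which is why every branch uses padding that preserves the spatial size.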

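- The effect of the 1×1 convolution: it changes the number of channels without changing the height and width, so it can shrink the channel count (and therefore the computation) before a costly 3×3 or 5×5 convolution.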
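To make the saving from a 1×1 convolution concrete, the sketch below counts multiplications for a 5×5 convolution with and without a 1×1 bottleneck. The shapes (a 28×28 feature map, 192 input channels, 32 output channels, a 16-channel bottleneck) are assumptions chosen for illustration, and `conv_mults` is a hypothetical helper that ignores bias terms and additions and assumes "same" padding.

```python
def conv_mults(h, w, c_in, c_out, k):
    """Multiplications for a k x k conv with 'same' padding on an h x w map
    (hypothetical helper; bias and additions are ignored)."""
    return k * k * h * w * c_in * c_out

# Direct 5x5 convolution: 192 -> 32 channels on a 28x28 feature map
direct = conv_mults(28, 28, 192, 32, 5)

# 1x1 bottleneck to 16 channels first, then the 5x5 convolution 16 -> 32
bottleneck = conv_mults(28, 28, 192, 16, 1) + conv_mults(28, 28, 16, 32, 5)

print(f"{direct:,}")      # 120,422,400
print(f"{bottleneck:,}")  # 12,443,648
print(round(direct / bottleneck, 1))  # 9.7
```

Under these assumptions the bottleneck needs roughly a tenth of the multiplications while producing an output of the same shape.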

1.2 Code

```python
import torch
import torch.nn as nn
from torchvision import transforms  # for constructing DataLoader
from torchvision import datasets  # for constructing DataLoader
from torch.utils.data import DataLoader  # for constructing DataLoader
import torch.nn.functional as F  # for using relu() and pooling
import torch.optim as optim  # for constructing Optimizer

# prepare dataset
batch_size = 64
# ToTensor converts the PIL Image to a Tensor in [0, 1];
# Normalize uses the mean (0.1307) and std (0.3081) of MNIST
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='./dataset', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root='./dataset', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)


# design model using Class
class InceptionA(nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)

        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)

        self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch5x5, branch3x3, branch_pool]
        return torch.cat(outputs, dim=1)  # concatenate along the channel dimension


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)  # 88 = 24*3 + 16
        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(1408, 10)  # 1408 = 88 * 4 * 4

    def forward(self, x):
        in_size = x.size(0)
        x = F.relu(self.mp(self.conv1(x)))
        x = self.incep1(x)
        x = F.relu(self.mp(self.conv2(x)))
        x = self.incep2(x)
        x = x.view(in_size, -1)  # flatten to (batch, 1408)
        x = self.fc(x)
        return x


model = Net()

# construct loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            # returns (max values, indices); the index is the predicted class
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
```
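The input size of the final linear layer (1408) follows from tracing the feature-map shape through the network. This is a minimal sketch in plain Python; `conv_out` is a hypothetical helper implementing the standard output-size formula floor((n + 2p - k) / s) + 1 shared by `Conv2d` and the pooling layers.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                            # MNIST images are 28x28
size = conv_out(size, 5)             # conv1, kernel 5          -> 24
size = conv_out(size, 2, stride=2)   # MaxPool2d(2)             -> 12
# incep1 keeps H and W (every branch pads to 'same' size)
size = conv_out(size, 5)             # conv2, kernel 5          -> 8
size = conv_out(size, 2, stride=2)   # MaxPool2d(2)             -> 4
# incep2 keeps H and W and outputs 16 + 24 + 24 + 24 channels
channels = 16 + 24 * 3
flat = channels * size * size
print(size, channels, flat)  # 4 88 1408
```

`nn.MaxPool2d(2)` uses a stride equal to its kernel size by default, which is why each pooling step halves the height and width.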

1.3 Result

```text
[1, 300] loss: 0.766
[1, 600] loss: 0.209
[1, 900] loss: 0.137
accuracy on test set: 96 %
[2, 300] loss: 0.110
[2, 600] loss: 0.101
[2, 900] loss: 0.090
accuracy on test set: 97 %
[3, 300] loss: 0.082
[3, 600] loss: 0.078
[3, 900] loss: 0.068
accuracy on test set: 97 %
[4, 300] loss: 0.065
[4, 600] loss: 0.060
[4, 900] loss: 0.061
accuracy on test set: 97 %
[5, 300] loss: 0.057
[5, 600] loss: 0.055
[5, 900] loss: 0.050
accuracy on test set: 98 %
[6, 300] loss: 0.050
[6, 600] loss: 0.051
[6, 900] loss: 0.045
accuracy on test set: 98 %
[7, 300] loss: 0.045
[7, 600] loss: 0.046
[7, 900] loss: 0.044
accuracy on test set: 98 %
[8, 300] loss: 0.037
[8, 600] loss: 0.039
[8, 900] loss: 0.045
accuracy on test set: 98 %
[9, 300] loss: 0.033
[9, 600] loss: 0.040
[9, 900] loss: 0.039
accuracy on test set: 98 %
[10, 300] loss: 0.036
[10, 600] loss: 0.032
[10, 900] loss: 0.036
accuracy on test set: 98 %
```

1.4 More details

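1. Average pooling: with `kernel_size=3`, `stride=1` and `padding=1`, the output keeps the input's height and width, so the pooling branch can be concatenated with the other branches.

```python
branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
```

2. `view()`: flattens each sample into a vector before the fully connected layer; `-1` tells PyTorch to infer that dimension (88 × 4 × 4 = 1408 here).

```python
x = x.view(in_size, -1)
```

3. `enumerate(train_loader, 0)`: yields `(batch_idx, data)` pairs, with the index starting from 0.

```python
for batch_idx, data in enumerate(train_loader, 0):
```

4. `torch.max(..., dim=1)`: returns the maximum values and their indices along dimension 1; the index of the largest output is the predicted class, and `_` discards the values.

```python
_, predicted = torch.max(outputs.data, dim=1)
```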

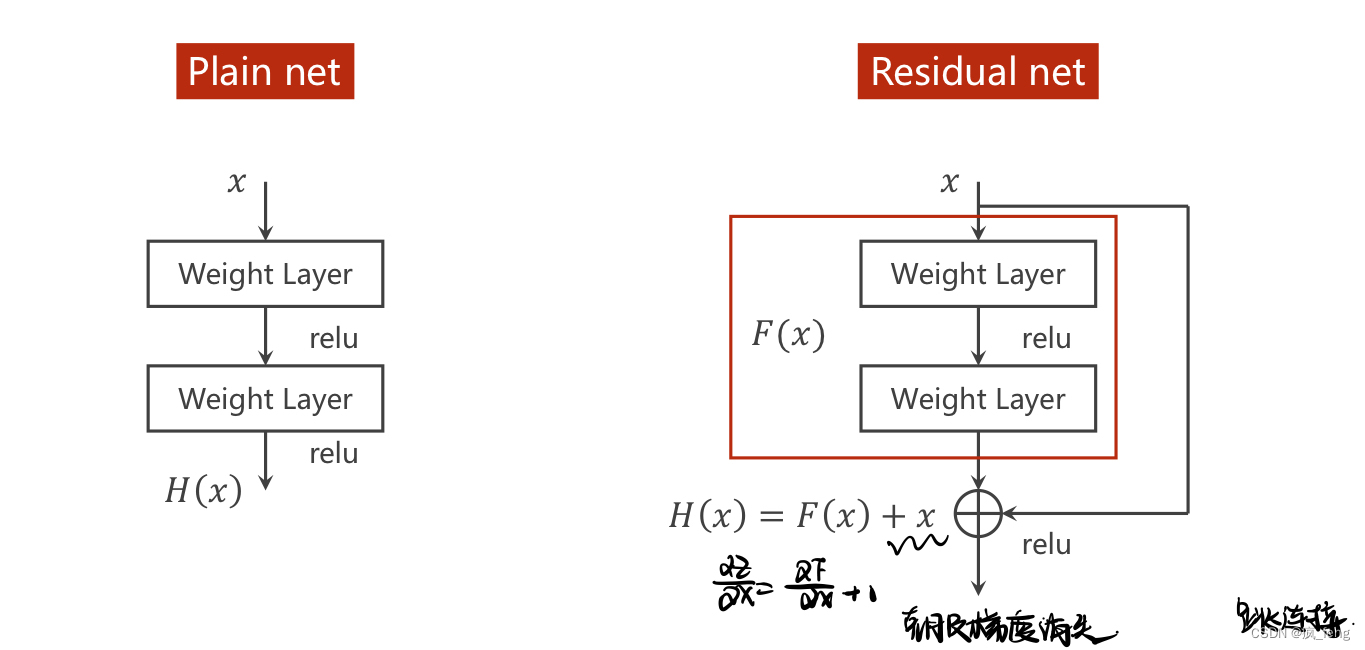
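The four details above can be checked with a short script. This is a minimal sketch on random dummy tensors; the shapes mirror the GoogLeNet code, while the `logits` values are made up for illustration. The batch-count check assumes the 60000-image MNIST training set and `batch_size=64`, as in the code.

```python
import math

import torch
import torch.nn.functional as F

# 1. avg_pool2d: kernel 3, stride 1, padding 1 keeps H and W unchanged
x = torch.randn(64, 10, 12, 12)
pooled = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
print(pooled.shape)  # torch.Size([64, 10, 12, 12])

# 2. view(batch, -1): flatten each sample; -1 is inferred as 88 * 4 * 4
feat = torch.randn(64, 88, 4, 4)
flat = feat.view(feat.size(0), -1)
print(flat.shape)  # torch.Size([64, 1408])

# 3. enumerate(..., 0): batch_idx starts at 0, so `batch_idx % 300 == 299`
# fires after batches 300, 600 and 900. With 60000 training images and
# batch_size=64 there are ceil(60000 / 64) = 938 batches per epoch.
n_batches = math.ceil(60000 / 64)
logged = [batch_idx + 1 for batch_idx, _ in enumerate(range(n_batches), 0)
          if batch_idx % 300 == 299]
print(n_batches, logged)  # 938 [300, 600, 900]

# 4. torch.max(..., dim=1) returns (values, indices); the index is the class
logits = torch.tensor([[0.1, 2.0, -1.0],
                       [3.0, 0.5, 0.2]])
values, predicted = torch.max(logits, dim=1)
print(predicted)  # tensor([1, 0])
```

Point 3 also explains why each epoch in the results prints exactly three loss lines (at 300, 600 and 900).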

Chapter 2 Deep Residual Learning

2.1 Implementation of Deep Residual Learning

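A residual block outputs $H(x) = f(x) + x$. Because this is an element-wise addition, $f(x)$ must have exactly the same dimensions as $x$ (same number of channels, same height and width).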
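The shape requirement of the skip connection can be demonstrated directly. This is a minimal sketch on a random dummy batch: a 3×3 convolution with `padding=1` and unchanged channels (as in `ResidualBlock`) can be added to its input, while one that changes the channel count cannot. The 1×1 "projection" convolution at the end is a hypothetical fix, the projection-shortcut idea from the original ResNet paper; it is not used in this article's model.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(8, 16, 12, 12)  # dummy batch: 8 samples, 16 channels, 12x12

# f(x) built like ResidualBlock: 3x3 conv, padding=1, same channel count
same = nn.Conv2d(16, 16, kernel_size=3, padding=1)
same_out = F.relu(same(x) + x)
print(same_out.shape)  # torch.Size([8, 16, 12, 12])

# f(x) that changes the channel count: f(x) + x can no longer be computed
wider = nn.Conv2d(16, 32, kernel_size=3, padding=1)
try:
    wider(x) + x
    mismatch = False
except RuntimeError:
    mismatch = True
print(mismatch)  # True

# Hypothetical fix (projection shortcut): map x to 32 channels with a 1x1 conv
projection = nn.Conv2d(16, 32, kernel_size=1)
h = F.relu(wider(x) + projection(x))
print(h.shape)  # torch.Size([8, 32, 12, 12])
```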

2.2 Code

```python
import torch
import torch.nn as nn
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

# prepare dataset
batch_size = 64
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])  # normalize with the MNIST mean and standard deviation

train_dataset = datasets.MNIST(root='./dataset', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root='./dataset', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)


# design model using class
class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # 3x3 convs with padding=1 keep channels, height and width unchanged
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)  # skip connection: add the input before the last ReLU
```

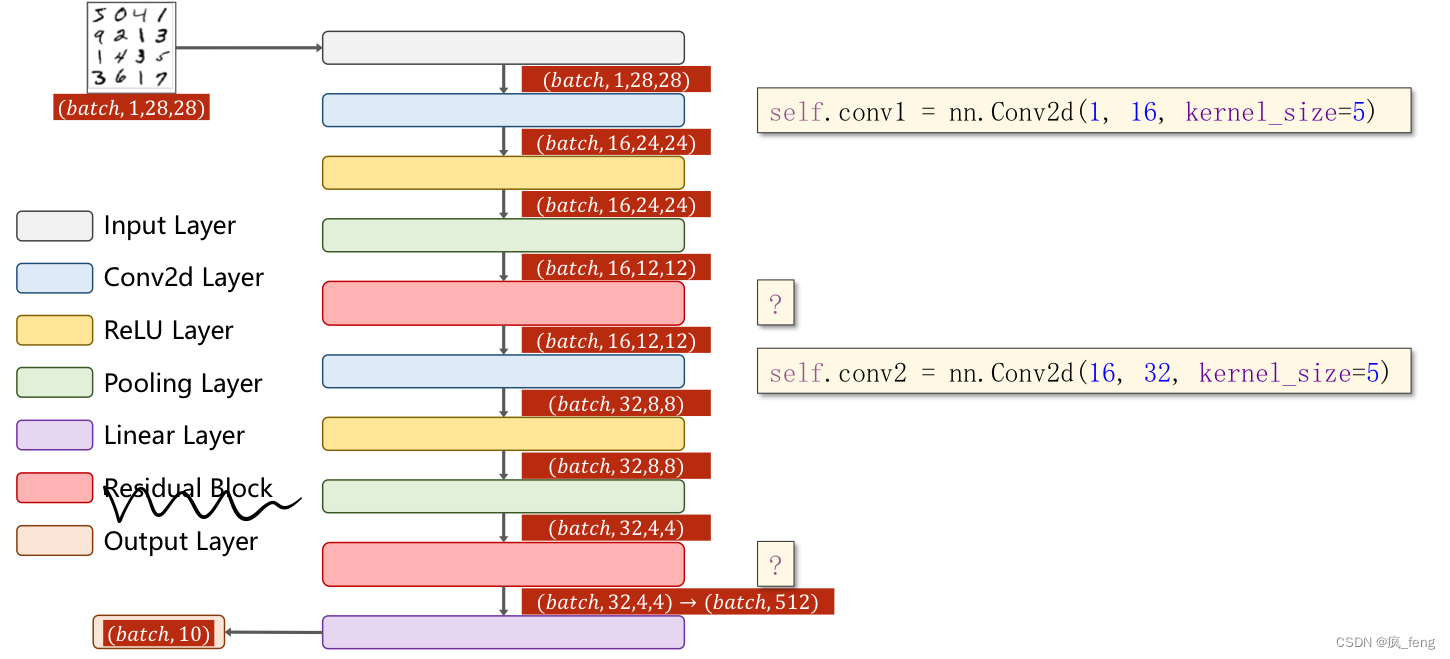

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(512, 10)  # 512 = 32 * 4 * 4

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.rblock1(x)
        x = self.mp(F.relu(self.conv2(x)))
        x = self.rblock2(x)
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x


model = Net()

# construct loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
```
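Instead of computing the `512` in `nn.Linear(512, 10)` by hand, the flattened size can be inferred with a dummy forward pass. This is a minimal sketch of that alternative, not the author's code: `features` and `n_flat` are hypothetical names, and the residual blocks are left out because they preserve the tensor shape and therefore do not change the flattened size.

```python
import torch
import torch.nn as nn

# Same conv/ReLU/pool stack as Net above, minus the residual blocks, which
# keep the shape and therefore do not change the flattened size.
features = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(16, 32, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2),
)

with torch.no_grad():
    dummy = torch.zeros(1, 1, 28, 28)  # one fake 28x28 MNIST image
    n_flat = features(dummy).view(1, -1).size(1)

print(n_flat)  # 512
fc = nn.Linear(n_flat, 10)  # equivalent to nn.Linear(512, 10) in Net
```

The trade-off is one extra forward pass at construction time in exchange for not having to update the constant when kernel sizes or channel counts change.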

2.3 Result

```text
[1, 300] loss: 0.544
[1, 600] loss: 0.141
[1, 900] loss: 0.103
accuracy on test set: 96 %
[2, 300] loss: 0.088
[2, 600] loss: 0.074
[2, 900] loss: 0.071
accuracy on test set: 97 %
[3, 300] loss: 0.063
[3, 600] loss: 0.061
[3, 900] loss: 0.052
accuracy on test set: 98 %
[4, 300] loss: 0.046
[4, 600] loss: 0.046
[4, 900] loss: 0.048
accuracy on test set: 98 %
[5, 300] loss: 0.039
[5, 600] loss: 0.040
[5, 900] loss: 0.041
accuracy on test set: 98 %
[6, 300] loss: 0.038
[6, 600] loss: 0.036
[6, 900] loss: 0.034
accuracy on test set: 98 %
[7, 300] loss: 0.027
[7, 600] loss: 0.032
[7, 900] loss: 0.034
accuracy on test set: 98 %
[8, 300] loss: 0.029
[8, 600] loss: 0.026
[8, 900] loss: 0.029
accuracy on test set: 99 %
[9, 300] loss: 0.025
[9, 600] loss: 0.024
[9, 900] loss: 0.026
accuracy on test set: 99 %
[10, 300] loss: 0.022
[10, 600] loss: 0.026
[10, 900] loss: 0.019
accuracy on test set: 99 %
```
