1.What does a neuron compute?

A.A neuron computes a function g that scales the input x linearly (Wx + b)

B.A neuron computes an activation function followed by a linear function (z = Wx + b)

C.A neuron computes a linear function (z = Wx + b) followed by an activation function

D.A neuron computes the mean of all features before applying the output to an activation function

Answer: C

We generally say that the output of a neuron is a = g(Wx + b), where g is the activation function (sigmoid, tanh, ReLU, …).

In short: the neuron applies an activation function to a linear combination of its inputs.
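The two steps, z = Wx + b followed by a = g(z), can be sketched with NumPy as follows. The function names `neuron` and `sigmoid` are illustrative choices, not from the quiz, and sigmoid is only one possible choice for g.

```python
import numpy as np

def sigmoid(z):
    # Logistic sigmoid, one common choice for the activation g
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, W, b, g=sigmoid):
    # Step 1: linear part z = Wx + b
    z = np.dot(W, x) + b
    # Step 2: activation a = g(z)
    return g(z)

# 3 input features, a single neuron (W has shape (1, 3))
x = np.array([[1.0], [2.0], [3.0]])
W = np.array([[0.0, 0.0, 0.0]])
b = 0.0
print(neuron(x, W, b))  # z = 0, so sigmoid(0) = 0.5
```

To use ReLU as g instead, pass `g=lambda z: np.maximum(0, z)`.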

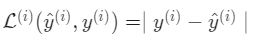

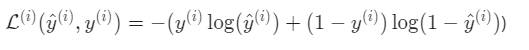

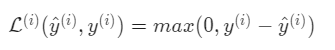

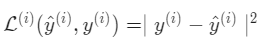

2.Which of these is the “Logistic Loss”?

A.

B.

C.

D.

Answer: B
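The formula options above were lost during extraction. For reference, the logistic loss for a single example is L(ŷ, y) = -(y log ŷ + (1 - y) log(1 - ŷ)). Here is a minimal NumPy sketch. The name `logistic_loss` is our own, and the sketch assumes 0 < ŷ < 1.

```python
import numpy as np

def logistic_loss(y_hat, y):
    # L(y_hat, y) = -(y * log(y_hat) + (1 - y) * log(1 - y_hat)); assumes 0 < y_hat < 1
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

# A confident correct prediction gives a small loss; a confident wrong one gives a large loss
print(logistic_loss(0.9, 1))  # equals -log(0.9)
print(logistic_loss(0.9, 0))  # equals -log(0.1)
```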

3.Suppose img is a (32,32,3) array, representing a 32x32 image with 3 color channels red, green and blue. How do you reshape this into a column vector?

A.x = img.reshape((32*32*3,1))

B.x = img.reshape((3,32*32))

C.x = img.reshape((1,32*32,*3))

D.x = img.reshape((32*32,3))

Answer: A
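Checking the shapes in NumPy shows why A is the answer. A random array stands in for a real image, which is an assumption made for illustration.

```python
import numpy as np

img = np.random.rand(32, 32, 3)  # stand-in for a 32x32 RGB image
x = img.reshape((32 * 32 * 3, 1))  # option A: a single column with 3072 rows
print(x.shape)  # (3072, 1)

# Option D keeps the 3 channels as separate columns, so it is not a column vector
print(img.reshape((32 * 32, 3)).shape)  # (1024, 3)
```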

4.Consider the two following random arrays a and b:

```python
import numpy as np

a = np.random.randn(2, 3)  # a.shape = (2, 3)
b = np.random.randn(2, 1)  # b.shape = (2, 1)
c = a + b
```

What will be the shape of c?

A.The computation cannot happen because the sizes don’t match. It’s going to be “Error”!

B.c.shape = (3, 2)

C.c.shape = (2, 1)

D.c.shape = (2, 3)

Answer: D

This is broadcasting: b (a column vector) is copied 3 times so that it can be added to each column of a.
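This sketch compares the broadcast sum with an explicit copy of b made using `np.tile`, which is one way to show what broadcasting does implicitly.

```python
import numpy as np

a = np.random.randn(2, 3)
b = np.random.randn(2, 1)
c = a + b
print(c.shape)  # (2, 3)

# Explicit equivalent: copy b into 3 columns, then add element-wise
b_tiled = np.tile(b, (1, 3))  # shape (2, 3)
print(np.allclose(c, a + b_tiled))  # True
```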

5.Consider the two following random arrays a and b:

```python
import numpy as np

a = np.random.randn(4, 3)  # a.shape = (4, 3)
b = np.random.randn(3, 2)  # b.shape = (3, 2)
c = a*b
```

What will be the shape of c?

A.c.shape = (3, 3)

B.c.shape = (4,2)

C.The computation cannot happen because the sizes don’t match. It’s going to be “Error”!

D.c.shape = (4, 3)

Answer: C

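Indeed! In NumPy the “*” operator performs element-wise multiplication, which is different from “np.dot()”. Element-wise multiplication requires broadcast-compatible shapes. Here the trailing dimensions (3 and 2) are neither equal nor 1, so NumPy raises an error. If you tried “c = np.dot(a,b)” instead, you would get c.shape = (4, 2).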

The key point is to distinguish * from dot:

- * multiplies corresponding elements (element-wise)
- dot() is matrix multiplication
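The difference between the two operations shows up as soon as you run them on the shapes from the question. NumPy raises a `ValueError` for the element-wise product, while the matrix product succeeds. The flag `broadcast_ok` exists only for illustration.

```python
import numpy as np

a = np.random.randn(4, 3)
b = np.random.randn(3, 2)

try:
    a * b  # element-wise: shapes (4, 3) and (3, 2) cannot be broadcast together
    broadcast_ok = True
except ValueError as err:
    broadcast_ok = False
    print("Error:", err)

c = np.dot(a, b)  # matrix product: (4, 3) x (3, 2) -> (4, 2)
print(c.shape)  # (4, 2)
```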

6.Suppose you have n_x input features per example. Recall that X = [x^(1) x^(2) … x^(m)].

What is the dimension of X?

A.(m,1)

B.(n_x, m)

C.(1,m)

D.(m,n_x)

Answer: B

Each x^(i) is an (n_x, 1) column vector, and X stacks all m of them side by side as columns.
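This sketch builds X from m column vectors with `np.hstack`. The sizes n_x = 4 and m = 5 are hypothetical values chosen only for illustration.

```python
import numpy as np

n_x, m = 4, 5  # hypothetical sizes: 4 features, 5 examples
# Each example x^(i) is a column vector of shape (n_x, 1)
examples = [np.random.randn(n_x, 1) for _ in range(m)]
# Place the m columns side by side to form X
X = np.hstack(examples)
print(X.shape)  # (4, 5), i.e. (n_x, m)
```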

7.Recall that np.dot(a,b) performs a matrix multiplication on a and b, whereas “a*b” performs an element-wise multiplication.

Consider the two following random arrays a and b:

```python
import numpy as np

a = np.random.randn(12288, 150)  # a.shape = (12288, 150)
b = np.random.randn(150, 45)  # b.shape = (150, 45)
c = np.dot(a, b)
```

What is the shape of c?

A.c.shape = (150,150)

B.c.shape = (12288, 150)

C.c.shape = (12288, 45)

D.The computation cannot happen because the sizes don’t match. It’s going to be “Error”!

Answer: C

Remember that np.dot(a, b) has shape (number of rows of a, number of columns of b). The sizes match because the number of columns of a (150) equals the number of rows of b.

This is ordinary matrix multiplication.
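The shape rule for matrix multiplication can be checked directly. This minimal sketch asserts that the inner dimensions agree before multiplying.

```python
import numpy as np

a = np.random.randn(12288, 150)
b = np.random.randn(150, 45)
# Inner dimensions must agree: a.shape[1] == b.shape[0] == 150
assert a.shape[1] == b.shape[0]
c = np.dot(a, b)
print(c.shape)  # (12288, 45): rows of a, columns of b
```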

8.Consider the following code snippet:

```python
# a.shape = (3, 4)
# b.shape = (4, 1)
for i in range(3):
    for j in range(4):
        c[i][j] = a[i][j] + b[j]
```

How do you vectorize this?

A.c = a.T + b

B.c = a + b.T

C.c = a + b

D.c = a.T + b.T

Answer: B
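This sketch checks that the loop and option B agree. The loop needs c to be allocated before it runs, so `np.zeros` is an assumption the question does not state. The loop also reads `b[j][0]` so that it assigns a scalar rather than a length-1 array.

```python
import numpy as np

a = np.random.randn(3, 4)
b = np.random.randn(4, 1)

# Loop version from the question; c must be allocated first
c_loop = np.zeros((3, 4))
for i in range(3):
    for j in range(4):
        c_loop[i][j] = a[i][j] + b[j][0]  # b[j][0] is the scalar in row j of b

# Vectorized version: b.T has shape (1, 4) and is broadcast across the 3 rows of a
c_vec = a + b.T
print(np.allclose(c_loop, c_vec))  # True
```

Option C (`a + b`) fails here: the shapes (3, 4) and (4, 1) are broadcast-compatible in the last dimension, but 3 and 4 clash in the first dimension.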

9.Consider the following code:

```python
import numpy as np

a = np.random.randn(3, 3)
b = np.random.randn(3, 1)
c = a*b
```

What will be c? (If you’re not sure, feel free to run this in python to find out).

A.It will lead to an error since you cannot use “*” to operate on these two matrices. You need to instead use np.dot(a,b)

B.This will multiply a 3x3 matrix a with a 3x1 vector, thus resulting in a 3x1 vector. That is, c.shape = (3,1).

C.This will invoke broadcasting, so b is copied three times to become (3,3), and * is an element-wise product so c.shape will be (3, 3)

D.This will invoke broadcasting, so b is copied three times to become (3, 3), and * invokes a matrix multiplication operation of two 3x3 matrices so c.shape will be (3, 3)

Answer: C
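Running the code confirms answer C. Because b is broadcast across the columns, each row i of a is scaled by the scalar b[i], and the result is not the matrix product.

```python
import numpy as np

a = np.random.randn(3, 3)
b = np.random.randn(3, 1)
c = a * b
print(c.shape)  # (3, 3)

# Broadcasting copies b across columns, so row i of a is scaled by b[i]
print(np.allclose(c[1], a[1] * b[1, 0]))  # True
# The matrix product np.dot(a, b) is different and has shape (3, 1)
print(np.dot(a, b).shape)  # (3, 1)
```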

10.Consider the following computation graph.

What is the output J?

A.J = (c - 1)*(b + a)

B.J = (b - 1) * (c + a)

C.J = (a - 1) * (b + c)

D.J = ab + bc + ac

Answer: C

With u = ab, v = ac and w = b + c from the graph:

J = u + v - w = ab + ac - (b + c) = a(b + c) - (b + c) = (a - 1)(b + c)
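The graph image is not available. Assuming it computes u = ab, v = ac, w = b + c and J = u + v - w, which are the substitutions used in the derivation, a quick numeric check against the factored form looks like this. The function name `forward` is our own.

```python
import random

def forward(a, b, c):
    # Intermediate nodes, as read off the derivation (the graph itself is assumed)
    u = a * b
    v = a * c
    w = b + c
    return u + v - w

# Compare against the factored form (a - 1)(b + c) at random points
for _ in range(5):
    a, b, c = (random.uniform(-10, 10) for _ in range(3))
    assert abs(forward(a, b, c) - (a - 1) * (b + c)) < 1e-9
print("J == (a - 1) * (b + c) at all sampled points")
```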
