@register_model()

@handle_legacy_interface(weights=("pretrained", ResNet50_Weights.IMAGENET1K_V1))

def resnet50(*, weights: Optional[ResNet50_Weights] = None, progress: bool = True, **kwargs: Any) -> ResNet:

"""ResNet-50 from `Deep Residual Learning for Image Recognition <https://arxiv.org/pdf/1512.03385.pdf>`__.

.. note::

The bottleneck of TorchVision places the stride for downsampling to the second 3x3

convolution while the original paper places it to the first 1x1 convolution.

This variant improves the accuracy and is known as `ResNet V1.5

<https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch>`_.

Args:

weights (:class:`~torchvision.models.ResNet50_Weights`, optional): The

pretrained weights to use. See

:class:`~torchvision.models.ResNet50_Weights` below for

more details, and possible values. By default, no pre-trained

weights are used.

progress (bool, optional): If True, displays a progress bar of the

download to stderr. Default is True.

**kwargs: parameters passed to the ``torchvision.models.resnet.ResNet``

base class. Please refer to the `source code

<https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py>`_

for more details about this class.

.. autoclass:: torchvision.models.ResNet50_Weights

:members:

"""

weights = ResNet50_Weights.verify(weights)

return _resnet(Bottleneck, [3, 4, 6, 3], weights, progress, **kwargs)

pytorch/vision

https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py

1. 使用@register_model()将模型注册到TorchVision的模型库中。

这会让模型可以通过torchvision.models.resnet18()访问。

2. 使用@handle_legacy_interface处理旧接口,这里指定如果使用

pretrained参数,将其映射到ResNet18_Weights.IMAGENET1K_V1权重。

这是为了兼容之前的接口。

3. weights参数指定预训练权重版本,其类型为ResNet18_Weights。

如果不指定,则不使用预训练权重。

def _resnet(

block: Type[Union[BasicBlock, Bottleneck]],

layers: List[int],

weights: Optional[WeightsEnum],

progress: bool,

**kwargs: Any,

) -> ResNet:

if weights is not None:

_ovewrite_named_param(kwargs, "num_classes", len(weights.meta["categories"]))

model = ResNet(block, layers, **kwargs)

if weights is not None:

model.load_state_dict(weights.get_state_dict(progress=progress, check_hash=True))

return model

这段代码的主要作用是:构建ResNet模型,并根据weights参数

加载预训练权重。

代码首先根据block和layers参数构建ResNet模型。block参数指定ResNet

块的类型,可以是BasicBlock或Bottleneck。layers参数指定每个阶段的

ResNet块数量。

然后,如果weights参数不为空,说明需要加载预训练权重。代码会做两件事:

1. 覆盖kwargs中的num_classes参数,设置为与权重文件中类别数目匹配。

这是因为预训练权重的类别数可能与目标任务不同,需要调整最后的

全连接层。

2. 调用model.load_state_dict()方法加载权重文件weights.

get_state_dict()所 parse 出的权重参数,更新模型的参数。

class ResNet50_Weights(WeightsEnum):

IMAGENET1K_V1 = Weights(

url="https://download.pytorch.org/models/resnet50-0676ba61.pth",

transforms=partial(ImageClassification, crop_size=224),

meta={

**_COMMON_META,

"num_params": 25557032,

"recipe": "https://github.com/pytorch/vision/tree/main/references/classification#resnet",

"_metrics": {

"ImageNet-1K": {

"acc@1": 76.130,

"acc@5": 92.862,

}

},

"_ops": 4.089,

"_file_size": 97.781,

"_docs": """These weights reproduce closely the results of the paper using a simple training recipe.""",

},

)

IMAGENET1K_V2 = Weights(

url="https://download.pytorch.org/models/resnet50-11ad3fa6.pth",

transforms=partial(ImageClassification, crop_size=224, resize_size=232),

meta={

**_COMMON_META,

"num_params": 25557032,

"recipe": "https://github.com/pytorch/vision/issues/3995#issuecomment-1013906621",

"_metrics": {

"ImageNet-1K": {

"acc@1": 80.858,

"acc@5": 95.434,

}

},

"_ops": 4.089,

"_file_size": 97.79,

"_docs": """

These weights improve upon the results of the original paper by using TorchVision's `new training recipe

<https://pytorch.org/blog/how-to-train-state-of-the-art-models-using-torchvision-latest-primitives/>`_.

""",

},

)

DEFAULT = IMAGENET1K_V2

class ResNet(nn.Module):

def __init__(

self,

block: Type[Union[BasicBlock, Bottleneck]],

layers: List[int],

num_classes: int = 1000,

zero_init_residual: bool = False,

groups: int = 1,

width_per_group: int = 64,

replace_stride_with_dilation: Optional[List[bool]] = None,

norm_layer: Optional[Callable[..., nn.Module]] = None,

) -> None:

super().__init__()

_log_api_usage_once(self)

if norm_layer is None:

norm_layer = nn.BatchNorm2d

self._norm_layer = norm_layer

self.inplanes = 64

self.dilation = 1

if replace_stride_with_dilation is None:

# each element in the tuple indicates if we should replace

# the 2x2 stride with a dilated convolution instead

replace_stride_with_dilation = [False, False, False]

if len(replace_stride_with_dilation) != 3:

raise ValueError(

"replace_stride_with_dilation should be None "

f"or a 3-element tuple, got {replace_stride_with_dilation}"

)

self.groups = groups

self.base_width = width_per_group

self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)

self.bn1 = norm_layer(self.inplanes)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, layers[0])

self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])

self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])

self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512 * block.expansion, num_classes)

for m in self.inplanes():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")

elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

# Zero-initialize the last BN in each residual branch,

# so that the residual branch starts with zeros, and each residual block behaves like an identity.

# This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677

if zero_init_residual:

for m in self.modules():

if isinstance(m, Bottleneck) and m.bn3.weight is not None:

nn.init.constant_(m.bn3.weight, 0) # type: ignore[arg-type]

elif isinstance(m, BasicBlock) and m.bn2.weight is not None:

nn.init.constant_(m.bn2.weight, 0) # type: ignore[arg-type]

def _make_layer(

self,

block: Type[Union[BasicBlock, Bottleneck]],

planes: int,

blocks: int,

stride: int = 1,

dilate: bool = False,

) -> nn.Sequential:

norm_layer = self._norm_layer

downsample = None

previous_dilation = self.dilation

if dilate:

self.dilation *= stride

stride = 1

if stride != 1 or self.inplanes != planes * block.expansion:

downsample = nn.Sequential(

conv1x1(self.inplanes, planes * block.expansion, stride),

norm_layer(planes * block.expansion),

)

layers = []

layers.append(

block(

self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer

)

)

self.inplanes = planes * block.expansion

for _ in range(1, blocks):

layers.append(

block(

self.inplanes,

planes,

groups=self.groups,

base_width=self.base_width,

dilation=self.dilation,

norm_layer=norm_layer,

)

)

return nn.Sequential(*layers)

def _forward_impl(self, x: Tensor) -> Tensor:

# See note [TorchScript super()]

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def forward(self, x: Tensor) -> Tensor:

return self._forward_impl(x)

class Bottleneck(nn.Module):

# Bottleneck in torchvision places the stride for downsampling at 3x3 convolution(self.conv2)

# while original implementation places the stride at the first 1x1 convolution(self.conv1)

# according to "Deep residual learning for image recognition" https://arxiv.org/abs/1512.03385.

# This variant is also known as ResNet V1.5 and improves accuracy according to

# https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch.

expansion: int = 4

def __init__(

self,

inplanes: int,

planes: int,

stride: int = 1,

downsample: Optional[nn.Module] = None,

groups: int = 1,

base_width: int = 64,

dilation: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

) -> None:

super().__init__()

if norm_layer is None:

norm_layer = nn.BatchNorm2d

width = int(planes * (base_width / 64.0)) * groups

# Both self.conv2 and self.downsample layers downsample the input when stride != 1

self.conv1 = conv1x1(inplanes, width)

self.bn1 = norm_layer(width)

self.conv2 = conv3x3(width, width, stride, groups, dilation)

self.bn2 = norm_layer(width)

self.conv3 = conv1x1(width, planes * self.expansion)

self.bn3 = norm_layer(planes * self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

self.stride = stride

def forward(self, x: Tensor) -> Tensor:

identity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

if self.downsample is not None:

identity = self.downsample(x)

out += identity

out = self.relu(out)

return out

class BasicBlock(nn.Module):

expansion: int = 1

def __init__(

self,

inplanes: int,

planes: int,

stride: int = 1,

downsample: Optional[nn.Module] = None,

groups: int = 1,

base_width: int = 64,

dilation: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

) -> None:

super().__init__()

if norm_layer is None:

norm_layer = nn.BatchNorm2d

if groups != 1 or base_width != 64:

raise ValueError("BasicBlock only supports groups=1 and base_width=64")

if dilation > 1:

raise NotImplementedError("Dilation > 1 not supported in BasicBlock")

# Both self.conv1 and self.downsample layers downsample the input when stride != 1

self.conv1 = conv3x3(inplanes, planes, stride)

self.bn1 = norm_layer(planes)

self.relu = nn.ReLU(inplace=True)

self.conv2 = conv3x3(planes, planes)

self.bn2 = norm_layer(planes)

self.downsample = downsample

self.stride = stride

def forward(self, x: Tensor) -> Tensor:

identity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

if self.downsample is not None:

identity = self.downsample(x)

out += identity

out = self.relu(out)

return out

__all__ = [

"ResNet",

"ResNet18_Weights",

"ResNet34_Weights",

"ResNet50_Weights",

"ResNet101_Weights",

"ResNet152_Weights",

"ResNeXt50_32X4D_Weights",

"ResNeXt101_32X8D_Weights",

"ResNeXt101_64X4D_Weights",

"Wide_ResNet50_2_Weights",

"Wide_ResNet101_2_Weights",

"resnet18",

"resnet34",

"resnet50",

"resnet101",

"resnet152",

"resnext50_32x4d",

"resnext101_32x8d",

"resnext101_64x4d",

"wide_resnet50_2",

"wide_resnet101_2",

]

def conv3x3(in_planes: int, out_planes: int, stride: int = 1, groups: int = 1, dilation: int = 1) -> nn.Conv2d:

"""3x3 convolution with padding"""

return nn.Conv2d(

in_planes,

out_planes,

kernel_size=3,

stride=stride,

padding=dilation,

groups=groups,

bias=False,

dilation=dilation,

)

def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:

"""1x1 convolution"""

return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)

from functools import partial

from typing import Any, Callable, List, Optional, Type, Union

import torch

import torch.nn as nn

from torch import Tensor

from ..transforms._presets import ImageClassification

from ..utils import _log_api_usage_once

from ._api import register_model, Weights, WeightsEnum

from ._meta import _IMAGENET_CATEGORIES

from ._utils import _ovewrite_named_param, handle_legacy_interface

1. from ..transforms._presets import ImageClassification

导入ImageClassification转换器,它包含图像分类常用的转换,如归一化等。

2. from ..utils import _log_api_usage_once

_log_api_usage_once用来记录API的使用情况,但每个API只记录一次。这是用于统计API使用的数据。

3. from ._api import register_model, Weights, WeightsEnum

从_api导入register_model用于注册模型,Weights和WeightsEnum用于指定模型权重。

4. from ._meta import _IMAGENET_CATEGORIES

导入ImageNet的类别名。_IMAGENET_CATEGORIES是一个包含所有ImageNet类别的列表。

5. from ._utils import _ovewrite_named_param, handle_legacy_interface

_ovewrite_named_param用来更新模型参数。handle_legacy_interface用来兼容之前的老接口。

import os

import time

import torch.nn as nn

import torch

import torchvision.transforms as transforms

import torchvision.transforms as transforms的作用是:

导入TorchVision的图像转换模块transforms。这个模块提供了诸如:

- 调整大小:Resize、Scale

- 剪裁:CenterCrop、RandomCrop、FiveCrop等

- 翻转与旋转:HorizontalFlip、VerticalFlip、RandomRotation

- 归一化:Normalize

- 转换张量:ToTensor

- 变换图像颜色等:ColorJitter、RandomGrayscale

等等众多图像转换工具。

这些转换的作用是:

- 对输入图像进行各种处理,使其符合模型的输入要求

- 数据增强:通过翻转、旋转、剪裁等随机化地扩充训练数据

- 图像归一化:使用训练集的均值与方差对输入图像进行归一化

- 等等

from PIL import Image

from PIL import Image的作用是:

导入Pillow库的Image模块。Pillow是一个强大的图像处理库,它提供了丰富的图像处理工具与方法。

导入Image模块后,我们可以使用它来完成诸如:

- 打开与读取图像:使用Image.open()

- 调整图像大小:使用image.resize()

- 剪裁图像:使用image.crop()

- 翻转与旋转图像:使用image.transpose()、image.rotate()

- 绘制几何形状:使用ImageDraw

- 添加水印:使用Image.open()和ImageDraw

- 图像过滤:使用image.filter()

- 图像合成:使用image.alpha_composite()

- 图像格式转换:使用image.convert()

- 等等

from matplotlib import pyplot as plt

import torchvision.models as models

import enviroments

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device = torch.device("cpu")

# config

vis = True

# vis = False

vis_row = 4

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

inference_transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

这段代码定义了用于推理的转换器(inference_transform)。它包含以下几个步骤:

1. transforms.Resize(256):将图像Resize到256x256

2. transforms.CenterCrop(224):从图像中心剪裁出224x224的区域

3. transforms.ToTensor():将图像转换为Tensor,取值范围为[0, 1]

4. transforms.Normalize(norm_mean, norm_std):图像归一化,将每个通道减去norm_mean,然后除以norm_stdscales

norm_mean和norm_std是ImageNet数据集的平均值和标准差,用于归一化ImageNet图像。

classes = ["ants", "bees"]

def img_transform(img_rgb, transform=None):

"""

将数据转换为模型读取的形式

:param img_rgb: PIL Image

:param transform: torchvision.transform

:return: tensor

"""

if transform is None:

raise ValueError("找不到transform!必须有transform对img进行处理")

img_t = transform(img_rgb)

return img_t

def get_img_name(img_dir, format="jpg"):

"""

获取文件夹下format格式的文件名

:param img_dir: str

:param format: str

:return: list

"""

file_names = os.listdir(img_dir)

# 使用 list(filter(lambda())) 筛选出 jpg 后缀的文件

img_names = list(filter(lambda x: x.endswith(format), file_names))

if len(img_names) < 1:

raise ValueError("{}下找不到{}格式数据".format(img_dir, format))

return img_names

def get_model(m_path, vis_model=False):

resnet18 = models.resnet18()

# 修改全连接层的输出

#获取最后一个全连接层的输入深度

num_ftrs = resnet18.fc.in_features

#修改全连接层的输出向量长度为5,因为花分类数据集就5类

resnet18.fc = nn.Linear(num_ftrs, 5)

因为直接训练ResNet比较困难,所以通常会采用迁移学习来进行网络的训练。

先下载在ImageNet上训练好的ResNet34的权重并载入模型,

但是预加载的模型是一个1000分类的模型,而我们实验所用的数据

是一个5分类的简单数据集,需要进行如下修改:

/*

params = [p for p in net.parameters() if p.requires_grad]

#初始化优化器

optimizer = optim.Adam(params, lr=0.0001)

1. params = [p for p in net.parameters() if p.requires_grad]

这行代码首先获取net网络中的所有参数,然后过滤掉

requires_grad=False的参数,最终得到一个可训练的参数列表params。

requires_grad=False表示这个参数不需要计算梯度,

所以不会被训练。这行代码的作用就是过滤这些参数,

只保留需要训练的可优化参数。

2. optimizer = optim.Adam(params, lr=0.0001)

这行代码实例化一个Adam优化器,用于训练params中的参数。

它将params中的参数作为要优化的参数,并指定学习率为0.0001。

所以,这两行代码的总体作用是:

1. 获取net网络中需要训练的、可优化的参数

2. 为这些参数定义一个Adam优化器,用于更新参数的值

*/

# 加载模型参数

checkpoint = torch.load(m_path)

resnet18.load_state_dict(checkpoint['model_state_dict'])

if vis_model:

from torchsummary import summary

summary(resnet18, input_size=(3, 224, 224), device="cpu")

return resnet18

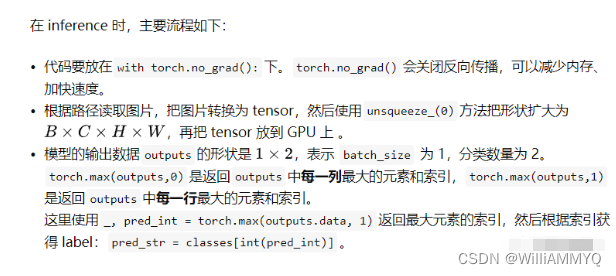

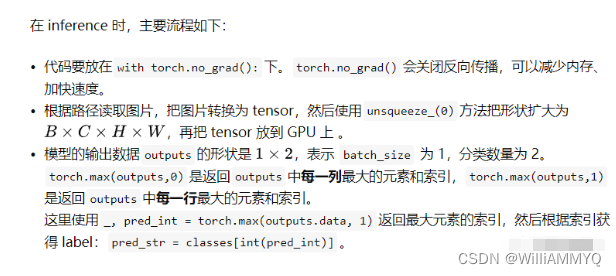

总结一下 inference 阶段需要注意的事项:

确保 model 处于 eval 状态,而非 trainning 状态

设置 torch.no_grad(),减少内存消耗,加快运算速度

数据预处理需要保持一致,比如 RGB 或者 rBGR

with torch.no_grad():

for idx, img_name in enumerate(img_names):

path_img = os.path.join(img_dir, img_name)

# step 1/4 : path --> img

img_rgb = Image.open(path_img).convert('RGB')

# step 2/4 : img --> tensor

img_tensor = img_transform(img_rgb, inference_transform)

img_tensor.unsqueeze_(0)

img_tensor = img_tensor.to(device)

# step 3/4 : tensor --> vector

outputs = resnet18(img_tensor)

# step 4/4 : get label

_, pred_int = torch.max(outputs.data, 1)

pred_str = classes[int(pred_int)]

码要放在with torch.no_grad():下。torch.no_grad()会关闭反向传播,

可以减少内存、加快速度。

if __name__ == "__main__":

img_dir = os.path.join(enviroments.hymenoptera_data_dir,"val/bees")

model_path = "./checkpoint_14_epoch.pkl"

time_total = 0

img_list, img_pred = list(), list()

# 1. data

img_names = get_img_name(img_dir)

num_img = len(img_names)

# 2. model

resnet18 = get_model(model_path, True)

resnet18.to(device)

resnet18.eval()

with torch.no_grad():

for idx, img_name in enumerate(img_names):

path_img = os.path.join(img_dir, img_name)

# step 1/4 : path --> img

img_rgb = Image.open(path_img).convert('RGB')

# step 2/4 : img --> tensor

img_tensor = img_transform(img_rgb, inference_transform)

img_tensor.unsqueeze_(0)

img_tensor = img_tensor.to(device)

# step 3/4 : tensor --> vector

time_tic = time.time()

outputs = resnet18(img_tensor)

time_toc = time.time()

# step 4/4 : visualization

_, pred_int = torch.max(outputs.data, 1)

pred_str = classes[int(pred_int)]

if vis:

img_list.append(img_rgb)

img_pred.append(pred_str)

if (idx+1) % (vis_row*vis_row) == 0 or num_img == idx+1:

for i in range(len(img_list)):

plt.subplot(vis_row, vis_row, i+1).imshow(img_list[i])

plt.title("predict:{}".format(img_pred[i]))

plt.show()

plt.close()

img_list, img_pred = list(), list()

time_s = time_toc-time_tic

time_total += time_s

print('{:d}/{:d}: {} {:.3f}s '.format(idx + 1, num_img, img_name, time_s))

print("\ndevice:{} total time:{:.1f}s mean:{:.3f}s".

format(device, time_total, time_total/num_img))

if torch.cuda.is_available():

print("GPU name:{}".format(torch.cuda.get_device_name()))

在PyTorch中,模型有两种模式:

- 训练模式:model.train()

- 评估模式:model.eval()

这两种模式的主要区别是:

- 在训练模式下,模型会启用Dropout和BatchNorm层,并计算梯度等。这是用于模型训练的模式。

- 在评估模式下,模型会禁用Dropout和BatchNorm层,并不计算梯度等。这主要用于模型评估与推理。

所以,调用resnet18.eval()的作用是:

将ResNet18模型设定为评估模式,主要用于模型的推理与测试。在这种模式下:

1. 会禁用模型中的Dropout和BatchNorm层。

2. 不会计算梯度等,一些训练需要的操作会被跳过。

3. 这可以提高模型的推理速度与效果。

import torch

import argparse

import torchvision

from module.models.build import get_model

from collections import OrderedDict

from collections import OrderedDict的作用是:

导入Python标准库collections中的OrderedDict。

OrderedDict是一个有序字典,它保留了字典元素被添加的顺序。普通字典是

无序的,无法保留元素添加的顺序。

所以,OrderedDict可以用于需要考虑元素添加顺序的场景。一些常见用法

如:

1. 记录数据的Insertion Order,以便后续的处理。

2. 作为Dictionray的替代,因为需要考虑元素添加的顺序。

3. 在序列化/反序列化过程中需要保留元素顺序。

4. 用于LRU Cache实现。

from torchvision import datasets, transforms

from torchvision import datasets, transforms的作用是:

导入TorchVision的datasets和transforms模块。这两个模块分别用于:

- datasets:提供加载常用数据集的工具,如MNIST、CIFAR10、ImageNet等。使用torchvision.datasets可以很方便地下载和加载这些数据集。

- transforms:提供常用的图像转换工具,如调整大小、剪裁、翻转、

归一化等。使用transforms可以灵活构建图像处理流程,将输入图像转

换为模型所需的形式。

def proc_node_module(checkpoint, attr_name):

new_state_dict = OrderedDict()

for k, v in checkpoint[attr_name].items():

if(k[0: 7] == "module."):

name = k[7:]

else:

name = k[0:]

new_state_dict[name] = v

return new_state_dict

def get_raw_data():

from PIL import Image

from urllib.request import urlretrieve

IMAGE_URL = 'https://bbs-img.huaweicloud.com/blogs/img/thumb/1591951315139_8989_1363.png'

urlretrieve(IMAGE_URL, 'tmp.jpg')

img = Image.open("tmp.jpg")

img = img.convert('RGB')

return img

def test():

loc = 'npu:0'

loc_cpu = 'cpu'

torch.npu.set_device(loc)

checkpoint = torch.load("./output_8p/checkpoint_apex_final.pth", map_location=loc)

checkpoint['state_dict'] = proc_node_module(checkpoint, 'state_dict')

model = get_model('resnest50')()

model = model.to(loc)

model.load_state_dict(checkpoint['state_dict'])

model.eval()

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

rd = get_raw_data()

data_transfrom = transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

normalize])

inputs = data_transfrom(rd)

inputs = inputs.unsqueeze(0)

inputs = inputs.to(loc)

output = model(inputs)

output = output.to(loc_cpu)

_, pred = output.topk(1, 1, True, True)

result = torch.argmax(output, 1)

print("class: ", pred[0][0].item())

print(result)

if __name__ == "__main__":

test()

606

606

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?