1. Overview

A brief summary of the inheritance relationships between the classes and the methods each class adds.

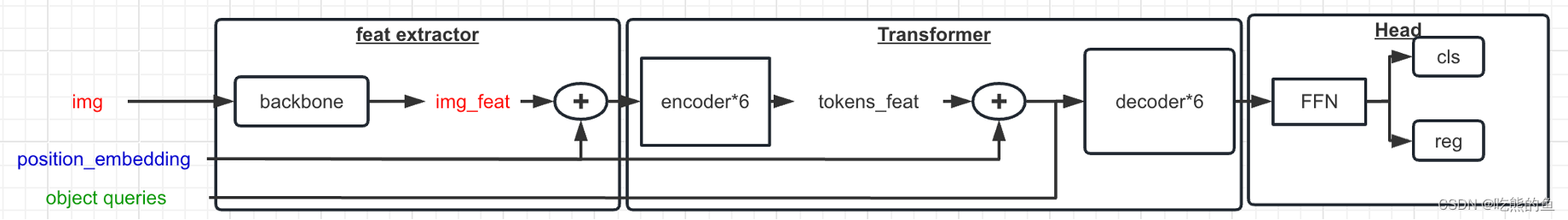

2. DetectionTransformer

2.1 Overall structure

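The detector splits cleanly into three parts:

(1) self.extract_feat calls the backbone and neck modules to extract features;

(2) self.forward_transformer lays out the calling logic of the Transformer module but leaves each step unimplemented; the Detector subclasses are mainly responsible for implementing them;

(3) self.bbox_head.predict/loss calls the head's inference and loss-computation modules, which the corresponding head class is mainly responsible for implementing.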
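The three parts can be sketched without any deep-learning dependency. The classes below (`ToyDetectionTransformer`, `EchoDetector`, `EchoHead`) are hypothetical stand-ins that pass strings instead of tensors; they only illustrate the call order and are not the MMDetection implementation.

```python
from typing import Callable, Dict


class ToyDetectionTransformer:
    """Hypothetical stand-in for the three-part flow (not the MMDetection class)."""

    def __init__(self, backbone: Callable, neck: Callable, bbox_head) -> None:
        self.backbone = backbone
        self.neck = neck
        self.bbox_head = bbox_head

    def extract_feat(self, batch_inputs):
        # Part (1): backbone followed by neck.
        return self.neck(self.backbone(batch_inputs))

    def forward_transformer(self, img_feats, batch_data_samples=None) -> Dict:
        # Part (2): left to subclasses, as in the base class described above.
        raise NotImplementedError

    def predict(self, batch_inputs, batch_data_samples=None):
        img_feats = self.extract_feat(batch_inputs)
        head_inputs = self.forward_transformer(img_feats, batch_data_samples)
        # Part (3), inference path.
        return self.bbox_head.predict(**head_inputs)

    def loss(self, batch_inputs, batch_data_samples=None):
        img_feats = self.extract_feat(batch_inputs)
        head_inputs = self.forward_transformer(img_feats, batch_data_samples)
        # Part (3), training path.
        return self.bbox_head.loss(**head_inputs)


class EchoDetector(ToyDetectionTransformer):
    def forward_transformer(self, img_feats, batch_data_samples=None) -> Dict:
        return dict(hidden_states=f"dec({img_feats})")


class EchoHead:
    def predict(self, hidden_states):
        return f"predict({hidden_states})"

    def loss(self, hidden_states):
        return f"loss({hidden_states})"


detector = EchoDetector(
    backbone=lambda x: f"backbone({x})",
    neck=lambda x: f"neck({x})",
    bbox_head=EchoHead(),
)
detections = detector.predict("img")
losses = detector.loss("img")
print(detections)
print(losses)
```

The printed strings nest in the order the stages run, which makes it easy to see that only the last call differs between training and inference.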

Module structure diagram

Module code diagram (best read alongside the code)

batch_inputs: images, shape=(B, C, H, W)

batch_data_samples: It usually includes information such as `gt_instance` or `gt_panoptic_seg` or `gt_sem_seg`.

2.2 Code diagram of self.forward_transformer

Each subclass of DetectionTransformer mainly implements the four functions called inside self.forward_transformer:

- pre_transformer

- forward_encoder

- pre_decoder

- forward_decoder

def forward_transformer(self,
                        img_feats: Tuple[Tensor],
                        batch_data_samples: OptSampleList = None) -> Dict:
    encoder_inputs_dict, decoder_inputs_dict = self.pre_transformer(
        img_feats, batch_data_samples)

    encoder_outputs_dict = self.forward_encoder(**encoder_inputs_dict)

    tmp_dec_in, head_inputs_dict = self.pre_decoder(**encoder_outputs_dict)
    decoder_inputs_dict.update(tmp_dec_in)

    decoder_outputs_dict = self.forward_decoder(**decoder_inputs_dict)
    head_inputs_dict.update(decoder_outputs_dict)
    return head_inputs_dict
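To see how the dictionaries are threaded through the four hooks, here is a minimal sketch in which every hook returns strings instead of tensors. `TracingDetector` and the values it passes are hypothetical; the keyword names are illustrative assumptions, and the only requirement is that each hook's output keys match the next hook's parameter names, because the dicts are unpacked with `**`.

```python
from typing import Dict, Tuple


class TracingDetector:
    """Hypothetical detector whose hooks pass strings instead of tensors."""

    def pre_transformer(self, img_feats, batch_data_samples=None) -> Tuple[Dict, Dict]:
        encoder_inputs = dict(feat=img_feats, feat_mask="mask", feat_pos="pos")
        # The mask and positional encoding are reused on the decoder side.
        decoder_inputs = dict(memory_mask="mask", memory_pos="pos")
        return encoder_inputs, decoder_inputs

    def forward_encoder(self, feat, feat_mask, feat_pos) -> Dict:
        return dict(memory=f"enc({feat})")

    def pre_decoder(self, memory) -> Tuple[Dict, Dict]:
        decoder_inputs = dict(query="zeros", query_pos="embed", memory=memory)
        return decoder_inputs, dict()

    def forward_decoder(self, query, query_pos, memory, memory_mask, memory_pos) -> Dict:
        return dict(hidden_states=f"dec({query}+{query_pos}, {memory})")

    def forward_transformer(self, img_feats, batch_data_samples=None) -> Dict:
        # Same plumbing as the method shown above.
        encoder_inputs_dict, decoder_inputs_dict = self.pre_transformer(
            img_feats, batch_data_samples)
        encoder_outputs_dict = self.forward_encoder(**encoder_inputs_dict)
        tmp_dec_in, head_inputs_dict = self.pre_decoder(**encoder_outputs_dict)
        decoder_inputs_dict.update(tmp_dec_in)
        decoder_outputs_dict = self.forward_decoder(**decoder_inputs_dict)
        head_inputs_dict.update(decoder_outputs_dict)
        return head_inputs_dict


head_inputs = TracingDetector().forward_transformer("feat")
print(head_inputs)
```

Note that `decoder_inputs_dict` is assembled in two steps: part of it comes from `pre_transformer` and the rest from `pre_decoder`, which is why the base method calls `update` before invoking the decoder.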

Walking through the concrete DETR implementation below makes the meaning of each of these internal variables clearer.

3. DETR (a rough look at the structure)

3.1 DETR Detector

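As noted above, a Detector subclass of DetectionTransformer implements the four functions called in self.forward_transformer. Its main job is to prepare the inputs for the encoder and decoder: features, positional encodings (PE), and masks.

- The core of the encoder is SelfAttention(x, PE, masks): q, k, and v all come from the same variable, and PE and masks are the position embedding and the input mask respectively.

- The core of the decoder is CrossAttention(q, kv, q_PE, kv_PE, kv_masks); the code refers to kv as memory.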
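The two signatures can be made concrete with a single-head, projection-free sketch in plain Python. It assumes the DETR convention that positional encodings are added to the queries and keys but not to the values, and that a mask entry of True marks a padded key. The helper names (`attention`, `self_attention`, `cross_attention`) are hypothetical; the real layers add linear projections, multiple heads, residual connections, and normalization.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def attention(q: Matrix, k: Matrix, v: Matrix,
              key_mask: Optional[List[bool]] = None) -> Matrix:
    """Scaled dot-product attention; key_mask[j] is True for padded keys.

    Assumes at least one key is not masked.
    """
    dim = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(dim) for kj in k]
        if key_mask is not None:
            scores = [-math.inf if m else s for s, m in zip(scores, key_mask)]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w / total * vj[d] for w, vj in zip(weights, v))
                    for d in range(len(v[0]))])
    return out


def self_attention(x: Matrix, pe: Matrix, masks=None) -> Matrix:
    qk = add(x, pe)                      # q and k share the same source
    return attention(qk, qk, x, masks)   # v carries no positional encoding


def cross_attention(q: Matrix, kv: Matrix, q_pe: Matrix, kv_pe: Matrix,
                    kv_masks=None) -> Matrix:
    return attention(add(q, q_pe), add(kv, kv_pe), kv, kv_masks)


x = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]      # third position is padding
pe = [[0.1, 0.0], [0.0, 0.1], [0.0, 0.0]]
masks = [False, False, True]

enhanced = self_attention(x, pe, masks)
decoded = cross_attention([[0.0, 0.0]], x, [[1.0, 0.0]], pe, masks)
print(enhanced)
print(decoded)
```

Because the padded key gets zero weight, every output row is a convex combination of the first two value rows only. The cross-attention call also shows why an all-zero query still works: the query position embedding alone decides which keys are attended to.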

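In DETR the four functions do the following:

- pre_transformer: provides x, PE, and masks to the encoder; the same PE and masks also serve as kv_PE and kv_masks in the decoder.

- forward_encoder: calls the encoder directly and outputs the enhanced features x'.

- pre_decoder: provides query and query_pos to the decoder. One detail: query_pos is a learnable Embedding, whereas query is an all-zero tensor that is not learnable.

- forward_decoder: calls the decoder with x' as k/v, together with kv_PE, kv_masks, query, and query_pos.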

def pre_decoder(self, memory: Tensor) -> Tuple[Dict, Dict]:
    batch_size = memory.size(0)  # (bs, num_feat_points, dim)
    query_pos = self.query_embedding.weight
    # (num_queries, dim) -> (bs, num_queries, dim)
    query_pos = query_pos.unsqueeze(0).repeat(batch_size, 1, 1)
    query = torch.zeros_like(query_pos)

    decoder_inputs_dict = dict(query_pos=query_pos, query=query, memory=memory)
    head_inputs_dict = dict()
    return decoder_inputs_dict, head_inputs_dict

3.2 DETRHead

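The head mainly implements three things:

- forward: obtains the results from the classification and regression heads;

- predict: called only at inference; runs the prediction and the corresponding post-processing;

- loss: called only during training; applies the label assignment strategy and computes the loss.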

forward

def _init_layers(self) -> None:
    """Initialize layers of the transformer head."""
    # cls branch
    self.fc_cls = Linear(self.embed_dims, self.cls_out_channels)
    # reg branch
    self.activate = nn.ReLU()
    self.reg_ffn = FFN(self.embed_dims, self.embed_dims, self.num_reg_fcs,
                       dict(type='ReLU', inplace=True), dropout=0.0, add_residual=False)
    # NOTE the activations of reg_branch here is the same as
    # those in transformer, but they are actually different
    # in DAB-DETR (prelu in transformer and relu in reg_branch)
    self.fc_reg = Linear(self.embed_dims, 4)

def forward(self, hidden_states: Tensor) -> Tuple[Tensor]:
    # hidden_states: (num_decoder_layers, bs, num_queries, dim)
    # if `return_intermediate_dec` in detr.py is True,
    # else (1, bs, num_queries, dim).
    # Note cls_out_channels should include background.
    # (num_decoder_layers, bs, num_queries, cls_out_channels)
    layers_cls_scores = self.fc_cls(hidden_states)
    # normalized coordinate format (cx, cy, w, h), has shape
    # (num_decoder_layers, bs, num_queries, 4)
    layers_bbox_preds = self.fc_reg(
        self.activate(self.reg_ffn(hidden_states))).sigmoid()
    return layers_cls_scores, layers_bbox_preds

predict

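predict calls forward to get the cls/reg predictions and then calls predict_by_feat for post-processing. predict_by_feat in turn calls _predict_by_feat_single to post-process each image, so the core code lives in _predict_by_feat_single, which does two things:

- Top-k score filtering: take the highest-scoring class for each result, then keep the top k (max_per_img) by score.

- Box format conversion and scaling: convert (cx, cy, w, h) to (x1, y1, x2, y2), multiply by the image width and height, and rescale back to the original image size.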
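One detail of the top-k step is easy to miss: when the classification loss uses sigmoid, the code in _predict_by_feat_single takes the top k over the flattened (query, class) score matrix rather than over one best class per query, and recovers the two indices with `%` and `//`. The sketch below reproduces that index arithmetic on plain lists; `flat_topk` is a hypothetical helper name and the scores are made up.

```python
from typing import List, Tuple


def flat_topk(scores: List[List[float]], k: int) -> List[Tuple[float, int, int]]:
    """Return (score, query_index, class_index) for the k best (query, class) pairs."""
    num_classes = len(scores[0])
    # Row-major flattening, the same order as Tensor.view(-1).
    flat = [s for row in scores for s in row]
    order = sorted(range(len(flat)), key=lambda i: flat[i], reverse=True)[:k]
    return [(flat[i], i // num_classes, i % num_classes) for i in order]


scores = [
    [0.10, 0.80, 0.30],  # query 0
    [0.70, 0.20, 0.90],  # query 1
]
top = flat_topk(scores, k=3)
print(top)
```

In this example query 1 appears twice among the top three, once for class 2 and once for class 0, so in the sigmoid variant a single query can contribute several detections.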

def _predict_by_feat_single(self,
                            cls_score: Tensor,
                            bbox_pred: Tensor,
                            img_meta: dict,
                            rescale: bool = True) -> InstanceData:
    """Transform outputs from the last decoder layer into bbox predictions
    for each image.

    Args:
        cls_score (Tensor): Box score logits from the last decoder layer
            for each image. Shape [num_queries, cls_out_channels].
        bbox_pred (Tensor): Sigmoid outputs from the last decoder layer
            for each image, with coordinate format (cx, cy, w, h) and
            shape [num_queries, 4].
        img_meta (dict): Image meta info.
        rescale (bool): If True, return boxes in original image
            space. Default True.

    Returns:
        :obj:`InstanceData`: Detection results of each image
        after the post process.
        Each item usually contains following keys.

            - scores (Tensor): Classification scores, has a shape
              (num_instances, )
            - labels (Tensor): Labels of bboxes, has a shape
              (num_instances, ).
            - bboxes (Tensor): Has a shape (num_instances, 4),
              the last dimension 4 arrange as (x1, y1, x2, y2).
    """
    assert len(cls_score) == len(bbox_pred)  # num_queries
    max_per_img = self.test_cfg.get('max_per_img', len(cls_score))
    img_shape = img_meta['img_shape']
    # exclude background
    if self.loss_cls.use_sigmoid:
        cls_score = cls_score.sigmoid()
        scores, indexes = cls_score.view(-1).topk(max_per_img)
        det_labels = indexes % self.num_classes
        bbox_index = indexes // self.num_classes
        bbox_pred = bbox_pred[bbox_index]
    else:
        scores, det_labels = F.softmax(cls_score, dim=-1)[..., :-1].max(-1)
        scores, bbox_index = scores.topk(max_per_img)
        bbox_pred = bbox_pred[bbox_index]
        det_labels = det_labels[bbox_index]

    det_bboxes = bbox_cxcywh_to_xyxy(bbox_pred)
    det_bboxes[:, 0::2] = det_bboxes[:, 0::2] * img_shape[1]
    det_bboxes[:, 1::2] = det_bboxes[:, 1::2] * img_shape[0]
    det_bboxes[:, 0::2].clamp_(min=0, max=img_shape[1])
    det_bboxes[:, 1::2].clamp_(min=0, max=img_shape[0])
    if rescale:
        assert img_meta.get('scale_factor') is not None
        det_bboxes /= det_bboxes.new_tensor(
            img_meta['scale_factor']).repeat((1, 2))

    results = InstanceData()
    results.bboxes = det_bboxes
    results.scores = scores
    results.labels = det_labels
    return results
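The softmax branch and the box conversion above can be traced by hand with a torch-free sketch. The function names and the toy logits are hypothetical, the last logit column is assumed to be the background class, and the final division by scale_factor is left out for brevity.

```python
import math
from typing import Dict, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cxcywh_to_xyxy(box: List[float]) -> List[float]:
    cx, cy, w, h = box
    return [cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2]


def postprocess(cls_logits: List[List[float]], boxes: List[List[float]],
                img_shape: Tuple[int, int], max_per_img: int) -> List[Dict]:
    """cls_logits: one row per query, last column is background.
    boxes: normalized (cx, cy, w, h) per query. img_shape: (height, width)."""
    height, width = img_shape
    candidates = []
    for logits, box in zip(cls_logits, boxes):
        probs = softmax(logits)[:-1]  # drop the background column
        score = max(probs)
        candidates.append((score, probs.index(score), box))
    # Keep the max_per_img highest-scoring queries.
    candidates.sort(key=lambda c: c[0], reverse=True)
    results = []
    for score, label, box in candidates[:max_per_img]:
        x1, y1, x2, y2 = cxcywh_to_xyxy(box)
        # x coordinates scale with the width, y coordinates with the height,
        # and everything is clamped to the image bounds.
        bbox = [min(max(x1 * width, 0.0), width),
                min(max(y1 * height, 0.0), height),
                min(max(x2 * width, 0.0), width),
                min(max(y2 * height, 0.0), height)]
        results.append(dict(score=score, label=label, bbox=bbox))
    return results


cls_logits = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 4.0]]
boxes = [[0.5, 0.5, 0.2, 0.4], [0.25, 0.5, 0.5, 1.0], [0.9, 0.9, 0.4, 0.4]]
dets = postprocess(cls_logits, boxes, img_shape=(100, 200), max_per_img=2)
for det in dets:
    print(det)
```

The third query puts most of its probability on the background column, so its best foreground score is tiny and it falls out of the top two. This is how the softmax variant suppresses "no object" queries without a separate threshold.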

loss

4. DeformableDETR

multi-scale feat (scale embedding)

two stage prediction
