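EncNet, short for Context Encoding Network, was published at CVPR 2018.

Original paper: EncNet.

The main idea of EncNet builds on another paper by the same author, Deep TEN: Texture Encoding Network. For an introduction to that paper, see Texture recognition -- (Deep TEN) Deep TEN: Texture Encoding Network.

For an introduction to EncNet itself, see Semantic segmentation -- (EncNet) Context Encoding for Semantic Segmentation.

This post focuses on reproducing EncNet.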

The idea behind EncNet

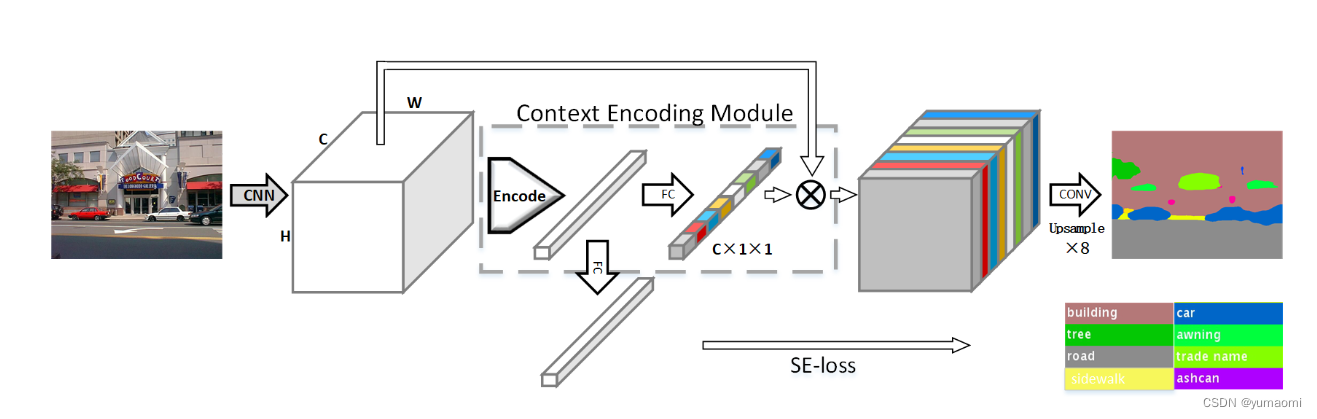

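1. The paper introduces a Context Encoding Module to capture global context, in particular the category information associated with the scene. Drawing on CAnet, it implements a channel attention mechanism. The module predicts a set of scaling factors for the feature maps. These factors act as a feedback loop that emphasizes the class-dependent feature maps.

2. The paper introduces the SE loss (Semantic Encoding loss) to make the model attend to the categories present in a scene. This amounts to giving the model prior knowledge. For example, if the model knows the image shows a bedroom, it should expect beds, pillows and tables, but not airplanes or cars. The loss forces the model to learn which categories a given scene contains.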
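The feedback loop can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: the function name `featuremap_attention`, the single fully connected layer and the shapes are illustrative. They are chosen to mirror the `x + x * gamma` update that `EncModule` uses in the code further down. The encoded context vector is treated here as a given input rather than computed.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def featuremap_attention(x, encoded, weight, bias):
    """Rescale each channel of x by a factor predicted from the encoded context.

    x:       feature map, shape (C, H, W)
    encoded: global context vector, shape (C,)  (assumed given)
    weight, bias: fully connected layer parameters, shapes (C, C) and (C,)
    """
    gamma = sigmoid(weight @ encoded + bias)  # (C,), one factor in (0, 1) per channel
    scaled = x * gamma[:, None, None]         # broadcast the factors over H and W
    return np.maximum(x + scaled, 0.0)        # residual form: relu(x + x * gamma)
```

With all-zero parameters every factor is 0.5, so a positive feature map is scaled by 1.5. A large positive (negative) bias drives a channel's factor towards 1 (0), which doubles (keeps) that channel.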

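The EncNet model

My understanding is that EncNet uses two tricks, channel attention and the SE loss, to improve the model's grasp of contextual semantics.

1. It computes a scaling factor for each channel to highlight the categories and their related feature maps. This is a mechanism similar to channel attention.

2. The SE loss forces the model to learn which categories may appear in each scene, giving it prior knowledge. The paper also points out that the SE loss treats objects of different sizes equally. Unlike a pixel-wise loss, it is computed per category present in the image. Large and small objects therefore contribute equally to the loss, which helps the segmentation of small objects.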
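The size independence can be made concrete with a hypothetical NumPy sketch of the SE-loss target. The target only records whether each class occurs, so a one-pixel object and a large region of the same class yield the same target. The sketch makes these assumptions:

- Label maps contain integer class indices.
- 255 is the ignore value, as in the training code below.
- The per-class predictions are scored with binary cross-entropy on logits, which is the form the paper describes.

`se_targets` and `bce_with_logits` are names invented for this sketch.

```python
import numpy as np


def se_targets(label_map, num_classes, ignore_index=255):
    """Return a (num_classes,) 0/1 vector marking which classes occur in label_map."""
    labels = label_map[label_map != ignore_index]  # drop ignored pixels
    target = np.zeros(num_classes, dtype=np.float64)
    target[np.unique(labels)] = 1.0                # presence only, pixel counts are discarded
    return target


def bce_with_logits(logits, target):
    """Mean binary cross-entropy from raw scores, in the numerically stable form."""
    return np.mean(np.maximum(logits, 0) - logits * target
                   + np.log1p(np.exp(-np.abs(logits))))
```

For example, `se_targets(np.array([[0, 0], [0, 3]]), 5)` marks classes 0 and 3. A 4x4 map where class 3 covers 15 pixels produces exactly the same vector.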

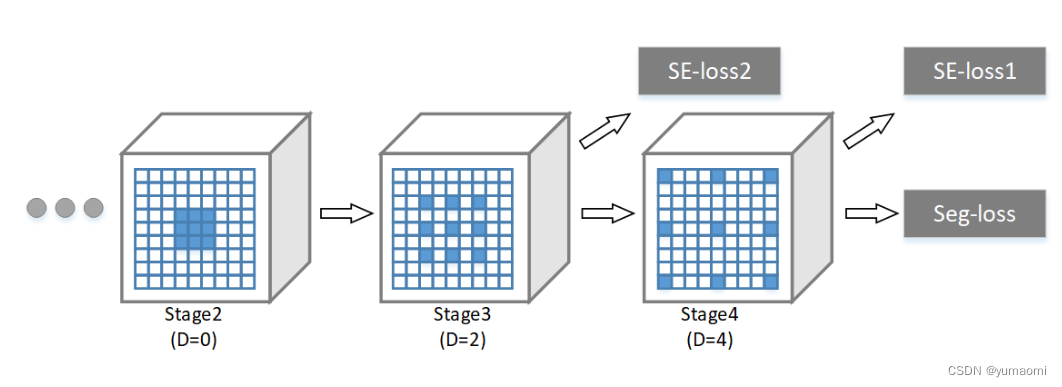

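The paper also modifies the backbone: the last two stages use dilated convolutions with dilation rates 2 and 4. An SE loss can be attached to the outputs of both stage 3 and stage 4. The reproduction below only uses the SE loss on stage 4.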
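The effect of trading strides for dilation can be checked with a little bookkeeping. The pure-Python sketch below uses a hypothetical helper, `stage_geometry`. It follows the same rule as a torchvision-style `_make_layer`: a stage flagged for dilation keeps stride 1 and multiplies the running dilation by the stride it gives up. With `[False, True, True]`, the last two stages get dilation 2 and 4, and the network keeps an output stride of 8.

```python
def stage_geometry(replace_stride_with_dilation, stage_strides=(1, 2, 2, 2)):
    """Return (output_stride, dilation) after each of the four ResNet stages.

    A stage flagged for dilation keeps its resolution (stride 1) and multiplies
    the running dilation by the stride it gave up; otherwise the output stride grows.
    """
    output_stride = 4  # conv1 (stride 2) followed by max pooling (stride 2)
    dilation = 1
    flags = [False] + list(replace_stride_with_dilation)  # layer1 is never dilated
    result = []
    for stride, dilate in zip(stage_strides, flags):
        if dilate:
            dilation *= stride
        else:
            output_stride *= stride
        result.append((output_stride, dilation))
    return result
```

In this torchvision-style layout, the first block of a dilated stage still uses the previous rate. The new rate applies from the stage's second block on.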

Reproducing the model

Backbone: ResNet-50

```python
import torch
import torch.nn as nn


class BasicBlock(nn.Module):
    # BasicBlock keeps the channel count, so its expansion factor is 1
    expansion: int = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(BasicBlock, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError("BasicBlock only supports groups=1 and base_width=64")
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=3, stride=stride,
                               padding=dilation, groups=groups, bias=False, dilation=dilation)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        # The second 3x3 conv always uses stride 1; only conv1 downsamples
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=1,
                               padding=dilation, groups=groups, bias=False, dilation=dilation)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        if self.downsample is not None:
            identity = self.downsample(x)
        out += identity
        out = self.relu(out)
        return out


class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None,
                 groups=1, base_width=64, dilation=1, norm_layer=None):
        super(Bottleneck, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.0)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = nn.Conv2d(inplanes, width, kernel_size=1, stride=1, bias=False)
        self.bn1 = norm_layer(width)
        self.conv2 = nn.Conv2d(width, width, kernel_size=3, stride=stride, bias=False,
                               padding=dilation, dilation=dilation, groups=groups)
        self.bn2 = norm_layer(width)
        self.conv3 = nn.Conv2d(width, planes * self.expansion, kernel_size=1, stride=1, bias=False)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out)
        if self.downsample is not None:
            identity = self.downsample(x)
        out += identity
        out = self.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=1000, zero_init_residual=False, groups=1,
                 width_per_group=64, replace_stride_with_dilation=None, norm_layer=None):
        super(ResNet, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer
        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError(
                "replace_stride_with_dilation should be None "
                f"or a 3-element tuple, got {replace_stride_with_dilation}"
            )
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        # Standard strides. With replace_stride_with_dilation=[False, True, True],
        # layer3/layer4 trade their stride for dilation 2 and 4 (output stride 8)
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
        # avgpool/fc are not used in forward(); kept so ImageNet checkpoints load cleanly
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Zero-initialize the last BN in each residual branch,
        # so that the residual branch starts with zeros, and each residual block behaves like an identity.
        # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

    def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            # Replace the stride with dilation: keep the resolution, enlarge the receptive field
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),
                norm_layer(planes * block.expansion))
        layers = []
        # The first block of a stage uses the previous dilation rate
        layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                            self.base_width, previous_dilation, norm_layer))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes, groups=self.groups,
                                base_width=self.base_width, dilation=self.dilation,
                                norm_layer=norm_layer))
        return nn.Sequential(*layers)

    def _forward_impl(self, x):
        # Return the outputs of all four stages (consumed by EncHead)
        outs = []
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        outs.append(x)
        x = self.layer2(x)
        outs.append(x)
        x = self.layer3(x)
        outs.append(x)
        x = self.layer4(x)
        outs.append(x)
        return outs

    def forward(self, x):
        return self._forward_impl(x)


def _resnet(block, layers, pretrained_path=None, **kwargs):
    model = ResNet(block, layers, **kwargs)
    if pretrained_path is not None:
        # strict=False ignores missing/unexpected keys (but not shape mismatches)
        model.load_state_dict(torch.load(pretrained_path, map_location="cpu"), strict=False)
    return model


def resnet50(pretrained_path=None, **kwargs):
    return _resnet(Bottleneck, [3, 4, 6, 3], pretrained_path, **kwargs)


def resnet101(pretrained_path=None, **kwargs):
    return _resnet(Bottleneck, [3, 4, 23, 3], pretrained_path, **kwargs)
```

EncNet

Encoding layer

```python
import torch
from torch import nn
from torch.nn import functional as F


class Encoding(nn.Module):
    """Encoding layer: soft-assigns every spatial descriptor to learnable codewords
    and aggregates the residuals into a [batch_size, num_codes, channels] tensor."""

    def __init__(self, channels, num_codes):
        super(Encoding, self).__init__()
        # init codewords and smoothing factor
        self.channels, self.num_codes = channels, num_codes
        std = 1. / ((num_codes * channels) ** 0.5)
        # [num_codes, channels]
        self.codewords = nn.Parameter(
            torch.empty(num_codes, channels, dtype=torch.float).uniform_(-std, std))
        # [num_codes]; initialised negative so that closer codewords get larger weights
        self.scale = nn.Parameter(
            torch.empty(num_codes, dtype=torch.float).uniform_(-1, 0))

    def scaled_l2(self, x, codewords, scale):
        # x: [batch_size, height*width, channels] -> [batch_size, height*width, num_codes]
        num_codes, channels = codewords.size()
        batch_size = x.size(0)
        reshaped_scale = scale.view((1, 1, num_codes))
        expanded_x = x.unsqueeze(2).expand((batch_size, x.size(1), num_codes, channels))
        reshaped_codewords = codewords.view((1, 1, num_codes, channels))
        scaled_l2_norm = reshaped_scale * (expanded_x - reshaped_codewords).pow(2).sum(dim=3)
        return scaled_l2_norm

    def aggregate(self, assignment_weights, x, codewords):
        # Weighted sum of residuals over all positions -> [batch_size, num_codes, channels]
        num_codes, channels = codewords.size()
        reshaped_codewords = codewords.view((1, 1, num_codes, channels))
        batch_size = x.size(0)
        expanded_x = x.unsqueeze(2).expand((batch_size, x.size(1), num_codes, channels))
        encoded_feat = (assignment_weights.unsqueeze(3) *
                        (expanded_x - reshaped_codewords)).sum(dim=1)
        return encoded_feat

    def forward(self, x):
        assert x.dim() == 4 and x.size(1) == self.channels
        # [batch_size, channels, height, width]
        batch_size = x.size(0)
        # [batch_size, height*width, channels]
        x = x.view(batch_size, self.channels, -1).transpose(1, 2).contiguous()
        # assignment_weights: [batch_size, height*width, num_codes], softmax over codewords
        assignment_weights = F.softmax(self.scaled_l2(x, self.codewords, self.scale), dim=2)
        # aggregate: [batch_size, num_codes, channels]
        encoded_feat = self.aggregate(assignment_weights, x, self.codewords)
        return encoded_feat

    def __repr__(self):
        repr_str = self.__class__.__name__
        repr_str += f'(Nx{self.channels}xHxW =>Nx{self.num_codes}x{self.channels})'
        return repr_str
```
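The Encoding layer works in three steps:

1. It computes residuals $r_{ik} = x_i - c_k$ between every spatial descriptor and every codeword.
2. It soft-assigns each descriptor with a softmax over $s_k \lVert r_{ik}\rVert^2$.
3. It sums the weighted residuals per codeword.

The Deep TEN/EncNet papers write the exponent as $-s_k \lVert r_{ik}\rVert^2$ with positive smoothing factors. The code above instead multiplies by `scale` directly and initialises it in $(-1, 0)$, which folds the sign into the parameter.

Below is a single-image NumPy reference with hypothetical function names:

- a loop version that follows the formula term by term;
- a broadcast version laid out like `scaled_l2` and `aggregate`.

The two should agree.

```python
import numpy as np


def encoding_reference(x, codewords, scale):
    """Loop-based Encoding forward pass for one image.

    x: descriptors (N, C) with N = H*W; codewords: (K, C); scale: (K,). Returns (K, C).
    """
    out = np.zeros_like(codewords, dtype=np.float64)
    for i in range(x.shape[0]):
        residuals = x[i] - codewords                     # (K, C): r_ik = x_i - c_k
        logits = scale * np.sum(residuals ** 2, axis=1)  # (K,): s_k * ||r_ik||^2
        weights = np.exp(logits - logits.max())          # stable softmax over codewords
        weights /= weights.sum()                         # a_ik, sums to 1 over k
        out += weights[:, None] * residuals              # e_k += a_ik * r_ik
    return out


def encoding_vectorized(x, codewords, scale):
    """Same computation with broadcasting, mirroring scaled_l2 + aggregate."""
    residuals = x[:, None, :] - codewords[None, :, :]          # (N, K, C)
    logits = scale[None, :] * np.sum(residuals ** 2, axis=2)   # (N, K)
    weights = np.exp(logits - logits.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)              # softmax over K
    return np.sum(weights[:, :, None] * residuals, axis=0)     # (K, C)
```

With a single codeword, every assignment weight is 1, and the output reduces to $\sum_i (x_i - c_1)$.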

EncNet head

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class EncModule(nn.Module):
    """Context Encoding Module: encodes global context and rescales the channels of x."""

    def __init__(self, in_channels, num_codes):
        super(EncModule, self).__init__()
        self.encoding_project = nn.Conv2d(in_channels, in_channels, 1)
        # Encoding outputs [N, num_codes, channels], so BatchNorm1d normalises over num_codes
        self.encoding = nn.Sequential(
            Encoding(channels=in_channels, num_codes=num_codes),
            nn.BatchNorm1d(num_codes),
            nn.ReLU(inplace=True))
        self.fc = nn.Sequential(nn.Linear(in_channels, in_channels), nn.Sigmoid())

    def forward(self, x):
        """Forward function."""
        encoding_projection = self.encoding_project(x)
        # Average over the codewords: [batch_size, num_codes, channels] -> [batch_size, channels]
        encoding_feat = self.encoding(encoding_projection).mean(dim=1)
        batch_size, channels, _, _ = x.size()
        # Per-channel scaling factors in (0, 1)
        gamma = self.fc(encoding_feat)
        y = gamma.view(batch_size, channels, 1, 1)
        output = F.relu_(x + x * y)
        return encoding_feat, output


class EncHead(nn.Module):
    def __init__(self, num_classes=33, num_codes=32, use_se_loss=True,
                 add_lateral=False, **kwargs):
        super(EncHead, self).__init__()
        self.use_se_loss = use_se_loss
        self.add_lateral = add_lateral
        self.num_codes = num_codes
        # Output channels of ResNet-50 layer1..layer4
        self.in_channels = [256, 512, 1024, 2048]
        self.channels = 512
        self.num_classes = num_classes
        self.bottleneck = nn.Conv2d(self.in_channels[-1], self.channels, 3, padding=1)
        if add_lateral:
            self.lateral_convs = nn.ModuleList()
            for in_channels in self.in_channels[:-1]:  # skip the last one
                self.lateral_convs.append(nn.Conv2d(in_channels, self.channels, 1))
            self.fusion = nn.Conv2d(len(self.in_channels) * self.channels,
                                    self.channels, 3, padding=1)
        self.enc_module = EncModule(self.channels, num_codes=num_codes)
        self.cls_seg = nn.Sequential(
            nn.Conv2d(self.channels, 256, 3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, self.num_classes, 3, padding=1))
        if self.use_se_loss:
            # SE branch: predicts which classes are present in the image
            self.se_layer = nn.Linear(self.channels, self.num_classes)

    def forward(self, inputs):
        """Forward function."""
        feat = self.bottleneck(inputs[-1])
        if self.add_lateral:
            laterals = [
                F.interpolate(lateral_conv(inputs[i]), size=feat.shape[2:],
                              mode='bilinear', align_corners=False)
                for i, lateral_conv in enumerate(self.lateral_convs)
            ]
            feat = self.fusion(torch.cat([feat, *laterals], 1))
        encode_feat, output = self.enc_module(feat)
        # The dilated backbone has output stride 8, so upsample by 8 to the input size
        output = F.interpolate(output, scale_factor=8, mode="bilinear", align_corners=False)
        output = self.cls_seg(output)
        if self.use_se_loss:
            se_output = self.se_layer(encode_feat)
            return output, se_output
        return output


class ENCNet(nn.Module):
    def __init__(self, num_classes):
        super(ENCNet, self).__init__()
        self.num_classes = num_classes
        # Assumes the backbone code above is in the same file/notebook.
        # Dilate layer3 and layer4 (rates 2 and 4) so the backbone keeps output stride 8
        self.backbone = resnet50(replace_stride_with_dilation=[False, True, True])
        self.decoder = EncHead(num_classes=num_classes)

    def forward(self, x):
        x = self.backbone(x)
        x = self.decoder(x)
        return x
```

Dataset: CamVid

For how to download and use the dataset, see: Semantic segmentation dataset: creating and using the CamVid dataset (PyTorch).

```python
# Import libraries
import os
os.environ['CUDA_VISIBLE_DEVICES'] = '0'  # must be set before torch initialises CUDA
import os.path as osp
import warnings

import albumentations as A
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from albumentations.pytorch.transforms import ToTensorV2
from PIL import Image
from torch.utils.data import Dataset, DataLoader, random_split
from tqdm import tqdm

warnings.filterwarnings("ignore")

# Colour map for visualisation (not used yet)
Cam_COLORMAP = [[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                [0, 64, 128], [0, 32, 128], [0, 16, 128], [0, 64, 64], [0, 64, 32],
                [0, 64, 16], [64, 64, 128], [0, 32, 16], [32, 32, 32], [16, 16, 16], [32, 16, 128],
                [192, 16, 16], [32, 32, 196], [192, 32, 128], [25, 15, 125], [32, 124, 23], [111, 222, 113],
                ]

# 32 classes
Cam_CLASSES = ['Animal', 'Archway', 'Bicyclist', 'Bridge', 'Building', 'Car', 'CartLuggagePram', 'Child',
               'Column_Pole', 'Fence', 'LaneMkgsDriv', 'LaneMkgsNonDriv', 'Misc_Text', 'MotorcycleScooter',
               'OtherMoving', 'ParkingBlock', 'Pedestrian', 'Road', 'RoadShoulder', 'Sidewalk', 'SignSymbol',
               'Sky', 'SUVPickupTruck', 'TrafficCone', 'TrafficLight', 'Train', 'Tree', 'Truck_Bus', 'Tunnel',
               'VegetationMisc', 'Void', 'Wall']

torch.manual_seed(17)


# Custom dataset CamVidDataset
class CamVidDataset(torch.utils.data.Dataset):
    """CamVid Dataset. Reads images and masks, resizes them to 224x224, applies random
    flips, normalises the image and converts both to tensors.

    Args:
        images_dir (str): path to images folder
        masks_dir (str): path to segmentation masks folder (file names must match the images)
    """

    def __init__(self, images_dir, masks_dir):
        self.transform = A.Compose([
            A.Resize(224, 224),
            A.HorizontalFlip(),
            A.VerticalFlip(),
            A.Normalize(),
            ToTensorV2(),
        ])
        self.ids = os.listdir(images_dir)
        self.images_fps = [os.path.join(images_dir, image_id) for image_id in self.ids]
        self.masks_fps = [os.path.join(masks_dir, image_id) for image_id in self.ids]

    def __getitem__(self, i):
        # read data
        image = np.array(Image.open(self.images_fps[i]).convert('RGB'))
        mask = np.array(Image.open(self.masks_fps[i]).convert('RGB'))
        sample = self.transform(image=image, mask=mask)
        # convert('RGB') replicates a single-channel index mask; keep channel 0 as the label map
        return sample['image'], sample['mask'][:, :, 0]

    def __len__(self):
        return len(self.ids)


# Set the dataset paths
DATA_DIR = r'dataset\camvid'  # adjust to your own path
x_train_dir = os.path.join(DATA_DIR, 'train_images')
y_train_dir = os.path.join(DATA_DIR, 'train_labels')
x_valid_dir = os.path.join(DATA_DIR, 'valid_images')
y_valid_dir = os.path.join(DATA_DIR, 'valid_labels')

train_dataset = CamVidDataset(x_train_dir, y_train_dir)
val_dataset = CamVidDataset(x_valid_dir, y_valid_dir)

train_loader = DataLoader(train_dataset, batch_size=8, shuffle=True, drop_last=True)
val_loader = DataLoader(val_dataset, batch_size=8, shuffle=True, drop_last=True)
```

Training function

Because the model also outputs an SE-loss prediction, the training function is modified accordingly.
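Written out, the objective that the training step below minimises is sketched next. The symbols are introduced here for illustration:

- $\mathcal{L}_{\text{seg}}$ is the pixel-wise cross-entropy computed by `lossf`, with pixels labelled 255 ignored.
- $z_k$ is the SE-branch output for class $k$.
- $y_k \in \{0, 1\}$ is the corresponding `exist_class` entry.
- $K = 33$ is the number of output classes.

The SE term is shown in the binary cross-entropy form described in the paper; replacing it with a squared error gives the MSE variant. Both terms are averaged over the batch, and 0.2 is the weight used in the code.

$$
\mathcal{L} = \mathcal{L}_{\text{seg}} + 0.2\,\mathcal{L}_{\text{SE}},\qquad
\mathcal{L}_{\text{SE}} = -\frac{1}{K}\sum_{k=1}^{K}\Big[y_k \log\sigma(z_k) + (1-y_k)\log\big(1-\sigma(z_k)\big)\Big]
$$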

```python
model = ENCNet(num_classes=33).cuda()
# model.load_state_dict(torch.load(r"checkpoints/resnet50-11ad3fa6.pth"), strict=False)


def train_batch_ch13(net, X, y, loss, trainer, devices, num_classes=33, se_weight=0.2):
    """Train for a minibatch with multiple GPUs (adapted from d2l, Chapter 13),
    adding the SE loss on top of the segmentation loss."""
    if isinstance(X, list):
        # Required for BERT fine-tuning (to be covered later)
        X = [x.to(devices[0]) for x in X]
    else:
        X = X.to(devices[0])
    y = y.to(devices[0])
    net.train()
    trainer.zero_grad()
    # A single forward pass returns both the segmentation logits and the SE logits
    pred, se_pred = net(X)
    # exist_class[b, c] = 1 if class c appears in image b (pixels labelled 255 are skipped)
    exist_class = torch.stack([
        (torch.bincount(y_b[y_b < num_classes], minlength=num_classes) > 0).float()
        for y_b in y
    ])
    l_seg = loss(pred, y)
    # The paper trains the SE branch with binary cross-entropy on per-class logits
    l_se = F.binary_cross_entropy_with_logits(se_pred, exist_class)
    l = l_seg + se_weight * l_se
    l.backward()
    trainer.step()
    # The loss is a batch mean; scale it back so train_ch13 can divide by the number of examples
    train_loss_sum = l.item() * y.shape[0]
    train_acc_sum = d2l.accuracy(pred, y)
    return train_loss_sum, train_acc_sum
```

```python
def evaluate_accuracy_gpu(net, data_iter, device=None):
    """Compute the pixel accuracy for a model on a dataset using a GPU.

    Adapted from d2l (defined in :numref:`sec_lenet`)."""
    if isinstance(net, nn.Module):
        net.eval()  # Set the model to evaluation mode
        if not device:
            device = next(iter(net.parameters())).device
    # No. of correct predictions, no. of predictions
    metric = d2l.Accumulator(2)
    with torch.no_grad():
        for X, y in data_iter:
            if isinstance(X, list):
                # Required for BERT fine-tuning (to be covered later)
                X = [x.to(device) for x in X]
            else:
                X = X.to(device)
            y = y.to(device)
            # net(X) returns (segmentation logits, SE logits); only the first is scored
            metric.add(d2l.accuracy(net(X)[0], y), d2l.size(y))
    return metric[0] / metric[1]
```

```python
from d2l import torch as d2l
import pandas as pd

# Multi-class cross-entropy loss; pixels labelled 255 are ignored
lossf = nn.CrossEntropyLoss(ignore_index=255)
# Train with SGD
optimizer = optim.SGD(model.parameters(), lr=0.1)
# Multiply the learning rate by 0.1 every 50 epochs
# (with 50 epochs in total, the decay only takes effect after the last epoch)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.1, last_epoch=-1)
# Train for 50 epochs
epochs_num = 50


def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs, scheduler,
               devices=d2l.try_all_gpus()):
    timer, num_batches = d2l.Timer(), len(train_iter)
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                            legend=['train loss', 'train acc', 'test acc'])
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    os.makedirs("savefile", exist_ok=True)
    os.makedirs("checkpoints", exist_ok=True)
    loss_list, train_acc_list, test_acc_list, epochs_list, time_list = [], [], [], [], []
    for epoch in range(num_epochs):
        # Sum of training loss, sum of training accuracy, no. of examples,
        # no. of predictions
        metric = d2l.Accumulator(4)
        for i, (features, labels) in enumerate(train_iter):
            timer.start()
            l, acc = train_batch_ch13(net, features, labels.long(), loss, trainer, devices)
            metric.add(l, acc, labels.shape[0], labels.numel())
            timer.stop()
            if (i + 1) % max(1, num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[3], None))
        test_acc = evaluate_accuracy_gpu(net, test_iter)
        animator.add(epoch + 1, (None, None, test_acc))
        scheduler.step()
        print(f"epoch {epoch+1} --- loss {metric[0] / metric[2]:.3f} --- train acc {metric[1] / metric[3]:.3f} --- test acc {test_acc:.3f} --- cost time {timer.sum()}")
        # --------- save training statistics ---------
        loss_list.append(metric[0] / metric[2])
        train_acc_list.append(metric[1] / metric[3])
        test_acc_list.append(test_acc)
        epochs_list.append(epoch + 1)
        time_list.append(timer.sum())
        df = pd.DataFrame({'epoch': epochs_list, 'loss': loss_list, 'train_acc': train_acc_list,
                           'test_acc': test_acc_list, 'time': time_list})
        df.to_excel("savefile/EncNet_camvid1.xlsx")  # requires openpyxl
        # --------- save the model every 5 epochs ---------
        if (epoch + 1) % 5 == 0:
            # net is wrapped in DataParallel; save the underlying module's weights
            torch.save(net.module.state_dict(), f'checkpoints/EncNet_{epoch+1}.pth')
```

开始训练

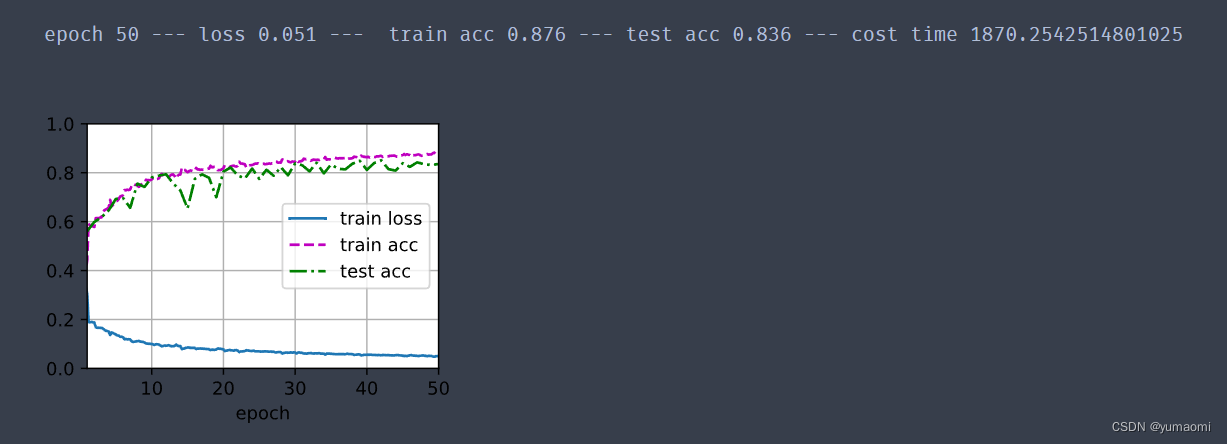

train_ch13(model, train_loader, val_loader, lossf, optimizer, epochs_num,scheduler)训练结果
