Perplexity 是衡量语言模型(如 GPT、BERT 等)性能的一个指标,用于评估模型对给定文本的预测准确性。它可以理解为模型对文本的“困惑度”,或者说模型预测下一个词的能力有多好。

什么是 Perplexity

- Perplexity 反映了语言模型在生成或者预测一段文本时的“困惑程度”。如果 perplexity 值较低,意味着模型对文本的预测比较准确,较高的 perplexity 值则表示模型在预测时有较大的不确定性或困惑。

- 它计算的是模型在给定测试集上的平均不确定性,即模型对一组单词序列生成概率的倒数。

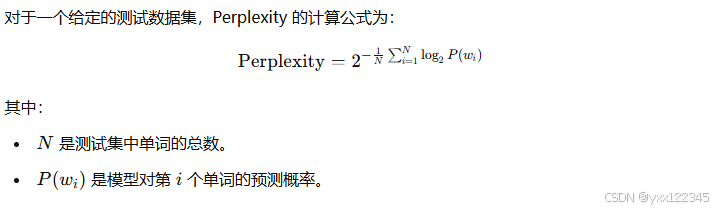

Perplexity 的计算公式

Perplexity 实际上是在测量模型对整个测试数据集的平均预测不确定性。

直观理解

可以把 perplexity 看作是模型在下一个单词出现时的选择数目。假如 perplexity 等于 10,意味着模型在预测下一个词时有大约 10 个可能的选择。较低的 perplexity(比如接近 1)表明模型对文本非常自信,较高的 perplexity 表明模型在预测时缺乏信心。

示例说明

假设有一个简单的句子:“The cat is on the…”,让模型来预测下一个单词。

- 如果模型非常准确地预测到“mat”的概率很高,那么 perplexity 值会很低。

- 如果模型对许多单词的概率都差不多(比如 “mat”、“floor”、“table” 等都有相似的概率),那么 perplexity 就会更高,表示模型对预测没有很强的自信。

在语言模型中的意义

- 较低的 perplexity 值:意味着模型对数据的拟合程度更好,预测能力更强。

- 较高的 perplexity 值:表明模型对数据的理解较差,可能会需要更多的训练或者更好的数据预处理。

总之,Perplexity 是一种用于评估语言模型在处理自然语言时准确性的重要指标。

1058

1058

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?