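Dropout is simple to implement: build a mask that "deactivates" a fraction of the neurons during training, and let every neuron participate at test time. Without further ado, here is the code: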

layers.py

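    import numpy as np

    def simple_forward(x,w,b):
        # Affine layer: x is (N, D_in), w is (D_in, D_out), b is (D_out,)
        output=x.dot(w)+b
        return output

    def relu_func(x):
        return np.maximum(x,0)

    def relu_forward(x,w,b):
        # Affine layer followed by ReLU
        temp=simple_forward(x,w,b)
        return relu_func(temp)

    def relu_backward(dout,x,w,b=None):
        # Backward pass of the affine part only; the caller applies the ReLU mask to dx
        dw=x.T.dot(dout)
        db=np.sum(dout,axis=0)
        dx=dout.dot(w.T)
        return dx,dw,db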

    def batchnorm_relu_forward(x,w,b,gamma,beta,config):
        # Affine -> batch normalization -> ReLU
        output=simple_forward(x,w,b)
        eps = config.setdefault('eps', 1e-5)
        momentum = config.setdefault('momentum', 0.9)
        mode = config['mode']
        N, D = output.shape
        running_mean = config.get('running_mean', np.zeros(D, dtype=output.dtype))
        running_var = config.get('running_var', np.zeros(D, dtype=output.dtype))
        if mode == 'train':
            sample_mean = np.mean(output, axis=0) # 1 * D
            sample_var = np.var(output, axis=0) # 1 * D
            output_normalized = (output - sample_mean) / np.sqrt(sample_var + eps) # N * D
            output = output_normalized * gamma + beta # N * D
            running_mean = momentum * running_mean + (1 - momentum) * sample_mean
            running_var = momentum * running_var + (1 - momentum) * sample_var
            # Cache the batch statistics needed by batchnorm_relu_backward
            config['sample_mean']=sample_mean
            config['sample_var']=sample_var
            config['output_normalized']=output_normalized
            config['gamma']=gamma
            config['eps']=eps
        elif mode == 'test':
            output_normalized = (output - running_mean) / np.sqrt(running_var + eps)
            output = output_normalized * gamma + beta
        # Store the updated running statistics back into config
        config['running_mean'] = running_mean
        config['running_var'] = running_var
        out=relu_func(output)
        return out,config

    def batchnorm_relu_backward(dout,x,w,b,config):
        # dout is expected to have the ReLU mask applied by the caller already
        res_x=simple_forward(x,w,b) # recompute the affine output that was normalized
        sample_mean=config['sample_mean']
        sample_var=config['sample_var']
        x_normalized=config['output_normalized']
        gamma=config['gamma']
        eps=config['eps']
        dx_normalized = dout * gamma # N * D
        sample_std_inv = 1 / np.sqrt(sample_var + eps) # 1 * D
        x_mu = res_x - sample_mean # N * D
        dsample_var = -0.5 * np.sum(dx_normalized * x_mu, axis=0) * (sample_std_inv ** 3)
        dsample_mean = -1 * (np.sum(dx_normalized * sample_std_inv, axis=0) + 2 * dsample_var * np.mean(x_mu, axis=0))
        dx = dx_normalized * sample_std_inv + 2 * dsample_var * x_mu / res_x.shape[0] + dsample_mean / res_x.shape[0]
        dgamma = np.sum(dout * x_normalized, axis=0) # 1 * D
        dbeta = np.sum(dout, axis=0) # 1 * D
        # Backpropagate through the affine layer
        dx,dw,db=relu_backward(dx,x,w,b)
        return dx,dw,db,dgamma,dbeta

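    def dropout_forward(input,config):
        mode=config['mode']
        # Despite its name, 'drop_rate' is used here as the probability of KEEPING a unit
        rate=config['drop_rate']
        if(mode=='train'):
            # Inverted dropout: dividing by rate keeps the expected activation unchanged,
            # so test mode needs no rescaling
            mask = (np.random.rand(*input.shape) < rate) / rate
            output = input * mask
        if(mode=='test'):
            output=input
            mask=None
        return output,mask

    def dropout_backward(dout,mask):
        # Training only: the gradient flows through the kept units with the same 1/rate scaling
        dx=dout*mask
        return dx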
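As a quick sanity check on the inverted-dropout idea used in dropout_forward, here is a minimal stand-alone sketch. The helper name `inverted_dropout`, the seeded generator, and the all-ones input are assumptions made for illustration and are not part of layers.py; the keep probability 0.9 mirrors the value passed in Solver.py.

```python
import numpy as np

def inverted_dropout(x, keep_prob, rng):
    # Hypothetical helper mirroring dropout_forward in 'train' mode:
    # keep each unit with probability keep_prob and rescale by 1/keep_prob.
    mask = (rng.random(x.shape) < keep_prob) / keep_prob
    return x * mask, mask

rng = np.random.default_rng(0)          # seeded only to make the sketch repeatable
x = np.ones((1000, 100))                # dummy activations
out, mask = inverted_dropout(x, 0.9, rng)

kept_fraction = np.mean(mask > 0)       # should be close to keep_prob
mean_activation = out.mean()            # should stay close to x.mean(), i.e. 1.0
print(kept_fraction, mean_activation)
```

Because the rescaling happens during training, the test-time branch can simply return its input unchanged, which is what dropout_forward does.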

FullyConNet.py

    import numpy as np
    import Strategy as st
    from layers import *

    class FullyConNet:
        lr_decay=0.95 # learning-rate decay factor
        iter_per_ann=400 # decay the learning rate every 400 iterations
        weight_init=2e-2 # standard deviation of the initial weights

        def __init__(self,input_layer,hidden_layer,output_layer,update_rule,
                     learning_rate,batch_size,epoch,reg,batch_norm,dropout):
            # Per-instance state; mutable class-level dicts would be shared by every network
            self.parameter={}
            self.config={}
            self.batch_norm_config={}
            self.dr_config={}
            self.learning_rate=learning_rate
            self.dropout=dropout
            self.reg=reg
            self.batch_size=batch_size
            self.epoch=epoch
            self.layers=[input_layer]+hidden_layer+[output_layer]
            self.batch_norm=batch_norm
            if(hasattr(st,update_rule)):
                self.update_rule=getattr(st,update_rule)
            else:
                raise ValueError("unknown update rule: "+str(update_rule))
            length=len(hidden_layer)+1 # number of weight layers (6 with five hidden layers)
            for i in range(0,length):
                self.parameter['w'+str(i)]=self.weight_init*np.random.randn(self.layers[i],self.layers[i+1])
                self.parameter['b'+str(i)]=np.zeros(self.layers[i+1])
            if(self.batch_norm):
                for i in range(0,length-1): # hidden layers only
                    self.batch_norm_config['w'+str(i)]={'mode':'train'}
                    self.parameter['gamma'+str(i)]=np.ones(self.layers[i+1])
                    self.parameter['beta'+str(i)]=np.zeros(self.layers[i+1])
            # One optimizer config per parameter
            for i in self.parameter:
                self.config[i] = {"learning_rate": learning_rate}
            if(self.dropout>0):
                self.dr_config={'mode':'train','drop_rate':dropout}

        def forward_process(self,train_data,cache_output=None,cache_drop=None):
            if(cache_output is None):
                cache_output=[]
            if(cache_drop is None):
                cache_drop=[]
            layers_len=len(self.layers)-1 # number of weight layers (6 with five hidden layers)
            X=train_data
            cache_output.append(X)
            for i in range(0,layers_len-1): # hidden layers
                if(self.batch_norm==True):
                    temp_output,self.batch_norm_config['w'+str(i)]=batchnorm_relu_forward(X,self.parameter['w'+str(i)],
                        self.parameter['b'+str(i)],self.parameter['gamma'+str(i)],self.parameter['beta'+str(i)],
                        self.batch_norm_config['w'+str(i)])
                else:
                    temp_output=relu_forward(X,self.parameter['w'+str(i)],self.parameter['b'+str(i)])
                X=temp_output
                if(self.dropout>0):
                    X,mask=dropout_forward(X,self.dr_config)
                    cache_drop.append(mask)
                # Cache what the next layer actually receives (after dropout),
                # so the backward pass computes dw from the right input
                cache_output.append(X)
            ind=layers_len-1
            X=simple_forward(X,self.parameter['w'+str(ind)],self.parameter['b'+str(ind)])
            cache_output.append(X)
            return X,cache_output,cache_drop

        def backward_process(self,batch_size,train_label,cache_output,cache_drop):
            temp_output=cache_output[-1] # scores produced by the last layer
            # Softmax, shifted by the row maximum for numerical stability
            temp_output = temp_output - np.max(temp_output, axis=1, keepdims=True)
            temp_output = np.exp(temp_output)
            total_output = np.sum(temp_output, axis=1, keepdims=True)
            temp_output = temp_output / total_output
            temp_output[range(batch_size),train_label]-=1
            temp_output=temp_output/batch_size # average over the batch
            dout=temp_output # gradient of the loss with respect to the scores
            layer_num=len(self.layers)-1 # number of weight layers (6 with five hidden layers)
            grads={}
            input_data=cache_output[layer_num-1] # y5: cached output of the last hidden layer
            dout, dw, db = relu_backward(dout, input_data,
                self.parameter['w' + str(layer_num-1)], self.parameter['b' + str(layer_num-1)])
            dout[input_data <= 0] = 0 # ReLU mask of the layer below
            grads['w' + str(layer_num-1)] = dw + self.reg * self.parameter['w' + str(layer_num-1)]
            grads['b' + str(layer_num-1)] = db # store the gradient for the update step
            for i in range(layer_num-1)[::-1]: # hidden layers in reverse (4,3,2,1,0), with optional batch norm
                input_data=cache_output[i]
                if(self.dropout>0):
                    dout=dropout_backward(dout,cache_drop[i])
                if(self.batch_norm==True):
                    dout,dw,db,dgamma,dbeta=batchnorm_relu_backward(dout,input_data,self.parameter['w'+str(i)],
                        self.parameter['b'+str(i)],self.batch_norm_config['w'+str(i)])
                    grads['gamma' + str(i)] = dgamma
                    grads['beta' + str(i)] = dbeta
                else:
                    dout,dw,db=relu_backward(dout,input_data,self.parameter['w'+str(i)],self.parameter['b'+str(i)])
                dout[input_data<=0]=0 # ReLU mask of the layer below
                grads['w'+str(i)]=dw+self.reg*self.parameter['w'+str(i)]
                grads['b'+str(i)]=db # store the gradient for the update step
            # Apply the chosen update rule to every parameter
            for item in self.parameter:
                new_item,new_config=self.update_rule(self.parameter[item],grads[item],self.config[item])
                self.parameter[item]=new_item
                self.config[item]=new_config

        def Train(self,train_data,train_label):
            total=train_data.shape[0]
            iters_per_epoch=max(1,total//self.batch_size)
            total_iters=int(iters_per_epoch*self.epoch)
            for i in range(0,total_iters):
                # Sample a mini-batch with replacement
                sample_ind=np.random.choice(total,self.batch_size,replace=True)
                cur_train_data=train_data[sample_ind,:]
                cur_train_label=train_label[sample_ind]
                cache_output=[]
                cache_drop=[]
                temp,cache_output,cache_drop=self.forward_process(cur_train_data,cache_output,cache_drop)
                self.backward_process(self.batch_size,cur_train_label,cache_output,cache_drop)
                if((i+1)%self.iter_per_ann==0):
                    # Anneal the learning rate of every parameter
                    for c in self.parameter:
                        self.config[c]['learning_rate']*=self.lr_decay

        def Test(self,data,label):
            # Switch batch normalization and dropout to test mode before predicting
            if(self.batch_norm):
                for i in range(0, len(self.layers)-2): # every hidden layer
                    self.batch_norm_config['w' + str(i)]['mode'] = 'test'
            if(self.dropout>0):
                self.dr_config['mode']='test'
            cache_output=[]
            cache_drop=[]
            res,cache_output,cache_drop=self.forward_process(data,cache_output,cache_drop)
            ind_res=np.argmax(res,axis=1)
            print(ind_res)
            print(label)
            return np.mean(ind_res==label)
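The opening lines of backward_process compute the gradient of the softmax cross-entropy loss with respect to the scores: softmax probabilities, minus one at the true class, divided by the batch size. The sketch below checks that formula against a numerical gradient on a tiny random batch. The helper names `softmax_loss_and_grad` and `numerical_grad`, the batch shape, and the labels are assumptions made for illustration; they are not part of FullyConNet.py.

```python
import numpy as np

def softmax_loss_and_grad(scores, labels):
    # Same steps as the start of backward_process, plus the loss value itself.
    n = scores.shape[0]
    shifted = scores - np.max(scores, axis=1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs = probs / np.sum(probs, axis=1, keepdims=True)
    loss = -np.mean(np.log(probs[np.arange(n), labels]))
    dscores = probs.copy()
    dscores[np.arange(n), labels] -= 1
    dscores = dscores / n                                     # average over the batch
    return loss, dscores

def numerical_grad(f, x, h=1e-5):
    # Central differences, one entry of x at a time.
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        f_plus = f(x)
        x[idx] = old - h
        f_minus = f(x)
        x[idx] = old
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

rng = np.random.default_rng(0)
scores = rng.standard_normal((4, 3))     # 4 samples, 3 classes (arbitrary sizes)
labels = np.array([0, 2, 1, 1])

loss, analytic = softmax_loss_and_grad(scores, labels)
numeric = numerical_grad(lambda s: softmax_loss_and_grad(s, labels)[0], scores)
max_error = np.max(np.abs(analytic - numeric))
print(max_error)
```

A small `max_error` suggests the analytic gradient and the loss agree; the same kind of check can be applied to the batch-normalization backward pass.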

Strategy.py

    import numpy as np

    def adam(value,dvalue,config):
        config.setdefault('eps',1e-8)
        config.setdefault('beta1',0.9)
        config.setdefault('beta2',0.999)
        config.setdefault('learning_rate',9e-4)
        t = config.get('t', 0)
        v = config.get('v', np.zeros_like(value))
        m = config.get('m', np.zeros_like(value))
        beta1=config['beta1']
        beta2=config['beta2']
        eps=config['eps']
        learning_rate=config['learning_rate']
        t+=1
        m=beta1*m+(1-beta1)*dvalue
        mt=m/(1-beta1**t)
        v=beta2*v+(1-beta2)*(dvalue**2)
        vt=v/(1-beta2**t)
        new_value=value-learning_rate*mt/(np.sqrt(vt)+eps)
        config['t']=t
        config['v']=v
        config['m']=m
        return new_value,config

    def sgd(value,dvalue,config):
        value=value-config['learning_rate']*dvalue
        return value,config

    def Momentum_up(value,dvalue,config):
        learning_rate=config['learning_rate']
        v=config.get('v',np.zeros_like(dvalue))
        mu=config.get('mu',0.9)
        v=mu*v-learning_rate*dvalue
        value+=v
        config['v']=v
        config['mu']=mu
        return value,config

    def Adagrad(value,dvalue,config):
        eps=config.get('eps',1e-6)
        cache=config.get('cache',np.zeros_like(dvalue))
        learning_rate=config['learning_rate']
        cache+=dvalue**2
        value=value-learning_rate*dvalue/(np.sqrt(cache)+eps)
        config['eps']=eps
        config['cache']=cache
        return value,config

    def RMSprop(value,dvalue,config):
        decay_rate=config.get('decay_rate',0.99)
        cache=config.get('cache',np.zeros_like(dvalue))
        learning_rate=config['learning_rate']
        eps=config.get('eps',1e-8)
        cache=decay_rate*cache+(1-decay_rate)*(dvalue**2)
        value=value-learning_rate*dvalue/(np.sqrt(cache)+eps)
        config['cache']=cache
        config['eps']=eps
        config['decay_rate']=decay_rate
        return value,config
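Every update rule in Strategy.py shares one interface: it takes the current value, its gradient, and a per-parameter config dict, and returns the new value together with the updated config. The sketch below illustrates that contract on a toy quadratic. `sgd_step`, `momentum_step`, and `minimize` are hypothetical stand-ins written for this example (modelled on sgd and Momentum_up), and the learning rate and step count are arbitrary choices.

```python
import numpy as np

def sgd_step(value, dvalue, config):
    # Plain gradient descent; config only carries the learning rate.
    value = value - config['learning_rate'] * dvalue
    return value, config

def momentum_step(value, dvalue, config):
    # Momentum update: the velocity v lives in config between calls.
    v = config.get('v', np.zeros_like(dvalue))
    mu = config.get('mu', 0.9)
    v = mu * v - config['learning_rate'] * dvalue
    config['v'] = v
    config['mu'] = mu
    return value + v, config

def minimize(update_rule, steps=500):
    # Minimize f(x) = sum((x - 3)^2), whose gradient is 2 * (x - 3).
    x = np.zeros(2)
    config = {'learning_rate': 0.1}
    for _ in range(steps):
        grad = 2 * (x - 3.0)
        x, config = update_rule(x, grad, config)
    return x

x_sgd = minimize(sgd_step)
x_momentum = minimize(momentum_step)
print(x_sgd, x_momentum)
```

Keeping the optimizer state in the config dict is what lets the network store one independent config per parameter and swap rules by name.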

Solver.py

    import numpy as np
    import pickle as pic
    from FullyConNet import *

    class Solver:
        input_layer=3072 # 32*32*3 pixels per CIFAR-10 image
        hidden_layer=[100,100,100,100,100]
        output_layer=10
        train_data, train_label, validate_data, validate_label, test_data, test_label = [], [], [], [], [], []

        def readData(self, file):
            # Load one pickled CIFAR-10 batch; its keys are bytes
            with open(file, 'rb') as fo:
                data = pic.load(fo, encoding='bytes')
            return data

        def Init(self,path_train,path_test):
            # Concatenate the five training batches
            for i in range(1, 6):
                cur_path = path_train + str(i)
                read_temp = self.readData(cur_path)
                if (i == 1):
                    self.train_data = read_temp[b'data']
                    self.train_label = read_temp[b'labels']
                else:
                    self.train_data = np.append(self.train_data, read_temp[b'data'], axis=0)
                    self.train_label += read_temp[b'labels']
            mean_image = np.mean(self.train_data, axis=0)
            self.train_data = self.train_data - mean_image # preprocessing: subtract the mean image
            read_infor = self.readData(path_test)
            self.train_label = np.array(self.train_label)
            self.test_data = read_infor[b'data'] # test data
            self.test_label = np.array(read_infor[b'labels']) # test labels
            self.test_data = self.test_data - mean_image # same preprocessing as the training data
            amount_train = self.train_data.shape[0]
            amount_validate = 20000
            amount_train -= amount_validate
            self.validate_data = self.train_data[amount_train:, :] # validation data
            self.validate_label = self.train_label[amount_train:] # validation labels
            self.train_data = self.train_data[:amount_train, :] # training data
            self.train_label = self.train_label[:amount_train] # training labels

def Train(self):

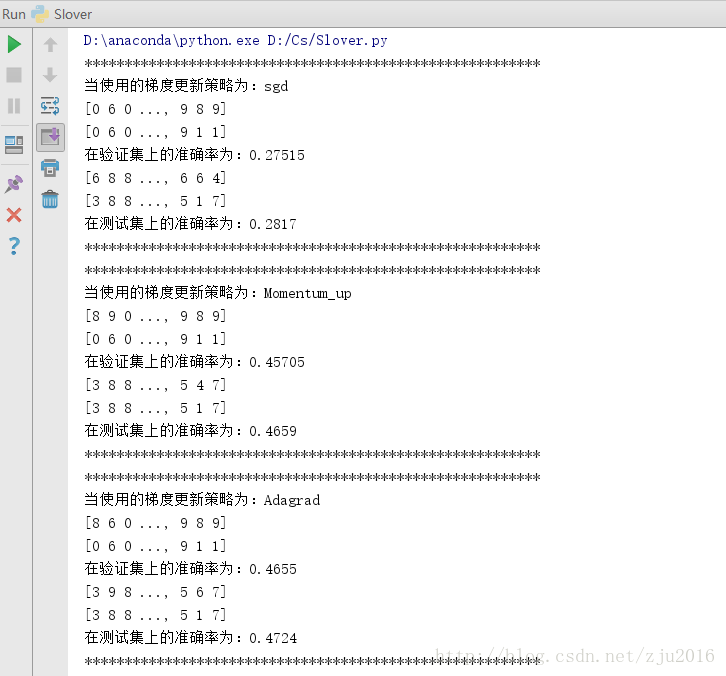

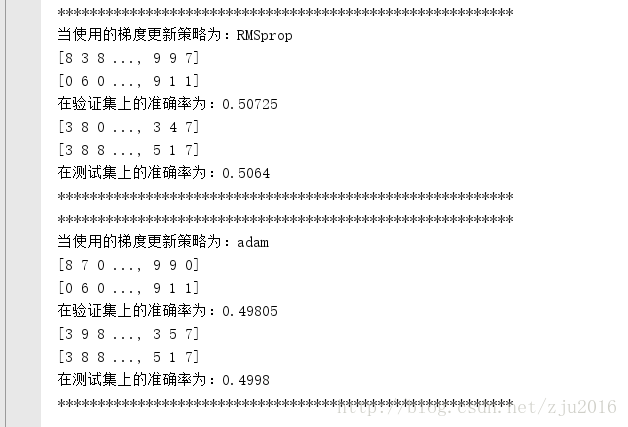

strategy=['sgd','Momentum_up','Adagrad','RMSprop','adam']

for s in strategy:

neuralNet=FullyConNet(self.input_layer,self.hidden_layer,self.output_layer,s,9e-4,250,10,0.0,True,0.9)

neuralNet.Train(self.train_data,self.train_label)

print("*********************************************************")

print("当使用的梯度更新策略为:"+s)

print("在验证集上的准确率为:"+str(neuralNet.Test(self.validate_data,self.validate_label)))

print("在测试集上的准确率为:"+str(neuralNet.Test(self.test_data,self.test_label)))

print("*********************************************************")

if __name__=='__main__':

a=Solver()

a.Init("D:\\data\\cifar-10-batches-py\\data_batch_",

"D:\\data\\cifar-10-batches-py\\test_batch")

a.Train()

运行样例截图:
