Cases from MorvanZhou

案例1:Q-Learning 寻宝藏

案例描述

例子的环境是一个一维世界,在世界的右边有宝藏,探索者只要得到宝藏就会记住得到宝藏的方法,这是他用强化学习所学习到的行为。

// T 就是宝藏的位置, o 是探索者的位置

-o—T

算法描述

Q-Learning 是一种记录行为值(Q Value)的方法,每种在一定状态的行为都会有一个值

Q

(

s

,

a

)

Q(s,a)

Q(s,a) 就是说行为 Action 在 State 状态的值是

Q

(

s

,

a

)

Q(s, a)

Q(s,a)。

s 在上面的探索者游戏中, 就是 o 所在的地点了。而每一个地点探索者都能做出两个行为 left / right, 这就是探索者的所有可行的 a 啦.

如果在某个地点 s1,探索者计算了他能有的两个行为, a1 / a2 = left / right, 计算结果是

Q

(

s

1

,

a

1

)

>

Q

(

s

1

,

a

2

)

Q(s_1,a_1)> Q(s_1, a_2)

Q(s1,a1)>Q(s1,a2) , 那么探索者就会选择 left 这个行为。这就是 Q learning 的行为选择简单规则。

具体实现

1、导入相关模块

import time

import numpy as np

import pandas as pd

2、预设参数

np.random.seed(2) # 随机数种子

N_STATES = 6 # 1维世界的宽度

ACTIONS = ['left', 'right'] # 探索者可以选择的动作

MAX_EPISODES = 13 # 最大回合数

FRESH_TIME = 0.3 # 移动间隔时间

EPSILON = 0.9 # 贪婪度

ALPHA = 0.1 # 学习率

GAMMA = 0.9 # 奖励递减参数

3、定义环境函数

- 函数输入值:当前的状态

State、当前的回合数episode、当前的步数step_counter。 - 函数输出值:如果探索者到达终点,输出当前的回合数和探索者找到宝藏所用的总步数。如果探索者没有到达终点,就输出当前环境。

def update_env(S, episode, step_counter):

env_list = ['-'] * (N_STATES-1) + ['T']

if S == 'terminal':

interaction = 'Episode %s: total_steps = %s' % (episode+1, step_counter)

print('\r{}'.format(interaction),end='')

time.sleep(2)

print('\r ', end='')

else:

env_list[S] = 'o'

interaction = ''.join(env_list)

print('\r{}'.format(interaction), end='')

time.sleep(FRESH_TIME)

4、定义获取环境反馈参数

- 函数输入值:当前状态

State和当前采取的动作Action。 - 函数输出值:返回下一个状态

S_和 奖励R;如果是选择向右走,且到达宝藏位置,就返回S_为‘terminal’,R为1;若向右走没有到达宝藏位置或者向左走,则没有奖励。

def get_env_feedback(S,A):

if A == 'right':

if S == N_STATES - 2:

S_ = 'terminal'

R = 1

else:

S_ = S + 1

R = 0

else:

R = 0

if S == 0:

S_ = S

else:

S_ = S - 1

return S_, R

5、定义一个 Q-Value 表

- 函数输入值:状态

n_states和actions;将所有的Q-Values放在q_table中,更新q_table就是更新探索者的最佳决策值。 - 函数输出值:

q_table。

def build_q_table(n_states, actions):

table = pd.DataFrame(np.zeros((n_states, len(actions))),columns=actions)

return table

6、定义动作选择函数

- 函数输入值:当前状态

State和q_table。 - 函数返回值:动作的名字

action_name,这里通过设置贪婪度来决定。

def choose_action(state, q_table):

state_actions = q_table.iloc[state,:]

if (np.random.uniform()>EPSILON) or ((state_actions == 0).all()):

action_name = np.random.choice(ACTIONS)

else:

action_name = state_actions.idxmax()

return action_name

7、定义强化学习函数

def rl():

q_table = build_q_table(N_STATES, ACTIONS)

for episode in range(MAX_EPISODES):

step_counter = 0

S = 0

is_terminated = False

update_env(S, episode, step_counter)

while not is_terminated:

A = choose_action(S, q_table)

S_, R = get_env_feedback(S, A)

q_predict = q_table.loc[S,A]

if S_ != 'terminal':

q_target = R + GAMMA*q_table.iloc[S_,:].max()

else:

q_target = R

is_terminated = True

q_table.loc[S,A] += ALPHA*(q_target - q_predict)

S = S_

update_env(S, episode, step_counter+1)

step_counter += 1

return q_table

8、执行

if __name__ == "__main__":

q_table = rl()

print('\r\nQ-table:\n')

print(q_table)

案例2:Q-Learning 走迷宫

案例描述

让探索者学会走迷宫,红色的是探索者(agent),黄色的是天堂 (reward 1), 黑色的地狱 (reward -1)。

算法描述

通过 Q-Learning 算法更新实现,就是不断更新 Q- table 里的值, 然后再根据新的值来判断要在某个 State 采取怎样的 Action。这里采用 On-Policy,就是将当前的经历当场学习并且运用。

具体实现

1、构建强化学习算法主体

import numpy as np

import pandas as pd

class QLearningTable:

def __init__(self, actions, learning_rate=0.01, reward_decay=0.9, e_greedy=0.9):

self.actions = actions

self.lr = learning_rate

self.gamma = reward_decay

self.epsilon = e_greedy

self.q_table = pd.DataFrame(columns=self.actions, dtype=np.float64)

def choose_action(self, observation):

self.check_state_exist(observation)

if np.random.uniform() < self.epsilon:

state_action = self.q_table.loc[observation,:]

action = np.random.choice(state_action[state_action==np.max(state_action)].index)

else:

action = np.random.choice(self.actions)

return action

def learn(self, s, a, r, s_):

self.check_state_exist(s_)

q_predict = self.q_table.loc[s, a]

if s_ != 'terminal':

q_target = r + self.gamma*self.q_table.loc[s_, :].max()

else:

q_target = r

self.q_table.loc[s, a] += self.lr*(q_target-q_predict)

def check_state_exist(self, state):

if state not in self.q_table.index:

self.q_table = self.q_table.append(pd.Series([0]*len(self.actions),index=self.q_table.columns,name=state))

2、搭建环境

import numpy as np

import time

import sys

if sys.version_info.major == 2:

import Tkinter as tk

else:

import tkinter as tk

UNIT = 40 # pixels

MAZE_H = 8 # grid height

MAZE_W = 8 # grid width

class Maze(tk.Tk, object):

def __init__(self):

super(Maze, self).__init__()

self.action_space = ['u', 'd', 'l', 'r']

self.n_actions = len(self.action_space)

self.title('maze')

self.geometry('{0}x{1}'.format(MAZE_H * UNIT, MAZE_H * UNIT))

self._build_maze()

def _build_maze(self):

self.canvas = tk.Canvas(self, bg='white',

height=MAZE_H * UNIT,

width=MAZE_W * UNIT)

# create grids

for c in range(0, MAZE_W * UNIT, UNIT):

x0, y0, x1, y1 = c, 0, c, MAZE_H * UNIT

self.canvas.create_line(x0, y0, x1, y1)

for r in range(0, MAZE_H * UNIT, UNIT):

x0, y0, x1, y1 = 0, r, MAZE_W * UNIT, r

self.canvas.create_line(x0, y0, x1, y1)

# create origin

origin = np.array([20, 20])

# hell

hell1_center = origin + np.array([UNIT * 2, UNIT])

self.hell1 = self.canvas.create_rectangle(

hell1_center[0] - 15, hell1_center[1] - 15,

hell1_center[0] + 15, hell1_center[1] + 15,

fill='black')

# hell

hell2_center = origin + np.array([UNIT, UNIT * 2])

self.hell2 = self.canvas.create_rectangle(

hell2_center[0] - 15, hell2_center[1] - 15,

hell2_center[0] + 15, hell2_center[1] + 15,

fill='black')

# hell

hell3_center = origin + np.array([UNIT * 2, UNIT * 6])

self.hell3 = self.canvas.create_rectangle(

hell3_center[0] - 15, hell3_center[1] - 15,

hell3_center[0] + 15, hell3_center[1] + 15,

fill='black')

# hell

hell4_center = origin + np.array([UNIT * 6, UNIT * 2])

self.hell4 = self.canvas.create_rectangle(

hell4_center[0] - 15, hell4_center[1] - 15,

hell4_center[0] + 15, hell4_center[1] + 15,

fill='black')

# hell

hell5_center = origin + np.array([UNIT * 4, UNIT * 4])

self.hell5 = self.canvas.create_rectangle(

hell5_center[0] - 15, hell5_center[1] - 15,

hell5_center[0] + 15, hell5_center[1] + 15,

fill='black')

# hell

hell6_center = origin + np.array([UNIT * 4, UNIT * 1])

self.hell6 = self.canvas.create_rectangle(

hell6_center[0] - 15, hell6_center[1] - 15,

hell6_center[0] + 15, hell6_center[1] + 15,

fill='black')

# hell

hell7_center = origin + np.array([UNIT * 1, UNIT * 3])

self.hell7 = self.canvas.create_rectangle(

hell7_center[0] - 15, hell7_center[1] - 15,

hell7_center[0] + 15, hell7_center[1] + 15,

fill='black')

# hell

hell8_center = origin + np.array([UNIT * 2, UNIT * 4])

self.hell8 = self.canvas.create_rectangle(

hell8_center[0] - 15, hell8_center[1] - 15,

hell8_center[0] + 15, hell8_center[1] + 15,

fill='black')

# hell

hell9_center = origin + np.array([UNIT * 3, UNIT * 2])

self.hell9 = self.canvas.create_rectangle(

hell9_center[0] - 15, hell9_center[1] - 15,

hell9_center[0] + 15, hell9_center[1] + 15,

fill='black')

# create oval

oval_center = origin + UNIT * 3

self.oval = self.canvas.create_oval(

oval_center[0] - 15, oval_center[1] - 15,

oval_center[0] + 15, oval_center[1] + 15,

fill='yellow')

# create red rect

self.rect = self.canvas.create_rectangle(

origin[0] - 15, origin[1] - 15,

origin[0] + 15, origin[1] + 15,

fill='red')

# pack all

self.canvas.pack()

def reset(self):

self.update()

time.sleep(0.5)

self.canvas.delete(self.rect)

origin = np.array([20, 20])

self.rect = self.canvas.create_rectangle(

origin[0] - 15, origin[1] - 15,

origin[0] + 15, origin[1] + 15,

fill='red')

# return observation

return self.canvas.coords(self.rect)

def step(self, action):

s = self.canvas.coords(self.rect)

base_action = np.array([0, 0])

if action == 0: # up

if s[1] > UNIT:

base_action[1] -= UNIT

elif action == 1: # down

if s[1] < (MAZE_H - 1) * UNIT:

base_action[1] += UNIT

elif action == 2: # right

if s[0] < (MAZE_W - 1) * UNIT:

base_action[0] += UNIT

elif action == 3: # left

if s[0] > UNIT:

base_action[0] -= UNIT

self.canvas.move(self.rect, base_action[0], base_action[1]) # move agent

s_ = self.canvas.coords(self.rect) # next state

# reward function

if s_ == self.canvas.coords(self.oval):

reward = 1

done = True

s_ = 'terminal'

elif s_ in [self.canvas.coords(self.hell1), self.canvas.coords(self.hell2), self.canvas.coords(self.hell3),

self.canvas.coords(self.hell4),self.canvas.coords(self.hell5),self.canvas.coords(self.hell6),self.canvas.coords(self.hell7),

self.canvas.coords(self.hell8),self.canvas.coords(self.hell9)]:

reward = -1

done = True

s_ = 'terminal'

else:

reward = 0

done = False

return s_, reward, done

def render(self):

time.sleep(0.1)

self.update()

def update():

for t in range(10):

s = env.reset()

while True:

env.render()

a = 1

s, r, done = env.step(a)

if done:

break

if __name__ == '__main__':

env = Maze()

env.after(100, update)

env.mainloop()

3、运行

from maze_env import Maze

from RL_brain import QLearningTable

def update():

for episode in range(150):

observation = env.reset() # 初始化State的观测值

print(episode)

while True:

env.render()

action = RL.choose_action(str(observation)) # 选择Action

# print("observation: {}".format(observation))

observation_, reward, done = env.step(action)

RL.learn(str(observation), action, reward, str(observation_))

# print(RL.q_table)

observation = observation_

if done:

break

print('game over')

env.destroy()

if __name__ == '__main__':

env = Maze()

RL = QLearningTable(actions=list(range(env.n_actions)))

env.after(100, update)

env.mainloop()

案例3:Deep-Q-Network 走迷宫

案例描述

让探索者学会走迷宫,红色的是探索者(agent),黄色的是天堂 (reward 1), 黑色的地狱 (reward -1)。

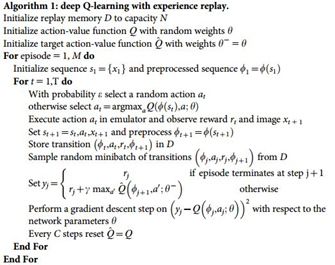

算法描述

Deep Q Network 的简称叫 DQN, 是将 Q learning 的优势 和 Neural networks 结合了。如果我们使用 tabular Qlearning, 对于每一个 state, action 我们都需要存放在一张 q_table 的表中。如果像显示生活中, 情况可就比那个迷宫的状况复杂多了, 我们有千千万万个 state, 如果将这千万个 state 的值都放在表中, 受限于我们计算机硬件, 这样从表中获取数据, 更新数据是没有效率的. 这就是 DQN 产生的原因了。我们可以使用神经网络来估算这个 state 的值, 这样就不需要一张表了。

具体实现

1、搭建强化学习主体

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import copy

np.random.seed(1)

torch.manual_seed(1)

# define the network architecture

class Net(nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.el = nn.Linear(n_feature, n_hidden)

self.q = nn.Linear(n_hidden, n_output)

def forward(self, x):

x = self.el(x)

x = F.relu(x)

x = self.q(x)

return x

class DeepQNetwork():

def __init__(self, n_actions, n_features, n_hidden=20, learning_rate=0.01, reward_decay=0.9, e_greedy=0.9,

replace_target_iter=200, memory_size=500, batch_size=32, e_greedy_increment=None,

):

self.n_actions = n_actions

self.n_features = n_features

self.n_hidden = n_hidden

self.lr = learning_rate

self.gamma = reward_decay

self.epsilon_max = e_greedy

self.replace_target_iter = replace_target_iter

self.memory_size = memory_size

self.batch_size = batch_size

self.epsilon_increment = e_greedy_increment

self.epsilon = 0 if e_greedy_increment is not None else self.epsilon_max

# total learning step

self.learn_step_counter = 0

# initialize zero memory [s, a, r, s_]

self.memory = np.zeros((self.memory_size, n_features*2+2))

self.loss_func = nn.MSELoss()

self.cost_his = []

self._build_net()

def _build_net(self):

self.q_eval = Net(self.n_features, self.n_hidden, self.n_actions)

self.q_target = Net(self.n_features, self.n_hidden, self.n_actions)

self.optimizer = torch.optim.RMSprop(self.q_eval.parameters(), lr=self.lr)

def store_transition(self, s, a, r, s_):

if not hasattr(self, 'memory_counter'):

self.memory_counter = 0

transition = np.hstack((s, [a, r], s_))

# replace the old memory with new memory

index = self.memory_counter % self.memory_size

self.memory[index, :] = transition

self.memory_counter += 1

def choose_action(self, observation):

observation = torch.Tensor(observation[np.newaxis, :])

if np.random.uniform() < self.epsilon:

actions_value = self.q_eval(observation)

action = np.argmax(actions_value.data.numpy())

else:

action = np.random.randint(0, self.n_actions)

return action

def learn(self):

# check to replace target parameters

if self.learn_step_counter % self.replace_target_iter == 0:

self.q_target.load_state_dict(self.q_eval.state_dict())

print("\ntarget params replaced\n")

# sample batch memory from all memory

if self.memory_counter > self.memory_size:

sample_index = np.random.choice(self.memory_size, size=self.batch_size)

else:

sample_index = np.random.choice(self.memory_counter, size=self.batch_size)

batch_memory = self.memory[sample_index, :]

# q_next is used for getting which action would be choosed by target network in state s_(t+1)

q_next, q_eval = self.q_target(torch.Tensor(batch_memory[:, -self.n_features:])), self.q_eval(torch.Tensor(batch_memory[:, :self.n_features]))

# used for calculating y, we need to copy for q_eval because this operation could keep the Q_value that has not been selected unchanged,

# so when we do q_target - q_eval, these Q_value become zero and wouldn't affect the calculation of the loss

q_target = torch.Tensor(q_eval.data.numpy().copy())

batch_index = np.arange(self.batch_size, dtype=np.int32)

eval_act_index = batch_memory[:, self.n_features].astype(int)

reward = torch.Tensor(batch_memory[:, self.n_features+1])

q_target[batch_index, eval_act_index] = reward + self.gamma*torch.max(q_next, 1)[0]

loss = self.loss_func(q_eval, q_target)

self.optimizer.zero_grad()

loss.backward()

self.optimizer.step()

# increase epsilon

self.cost_his.append(loss)

self.epsilon = self.epsilon + self.epsilon_increment if self.epsilon < self.epsilon_max else self.epsilon_max

self.learn_step_counter += 1

def plot_cost(self):

plt.plot(np.arange(len(self.cost_his)), self.cost_his)

plt.ylabel('Cost')

plt.xlabel('training steps')

plt.show()

2、搭建环境

import numpy as np

import time

import sys

if sys.version_info.major == 2:

import Tkinter as tk

else:

import tkinter as tk

UNIT = 40 # pixels

MAZE_H = 4 # grid height

MAZE_W = 4 # grid width

class Maze(tk.Tk, object):

def __init__(self):

super(Maze, self).__init__()

self.action_space = ['u', 'd', 'l', 'r']

self.n_actions = len(self.action_space)

self.n_features = 2

self.title('maze')

self.geometry('{0}x{1}'.format(MAZE_H * UNIT, MAZE_H * UNIT))

self._build_maze()

def _build_maze(self):

self.canvas = tk.Canvas(self, bg='white',

height=MAZE_H * UNIT,

width=MAZE_W * UNIT)

# create grids

for c in range(0, MAZE_W * UNIT, UNIT):

x0, y0, x1, y1 = c, 0, c, MAZE_H * UNIT

self.canvas.create_line(x0, y0, x1, y1)

for r in range(0, MAZE_H * UNIT, UNIT):

x0, y0, x1, y1 = 0, r, MAZE_W * UNIT, r

self.canvas.create_line(x0, y0, x1, y1)

# create origin

origin = np.array([20, 20])

# hell

hell1_center = origin + np.array([UNIT * 2, UNIT])

self.hell1 = self.canvas.create_rectangle(

hell1_center[0] - 15, hell1_center[1] - 15,

hell1_center[0] + 15, hell1_center[1] + 15,

fill='black')

# hell

# hell2_center = origin + np.array([UNIT, UNIT * 2])

# self.hell2 = self.canvas.create_rectangle(

# hell2_center[0] - 15, hell2_center[1] - 15,

# hell2_center[0] + 15, hell2_center[1] + 15,

# fill='black')

# create oval

oval_center = origin + UNIT * 2

self.oval = self.canvas.create_oval(

oval_center[0] - 15, oval_center[1] - 15,

oval_center[0] + 15, oval_center[1] + 15,

fill='yellow')

# create red rect

self.rect = self.canvas.create_rectangle(

origin[0] - 15, origin[1] - 15,

origin[0] + 15, origin[1] + 15,

fill='red')

# pack all

self.canvas.pack()

def reset(self):

self.update()

time.sleep(0.1)

self.canvas.delete(self.rect)

origin = np.array([20, 20])

self.rect = self.canvas.create_rectangle(

origin[0] - 15, origin[1] - 15,

origin[0] + 15, origin[1] + 15,

fill='red')

# return observation

return (np.array(self.canvas.coords(self.rect)[:2]) - np.array(self.canvas.coords(self.oval)[:2]))/(MAZE_H*UNIT)

def step(self, action):

s = self.canvas.coords(self.rect)

base_action = np.array([0, 0])

if action == 0: # up

if s[1] > UNIT:

base_action[1] -= UNIT

elif action == 1: # down

if s[1] < (MAZE_H - 1) * UNIT:

base_action[1] += UNIT

elif action == 2: # right

if s[0] < (MAZE_W - 1) * UNIT:

base_action[0] += UNIT

elif action == 3: # left

if s[0] > UNIT:

base_action[0] -= UNIT

self.canvas.move(self.rect, base_action[0], base_action[1]) # move agent

next_coords = self.canvas.coords(self.rect) # next state

# reward function

if next_coords == self.canvas.coords(self.oval):

reward = 1

done = True

elif next_coords in [self.canvas.coords(self.hell1)]:

reward = -1

done = True

else:

reward = 0

done = False

s_ = (np.array(next_coords[:2]) - np.array(self.canvas.coords(self.oval)[:2]))/(MAZE_H*UNIT)

return s_, reward, done

def render(self):

# time.sleep(0.01)

self.update()

3、运行

from maze_env import Maze

from RL_brain import DeepQNetwork

def run_maze():

step = 0

for episode in range(300):

print("episode: {}".format(episode))

observation = env.reset()

while True:

print("step: {}".format(step))

env.render()

action = RL.choose_action(observation)

observation_, reward, done = env.step(action)

RL.store_transition(observation, action, reward, observation_)

if (step>200) and (step%5==0):

RL.learn()

observation = observation_

if done:

break

step += 1

print('game over')

env.destroy()

if __name__ == '__main__':

env = Maze()

RL = DeepQNetwork(env.n_actions, env.n_features,

learning_rate=0.01,

reward_decay=0.9,

e_greedy=0.9,

replace_target_iter=200,

memory_size=2000

)

env.after(100, run_maze)

env.mainloop()

RL.plot_cost()

747

747

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?