Convolutional Neural Networks

1. Importing packages

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import sklearn
import tensorflow as tf
from tensorflow import keras
from sklearn.datasets import load_sample_image

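china = load_sample_image("china.jpg") / 255    # scale pixel values to [0, 1]
flower = load_sample_image("flower.jpg") / 255
plt.subplot(1, 2, 1)
plt.imshow(china)
plt.subplot(1, 2, 2)
plt.imshow(flower)
plt.show()
print("Shape of china.jpg:", china.shape)
print("Shape of flower.jpg:", flower.shape)
images = np.array([china, flower])    # stack into one batch: (2, height, width, channels)
images_shape = images.shape
print("Shape of the dataset:", images_shape)

The code above loads two sample images and normalizes them to [0, 1], then displays both and prints the shape of each image and of the stacked dataset.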

2. Convolutional layer

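u = 7    # kernel side length
s = 1    # stride
p = 5    # number of output feature maps
# no input_shape needed: the layer is called directly on the batch,
# so Keras infers the input shape on the first call
conv = keras.layers.Conv2D(filters=p, kernel_size=u, strides=s, padding="SAME", activation="relu")
image_after_conv = conv(images)
print("Tensor shape after convolution:", image_after_conv.shape)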
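The spatial size of the convolution output depends only on the input side length, the kernel side length u, the stride s and the padding mode. A minimal sketch of that rule, assuming TensorFlow's usual definitions of "SAME" and "VALID" padding; the helper `conv_output_size` is hypothetical, and 427 is used purely as an example side length:

```python
import math

def conv_output_size(n, u, s, padding="same"):
    """Spatial output length of a convolution over an input of length n.

    n: input height or width, u: kernel side length, s: stride.
    """
    if padding.lower() == "same":
        # zero padding is added so that only the stride shrinks the output
        return math.ceil(n / s)
    if padding.lower() == "valid":
        # no padding: the kernel must fit entirely inside the input
        return (n - u) // s + 1
    raise ValueError(f"unknown padding: {padding}")

# With u = 7, s = 1 and "SAME" padding, height and width are preserved;
# only the channel axis changes, becoming p (the number of filters).
print(conv_output_size(427, 7, 1, "same"))   # 427
print(conv_output_size(427, 7, 1, "valid"))  # 421
print(conv_output_size(28, 7, 2, "same"))    # 14
```

This is why the convolution above keeps the image height and width unchanged and only replaces the 3 color channels with p = 5 feature maps.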

3. Pooling layers

3.1 Max pooling

pool_max = keras.layers.MaxPool2D(pool_size=2)
image_after_pool_max = pool_max(image_after_conv)
print("Tensor shape after max pooling:", image_after_pool_max.shape)
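What `MaxPool2D(pool_size=2)` computes can be sketched in NumPy, assuming the Keras defaults `strides=pool_size` and `padding="valid"` (so a trailing odd row or column is dropped). The helper `max_pool_2x2` is hypothetical and only handles non-overlapping 2×2 windows:

```python
import numpy as np

def max_pool_2x2(x):
    """Non-overlapping 2x2 max pooling on a (batch, height, width, channels) array."""
    b, h, w, c = x.shape
    h2, w2 = h // 2, w // 2
    x = x[:, :h2 * 2, :w2 * 2, :]            # crop to an even size ("valid" padding)
    blocks = x.reshape(b, h2, 2, w2, 2, c)   # split each spatial axis into (blocks, 2)
    return blocks.max(axis=(2, 4))           # keep the largest value in each 2x2 window

x = np.arange(16, dtype=float).reshape(1, 4, 4, 1)
print(max_pool_2x2(x)[0, :, :, 0].tolist())   # [[5.0, 7.0], [13.0, 15.0]]
```

Height and width are halved while the number of feature maps stays the same.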

3.2 Average pooling

pool_avg = keras.layers.AvgPool2D(pool_size=2)
image_after_pool_avg = pool_avg(image_after_conv)
print("Tensor shape after average pooling:", image_after_pool_avg.shape)
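Average pooling slides the same kind of window but keeps the mean of each window instead of its maximum. A minimal NumPy sketch with an explicit window loop, assuming "valid" padding and, as in `AvgPool2D`, a stride that defaults to the pool size; `avg_pool2d` is a hypothetical helper, not the Keras implementation:

```python
import numpy as np

def avg_pool2d(x, pool_size=2, strides=None):
    """Average pooling on a (batch, height, width, channels) array, "valid" padding."""
    if strides is None:
        strides = pool_size                  # non-overlapping windows by default
    b, h, w, c = x.shape
    out_h = (h - pool_size) // strides + 1
    out_w = (w - pool_size) // strides + 1
    out = np.empty((b, out_h, out_w, c), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            r, q = i * strides, j * strides
            window = x[:, r:r + pool_size, q:q + pool_size, :]
            out[:, i, j, :] = window.mean(axis=(1, 2))   # mean per window and channel
    return out

x = np.arange(16, dtype=float).reshape(1, 4, 4, 1)
print(avg_pool2d(x)[0, :, :, 0].tolist())            # [[2.5, 4.5], [10.5, 12.5]]
print(avg_pool2d(x, pool_size=2, strides=1).shape)   # (1, 3, 3, 1)
```

With the default stride the output shape matches that of 2×2 max pooling; only the value kept per window differs.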

3.3 Global average pooling

pool_global_avg = keras.layers.GlobalAvgPool2D()
image_after_pool_global_avg = pool_global_avg(image_after_conv)
print("Tensor shape after global average pooling:", image_after_pool_global_avg.shape)
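Global average pooling averages each feature map over all of its spatial positions, so a (batch, height, width, channels) tensor collapses to (batch, channels). A minimal NumPy sketch; `global_avg_pool2d` is a hypothetical helper, and the random array is only a small stand-in for `image_after_conv`:

```python
import numpy as np

def global_avg_pool2d(x):
    """(batch, height, width, channels) -> (batch, channels): one mean per feature map."""
    return x.mean(axis=(1, 2))

# channel 0 holds 0, 2, 4, 6 and channel 1 holds 1, 3, 5, 7
x = np.arange(8, dtype=float).reshape(1, 2, 2, 2)
print(global_avg_pool2d(x).tolist())        # [[3.0, 4.0]]

rng = np.random.default_rng(0)
stand_in = rng.random((2, 32, 48, 5))       # hypothetical small stand-in for image_after_conv
print(global_avg_pool2d(stand_in).shape)    # (2, 5)
```

Because the output no longer depends on the image size, this layer is often used right before the final `Dense` classifier.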

Building a convolutional neural network for handwritten digit recognition

path = "C:/Users/14919/OneDrive/Desktop/工坊/深度学习-工坊/MNIST/"    # folder containing the .csv files
train_Data = pd.read_csv(path + 'mnist_train.csv', header=None)    # training data
test_Data = pd.read_csv(path + 'mnist_test.csv', header=None)      # test data
X, y = train_Data.iloc[:, 1:].values / 255, train_Data.iloc[:, 0].values    # column 0 is the label; pixels normalized to [0, 1]

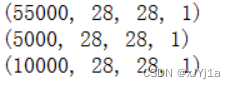

X_valid, X_train = X[:5000].reshape(5000, 28, 28), X[5000:].reshape(55000, 28, 28)    # validation and training sets
y_valid, y_train = y[:5000], y[5000:]
X_test, y_test = test_Data.iloc[:, 1:].values.reshape(10000, 28, 28) / 255, test_Data.iloc[:, 0].values    # test set
# add a channel axis: (n, 28, 28) -> (n, 28, 28, 1)
X_train = X_train[..., np.newaxis]
X_valid = X_valid[..., np.newaxis]
X_test = X_test[..., np.newaxis]
print(X_train.shape)
print(X_valid.shape)
print(X_test.shape)

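This is the usual pipeline for preparing MNIST: load the data, normalize the pixel values to [0, 1], split it into training, validation and test sets, and reshape each flat row of 784 pixels into a 28×28×1 image that a convolutional model can take as input.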
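The same steps can be packed into one function and checked without the CSV files. A minimal sketch, assuming the CSV layout used above (label in column 0, then 784 pixel columns); `prepare_mnist` is a hypothetical helper and the random array is a made-up stand-in for `train_Data.values`, not real MNIST data:

```python
import numpy as np

def prepare_mnist(data, n_valid=5000):
    """Split an (n_samples, 785) array whose first column is the label.

    Returns (X_train, y_train), (X_valid, y_valid); images are (n, 28, 28, 1) in [0, 1].
    """
    y = data[:, 0].astype(np.int64)               # label column
    X = data[:, 1:].astype(np.float32) / 255.0    # 784 pixel columns, normalized
    X = X.reshape(-1, 28, 28, 1)                  # flat row -> 28x28 image with one channel
    return (X[n_valid:], y[n_valid:]), (X[:n_valid], y[:n_valid])

# Hypothetical stand-in: 6000 random rows instead of the real training CSV
rng = np.random.default_rng(0)
fake = np.hstack([rng.integers(0, 10, (6000, 1)), rng.integers(0, 256, (6000, 784))])
(X_tr, y_tr), (X_va, y_va) = prepare_mnist(fake)
print(X_tr.shape, X_va.shape)   # (1000, 28, 28, 1) (5000, 28, 28, 1)
```

`reshape(-1, 28, 28, 1)` adds the channel axis in the same step, which gives the same result as reshaping to (n, 28, 28) and then indexing with `np.newaxis`.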

# Build the convolutional neural network
model_cnn_mnist = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(32, kernel_size=3, padding="same", activation="relu", input_shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(64, kernel_size=3, padding="same", activation="relu"),
    tf.keras.layers.MaxPooling2D(pool_size=2),            # 28x28 -> 14x14
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dropout(0.25),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(10, activation="softmax")       # one probability per digit class
])
# sparse_categorical_crossentropy: the labels are integers 0-9, not one-hot vectors
model_cnn_mnist.compile(loss="sparse_categorical_crossentropy", optimizer="nadam", metrics=["accuracy"])
model_cnn_mnist.fit(X_train, y_train, epochs=10, validation_data=(X_valid, y_valid))
model_cnn_mnist.evaluate(X_test, y_test, batch_size=1)
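A quick way to sanity-check this architecture is to count its trainable parameters by hand; the total should agree with what `model_cnn_mnist.summary()` reports. A minimal sketch using the standard counting rules for convolutional and dense layers; the helper names are hypothetical:

```python
def conv2d_params(kernel_size, in_channels, filters, use_bias=True):
    # each filter has kernel_size * kernel_size * in_channels weights (+ 1 bias)
    return (kernel_size * kernel_size * in_channels + int(use_bias)) * filters

def dense_params(n_in, n_out):
    return n_in * n_out + n_out        # weight matrix + bias vector

conv1 = conv2d_params(3, 1, 32)        # 28x28x1  -> 28x28x32 ("same" padding)
conv2 = conv2d_params(3, 32, 64)       # 28x28x32 -> 28x28x64
flat = 14 * 14 * 64                    # after MaxPooling2D(2): 14x14x64, flattened
fc1 = dense_params(flat, 128)
fc2 = dense_params(128, 10)
# pooling, flatten and dropout layers have no trainable parameters
total = conv1 + conv2 + fc1 + fc2
print(conv1, conv2, fc1, fc2, total)   # 320 18496 1605760 1290 1625866
```

Almost all of the parameters sit in the first `Dense` layer, because it connects every one of the 12,544 flattened activations to each of its 128 units.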

class ResidualUnit(keras.layers.Layer):
    def __init__(self, filters, strides=1, activation="relu", **kwargs):
        super().__init__(**kwargs)
        self.activation = keras.activations.get(activation)
        # main path: conv -> BN -> activation -> conv -> BN
        self.main_layers = [
            keras.layers.Conv2D(filters, 3, strides=strides, padding="SAME", use_bias=False),
            keras.layers.BatchNormalization(),
            self.activation,
            keras.layers.Conv2D(filters, 3, strides=1, padding="SAME", use_bias=False),
            keras.layers.BatchNormalization()]
        # With stride s = 1 the input can be added directly to the convolution output,
        # so skip_layers stays empty (the identity, or "solid-line", shortcut)
        self.skip_layers = []
        # With stride s = 2 the input no longer matches the convolution output in shape,
        # so it first goes through a 1x1 convolution with the same stride
        # (the projection, or "dashed-line", shortcut)
        if strides > 1:
            self.skip_layers = [
                keras.layers.Conv2D(filters, 1, strides=strides, padding="SAME", use_bias=False),
                keras.layers.BatchNormalization()]

    def call(self, inputs):
        Z = inputs
        for layer in self.main_layers:
            Z = layer(Z)
        skip_Z = inputs
        for layer in self.skip_layers:
            skip_Z = layer(skip_Z)
        return self.activation(Z + skip_Z)

Building the complete ResNet-34 network

model = keras.models.Sequential()
# expects 28x28 single-channel MNIST images
model.add(keras.layers.Conv2D(64, 7, strides=2, padding="SAME", use_bias=False, input_shape=[28, 28, 1]))
model.add(keras.layers.BatchNormalization())
model.add(keras.layers.Activation("relu"))
model.add(keras.layers.MaxPool2D(pool_size=3, strides=2, padding="SAME"))
prev_filters = 64
for filters in [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3:
    strides = 1 if filters == prev_filters else 2    # stride 2 whenever the number of feature maps grows
    model.add(ResidualUnit(filters, strides=strides))
    prev_filters = filters
model.add(keras.layers.GlobalAvgPool2D())
model.add(keras.layers.Flatten())    # a no-op here: the pooled output is already 2-D
model.add(keras.layers.Dense(10, activation="softmax"))
# compile, train and evaluate ResNet-34
model.compile(loss="sparse_categorical_crossentropy", optimizer="nadam", metrics=["accuracy"])
model.fit(X_train, y_train, epochs=10, validation_data=(X_valid, y_valid))
model.evaluate(X_test, y_test, batch_size=1)
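The name ResNet-34 and the feature-map sizes on MNIST both follow from the stage layout in the loop `for filters in [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3`. A minimal sketch that traces them, assuming "SAME" padding everywhere (so each stride-2 step gives `ceil(size / 2)`) and counting only the convolutional and dense layers on the main path, not the 1×1 shortcut convolutions; `stages` and `stage_sizes` are illustrative names:

```python
import math

# (filters, number of residual units) per stage, matching the loop in the model code
stages = [(64, 3), (128, 4), (256, 6), (512, 3)]

size = 28                          # MNIST height/width
size = math.ceil(size / 2)         # 7x7 conv, stride 2, "SAME" -> 14
size = math.ceil(size / 2)         # 3x3 max pool, stride 2, "SAME" -> 7
print("after stem:", size)

prev_filters = 64
units = 0
stage_sizes = {}
for filters, n_units in stages:
    for _ in range(n_units):
        strides = 1 if filters == prev_filters else 2   # stride 2 only when the filter count changes
        size = math.ceil(size / strides)
        prev_filters = filters
        units += 1
    stage_sizes[filters] = size
    print(f"{filters} filters: {size}x{size}")

# 1 stem conv + 2 convs per residual unit + 1 dense layer
print("weighted layers:", 1 + 2 * units + 1)   # 34
```

On 28×28 inputs the last stage already produces 1×1 feature maps, so `GlobalAvgPool2D` simply removes the spatial axes there; every stride-2 unit is also where the projection shortcut in `ResidualUnit` is used.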
