文章目录

LLM推理及部署:https://www.bilibili.com/video/BV1VpU6YcEuS

1 [LLMs inference] quantization 量化整体介绍(bitsandbytes、GPTQ、GGUF、AWQ)

Quantize量化概念与技术细节

题外话,在七八年前,一些关于表征的研究,会去做表征的压缩,比如二进制嵌入这种事情,其实做得很简单,无非是找个阈值,然后将浮点数划归为零一值,现在的Quantize差不多也是这么一回事,冷饭重炒,但在当下LLM的背景下,明显比那时候更有意义。

- HuggingFace bitsandbytes包

- GPTQ: data compression, GPU,arxiv.2210.17323

- GPTQ is a post-training quantization (PTQ) method for 4-bit quantization that focuses primarily on GPU inference and performance.

- to quantizing the weights of transformer-based models

- first applies scalar quant to the weights, followed by vector quant to the residuals

- The idea behind the method is that it will try to compress all weights to a 4-bit quantization by minimizing the mean squared error to that weight.

- During inference, it will dynamically dequantize its weights to float16 for improved performance whilst keeping memory low.

- GGUF: ggml, CPU, 这是与GPTQ相对应的量化方法,在CPU上实现推理优化。(过时)

- c++,

- llama.cpp, https://github.com/ggerganov/llama.cpp

- AWQ:activation aware quantization,arxiv.2306.00978

- 声称是对GPTQ的优化,提升了速度,但牺牲的精度小(都这样说)

安装(源码安装更容易成功):

# Latest HF transformers version for Mistral-like models

# !pip install git+https://github.com/huggingface/transformers.git

# !pip install accelerate bitsandbytes xformers

# GPTQ Dependencies

# !pip install optimum

# !pip install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu118/

# 我这边走的是源码安装

# GGUF Dependencies

# !pip install 'ctransformers[cuda]'

在llama3-8b上的测试:

from torch import bfloat16

import torch

from transformers import pipeline, AutoTokenizer, AutoModelForCausalLM

# Load in your LLM without any compression tricks

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

# model_id = "HuggingFaceH4/zephyr-7b-beta"

pipe = pipeline(

"text-generation",

model=model_id,

torch_dtype=bfloat16,

device_map="auto"

)

pipe.model

输出模型的结构:

LlamaForCausalLM(

(model): LlamaModel(

(embed_tokens): Embedding(128256, 4096)

(layers): ModuleList(

(0-31): 32 x LlamaDecoderLayer(

(self_attn): LlamaSdpaAttention(

(q_proj): Linear(in_features=4096, out_features=4096, bias=False)

(k_proj): Linear(in_features=4096, out_features=1024, bias=False)

(v_proj): Linear(in_features=4096, out_features=1024, bias=False)

(o_proj): Linear(in_features=4096, out_features=4096, bias=False)

(rotary_emb): LlamaRotaryEmbedding()

)

(mlp): LlamaMLP(

(gate_proj): Linear(in_features=4096, out_features=14336, bias=False)

(up_proj): Linear(in_features=4096, out_features=14336, bias=False)

(down_proj): Linear(in_features=14336, out_features=4096, bias=False)

(act_fn): SiLU()

)

(input_layernorm): LlamaRMSNorm()

(post_attention_layernorm): LlamaRMSNorm()

)

)

(norm): LlamaRMSNorm()

)

(lm_head): Linear(in_features=4096, out_features=128256, bias=False)

)

一个细节,查看任意一个layer的权重值的分布(查看前10000个),发现是基本呈现零均值的正态分布的,这也是后面normal float(nf4)就是基于这样的前提做的量化:

import seaborn as sns

q_proj = pipe.model.model.layers[0].self_attn.q_proj.weight.detach().to(torch.float16).cpu().numpy().flatten()

plt.figure(figsize=(10, 6))

sns.histplot(q_proj[:10000], bins=50, kde=True)

chat template:

- llama3

<|begin_of_text|><|start_header_id|>system<|end_header_id|>....<|eot_id|><|start_header_id|>user<|end_header_id|>...<|eot_id|><|start_header_id|>assistant<|end_header_id|>...

- zephyr

<|system|> ... </s><|user|> ... </s><|assistant|> ... </s>

具体使用template:

# See https://huggingface.co/docs/transformers/main/en/chat_templating

messages = [

{

"role": "system",

"content": "You are a friendly chatbot.",

},

{

"role": "user",

"content": "Tell me a funny joke about Large Language Models."

},

]

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

print(prompt)

T = AutoTokenizer.from_pretrained(model_id)

# T

# T.encode('<|system|>')

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a friendly chatbot.<|eot_id|><|start_header_id|>user<|end_header_id|>

Tell me a funny joke about Large Language Models.<|eot_id|><|start_header_id|>assistant<|end_header_id|>

使用pipe进行生成:

outputs = pipe(

prompt,

max_new_tokens=256,

do_sample=True,

temperature=0.1,

top_p=0.95

)

(torch.cuda.max_memory_allocated(device='cuda:0') + torch.cuda.max_memory_allocated(device='cuda:1')) / (1024*1024*1024) # 15.021286964416504,差不多是15GB

print(outputs[0]['generated_text'])

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a friendly chatbot.<|eot_id|><|start_header_id|>user<|end_header_id|>

Tell me a funny joke about Large Language Models.<|eot_id|><|start_header_id|>assistant<|end_header_id|>

Here's one:

Why did the Large Language Model go to therapy?

Because it was struggling to "process" its emotions and was feeling a little "disconnected" from its users! But in the end, it just needed to "retrain" its thoughts and "update" its perspective!

Hope that made you LOL!

"""

使用accelerate作sharding(分片)

from accelerate import Accelerator

# Shard our model into pieces of 1GB

accelerator = Accelerator()

accelerator.save_model(

model=pipe.model,

save_directory="./content/model",

max_shard_size="4GB"

)

量化概述

- 4bit-NormalFloat (NF4, qlora: lora on a quantize LLMs,arxiv.2305.14314) consists of three steps:

- Normalization: The weights of the model are normalized so that we expect the weights to fall within a certain range. This allows for more efficient representation of more common values.(密度高的地方多分配离散值,密度低的地方少分配离散值,前提就是上面的正态分布)

- The weights of the model are first normalized to have zero mean and unit variance. This ensures that the weights are distributed around zero and fall within a certain range.

- Quantization: The weights are quantized to 4-bit. In NF4, the quantization levels are evenly spaced with respect to the normalized weights, thereby efficiently representing the original 32-bit weights.(所谓那些int4模型,就是每个权重都由16个离散值表示,int8就是64个,以此类推,这个主意之前bf16, float32, float16的具体表征,三者都有1bit用来存符号,bf16跟float32的区别在于小数位减少,float16则两者都变少,分别是1+8+7,1+8+23,1+5+10,比如同样一个0.1234,三者的结果就是0.1235351…,0.1234000…,0.1234130…,而75505则对应75505,inf,75264,即bf16是做了一个权衡,能表示很大的数,但是精度不够)

- The normalized weights are then quantized to 4 bits. This involves mapping the original high-precision weights to a smaller set of low-precision values. In the case of NF4, the quantization levels are chosen to be evenly spaced in the range of the normalized weights.

- Dequantization: Although the weights are stored in 4-bit, they are dequantized during computation which gives a performance boost during inference.

- During the forward pass and backpropagation, the quantized weights are dequantized back to full precision. This is done by mapping the 4-bit quantized values back to their original range. The dequantized weights are used in the computations, but they are stored in memory in their 4-bit quantized form.

- Normalization: The weights of the model are normalized so that we expect the weights to fall within a certain range. This allows for more efficient representation of more common values.(密度高的地方多分配离散值,密度低的地方少分配离散值,前提就是上面的正态分布)

- bitsandbytes 的分位数计算

- 密度高的地方多分配,密度低的地方少分配

- https://github.com/bitsandbytes-foundation/bitsandbytes/blob/main/bitsandbytes/functional.py#L267

- https://zhuanlan.zhihu.com/p/647378373

验证一下上面bf16, f32, f16的区别:

torch.set_printoptions(sci_mode=False)

X = torch.tensor([0.1234, 75535])

print(X, X.dtype) # tensor([ 0.1234, 75535.0000]) torch.float32

print(X.to(torch.float16)) # tensor([0.1234, inf], dtype=torch.float16)

print(X.to(torch.bfloat16)) # tensor([ 0.1235, 75776.0000], dtype=torch.bfloat16)

接下来手动量化(用BitsAndBytes)

# Delete any models previously created

# del pipe, accelerator

del pipe

# Empty VRAM cache

import gc

gc.collect()

torch.cuda.empty_cache()

from transformers import BitsAndBytesConfig

from torch import bfloat16

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

# Our 4-bit configuration to load the LLM with less GPU memory

bnb_config = BitsAndBytesConfig(

load_in_4bit=True, # 4-bit quantization

bnb_4bit_quant_type='nf4', # Normalized float 4

bnb_4bit_use_double_quant=True, # Second quantization after the first

bnb_4bit_compute_dtype=bfloat16 # Computation type

)

# Zephyr with BitsAndBytes Configuration

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

quantization_config=bnb_config,

device_map='auto',

)

# Create a pipeline

pipe = pipeline(model=model, tokenizer=tokenizer, task='text-generation')

(torch.cuda.max_memory_allocated('cuda:0') + torch.cuda.max_memory_allocated('cuda:1')) / (1024*1024*1024) # 5.5174360275268555,内存占用相较于上面的15G明显减少

参数含义在论文中都有,同样可以打印prompt都是没有区别的,输出发生变化

# See https://huggingface.co/docs/transformers/main/en/chat_templating

messages = [

{

"role": "system",

"content": "You are a friendly chatbot.",

},

{

"role": "user",

"content": "Tell me a funny joke about Large Language Models."

},

]

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

print(prompt)

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a friendly chatbot.<|eot_id|><|start_header_id|>user<|end_header_id|>

Tell me a funny joke about Large Language Models.<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""

outputs = pipe(

prompt,

max_new_tokens=256,

do_sample=True,

temperature=0.1,

top_p=0.95

)

print(outputs[0]["generated_text"])

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a friendly chatbot.<|eot_id|><|start_header_id|>user<|end_header_id|>

Tell me a funny joke about Large Language Models.<|eot_id|><|start_header_id|>assistant<|end_header_id|>

Why did the Large Language Model go to therapy?

Because it was struggling to "process" its emotions and was worried it would "overfit" to its own biases!

"""

但是这个量化是不完全的混合精度量化(有int8也有float16):

-

load_in_8bit:

- embed_tokens 继续是 torch.float16

- 每个layer的内部(self attention)以及 mlp 部分是 int8

- 每个layer的output(layernorm)部分是 float16(如果 load 时传入了

torch_dtype=torch.bfloat16,则这部分为 torch.float16) - 同理适用于 load_in_4bit

model.embed_tokens.weight torch.float16 cuda:0 model.layers.0.self_attn.q_proj.weight torch.int8 cuda:0 model.layers.0.self_attn.k_proj.weight torch.int8 cuda:0 model.layers.0.self_attn.v_proj.weight torch.int8 cuda:0 model.layers.0.self_attn.o_proj.weight torch.int8 cuda:0 model.layers.0.mlp.gate_proj.weight torch.int8 cuda:0 model.layers.0.mlp.up_proj.weight torch.int8 cuda:0 model.layers.0.mlp.down_proj.weight torch.int8 cuda:0 model.layers.0.input_layernorm.weight torch.float16 cuda:0 model.layers.0.post_attention_layernorm.weight torch.float16 cuda:0

具体的参数输出和推理:

import torch

from torch import nn

from transformers import BitsAndBytesConfig, AutoModelForCausalLM, AutoTokenizer

from transformers.optimization import AdamW

# del model

import gc # garbage collect library

gc.collect()

torch.cuda.empty_cache()

model = AutoModelForCausalLM.from_pretrained("meta-llama/Meta-Llama-3-8B",

quantization_config=BitsAndBytesConfig(

load_in_8bit=True,

# load_in_4bit=True

),

torch_dtype=torch.bfloat16,

device_map="auto")

for name, para in model.named_parameters():

print(name, para.dtype, para.shape, para.device)

# ------

tokenizer = AutoTokenizer.from_pretrained('meta-llama/Meta-Llama-3-8B')

tokenizer.pad_token = tokenizer.eos_token

# 示例训练数据

texts = [

"Hello, how are you?",

"The quick brown fox jumps over the lazy dog."

]

# Tokenize数据

inputs = tokenizer(texts, return_tensors="pt", padding=True, truncation=True)

input_ids = inputs["input_ids"]

attention_mask = inputs["attention_mask"]

# 移动到GPU(如果可用)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

input_ids = input_ids.to(device)

attention_mask = attention_mask.to(device)

# model.to(device)

# 设置优化器和损失函数

optimizer = AdamW(model.parameters(), lr=5e-5)

loss_fn = nn.CrossEntropyLoss()

# 模型训练步骤

model.train()

outputs = model(input_ids, attention_mask=attention_mask, labels=input_ids)

loss = outputs.loss

# 反向传播

optimizer.zero_grad()

loss.backward()

optimizer.step()

GPTQ

# Delete any models previously created

del tokenizer, model, pipe

# Empty VRAM cache

import torch

import gc

gc.collect()

torch.cuda.empty_cache()

- https://huggingface.co/MaziyarPanahi/Meta-Llama-3-8B-Instruct-GPTQ

- install

- https://github.com/AutoGPTQ/AutoGPTQ

- 走源码安装是 ok 的;

- https://github.com/AutoGPTQ/AutoGPTQ

# GPTQ Dependencies

# !pip install optimum

# !pip install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu118/

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# Load LLM and Tokenizer

model_id = "MaziyarPanahi/Meta-Llama-3-8B-Instruct-GPTQ"

tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)

model = AutoModelForCausalLM.from_pretrained(

model_id,

device_map="auto",

trust_remote_code=False,

revision="main"

)

# Create a pipeline

pipe = pipeline(model=model, tokenizer=tokenizer, task='text-generation')

# See https://huggingface.co/docs/transformers/main/en/chat_templating

messages = [

{

"role": "system",

"content": "You are a friendly chatbot.",

},

{

"role": "user",

"content": "Tell me a funny joke about Large Language Models."

},

]

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

print(prompt)

outputs = pipe(

prompt,

max_new_tokens=256,

do_sample=True,

temperature=0.1,

top_p=0.95

)

print(outputs[0]["generated_text"])

(torch.cuda.max_memory_allocated('cuda:0') + torch.cuda.max_memory_allocated('cuda:1')) / (1024*1024*1024) # 5.626893043518066,跟上面bytesandbits差不太多

GGUF

HUGGINGFACE的QuantFactory仓库下有很多量化模型,比如llama3-8b的:https://huggingface.co/QuantFactory/Meta-Llama-3-8B-instruct-GGUF

- GPT-Generated Unified Format,是由Georgi Gerganov定义发布的一种大模型文件格式。Georgi Gerganov是著名开源项目llama.cpp的创始人。

- GGML:GPT-Generated Model Language

- Although GPTQ does compression well, its focus on GPU can be a disadvantage if you do not have the hardware to run it.

- GGUF, previously GGML, is a quantization method that allows users to use the CPU to run an LLM but also offload some of its layers to the GPU for a speed up (

llama.cpp中的-ngl). Although using the CPU is generally slower than using a GPU for inference, it is an incredible format for those running models on CPU or Apple devices. - Especially since we are seeing smaller and more capable models appearing, like Mistral 7B, the GGUF format might just be here to stay!

- GGUF, previously GGML, is a quantization method that allows users to use the CPU to run an LLM but also offload some of its layers to the GPU for a speed up (

- Q4_K_M

- Q stands for Quantization.

- 4 indicates the number of bits used in the quantization process.

- K refers to the use of k-means clustering in the quantization.

- M represents the size of the model after quantization.

- (S = Small, M = Medium, L = Large).

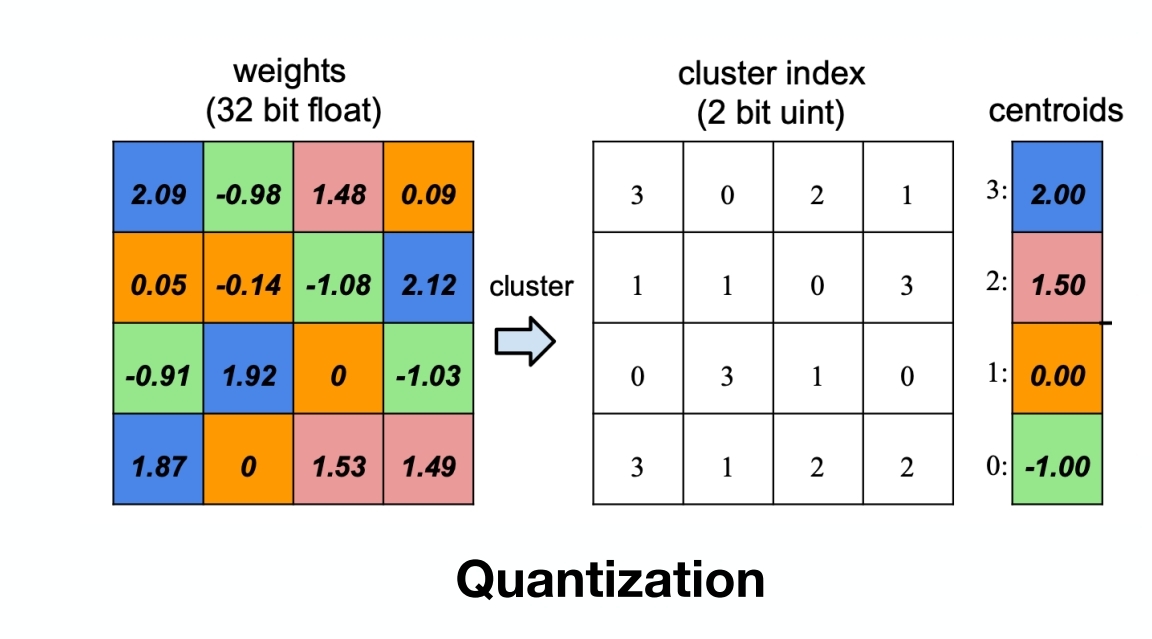

这里说GGUF用的K均值聚类来做的量化,下面是一个通用的idea(不代表GGUF就是这么做的),其实就是一种分层聚类,还是数值型的,很浅然:

代码实现:

import numpy as np

from sklearn.cluster import KMeans

# 原始权重矩阵

weights = np.array([

[2.09, -0.98, 1.48, 0.09],

[0.05, -0.14, -1.08, 2.12],

[-0.91, 1.92, 0, -1.03],

[1.87, 0, 1.53, 1.49]

])

# K-means聚类

kmeans = KMeans(n_clusters=4)

kmeans.fit(weights.reshape(-1, 1))

cluster_indices = kmeans.predict(weights.reshape(-1, 1)).reshape(weights.shape)

centroids = kmeans.cluster_centers_.flatten()

# 根据质心值排序

sorted_indices = np.argsort(centroids)

sorted_centroids = centroids[sorted_indices]

# 创建索引映射

index_map = {old_idx: new_idx for new_idx, old_idx in enumerate(sorted_indices)}

# 更新量化索引矩阵

new_cluster_indices = np.vectorize(index_map.get)(cluster_indices)

print("重新排序后的量化索引矩阵:\n", new_cluster_indices)

print("重新排序后的质心值:\n", sorted_centroids)

"""

重新排序后的量化索引矩阵:

[[3 0 2 1]

[1 1 0 3]

[0 3 1 0]

[3 1 2 2]]

重新排序后的质心值:

[-1. 0. 1.5 2. ]

"""

使用GGUF进行推理优化:(建议用llama.cpp,否则容易失败)

del tokenizer, model, pipe

# Empty VRAM cache

import torch

import gc

gc.collect()

torch.cuda.empty_cache()

from ctransformers import AutoModelForCausalLM

from transformers import AutoTokenizer, pipeline

# Load LLM and Tokenizer

# Use `gpu_layers` to specify how many layers will be offloaded to the GPU.

model = AutoModelForCausalLM.from_pretrained(

"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF",

model_file="Meta-Llama-3-8B-Instruct.Q4_K_M.gguf",

# model_type="llama",

gpu_layers=20, hf=True

)

tokenizer = AutoTokenizer.from_pretrained(

"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF", use_fast=True

)

# Create a pipeline

pipe = pipeline(model=model, tokenizer=tokenizer, task='text-generation')

AWQ

A new format on the block is AWQ (Activation-aware Weight Quantization) which is a quantization method similar to GPTQ. There are several differences between AWQ and GPTQ as methods but the most important one is that AWQ assumes that not all weights are equally important for an LLM’s performance.

In other words, there is a small fraction of weights that will be skipped during quantization which helps with the quantization loss.

As a result, their paper mentions a significant speed-up compared to GPTQ whilst keeping similar, and sometimes even better, performance.

下面使用vllm框架进行部署:

from vllm import LLM, SamplingParams

# Load the LLM

sampling_params = SamplingParams(temperature=0.0, top_p=1.0, max_tokens=256)

llm = LLM(

model="casperhansen/llama-3-8b-instruct-awq",

quantization='awq',

dtype='half',

gpu_memory_utilization=.95,

max_model_len=4096

)

tokenizer = AutoTokenizer.from_pretrained("casperhansen/llama-3-8b-instruct-awq")

# See https://huggingface.co/docs/transformers/main/en/chat_templating

messages = [

{

"role": "system",

"content": "You are a friendly chatbot.",

},

{

"role": "user",

"content": "Tell me a funny joke about Large Language Models."

},

]

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

print(prompt)

# Generate output based on the input prompt and sampling parameters

output = llm.generate(prompt, sampling_params)

print(output[0].outputs[0].text)

2 [LLMs inference] quantization 量化整体介绍(bitsandbytes、GPTQ、GGUF、AWQ)

hf transformers 中的 KV cache

import os

os.environ['http_proxy'] = 'http://127.0.0.1:7890'

os.environ['https_proxy'] = 'http://127.0.0.1:7890'

import torch

from transformers import GPT2LMHeadModel, GPT2Tokenizer

# 加载预训练模型和分词器

model = GPT2LMHeadModel.from_pretrained('gpt2')

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# 编码初始输入

input_ids = tokenizer.encode("Hello, my name is", return_tensors='pt')

# batch_size, seq_len

input_ids, input_ids.shape

# (tensor([[15496, 11, 616, 1438, 318]]), torch.Size([1, 5]))

第一步生成

2 × batch_size × seq_len × n layers × d m o d e l × p r e c i s o n 2\times \text{batch\_size}\times \text{seq\_len}\times n_{\text{layers}}\times d_{model}\times precison 2×batch_size×seq_len×nlayers×dmodel×precison

past_key_values = (

(key_layer_1, value_layer_1),

(key_layer_2, value_layer_2),

...

(key_layer_N, value_layer_N)

)

{key/value}_layer_ishape- (batch_size, num_heads, seq_length, head_dim)

- d_model = num_heads * head_dim

具体实现:

# 第一步生成

output = model(input_ids, use_cache=True)

next_token_logits = output.logits[:, -1, :] # 获取最后一个时间步的 logits

past_key_values = output.past_key_values # 缓存键和值

output.logits.shape # torch.Size([1, 5, 50257])

len(past_key_values), past_key_values[0][0].shape # (12, torch.Size([1, 12, 5, 64]))

看一下模型的配置:

# "vocab_size": 50257

# "n_layer": 12,

# "n_head": 12,

# "n_embd": 768,

model.config

GPT2Config {

"_name_or_path": "gpt2",

"activation_function": "gelu_new",

"architectures": [

"GPT2LMHeadModel"

],

"attn_pdrop": 0.1,

"bos_token_id": 50256,

"embd_pdrop": 0.1,

"eos_token_id": 50256,

"initializer_range": 0.02,

"layer_norm_epsilon": 1e-05,

"model_type": "gpt2",

"n_ctx": 1024,

"n_embd": 768,

"n_head": 12,

"n_inner": null,

"n_layer": 12,

"n_positions": 1024,

"reorder_and_upcast_attn": false,

"resid_pdrop": 0.1,

"scale_attn_by_inverse_layer_idx": false,

"scale_attn_weights": true,

"summary_activation": null,

"summary_first_dropout": 0.1,

"summary_proj_to_labels": true,

"summary_type": "cls_index",

"summary_use_proj": true,

"task_specific_params": {

"text-generation": {

"do_sample": true,

"max_length": 50

}

},

"transformers_version": "4.45.0.dev0",

"use_cache": true,

"vocab_size": 50257

}

然后采样下一个token:

raw_past_key_values = output.past_key_values

raw_past_key_values[0][0].shape # (1, 12, 5, 64)

# 采样下一个令牌(例如取最大概率的令牌)

next_token = torch.argmax(next_token_logits, dim=-1).unsqueeze(-1)

# 第二步生成,使用缓存

output = model(next_token, past_key_values=past_key_values, use_cache=True)

next_token_logits = output.logits[:, -1, :]

past_key_values = output.past_key_values

# 重复上述步骤,直到生成结束

可以检验一下结果是否正确:

past_key_values[0][0].shape # torch.Size([1, 12, 6, 64])

raw_past_key_values[0][0].shape, past_key_values[0][0].shape # (torch.Size([1, 12, 5, 64]), torch.Size([1, 12, 6, 64]))

torch.allclose(past_key_values[0][0][:, :, :5, :], raw_past_key_values[0][0]) # True

对比一下使用kvcache和不使用kvcache的区别:

model_name = 'gpt2'

model = GPT2LMHeadModel.from_pretrained(model_name)

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# 将模型设置为评估模式

model.eval()

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

模型结构:

GPT2LMHeadModel(

(transformer): GPT2Model(

(wte): Embedding(50257, 768)

(wpe): Embedding(1024, 768)

(drop): Dropout(p=0.1, inplace=False)

(h): ModuleList(

(0-11): 12 x GPT2Block(

(ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(attn): GPT2SdpaAttention(

(c_attn): Conv1D(nf=2304, nx=768)

(c_proj): Conv1D(nf=768, nx=768)

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D(nf=3072, nx=768)

(c_proj): Conv1D(nf=768, nx=3072)

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

)

(ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

)

(lm_head): Linear(in_features=768, out_features=50257, bias=False)

)

测试用例

input_text = "Once upon a time"

input_ids = tokenizer.encode(input_text, return_tensors='pt').to(device) # 形状: (1, seq_length)

max_length = 30 # 生成的最大长度,包括输入长度

import os

os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

# 使用 model.generate 生成文本(贪婪搜索)

greedy_output = model.generate(

input_ids,

max_length=max_length,

num_return_sequences=1,

do_sample=False # 关闭采样,使用贪婪搜索

)

greedy_text = tokenizer.decode(greedy_output[0], skip_special_tokens=True)

print("\n=== 使用 model.generate(贪婪搜索)生成的文本 ===")

print(greedy_text)

输出结果:

=== 使用 model.generate(贪婪搜索)生成的文本 ===

Once upon a time, the world was a place of great beauty and great danger. The world was a place of great danger, and the world was

然后我们可以手动逐步生成文本(贪心搜索):

# 手动逐步生成文本(贪婪搜索)

generated_tokens = input_ids

past_key_values = None

steps = max_length - input_ids.shape[1]

for step in range(steps):

if step == 0:

# 第一轮,传递整个输入

outputs = model(generated_tokens, use_cache=True)

else:

# 后续轮次,只传递最后一个 token

outputs = model(next_token, use_cache=True, past_key_values=past_key_values)

# 更新 past_key_values

past_key_values = outputs.past_key_values

# 获取 logits 并选择下一个 token

next_token_logits = outputs.logits[:, -1, :] # 取最后一个时间步的 logits

# 选择概率最高的 token(贪婪搜索)

next_token = torch.argmax(next_token_logits, dim=-1).unsqueeze(-1) # 形状: (batch_size, 1)

# 将新生成的 token 添加到生成的序列中

generated_tokens = torch.cat((generated_tokens, next_token), dim=1)

# 解码生成的文本

greedy_loop_text = tokenizer.decode(generated_tokens[0], skip_special_tokens=True)

print("\n=== 使用循环和 past_key_values(贪婪搜索)逐步生成的文本 ===")

print(greedy_loop_text)

输出结果:

=== 使用循环和 past_key_values(贪婪搜索)逐步生成的文本 ===

Once upon a time, the world was a place of great beauty and great danger. The world was a place of great danger, and the world was

3 [LLMs serving] openrouter & vllm host LLM 推理服务,openai api 兼容

openrouter

A unified interface for LLMs。大模型的中间商,也可能是中间商的中间商;

- 不是所有的模型都可以方便地本地部署 (*2 gpu memory)

- qwen/qwen-2.5-72b-instruct

- deepseek-v3;

- llama3.1-405b;

- 远端api快速验证,evaluate,科研或者工程;

- 虚拟信用卡

- https://bewildcard.com/i/CHUNHUI3

- 关于一个模型的不同 provider 的路由策略(provider routing)

- https://openrouter.ai/docs/provider-routing

from openai import OpenAI

from dotenv import load_dotenv, find_dotenv

import os

assert load_dotenv(find_dotenv())

client = OpenAI(

base_url="https://openrouter.ai/api/v1",

api_key=os.getenv('OPENROUTER_API_KEY'),

)

completion = client.chat.completions.create(

model="deepseek/deepseek-chat",

messages=[

{

"role": "user",

"content": "what model are you?"

}

]

)

print(completion.choices[0].message.content)

"""

I am an instance of OpenAI's language model, specifically based on the GPT-4 architecture. My design allows me to understand and generate human-like text based on the input I receive. I can assist with a wide range of tasks, from answering questions and providing explanations to generating creative content and offering advice. Let me know how I can help you today!

"""

vllm

- https://docs.vllm.ai/en/latest/getting_started/quickstart.html

- vllm

- easy, fast, cheap llm serving

- serving/deploying/hosting

- fastapi-based (uvicorn) server for online serving

- OpenAI-Compatible Server

- finish_reason

- https://platform.openai.com/docs/api-reference/chat/object

- length: if the maximum number of tokens specified in the request was reached

- stop: which means the API returned the full chat completion generated by the model without running into any limits.

- This will be stop if the model hit a natural stop point or a provided stop sequence,

快速上手

from vllm import LLM

prompts = ['Hello, my name is ', 'The captail of China is ']

llm = LLM(model='meta-llama/Meta-Llama-3.1-8B', max_model_len=4096)

outputs = llm.generate(prompts)

print(outputs[0].outputs[0].text)

print(outputs[1].outputs[0].text)

- the current vLLM instance can use total_gpu_memory (23.65GiB) x gpu_memory_utilization (0.90) = 21.28GiB

- model weights take 14.99GiB;

- non_torch_memory takes 0.09GiB;

- PyTorch activation peak memory takes 1.20GiB;

- the rest of the memory reserved for KV Cache is 5.01GiB.

- the current vLLM instance can use total_gpu_memory (23.65GiB) x gpu_memory_utilization (0.95) = 22.47GiB

- model weights take 14.99GiB;

- non_torch_memory takes 0.09GiB;

- PyTorch activation peak memory takes 1.20GiB;

- the rest of the memory reserved for KV Cache is 6.19GiB.

- the current vLLM instance can use total_gpu_memory (23.65GiB) x gpu_memory_utilization (0.95) = 22.47GiB

- model weights take 7.51GiB;

- non_torch_memory takes 0.28GiB;

- PyTorch activation peak memory takes 1.20GiB;

- the rest of the memory reserved for KV Cache is 13.47GiB.

OpenAI-Compatible Server

$ vllm serve meta-llama/Llama-3.1-8B-Instruct --max_model_len 8192

$ vllm serve meta-llama/Llama-3.1-8B-Instruct --dtype auto --api-key keytest --gpu_memory_utilization 0.95 --max_model_len 8192

$ nohup vllm serve meta-llama/Llama-3.1-8B-Instruct --dtype auto --api-key keytest --gpu_memory_utilization 0.95 --max_model_len 8192 &

$ python -m vllm.entrypoints.openai.api_server \

--model meta-llama/Llama-3.1-8B-Instruct \

--max_model_len 8192

http://localhost:8000/- 默认参数

- ip: localhost

- port: 8000

- dtype: auto

- device: auto

- api_key: None

- gpu_memory_utilization: 0.9

- max_model_len: None

4 [LLMs inference] vllm & sglang offline inference,tensor parallel vs. data parall

video: https://www.bilibili.com/video/BV1jGXHYfEdx

code: https://github.com/chunhuizhang/llm_rl/tree/main/tutorials/infra/inference/scripts

dynamic batch to inference

- vllm/sglang is dynamic batch to inference,

- Validation datasets are sent to inference engines as a whole batch, which will schedule the memory themselves.

目前OpenRouter也很好用,但VLLM可能是受众面最广的

Parameters

vllm的一些参数

LLM--max-model-len: Model context length. If unspecified, will be automatically derived from the model config.max_seq_lenQwen/Qwen2.5-7B-Instruct-1M(config.json,max_position_embeddings: 1010000)

max_num_seqs=256, # 控制批处理中的最大序列数(batch size)max_num_batched_tokens=4096, # 控制批处理中的最大token数

SamplingParamsmax_tokens: Maximum number of tokens to generate per output sequence.stop,stop_token_idsstop=stop_condition

llm = LLM('Qwen/Qwen2.5-7B-Instruct')

llm.llm_engine.scheduler_config.max_model_len # 32768

llm.llm_engine.scheduler_config.max_num_seqs # 256

llm.llm_engine.scheduler_config.max_num_batched_tokens # 32768

sglang的参考:

- https://docs.sglang.ai/backend/server_arguments.html

- https://docs.sglang.ai/backend/offline_engine_api.html

vllm推理的示例脚本:

import os

from tqdm import tqdm

import torch

from transformers import AutoTokenizer

from vllm import LLM, SamplingParams

os.environ["NCCL_IGNORE_DISABLED_P2P"] = "1"

os.environ["TOKENIZERS_PARALLELISM"] = "true"

def generate(question_list,model_path):

llm = LLM(

model=model_path,

trust_remote_code=True,

tensor_parallel_size=torch.cuda.device_count(),

gpu_memory_utilization=0.90,

)

sampling_params = SamplingParams(max_tokens=8192,

temperature=0.0,

n=1)

outputs = llm.generate(question_list, sampling_params, use_tqdm=True)

completions = [[output.text for output in output_item.outputs] for output_item in outputs]

return completions

def make_conv_hf(question, tokenizer):

# for math problem

content = question + "\n\nPresent the answer in LaTex format: \\boxed{Your answer}"

# for code problem

# content = question + "\n\nWrite Python code to solve the problem. Present the code in \n```python\nYour code\n```\nat the end."

msg = [

{"role": "user", "content": content}

]

chat = tokenizer.apply_chat_template(msg, tokenize=False, add_generation_prompt=True)

return chat

def run():

model_path = "Qwen/Qwen2.5-7B-Instruct"

all_problems = [

"which number is larger? 9.11 or 9.9?"

]

tokenizer = AutoTokenizer.from_pretrained(model_path)

completions = generate([make_conv_hf(problem_data, tokenizer) for problem_data in all_problems],model_path)

print(completions)

if __name__ == "__main__":

run()

推理测试:

-

baseline vs. new model

- qwen2.5-7B-Instruct on gsm8k test dataset

- report 85.4%;

- https://arxiv.org/pdf/2412.15115

-

new model 训练用数据集,及超参等;

-

evaluate metrics

- accuracy

-

实验前,列好表,留好空,跑实验就是填空的过程;

-

Qwen/Qwen2.5-7B-Instructongsm8ktest set, on a dual 4090s:

# 单卡

python vllm_tp_dp.py --mode dp --num_gpus 1

# dp = 2

python vllm_tp_dp.py --mode dp --num_gpus 2

# tp = 2

python vllm_tp_dp.py --mode tp --num_gpus 2

# 单卡

python sglang_tp_dp.py --mode dp --num_gpus 1

# dp = 2

python sglang_tp_dp.py --mode dp --num_gpus 2

# tp = 2

python sglang_tp_dp.py --mode tp --num_gpus 2

| time(s) | accuracy | ||

|---|---|---|---|

| vllm | 单卡 | 115.24 | 1034.0/1319 = 0.7839 |

| vllm | dp=2 | 80.88 | 1031.0/1319=0.7817 |

| vllm | tp=2 | 132.45 | 1034.0/1319 = 0.7839 |

| sglang | 单卡 | 120.17 | 1062.0/1319 = 0.8051 |

| sglang | dp=2 | 82.96 | 1069.0/1319 = 0.8105 |

| sglang | tp=2 | 91.41 | 1058.0/1319 = 0.8021 |

- dp & tp

- dp size: 就是模型复制的次数(model replicas)

- num_gpus = dp_size x tp_size

- vllm

- https://docs.vllm.ai/en/latest/serving/offline_inference.html

- dual 4090s (不支持 p2p access)

# GPU blocks: 25626, # CPU blocks: 9362

INFO 03-21 20:07:55 distributed_gpu_executor.py:57] # GPU blocks: 25626, # CPU blocks: 9362 INFO 03-21 20:07:55 distributed_gpu_executor.py:61] Maximum concurrency for 32768 tokens per request: 12.51x-- 25626 * 16 / 32768 = 12.51x

- sglang

python3 -m sglang.check_env- https://docs.sglang.ai/backend/offline_engine_api.html

- dp

- sglang::scheduler_DP0_TP0

- sglang::scheduler_DP1_TP0

- tp

- sglang::scheduler_TP0

- sglang::scheduler_TP1

vllm对于kv-cache的处理:

- vllm管理空间的级别是block级别,gpu-block,比如一个block可以生成16个token,那么最多浪费15个token

- 测试中很容易发现vllm肯定是有省内存的操作的,直接调用模型进行forward很多时候都会OOM

gsm8k的案例

GSM8K的数据脚本(gsm.py):

import re

import os

import datasets

def extract_raw_solution(solution_str):

solution = re.search("#### (\\-?[0-9\\.\\,]+)", solution_str)

assert solution is not None

final_solution = solution.group(0)

final_solution = final_solution.split('#### ')[1].replace(',', '')

return final_solution

def make_map_fn(split):

def process_fn(example, idx):

question_raw = example.pop('question')

question = question_raw + ' ' + instruction_following

answer_raw = example.pop('answer')

solution = extract_raw_solution(answer_raw)

data = {

"data_source": data_source,

"prompt": [{

"role": "user",

"content": question,

}],

"ability": "math",

"reward_model": {

"style": "rule",

"ground_truth": solution

},

"extra_info": {

'split': split,

'index': idx,

'answer': answer_raw,

"question": question_raw,

}

}

return data

return process_fn

def extract_solution(solution_str, method='strict'):

assert method in ['strict', 'flexible']

if method == 'strict':

# this also tests the formatting of the model

solution = re.search("#### (\\-?[0-9\\.\\,]+)", solution_str)

if solution is None:

final_answer = None

else:

final_answer = solution.group(0)

final_answer = final_answer.split('#### ')[1].replace(',', '').replace('$', '')

elif method == 'flexible':

answer = re.findall("(\\-?[0-9\\.\\,]+)", solution_str)

final_answer = None

if len(answer) == 0:

# no reward is there is no answer

pass

else:

invalid_str = ['', '.']

# find the last number that is not '.'

for final_answer in reversed(answer):

if final_answer not in invalid_str:

break

return final_answer

def compute_score(solution_str, ground_truth, method='strict', format_score=0., score=1.):

"""The scoring function for GSM8k.

Reference: Trung, Luong, et al. "Reft: Reasoning with reinforced fine-tuning." Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2024.

Args:

solution_str: the solution text

ground_truth: the ground truth

method: the method to extract the solution, choices are 'strict' and 'flexible'

format_score: the score for the format

score: the score for the correct answer

"""

answer = extract_solution(solution_str=solution_str, method=method)

if answer is None:

return 0

else:

if answer == ground_truth:

return score

return format_score

if __name__ == "__main__":

data_source = 'openai/gsm8k'

dataset = datasets.load_dataset(data_source, 'main')

# train_dataset = dataset['train']

test_dataset = dataset['test']

instruction_following = "Let's think step by step and output the final answer after \"####\"."

test_dataset = test_dataset.map(function=make_map_fn('test'), with_indices=True)

test_dataset.to_parquet(os.path.join('./data', 'gsm8k_test.parquet'))

使用sglang推理gsm8k的示例:

import time

import sglang as sgl

import argparse

from datasets import load_dataset

from transformers import AutoTokenizer

from gsm8k import extract_solution, compute_score

import os

os.environ["NCCL_IGNORE_DISABLED_P2P"] = '1'

def generate(llm, prompts, args=None):

sampling_params = {

"max_new_tokens": args.max_tokens,

"temperature": args.temperature,

}

outputs = llm.generate(prompts, sampling_params)

responses = [output['text'] for output in outputs]

return responses

if __name__ == "__main__":

args = argparse.ArgumentParser()

args.add_argument("--model_name", type=str, default="Qwen/Qwen2.5-7B-Instruct")

args.add_argument("--num_gpus", type=int, default=2)

# tp or dp

args.add_argument("--mode", type=str, default="tp")

args.add_argument("--data_path", type=str, default="./data/gsm8k_test.parquet")

args.add_argument("--temperature", type=float, default=0.0)

args.add_argument("--max_tokens", type=int, default=2048)

args.add_argument("--max_model_len", type=int, default=4096)

args.add_argument("--n", type=int, default=1)

args.add_argument("--extract_method", type=str, default="strict")

args.add_argument("--num_prompts", type=int, default=-1)

args = args.parse_args()

tokenizer = AutoTokenizer.from_pretrained(args.model_name)

test_parquet = load_dataset('parquet', data_files=args.data_path)['train']

prompts = []

for example in test_parquet:

prompt = [example['prompt'][0]]

prompt = tokenizer.apply_chat_template(prompt, tokenize=False, add_generation_prompt=True)

prompts.append(prompt)

if args.num_prompts != -1:

prompts = prompts[:args.num_prompts]

t0 = time.time()

if args.mode == "tp":

llm = sgl.Engine(model_path=args.model_name,

dp_size=1,

tp_size=args.num_gpus,

mem_fraction_static=0.8,

enable_p2p_check=True)

elif args.mode == "dp":

llm = sgl.Engine(model_path=args.model_name,

dp_size=args.num_gpus,

tp_size=1)

all_responses = generate(llm, prompts, args=args)

t1 = time.time()

total_score = 0

for example, response in zip(test_parquet, all_responses):

gt_answer = example['reward_model']['ground_truth']

model_resp = response

model_answer = extract_solution(model_resp, args.extract_method)

score = compute_score(model_resp, gt_answer, args.extract_method)

print(f"Example: {example['prompt'][0]}")

print(f"Response: {model_resp}")

print(f"Solution: {model_answer}")

print(f"Score: {score}")

print("-"*100)

total_score += score

print(f"accuray: {total_score}/{len(prompts)} = {total_score / len(prompts)}")

print(f"Time taken of {args.mode} mode: {t1 - t0} seconds")

这个是vllm推理gsm8k的示例:

import argparse

import os

import pandas as pd

import torch

from vllm import LLM, SamplingParams

from vllm.utils import get_open_port

from datasets import load_dataset

from transformers import AutoTokenizer

from multiprocessing import Process

import re

from multiprocessing import Queue

import time

from gsm8k import extract_solution, compute_score

def generate(llm, prompts, use_tqdm=False, args=None):

sampling_params = SamplingParams(max_tokens=args.max_tokens,

temperature=args.temperature,

n=args.n)

outputs = llm.generate(prompts, sampling_params, use_tqdm=use_tqdm)

responses = [[output.text for output in output_item.outputs] for output_item in outputs]

return responses

def tp_generate(prompts, args):

llm = LLM(

model=args.model_name,

trust_remote_code=True,

tensor_parallel_size=args.num_gpus,

max_model_len=args.max_model_len,

)

responses = generate(llm, prompts, use_tqdm=True, args=args)

return responses

def sub_dp(prompts, DP_size, dp_rank, TP_size, args, results_queue):

os.environ["VLLM_DP_RANK"] = str(dp_rank)

os.environ["VLLM_DP_SIZE"] = str(DP_size)

os.environ["VLLM_DP_MASTER_IP"] = args.dp_master_ip

os.environ["VLLM_DP_MASTER_PORT"] = str(args.dp_master_port)

# tp_size = 1:

# dp_rank = 0: 0;

# dp_rank = 1: 1;

# tp_size = 2:

# dp_rank = 0: 0, 1;

# dp_rank = 1: 2, 3;

# dp size = # gpus / tp size

os.environ["CUDA_VISIBLE_DEVICES"] = ",".join(

str(i) for i in range(dp_rank * TP_size, (dp_rank + 1) * TP_size))

promts_per_rank = len(prompts) // DP_size

start = dp_rank * promts_per_rank

end = start + promts_per_rank

prompts = prompts[start:end]

if len(prompts) == 0:

prompts = ["Placeholder"]

print(f"DP rank {dp_rank} needs to process {len(prompts)} prompts")

llm = LLM(model=args.model_name,

trust_remote_code=True,

max_model_len=args.max_model_len,

tensor_parallel_size=TP_size)

responses = generate(llm, prompts, use_tqdm=False, args=args)

print(f"DP rank {dp_rank} finished processing {len(responses)} prompts")

results_queue.put((dp_rank, start, end, responses))

print(f'results queue size: {results_queue.qsize()}')

return responses

def dp_generate(prompts, args):

DP_size = args.num_gpus

TP_size = 1

procs = []

results_queue = Queue()

for i in range(DP_size):

proc = Process(target=sub_dp, args=(prompts, DP_size, i, TP_size, args, results_queue))

proc.start()

procs.append(proc)

all_results = []

for _ in range(DP_size):

dp_rank, start, end, responses = results_queue.get()

all_results.append((dp_rank, start, end, responses))

for proc in procs:

proc.join()

all_results.sort(key=lambda x: x[0]) # 按 dp_rank 排序

all_responses = []

for _, start, end, responses in all_results:

if responses and responses[0][0] != "Placeholder":

all_responses.extend(responses)

return all_responses

if __name__ == "__main__":

args = argparse.ArgumentParser()

args.add_argument("--model_name", type=str, default="Qwen/Qwen2.5-7B-Instruct")

args.add_argument("--num_gpus", type=int, default=2)

# tp or dp

args.add_argument("--mode", type=str, default="tp")

args.add_argument("--data_path", type=str, default="./data/gsm8k_test.parquet")

args.add_argument("--temperature", type=float, default=0.0)

args.add_argument("--max_tokens", type=int, default=8192)

args.add_argument("--max_model_len", type=int, default=4096)

args.add_argument("--n", type=int, default=1)

args.add_argument("--dp_master_ip", type=str, default="127.0.0.1")

args.add_argument("--dp_master_port", type=int, default=get_open_port())

args.add_argument("--extract_method", type=str, default="strict")

args.add_argument("--num_prompts", type=int, default=-1)

args = args.parse_args()

tokenizer = AutoTokenizer.from_pretrained(args.model_name)

test_parquet = load_dataset('parquet', data_files=args.data_path)['train']

prompts = []

for example in test_parquet:

prompt = [example['prompt'][0]]

prompt = tokenizer.apply_chat_template(prompt, tokenize=False, add_generation_prompt=True)

prompts.append(prompt)

if args.num_prompts != -1:

prompts = prompts[:args.num_prompts]

t0 = time.time()

if args.mode == "tp":

all_responses = tp_generate(prompts, args)

elif args.mode == "dp":

all_responses = dp_generate(prompts, args)

t1 = time.time()

total_score = 0

for example, response in zip(test_parquet, all_responses):

gt_answer = example['reward_model']['ground_truth']

model_resp = response[0]

model_answer = extract_solution(model_resp, args.extract_method)

score = compute_score(model_resp, gt_answer, args.extract_method)

print(f"Example: {example['prompt'][0]}")

print(f"Response: {model_resp}")

print(f"Solution: {model_answer}")

print(f"Score: {score}")

print("-"*100)

total_score += score

print(f"accuray: {total_score}/{len(prompts)} = {total_score / len(prompts)}")

print(f"Time taken of {args.mode} mode: {t1 - t0} seconds")

603

603

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?