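There are many accounts online of how a deep belief network (DBN) should be implemented. They boil down to roughly five:

1. Stack several RBMs and use logistic regression at the end for classification.
2. Stack RBMs, then train the result as a BP neural network with gradient descent to obtain the final weights.
3. Stack several RBMs and add label (flag) inputs at the last layer.
4. Stack several RBMs and train them with the wake-sleep algorithm.
5. Stack several RBMs in which each RBM has different upward and downward weights, and for every RBM other than the first and last the upward weights are twice the downward weights.

With so many versions and no time to verify each one, I implemented approach 1. Approach 5 seems to have the strongest theoretical grounding, though, so I will implement it later when I have time. As usual, the test code comes first: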

```cpp
#include <algorithm>   // std::shuffle
#include <cstdint>     // int32_t
#include <cstdio>      // printf
#include <cstring>     // memset
#include <iomanip>
#include <iostream>
#include <random>
#include <string>
#include <vector>

#include <conio.h>     // _getch (Windows only)

#include "dbn.hpp"

// One MNIST sample: the 28x28 image, its one-hot label, and the digit itself.
struct train_data
{
    mat<28, 28, double> mt_image;
    mat<10, 1, double> mt_label;
    int i_num;
};

// Copy 28*28 raw pixel bytes into the matrix, row by row.
void assign_mat(mat<28, 28, double>& mt, const unsigned char* sz)
{
    int i_sz_cnt = 0;
    for (int r = 0; r < 28; ++r)
    {
        for (int c = 0; c < 28; ++c)
        {
            mt.get(r, c) = sz[i_sz_cnt++];
        }
    }
}

int main(int argc, char** argv)
{
    unsigned char sz_image_buf[28 * 28];
    std::vector<train_data> vec_train_data;

    // Load the MNIST training images; the file header is read as big-endian integers.
    ht_memory mry_train_images(ht_memory::big_endian);
    mry_train_images.read_file("./data/train-images.idx3-ubyte");
    int32_t i_image_magic_num = 0, i_image_num = 0, i_image_col_num = 0, i_image_row_num = 0;
    mry_train_images >> i_image_magic_num >> i_image_num >> i_image_row_num >> i_image_col_num;
    printf("magic num:%d | image num:%d | image_row:%d | image_col:%d\r\n"
        , i_image_magic_num, i_image_num, i_image_row_num, i_image_col_num);

    // Load the matching labels.
    ht_memory mry_train_labels(ht_memory::big_endian);
    mry_train_labels.read_file("./data/train-labels.idx1-ubyte");
    int32_t i_label_magic_num = 0, i_label_num = 0;
    mry_train_labels >> i_label_magic_num >> i_label_num;

    for (int i = 0; i < i_image_num; ++i)
    {
        memset(sz_image_buf, 0, sizeof(sz_image_buf));
        train_data td;
        unsigned char uc_label = 0;
        mry_train_images.read((char*)sz_image_buf, sizeof(sz_image_buf));
        assign_mat(td.mt_image, sz_image_buf);
        td.mt_image = td.mt_image / 256.;          // scale pixels into [0, 1)
        mry_train_labels >> uc_label;
        td.i_num = uc_label;
        td.mt_label.get((int)uc_label, 0) = 1;     // one-hot label
        vec_train_data.push_back(td);
    }

    std::random_device rd;
    std::mt19937 rng(rd());
    std::shuffle(vec_train_data.begin(), vec_train_data.end(), rng);

    using dbn_type = dbn<double, 28 * 28, 10, 10>;
    using mat_type = mat<28 * 28, 1, double>;
    using ret_type = dbn_type::ret_type;
    dbn_type dbn_net;

    // Train on the first 10 shuffled samples only.
    std::vector<mat_type> vec_input;
    std::vector<ret_type> vec_expect;
    for (int i = 0; i < 10; ++i)
    {
        vec_input.push_back(vec_train_data[i].mt_image.one_col());
        vec_expect.push_back(vec_train_data[i].mt_label.one_col());
    }
    dbn_net.pretrain(vec_input, 10000);
    dbn_net.finetune(vec_expect, 10000);

    // Interactive check: type a sample index, compare prediction and label.
    while (1)
    {
        std::string str_test_num;
        std::cout << "#";
        std::getline(std::cin, str_test_num);
        int i_test_num = std::stoi(str_test_num);
        if (i_test_num < 0 || i_test_num >= (int)vec_train_data.size())
            continue;                              // ignore out-of-range indices
        auto pred = dbn_net.forward(vec_train_data[i_test_num].mt_image.one_col());
        pred.print();
        vec_train_data[i_test_num].mt_label.one_col().print();
        if ('q' == _getch())break;                 // press q to quit
    }
    return 0;
}
```
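The test program relies on `ht_memory` in big-endian mode to pull the four header integers out of the MNIST image file. That class is not shown here, so as a rough illustration of what the header read amounts to, here is a minimal sketch that works on a plain byte buffer. The names `read_be32`, `idx3_header` and `parse_idx3_header` are my own placeholders and are not part of the author's code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Read one big-endian 32-bit unsigned integer at buf[pos] and advance pos by 4.
inline std::uint32_t read_be32(const std::vector<unsigned char>& buf, std::size_t& pos)
{
    assert(pos + 4 <= buf.size());
    const std::uint32_t v = (std::uint32_t(buf[pos])     << 24)
                          | (std::uint32_t(buf[pos + 1]) << 16)
                          | (std::uint32_t(buf[pos + 2]) << 8)
                          |  std::uint32_t(buf[pos + 3]);
    pos += 4;
    return v;
}

// Header of an IDX3 image file: magic number, image count, rows, columns.
struct idx3_header
{
    std::uint32_t magic;
    std::uint32_t count;
    std::uint32_t rows;
    std::uint32_t cols;
};

// Parse the first 16 bytes of the file in the same order the test program reads them.
inline idx3_header parse_idx3_header(const std::vector<unsigned char>& buf)
{
    std::size_t pos = 0;
    idx3_header h;
    h.magic = read_be32(buf, pos);
    h.count = read_be32(buf, pos);
    h.rows  = read_be32(buf, pos);
    h.cols  = read_be32(buf, pos);
    return h;
}
```

For the MNIST training images the header bytes are `00 00 08 03`, `00 00 EA 60`, `00 00 00 1C`, `00 00 00 1C`, which decode to magic number 2051, 60000 images, 28 rows and 28 columns.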

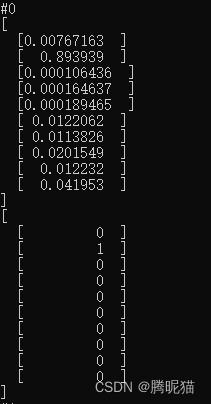

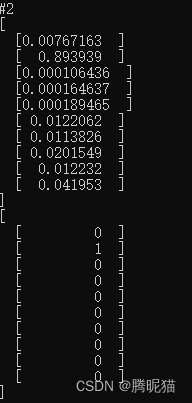

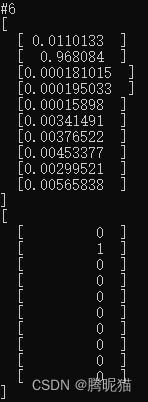

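This test program runs directly on the MNIST dataset. Here the DBN mainly acts as an associative memory. The screenshots above show the program's output: it recalls the correct result (though of course the results are not this good on every run).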
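The test program prints the 10x1 prediction next to the one-hot label and leaves the comparison to the eye. As a rough sketch of how that reading could be automated, the snippet below applies a numerically stable softmax to ten raw scores and picks the largest entry as the predicted digit. It uses `std::array` instead of the `mat` type, and `softmax10` and `argmax10` are hypothetical helper names, not functions from the author's library.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// Numerically stable softmax over 10 raw class scores.
inline std::array<double, 10> softmax10(const std::array<double, 10>& z)
{
    // Subtracting the maximum keeps exp() from overflowing.
    const double z_max = *std::max_element(z.begin(), z.end());
    std::array<double, 10> p{};
    double sum = 0.0;
    for (std::size_t i = 0; i < z.size(); ++i)
    {
        p[i] = std::exp(z[i] - z_max);
        sum += p[i];
    }
    assert(sum > 0.0);  // the largest score always contributes exp(0) = 1
    for (double& v : p)
        v /= sum;
    return p;
}

// Index of the largest probability, i.e. the predicted digit 0..9.
inline int argmax10(const std::array<double, 10>& p)
{
    return static_cast<int>(std::max_element(p.begin(), p.end()) - p.begin());
}
```

Comparing `argmax10` of the prediction with the stored digit (`i_num` in the test program) would then give a simple hit-or-miss check per sample.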

Below is the implementation: several stacked RBMs, followed by a BP neural network with a softmax activation that does the classification.

```cpp
#ifndef _DBN_HPP_
#define _DBN_HPP_

#include <vector>

#include "bp.hpp"
#include "restricked_boltzman_machine.hpp"

/*
    The main idea of the DBN: encode the input with RBMs, then pass the encoded
    data to a BP neural network for classification.
*/
template<typename val_t, int iv, int ih, int...is>
struct dbn
{
    restricked_boltzman_machine<iv, ih, val_t> rbm;   // RBM of this layer: iv visible, ih hidden units
    dbn<val_t, ih, is...> dbn_next;                   // the remaining layers
    using ret_type = typename dbn<val_t, ih, is...>::ret_type;

    void pretrain(const std::vector<mat<iv, 1, val_t> >& vec, const int& i_epochs = 100)
    {
        /* Train the current layer */
        for (int i = 0; i < i_epochs; ++i)
            for (auto itr = vec.begin(); itr != vec.end(); ++itr)
            {
                rbm.train(*itr);
            }
        /* Prepare the data for the next layer */
        std::vector<mat<ih, 1, val_t> > vec_hs;
        for (auto itr = vec.begin(); itr != vec.end(); ++itr)
        {
            vec_hs.push_back(rbm.forward(*itr));
        }
        /* Train the next layer on the hidden-layer outputs */
        dbn_next.pretrain(vec_hs, i_epochs);
    }

    /* Fine-tuning is passed down the chain to the following layers */
    void finetune(const std::vector<ret_type>& vec_expected, const int& i_epochs = 100)
    {
        dbn_next.finetune(vec_expected, i_epochs);
    }

    /* Encode with this layer's RBM, then let the remaining layers continue */
    auto forward(const mat<iv, 1, val_t>& v1)
    {
        return dbn_next.forward(rbm.forward(v1));
    }
};

template<typename val_t, int iv, int ih
```
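The listing breaks off just as a second template begins. The primary template recurses on the parameter pack `is...`, so something has to end that recursion. A common C++ pattern is a partial specialization that takes only the last two sizes. The following is a generic sketch of that pattern and not the author's code: `layer_chain`, `depth` and `out_size` are hypothetical names chosen for illustration, and the sketch only tracks layer sizes at compile time.

```cpp
#include <cassert>

// Primary template: one layer from iv to ih units, then recurse on the remaining sizes.
template<int iv, int ih, int... is>
struct layer_chain
{
    static constexpr int depth    = 1 + layer_chain<ih, is...>::depth;
    static constexpr int out_size = layer_chain<ih, is...>::out_size;
};

// Terminating partial specialization: only two sizes are left, so this is the last layer.
template<int iv, int ih>
struct layer_chain<iv, ih>
{
    static constexpr int depth    = 1;
    static constexpr int out_size = ih;
};

// Same size list as dbn<double, 28 * 28, 10, 10> in the test program.
static_assert(layer_chain<28 * 28, 10, 10>::depth == 2, "two layers expected");
static_assert(layer_chain<28 * 28, 10, 10>::out_size == 10, "10 outputs expected");
```

With the size list `28 * 28, 10, 10`, the primary template handles the 784-to-10 layer and the specialization handles the final 10-to-10 layer. In a structure like the `dbn` above, such a last stage would also be the natural place to define `ret_type` and the real `finetune`, but that part of the source is not available here.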
