网上关于DeepAR论文解读、模型介绍以及代码复现已经有很多优秀的文章了,这里总结一下核心要点。

我们平常用的最多的都是点预测,即预测下一时刻的商品销量、股票价格、交通流量等等,这些本质上都属于均值回归。如果我们想关注某些分位数的预测量,这时就可以结合分位数回归,只需要将模型的损失函数改为分位数损失即可,用pytorch实现如下,erros=真实值-预测值。

loss =torch.mean(torch.where(errors >= 0, quantile * errors, (1-quantile) * errors))如果想一次输出多个分位数,则可修改为下列代码:

losses=[]

for i, q in enumerate(quantile):

losses.append(torch.mean(torch.where(errors >= 0, q * errors, (1-q) * errors)))但是这样直接预测容易产生一个问题:分位数交叉,于是有了分布回归,即预测一个分布,例如预测一个高斯分布的参数:均值和标准差,然后根据这个分布采样一些数据点,基于采样的点再去计算分位数,就不会出现分位数交叉了,这也是这篇论文的核心思想。本文用的是LSTM模型。

DeepAR论文地址

https://linkinghub.elsevier.com/retrieve/pii/S0169207019301888

本文还有一个核心的点是采用缩放因子进行多重时间序列。这里先总结一下时间序列预测的类型:

按照变量个数可分为单变量预测(AR,MA,ARIMA模型,即没有特征,只有预测量这一列数据)和多变量预测(包含多个特征/输出);

按照预测步数可分为单步预测(只预测未来t时刻一个时刻的预测量)和多步预测(预测未来多个时刻的预测量);

按照时间序列数目多少可以划分为单重预测和多重预测,单重预测就好比我们只关注一个商品的需求变化,多重预测就是同时关注多个商品。因为每个商品之间的需求肯定有内在的联系,对于成千上万种商品我们不可能针对每一个商品单独训练一个时间序列预测模型,那样成本太大了,所以就有了多重预测,我们针对多个商品训练一个预测模型。

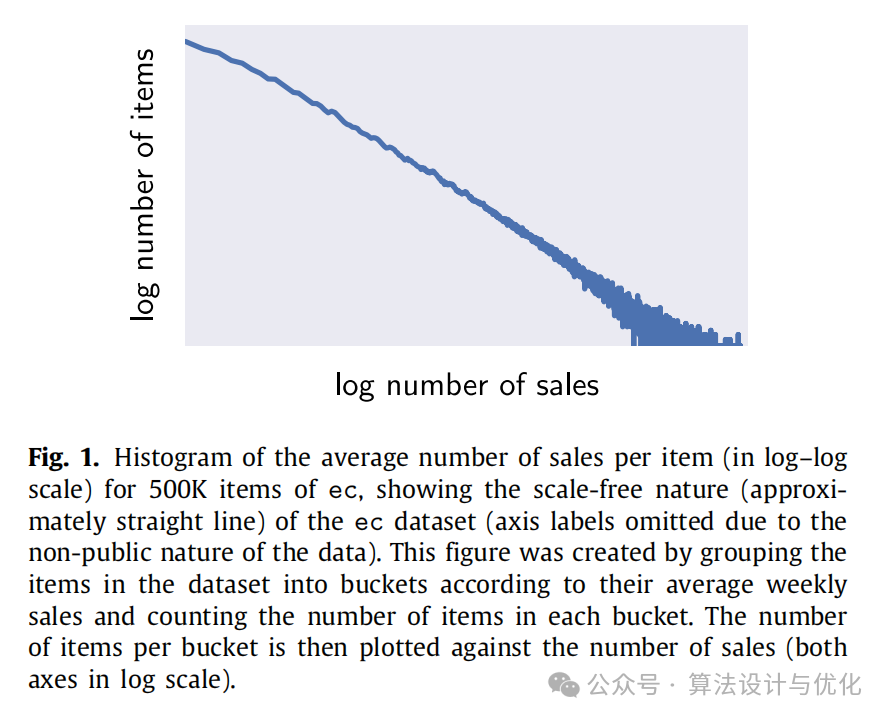

这时就会有一个问题,不同商品之间的销售量可能差别太大,如原文中的图1所示,横轴x为销售量,纵轴为销售量为x的商品数量,可以看到差别很大,你要是不做任何处理直接预测显然效果不好,作者提出了采用缩放因子对数据进行处理,即通过将自回归输入除以项目相关的尺度因子来解决这个问题,反过来,将尺度相关的似然参数乘以相同的因子。同时,作者采用了不均匀采样方式以避免模型不能很好的拟合大尺度小数量的时间序列。

下面我们来基于pytorch实现DeepAR,代码主要基于这位大佬的文章:

参考内容:

https://blog.csdn.net/cyj972628089/article/details/130836308

DeepAR代码实现

为了首先将代码跑通,我们只需要将第256行的代码改为自己的文件名,再修改258、259的reshape形状即可。

对于单重预测,(series_num,len,features_num)对应1,时间序列长度,特征数

对于多重预测,(series_num,len*num,features_num)对应1,时间序列长度*时间序列数量,特征数(这个就需要对输入和似然参数乘以缩放因子了,本代码中没有体现,读者可自行修改)

import torch

from torch import nn

import torch.nn.functional as F

from torch.optim import Adam

import numpy as np

import random

import matplotlib.pyplot as plt

from tqdm import tqdm

import pandas as pd

from progressbar import *

random.seed(2)

np.random.seed(2)

num_epoches = 100

lr = 1e-3

n_layers = 2

hidden_size = 50

embedding_size = 10 #将上一时刻的真实值编码为embedding_size长度

likelihood = "g"

num_obs_to_train = 9 #训练的历史窗口长度

seq_len = 3 #预测的未来窗口长度

show_plot = True

run_test = True

standard_scaler = True

log_scaler = False

mean_scaler = False

batch_size = 32

sample_size = 100

def train_test_split(X, y, train_ratio=0.7):

'''

- X (array like): shape (num_samples, num_periods, num_features)

- y (array like): shape (num_samples, num_periods)

'''

num_ts, num_periods, num_features = X.shape

train_periods = int(num_periods * train_ratio)

random.seed(2)

Xtr = X[:, :train_periods, :]

ytr = y[:, :train_periods]

Xte = X[:, train_periods:, :]

yte = y[:, train_periods:]

return Xtr, ytr, Xte, yte

class StandardScaler:

def fit_transform(self, y):

self.mean = np.mean(y)

self.std = np.std(y) + 1e-4

return (y - self.mean) / self.std

def inverse_transform(self, y):

return y * self.std + self.mean

def transform(self, y):

return (y - self.mean) / self.std

class MeanScaler:

def fit_transform(self, y):

self.mean = np.mean(y)

return y / self.mean

def inverse_transform(self, y):

return y * self.mean

def transform(self, y):

return y / self.mean

class LogScaler:

def fit_transform(self, y):

return np.log1p(y)

def inverse_transform(self, y):

return np.expm1(y)

def transform(self, y):

return np.log1p(y)

class NegativeBinomial(nn.Module):

def __init__(self, input_size, output_size):

'''

Negative Binomial Supports Positive Count Data

Args:

input_size (int): hidden h_{i,t} column size

output_size (int): embedding size

'''

super(NegativeBinomial, self).__init__()

self.mu_layer = nn.Linear(input_size, output_size)

self.sigma_layer = nn.Linear(input_size, output_size)

def forward(self, h): # h为神经网络隐藏层输出 (batch, hidden_size)

_, hidden_size = h.size()

alpha_t = torch.log(1 + torch.exp(self.sigma_layer(h))) + 1e-6

mu_t = torch.log(1 + torch.exp(self.mu_layer(h)))

return mu_t, alpha_t # (batch, output_size)

def negative_binomial_sample(mu, alpha):

'''

Negative Binomial Sample

Args:

ytrue (array like)

mu (array like)

alpha (array like)

maximuze log l_{nb} = log Gamma(z + 1/alpha) - log Gamma(z + 1) - log Gamma(1 / alpha)

- 1 / alpha * log (1 + alpha * mu) + z * log (alpha * mu / (1 + alpha * mu))

minimize loss = - log l_{nb}

Note: torch.lgamma: log Gamma function

'''

var = mu + mu * mu * alpha

ypred = mu + torch.randn_like(mu) * torch.sqrt(var)

return ypred

def negative_binomial_loss(ytrue, mu, alpha):

'''

Negative Binomial Sample

Args:

ytrue (array like)

mu (array like)

alpha (array like)

maximuze log l_{nb} = log Gamma(z + 1/alpha) - log Gamma(z + 1) - log Gamma(1 / alpha)

- 1 / alpha * log (1 + alpha * mu) + z * log (alpha * mu / (1 + alpha * mu))

minimize loss = - log l_{nb}

Note: torch.lgamma: log Gamma function

'''

likelihood = torch.lgamma(ytrue + 1. / alpha) - torch.lgamma(ytrue + 1) - torch.lgamma(1. / alpha) \

- 1. / alpha * torch.log(1 + alpha * mu) \

+ ytrue * torch.log(alpha * mu / (1 + alpha * mu))

return -likelihood.mean()

class Gaussian(nn.Module):

def __init__(self, hidden_size, output_size):

'''

Gaussian Likelihood Supports Continuous Data

Args:

input_size (int): hidden h_{i,t} column size

output_size (int): embedding size

'''

super(Gaussian, self).__init__()

self.mu_layer = nn.Linear(hidden_size, output_size)

self.sigma_layer = nn.Linear(hidden_size, output_size)

def forward(self, h): # h为神经网络隐藏层输出 (batch, hidden_size)

_, hidden_size = h.size()

sigma_t = torch.log(1 + torch.exp(self.sigma_layer(h))) + 1e-6

mu_t = self.mu_layer(h)

return mu_t, sigma_t # (batch, output_size)

def gaussian_sample(mu, sigma):

'''

Gaussian Sample

Args:

ytrue (array like)

mu (array like) # (num_ts, 1)

sigma (array like): standard deviation # (num_ts, 1)

gaussian maximum likelihood using log

l_{G} (z|mu, sigma) = (2 * pi * sigma^2)^(-0.5) * exp(- (z - mu)^2 / (2 * sigma^2))

'''

# likelihood = (2 * np.pi * sigma ** 2) ** (-0.5) * \

# torch.exp((- (ytrue - mu) ** 2) / (2 * sigma ** 2))

# return likelihood

gaussian = torch.distributions.normal.Normal(mu, sigma)

ypred = gaussian.sample()

return ypred # (num_ts, 1)

def gaussian_likelihood_loss(z, mu, sigma):

'''

Gaussian Liklihood Loss

Args:

z (tensor): true observations, shape (num_ts, num_periods)

mu (tensor): mean, shape (num_ts, num_periods)

sigma (tensor): standard deviation, shape (num_ts, num_periods)

likelihood:

(2 pi sigma^2)^(-1/2) exp(-(z - mu)^2 / (2 sigma^2))

log likelihood:

-1/2 * (log (2 pi) + 2 * log (sigma)) - (z - mu)^2 / (2 sigma^2)

'''

negative_likelihood = torch.log(sigma + 1) + (z - mu) ** 2 / (2 * sigma ** 2) + 6

return negative_likelihood.mean()

class DeepAR(nn.Module):

def __init__(self, input_size, embedding_size, hidden_size, num_layers, lr, likelihood="g"):

super(DeepAR, self).__init__()

self.embedding_size = embedding_size

self.input_size = input_size

self.input_embed = nn.Linear(1, embedding_size)

self.encoder = nn.LSTM(embedding_size+input_size, hidden_size, \

num_layers, bias=True, batch_first=True,dropout=0.2)

if likelihood == "g":

self.likelihood_layer = Gaussian(hidden_size, 1)

elif likelihood == "nb":

self.likelihood_layer = NegativeBinomial(hidden_size, 1)

self.likelihood = likelihood

def forward(self, X, y, Xf):

'''

Args:

num_time_series = batch_size

X (array like): shape (num_time_series, num_obs_to_train, num_features)

y (array like): shape (num_time_series, num_obs_to_train)

Xf (array like): shape (num_time_series, seq_len, num_features)

Return:

mu (array like): shape (num_time_series, num_obs_to_train + seq_len)

sigma (array like): shape (num_time_series, num_obs_to_train + seq_len)

'''

if isinstance(X, type(np.empty(2))): # 转换为tensor

X = torch.from_numpy(X).float()

y = torch.from_numpy(y).float()

Xf = torch.from_numpy(Xf).float()

num_ts, num_obs_to_train, _ = X.size()

_, seq_len, num_features = Xf.size()

ynext = None

ypred = []

mus = []

sigmas = []

h, c = None, None

# 遍历所有时间点

for s in range(num_obs_to_train + seq_len): # num_obs_to_train为历史序列长度,seq_len为预测长度

if s < num_obs_to_train: # Encoder,ynext为真实值

if s == 0: ynext = torch.zeros((num_ts,1)).to(device)

else: ynext = y[:, s-1].view(-1, 1) # (num_ts,1) # 取上一时刻的真实值

yembed = self.input_embed(ynext).view(num_ts, 1,self.embedding_size) # (num_ts,embedding_size)

x = X[:, s, :].view(num_ts, 1,self.input_size) # (num_ts,num_features)

else: # Decoder,ynext为预测值

if s == num_obs_to_train: ynext = y[:, s-1].view(-1, 1) # (num_ts,1) # 预测的第一个时间点取上一时刻的真实值

yembed = self.input_embed(ynext).view(num_ts, -1,self.embedding_size) # (num_ts,embedding_size)

x = Xf[:, s-num_obs_to_train, :].view(num_ts, 1,self.input_size) # (num_ts,num_features)

x = torch.cat([x, yembed], dim=2) # (num_ts, num_features + embedding)

inp =x

if h is None and c is None:

out, (h, c) = self.encoder(inp) # h size (num_layers, num_ts, hidden_size)

else:

out, (h, c) = self.encoder(inp, (h, c))

hs = h[-1, :, :] # (num_ts, hidden_size)

hs = F.relu(hs) # (num_ts, hidden_size)

mu, sigma = self.likelihood_layer(hs) # (num_ts, 1)

mus.append(mu.view(-1, 1))

sigmas.append(sigma.view(-1, 1))

if self.likelihood == "g":

ynext = gaussian_sample(mu, sigma) #(num_ts, 1)

elif self.likelihood == "nb":

alpha_t = sigma

mu_t = mu

ynext = negative_binomial_sample(mu_t, alpha_t) #(num_ts, 1)

# if without true value, use prediction

if s >= num_obs_to_train and s < num_obs_to_train + seq_len: #在预测区间内

ypred.append(ynext)

ypred = torch.cat(ypred, dim=1).view(num_ts, -1) #(num_ts, seq_len)

mu = torch.cat(mus, dim=1).view(num_ts, -1) #(num_ts, num_obs_to_train + seq_len)

sigma = torch.cat(sigmas, dim=1).view(num_ts, -1) #(num_ts, num_obs_to_train + seq_len)

return ypred, mu, sigma

device=torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

data = pd.read_csv("knn_oj.csv")

X = np.asarray(data.iloc[:,:-1]).reshape((1, 121, 16))

y = np.asarray(data.iloc[:,-1]).reshape((1, 121))

print("X_shape=",X.shape) # (series_num,len,features_num)

print("y_shape=",y.shape) # (series_num,len)

def sliding_window(DataSet, width, multi_vector = True): #DataSet has to be as an Array

print(width)

if multi_vector: #三维 (num_samples,length,features)

num_samples,length,features = DataSet.shape

else: #二维 (num_samples,length)

DataSet = DataSet[:,:,np.newaxis] #(num_samples,length,1)

num_samples,length,features = DataSet.shape

x = DataSet[:,0:width,:] #(num_samples,width,features)

x = x[np.newaxis,:,:,:] #(1,num_samples,width,features)

for i in range(length - width):

i += 1

tmp = DataSet[:,i:i + width,:]#(num_samples,width,features)

tmp = tmp[np.newaxis,:,:,:] #(1,num_samples,width,features)

x = np.concatenate([x,tmp],0) #(i+1,num_samples,width,features)

return x

width = num_obs_to_train + seq_len

X_data = sliding_window(X, width, multi_vector = True) #(len-width+1,num_samples,width,features)

Y_data = sliding_window(y, width, multi_vector = False) #(len-width+1,num_samples,width,1)

print("x的维度为:",X_data.shape)

print("y的维度为:",Y_data.shape)

# 取其中一类序列

i = 0

X_data = X_data[:,i,:,:]

Y_data = Y_data[:,i,:,0]

print("x的维度为:",X_data.shape)

print("y的维度为:",Y_data.shape)

# SPLIT TRAIN TEST

from sklearn.model_selection import train_test_split

Xtr, Xte, ytr, yte = train_test_split(X_data, Y_data,

test_size=0.3,

random_state=0,

shuffle=False)

print("X_train:{},y_train:{}".format(Xtr.shape,ytr.shape))

print("X_test:{},y_test:{}".format(Xte.shape,yte.shape))

# # # 标准化

yscaler = None

if standard_scaler:

yscaler = StandardScaler()

elif log_scaler:

yscaler = LogScaler()

elif mean_scaler:

yscaler = MeanScaler()

if yscaler is not None:

ytr = yscaler.fit_transform(ytr)

# #构造Dtaloader

Xtr=torch.as_tensor(torch.from_numpy(Xtr), dtype=torch.float32)

ytr=torch.as_tensor(torch.from_numpy(ytr),dtype=torch.float32)

Xte=torch.as_tensor(torch.from_numpy(Xte), dtype=torch.float32)

yte=torch.as_tensor(torch.from_numpy(yte),dtype=torch.float32)

train_dataset=torch.utils.data.TensorDataset(Xtr,ytr) #训练集dataset

train_Loader=torch.utils.data.DataLoader(train_dataset,batch_size=batch_size)

# 定义模型和优化器

num_ts, num_periods, num_features = X.shape

model = DeepAR(num_features, embedding_size,

hidden_size, n_layers,lr, likelihood).to(device)

optimizer = Adam(model.parameters(), lr=lr)

losses = []

cnt = 0

# training

print("开启训练")

progress = ProgressBar()

for epoch in progress(range(num_epoches)):

# print("Epoch {} starts...".format(epoch))

for x,y in train_Loader:

x = x.to(device) # (batch_size, num_obs_to_train+seq_len, num_features)

y = y.to(device) # (batch_size, num_obs_to_train+seq_len)

Xtrain = x[:,:num_obs_to_train,:].float() # (batch_size, num_obs_to_train, num_features)

ytrain = y[:,:num_obs_to_train].float() # (batch_size, num_obs_to_train)

Xf = x[:,-seq_len:,:].float() # (batch_size, seq_len, num_features)

yf = y[:,-seq_len:].float() # (batch_size, seq_len)

ypred, mu, sigma = model(Xtrain, ytrain, Xf) # ypred:(batch_size, seq_len), mu&sigma:(batch_size, num_obs_to_train + seq_len)

# ypred_rho = ypred

# e = ypred_rho - yf

# loss = torch.max(rho * e, (rho - 1) * e).mean()

## gaussian loss

ytrain = torch.cat([ytrain, yf], dim=1) # (batch_size, num_obs_to_train+seq_len)

if likelihood == "g":

loss = gaussian_likelihood_loss(ytrain, mu, sigma)

elif likelihood == "nb":

loss = negative_binomial_loss(ytrain, mu, sigma)

losses.append(loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

cnt += 1

if show_plot:

plt.plot(range(len(losses)), losses, "k-")

plt.xlabel("Period")

plt.ylabel("Loss")

plt.show()

# # test

print("开启测试")

X_test_sample = Xte[:,:,:].reshape(-1,num_obs_to_train+seq_len,num_features).to(device) # (num_samples, num_obs_to_train+seq_len, num_features)

y_test_sample = yte[:,:].reshape(-1,num_obs_to_train+seq_len).to(device) # (num_samples, num_obs_to_train+seq_len)

X_test = X_test_sample[:,:num_obs_to_train,:] # (num_samples, num_obs_to_train, num_features)

Xf_test = X_test_sample[:, -seq_len:, :] # (num_samples, seq_len, num_features)

y_test = y_test_sample[:, :num_obs_to_train] # (num_samples, num_obs_to_train)

yf_test = y_test_sample[:, -seq_len:] # (num_samples, seq_len)

if yscaler is not None:

y_test = yscaler.transform(y_test)

result = []

n_samples = sample_size # 采样个数

for _ in tqdm(range(n_samples)):

y_pred, _, _ = model(X_test, y_test, Xf_test) # ypred:(num_samples, seq_len)

# y_pred = y_pred.cpu().numpy()

y_pred = y_pred.detach().cpu().numpy()

if yscaler is not None:

y_pred = yscaler.inverse_transform(y_pred)

result.append(y_pred[:,:,np.newaxis]) # y_pred[:,:,np.newaxis]:(num_samples, seq_len,1)

# result.append(y_pred.reshape((-1, 1)))

result = np.concatenate(result, axis=2) # (num_samples, seq_len, n_samples)

p50 = np.quantile(result, 0.5, axis=2) # (num_samples, seq_len)

p90 = np.quantile(result, 0.9, axis=2) # (num_samples, seq_len)

p10 = np.quantile(result, 0.1, axis=2) # (num_samples, seq_len)

i = -1 #选取其中一条序列进行可视化

if show_plot: # 序列总长度为:历史窗口长度(num_obs_to_train)+预测长度(seq_len)

plt.plot([k + seq_len + num_obs_to_train - seq_len for k in range(seq_len)], p50[i,:], "r-",marker='o',) # 绘制50%分位数曲线

plt.fill_between(x=[k + seq_len + num_obs_to_train - seq_len for k in range(seq_len)], y1=p10[i,:], y2=p90[i,:], alpha=0.3)

yplot = y_test_sample[i,:].cpu() #真实值 (1, seq_len+num_obs_to_train)

plt.plot(range(len(yplot)), yplot, "k-",marker='o',)

ymin, ymax = plt.ylim()

plt.vlines(seq_len + num_obs_to_train - seq_len, ymin, ymax, color="blue", linestyles="dashed", linewidth=2)

plt.title('Prediction uncertainty')

plt.legend(["P50 forecast", "P10-P90 quantile", "true"], loc="upper left")

plt.xlabel("Periods")

plt.ylabel("logmove")

plt.show()

3243

3243

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?