本教程的灵感来源于谷歌云负责维护开发者关系的Kaz Sato制作的一个“猜拳机器”教程[1],见下图。该教程使用弯曲传感器和Tensorflow来识别猜拳手势,然后选择相应的选项:石头、剪刀、布。该项目还上了大名鼎鼎的谷歌大脑负责人Jeff Dean关于谷歌大脑在2017年的进展回顾里[2]。

图1

我想既然已经使用了Tensorflow,为什么不使用摄像头和卷积神经网络来识别手势,以实现同样的功能呢?

说干就干,本教程准备使用Tensorflow+树莓派,通过摄像头获取手势图片,然后通过卷积神经网络来识别手势。这里的主要难点是做到“实时和准确”:为了使人感觉不出有明显的延迟,处理时间必须保持在40ms以内(25fps),而且识别的精度也必须足够高,估计要95%以上。

本教程使用笔记本电脑进行模型的训练,使用树莓派进行模型的部署。这里假定读者对树莓派和Tensorflow有一定的了解。关于树莓派系统的安装和基本知识、摄像头以及舵机的使用,这里就不多做介绍;另外,Tensorflow在电脑端的安装和操作也不多作介绍。

这里先奉上最后的成果:

软硬件配置

本教程的电脑端使用的软硬件配置如下:

笔记本:ThinkPad E430C

操作系统: Windows 7

Python版本: 3.5.3

Tensorflow版本: 1.5.0

树莓派端的软硬件配置如下:

树莓派: 树莓派3 Model B

操作系统: 16g内存卡,2017-11-29-raspbian-stretch

摄像头: CSI接口 500万像素

Python版本: 2.7.0

Tensorflow版本: 1.1.0

舵机: 辉盛SG90

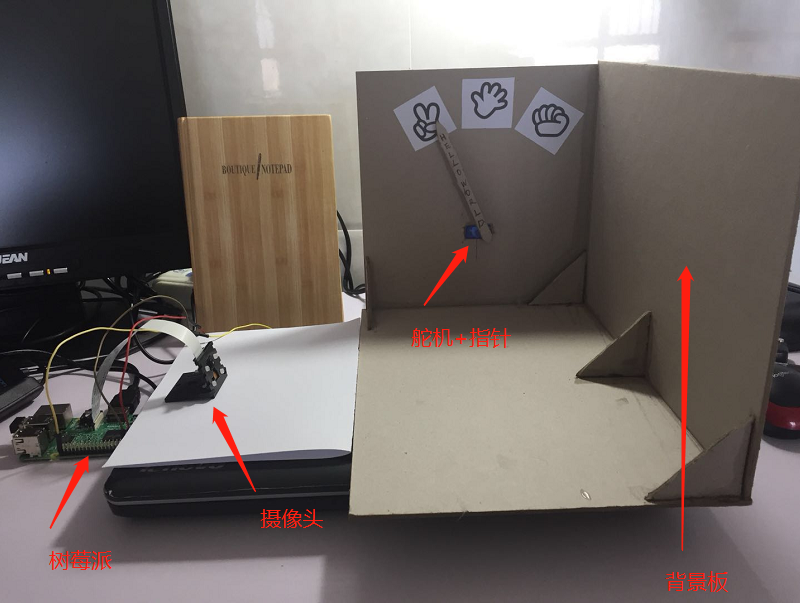

这里的配置都不是必须一样的,也不是最优的,而是因为我的电脑和树莓派现在的版本刚好是现在的样子。组装好的硬件系统如下所示:

图2

第一步: 树莓派安装OpenCV和Tensorflow

OpenCV的安装比较简单,依次输入以下代码即可

sudo apt-get update

sudo apt-get upgrade #更新系统

sudo apt-get install libopencv-dev

sudo apt-get install python-opencv如果要安装Tensorflow的最新版本,需要从源代码进行编译,比较费时间。这里使用大神sanjabrahams[3]为树莓派预编译的二进制版本进行安装。现在的预编译的最新版本是Tensorflow 1.1.0,但已经够用了。安装步骤如下:

安装pip, python-dev

sudo apt-get install python-pip

sudo apt-get install python-dev使用Python 2.7安装

wget https://github.com/samjabrahams/tensorflow-on-raspberry-pi/releases/download/v1.1.0/tensorflow-1.1.0-cp27-none-linux_armv7l.whl

sudo pip install tensorflow-1.1.0-cp27-none-linux_armv7l.whl如果因为网络原因无法下载上述预编译的文件,可以到下面的百度网盘下载

链接:https://pan.baidu.com/s/1pMKyDg3 密码:4nqr

第二步: 收集数据

一般来说,深度学习的数据都是比较贵的。但是,在本教程中,数据比较廉价,打开摄像头,在图2的背景板前做“石头、剪刀、布”手势即可获取足够多的数据。为了识别时精度比较高,确保你的手势出现在背景板前的不同位置。获取的部分数据如下:

图3

接着,将上述数据进行手工分类,分别存放在四个类别的文件夹中,我这里按照“石头、剪刀、布和其他”的英文命名文件夹:others, paper, rock, scissors,然后将4个文件夹存放在images文件中。每一类手势的图片大概在3000张左右。最后,将获取的图片拷贝到笔记本中用于训练。

树莓派调用摄像头拍摄图片的程序如下(cam.py):

import cv2

clicked = False

def onMouse(event, x, y, flags, param):

global clicked

if event == cv2.EVENT_LBUTTONUP:

clicked = True

cameraCapture = cv2.VideoCapture(0)

cameraCapture.set(3, 100)

cameraCapture.set(4, 100) # 帧宽度和帧高度都设置为100像素

cv2.namedWindow('MyWindow')

cv2.setMouseCallback('MyWindow', onMouse)

print('showing camera feed. Click window or press and key to stop.')

success, frame = cameraCapture.read()

print(success)

count = 0

while success and cv2.waitKey(1)==-1 and not clicked:

cv2.imshow('MyWindow', cv2.flip(frame,0))

success, frame = cameraCapture.read()

name = 'images/image'+str(count)+'.jpg'

cv2.imwrite(name,frame)

count+=1

cv2.destroyWindow('MyWindow')

cameraCapture.release()第三步:数据处理

第二步收集的数据是以图片格式储存在各个文件中。需要将图片转换成Tensorflow能够处理的数据,并且打乱顺序,以及将数据分成训练集和测试集,这里80%作为训练集,20%作为测试集。通过以下代码,可以实现该功能(read_image.py)

from PIL import Image

import numpy as np

from PIL import Image

from pylab import *

import os

import glob

# 训练时所用输入长、宽和通道大小

w = 28

h = 28

# 将标签转换成one-hot矢量

def to_one_hot(label):

label_one = np.zeros((len(label),np.max(label)+1))

for i in range(len(label)):

label_one[i, label[i]]=1

return label_one

# 读入图片并转化成相应的维度

def read_img(path):

cate = [path + x for x in os.listdir(path) if os.path.isdir(path + x)]

imgs = []

labels = []

for idx, folder in enumerate(cate):

for im in glob.glob(folder + '/*.jpg'):

print('reading the image: %s' % (im))

# 读入图片,转化成灰度图,并缩小到相应维度

img = array(Image.open(im).convert('L').resize((w,h)),dtype=float32)

imgs.append(img)

#img = array(img)

labels.append(idx)

data,label = np.asarray(imgs, np.float32), to_one_hot(np.asarray(labels, np.int32))

# 将图片随机打乱

num_example = data.shape[0]

arr = np.arange(num_example)

np.random.shuffle(arr)

data = data[arr]

label = label[arr]

# 80%用于训练,20%用于验证

ratio = 0.8

s = np.int(num_example * ratio)

x_train = data[:s]

y_train = label[:s]

x_val = data[s:]

y_val = label[s:]

x_train = np.reshape(x_train, [-1, w*h])

x_val = np.reshape(x_val, [-1, w*h])

return x_train, y_train, x_val, y_val

if __name__=="__main__":

path = 'images/'

x_train, y_train, x_val, y_val = read_img(path) 第四步:网络架构

这是一个简单的图像分类问题,可以使用卷积神经网络来进行分类。因为是运用在树莓派上,所以首先想到的是使用MobileNets网络架构进行分类。我尝试过使用mobilenet_0.50_128模型,虽然模型大小只有5M左右,但是在树莓派上识别一张图片的时间大概在0.5s左右,远远不能满足本项目的要求。究其原因,是因为MobileNets对输入图片的尺寸要求至少是128*128像素,这大大增加了计算量。基于速度和精度的要求,本项目图片输入尺寸保持在28*28*1像素(和MNIST数据集一样),通过一个小的卷积网络来实现分类。卷积网络架构如下表所示,总共有4个隐藏层:

| 类型 | Kernel尺寸/步长(或注释) | 输入尺寸 |

| 卷积 | 3*3*16/1 | 28*28*1 |

| 池化 | 2*2/2 | 28*28*16 |

| 卷积 | 3*3*32/1 | 14*14*16 |

| 池化 | 2*2/2 | 14*14*32 |

| 全连接 | (7*7*32)*256 | 1*(7*7*32) |

| Dropout | 注:随机失活 | 1*256 |

| 全连接 | 256*4 | 1*256 |

| Softmax | 分类输出 | 1*4 |

上述卷积神经网络的代码如下(model.py):

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import tensorflow as tf

import numpy as np

w = np.int32(28)

h = np.int32(28)

# 定义权重

def weight_variable(shape, name):

return tf.Variable(tf.truncated_normal(shape, stddev=0.1), name=name)

# 定义偏差

def bias_variable(shape, name):

return tf.Variable(tf.constant(0.1, shape=shape), name=name)

# 网络架构

def model(x, keep_prob):

x_image = tf.reshape(x, [-1, w, h, 1])

# Conv1

with tf.name_scope('conv1'):

W_conv1 = weight_variable([3, 3, 1, 16], name="weight")

b_conv1 = bias_variable([16], name='bias')

h_conv1 = tf.nn.relu(

tf.nn.conv2d(x_image, W_conv1, strides=[1,1,1,1], padding="SAME", name='conv')

+ b_conv1)

h_pool1 = tf.nn.max_pool(h_conv1, ksize=[1,2,2,1], strides=[1,2,2,1],

padding="SAME", name="pool")

# Conv2

with tf.name_scope('conv2'):

W_conv2 = weight_variable([3, 3, 16, 32], name="weight")

b_conv2 = bias_variable([32], name='bias')

h_conv2 = tf.nn.relu(

tf.nn.conv2d(h_pool1, W_conv2, strides=[1,1,1,1], padding="SAME", name='conv')

+ b_conv2)

h_pool2 = tf.nn.max_pool(h_conv2, ksize=[1,2,2,1], strides=[1,2,2,1],

padding="SAME", name="pool")

# fc1

with tf.name_scope('fc1'):

W_fc1 = weight_variable([7*7*32, 256], name="weight")

b_fc1 = bias_variable([256], name='bias')

h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*32])

h_fc1 = tf.nn.relu(

tf.matmul(h_pool2_flat, W_fc1)+b_fc1)

# Dropout

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# fc2

with tf.name_scope('fc2'):

W_fc2 = weight_variable([256, 4], name="weight")

b_fc2 = bias_variable([4], name='bias')

y = tf.nn.softmax(

tf.matmul(h_fc1_drop, W_fc2)+b_fc2, name="output")

return y这一步对上面的网络架构进行训练,并保存模型在文件夹model中(train.py)

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import tensorflow as tf

import argparse

import sys

import model

import read_image

import numpy as np

w = 28

h = 28

def main(args):

lr = args.learning_rate

batch_size = args.batch_size

epoches = args.epoches

keep_prob_value = args.keep_prob

train(lr,batch_size, epoches, keep_prob_value)

def train(lr, batch_size, epoches, keep_prob_value):

# 读入图片

path = 'images/'

x_train, y_train, x_val, y_val = read_image.read_img(path)

x_train = x_train/255.0 # 图片预处理

x_val = x_val/255.0

x = tf.placeholder(tf.float32, [None, w*h], name="images")

y_ = tf.placeholder(tf.float32, [None, 4], name="labels")

keep_prob = tf.placeholder(tf.float32,name="keep_prob")

y = model.model(x, keep_prob)

# Cost function

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_*tf.log(y),

reduction_indices=[1]),name="corss_entropy")

train_step = tf.train.AdamOptimizer(lr).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name="accuracy")

saver = tf.train.Saver()

# Start training

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for i in range(epoches+1):

iters = np.int32(len(x_train)/batch_size)+1

for j in range(iters):

if j==iters-1:

batch0 = x_train[j*batch_size:]

batch1 = y_train[j*batch_size:]

else:

batch0 = x_train[j*batch_size:(j+1)*batch_size]

batch1 = y_train[j*batch_size:(j+1)*batch_size]

if i%25==0 and j==1:

train_accuracy, cross_ent = sess.run([accuracy, cross_entropy],

feed_dict={x:batch0, y_:batch1,

keep_prob: keep_prob_value})

print("step %d, training accuracy %g, corss_entropy %g" % (i, train_accuracy, cross_ent))

# Save model

saver_path = saver.save(sess,"model/model.ckpt")

print("Model saved in file:", saver_path)

test_accuracy = sess.run(accuracy, feed_dict={x:x_val,

y_:y_val,

keep_prob: 1.0})

print("test accuracy %g" % test_accuracy)

sess.run(train_step, feed_dict={x:batch0, y_:batch1,

keep_prob:keep_prob_value})

test_accuracy = sess.run(accuracy, feed_dict={x:x_val,

y_:y_val,

keep_prob: 1.0})

print("test accuracy %g" % test_accuracy)

def parse_arguments(argv):

parser = argparse.ArgumentParser()

parser.add_argument('--learning_rate', type=float,

help="learning rate", default=1e-4)

parser.add_argument('--batch_size', type=float,

help="batch_size", default=50)

parser.add_argument('--epoches', type=float,

help="epoches", default=100)

parser.add_argument('--keep_prob', type=float,

help="keep prob", default=0.5)

return parser.parse_args(argv)

if __name__=="__main__":

main(parse_arguments(sys.argv[1:]))

大概进行10分钟的训练,测试精度可以达到98.9%,模型大小在5M以内,还不错。

第六步:测试算法

这一步测试该网络架构识别一张图片需要花费多少时间,通过测试,识别一张图片的时间在25ms左右。代码如下(test.py)

from PIL import Image

from matplotlib.pylab import *

import numpy as np

import argparse

import tensorflow as tf

import time

w = 28

h = 28

classes = ['others','paper','rock','scissors']

def main(args):

filename = 'images/scissors/image69.jpg'

model_dir = 'model/model.ckpt'

# Restore model

saver = tf.train.import_meta_graph(model_dir+".meta")

with tf.Session() as sess:

saver.restore(sess, model_dir)

x = tf.get_default_graph().get_tensor_by_name("images:0")

keep_prob = tf.get_default_graph().get_tensor_by_name("keep_prob:0")

y = tf.get_default_graph().get_tensor_by_name("fc2/output:0")

# Read image

pil_im = array(Image.open(filename).convert('L').resize((w,h)),dtype=float32)

pil_im = pil_im/255.0

pil_im = pil_im.reshape((1,w*h))

time1 = time.time()

prediction = sess.run(y, feed_dict={x:pil_im,keep_prob: 1.0})

index = np.argmax(prediction)

time2 = time.time()

print("The classes is: %s. (the probability is %g)" % (classes[index], prediction[0][index]))

print("Using time %g" % (time2-time1))

def parse_arguments(argv):

parser = argparse.ArgumentParser()

parser.add_argument('--filename', type=str,

help="The image name",default="images/paper/image104.jpg")

parser.add_argument('--model_dir', type=str,

help="model file", default="model/model.ckpt")

return parser.parse_args(argv)

if __name__=="__main__":

main(parse_arguments(sys.argv[1:]))

第7步:使用算法

通过前面几步就训练好了网络模型,这时可以将models文件夹(包含训练好的模型)拷贝到树莓派中,然后按照图2的布置,在背景板前猜拳,运行以下代码(rps.py),就可以得到想要的结果。看着还不错哦。

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import tensorflow as tf

import numpy as np

import PIL.Image as Image

import time

import cv2

import RPi.GPIO as GPIO

import signal

import atexit

# 舵机旋转的角度

def rotate(p, angle):

p.ChangeDutyCycle(7.5+10*angle/180)

time.sleep(1)

p.ChangeDutyCycle(0)

# 石头剪刀布主程序

def rps(model_dir, classes, p):

clicked = False

def onMouse(event, x, y, flags, param):

global clicked

if event == cv2.EVENT_LBUTTONUP:

clicked = True

cameraCapture = cv2.VideoCapture(0)

cameraCapture.set(3, 100) # 帧宽度

cameraCapture.set(4, 100) # 帧高度

cv2.namedWindow('MyWindow')

cv2.setMouseCallback('MyWindow', onMouse)

print('showing camera feed. Click window or press and key to stop.')

success, frame = cameraCapture.read()

print(success)

count = 0

flag = 0

saver = tf.train.import_meta_graph(model_dir+".meta")

with tf.Session() as sess:

saver.restore(sess, model_dir)

x = tf.get_default_graph().get_tensor_by_name("images:0")

keep_prob = tf.get_default_graph().get_tensor_by_name("keep_prob:0")

y = tf.get_default_graph().get_tensor_by_name("fc2/output:0")

count=0

while success and cv2.waitKey(1)==-1 and not clicked:

time1 = time.time()

cv2.imshow('MyWindow', frame)

success, frame = cameraCapture.read()

img = Image.fromarray(frame)

# 将图片转化成灰度并缩小尺寸

img = np.array(img.convert('L').resize((28, 28)),dtype=np.float32)

img = img.reshape((1,28*28))

img = img/255.0 # 图像前处理

prediction = sess.run(y, feed_dict={x:img,keep_prob: 1.0})

index = np.argmax(prediction)

probability = prediction[0][index]

# 设置probability为0.8是为了提高识别稳定性

if index==1 and flag!=1 and probability>0.8:

rotate(p, 30)

flag=1

elif index==2 and flag!=2 and probability>0.8:

rotate(p, 0)

flag = 2

elif index==3 and flag!=3 and probability>0.8:

rotate(p, -30)

flag = 3

time2 = time.time()

if count%30==0:

print(classes[index])

print('using time: ', time2-time1)

print('probability: %.3g' % probability)

count+=1

cv2.destroyWindow('MyWindow')

cameraCapture.release()

if __name__=="__main__":

classes = ['others','paper','rock','scissors']

model_dir="model/model.ckpt"

atexit.register(GPIO.cleanup)

servopin = 21 # BCM模式下的21引脚

GPIO.setmode(GPIO.BCM)

GPIO.setup(servopin, GPIO.OUT, initial=False)

p = GPIO.PWM(servopin,50) #50HZ

p.start(0)

time.sleep(2)

rps(model_dir, classes, p)

以上的代码可以在我的github上下载:https://github.com/Curisan/rps

[1] https://baijia.baidu.com/s?id=1581394064696070458&wfr=pc&fr=ch_lst

[2] http://mp.weixin.qq.com/s/6V-zQYwvWuvu3bwtkpaM7w

[3] https://github.com/samjabrahams/tensorflow-on-raspberry-pi

2576

2576

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?