2022

CVPR

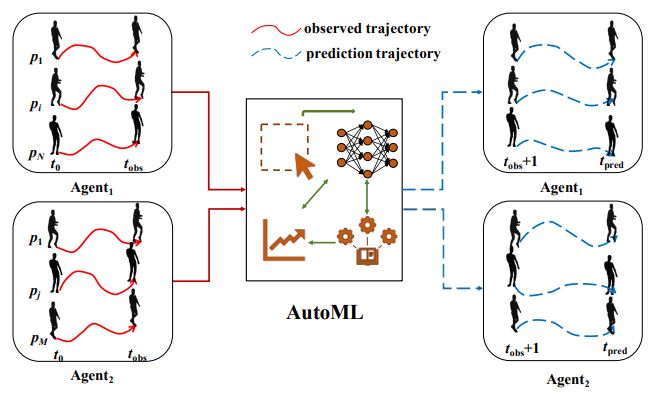

ATPFL: Automatic Trajectory Prediction Model Design Under Federated Learning Framework

ATPFL helps users federate multi-source trajectory datasets to automatically design and train a powerful trajectory prediction (TP) model.

Link:

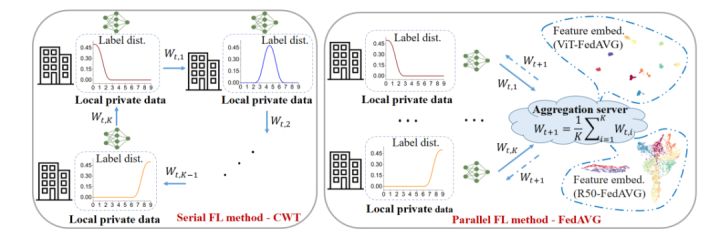

Rethinking Architecture Design for Tackling Data Heterogeneity in Federated Learning

ViT-FL demonstrates that self-attention-based architectures (e.g., Transformers) are more robust to distribution shifts and hence improve federated learning over heterogeneous data.

Link:

https://arxiv.org/abs/2106.06047

FedCorr: Multi-Stage Federated Learning for Label Noise Correction

FedCorr is a general multi-stage framework that tackles heterogeneous label noise in FL without making any assumptions about the noise models of local clients, while still maintaining client data privacy.

Link:

http://arxiv.org/abs/2204.04677

FedCor: Correlation-Based Active Client Selection Strategy for Heterogeneous Federated Learning

FedCor is an FL framework built on a correlation-based client selection strategy that boosts the convergence rate of FL.

Link:

http://arxiv.org/abs/2103.13822

Layer-Wised Model Aggregation for Personalized Federated Learning

pFedLA (Layer-wised Personalized Federated Learning) is a novel pFL training framework that can discern the importance of each layer from different clients, and is thus able to optimize the personalized model aggregation for clients with heterogeneous data.

Link:

http://arxiv.org/abs/2205.03993

Local Learning Matters: Rethinking Data Heterogeneity in Federated Learning

FedAlign rethinks solutions to data heterogeneity in FL, focusing on the generality of local learning rather than proximal restriction.

Link:

https://arxiv.org/abs/2111.14213

Federated Learning With Position-Aware Neurons

Federated semi-supervised learning (FSSL) aims to derive a global model by training on fully labeled and fully unlabeled clients, or on partially labeled clients. RSCFed (Random Sampling Consensus Federated learning) accounts for the uneven reliability among models from fully labeled, fully unlabeled, and partially labeled clients.

Link:

http://arxiv.org/abs/2203.14666

Learn From Others and Be Yourself in Heterogeneous Federated Learning

FCCL (Federated Cross-Correlation and Continual Learning): for the heterogeneity problem, FCCL leverages unlabeled public data for communication and constructs a cross-correlation matrix to learn a generalizable representation under domain shift. Meanwhile, for catastrophic forgetting, FCCL uses knowledge distillation in local updating, providing inter- and intra-domain information without leaking privacy.

Robust Federated Learning With Noisy and Heterogeneous Clients

RHFL (Robust Heterogeneous Federated Learning) handles label noise and performs federated learning simultaneously in a single framework.

ResSFL: A Resistance Transfer Framework for Defending Model Inversion Attack in Split Federated Learning

ResSFL is a split federated learning framework designed to be resistant to model inversion (MI) attacks during training.

Link:

http://arxiv.org/abs/2205.04007

FedDC: Federated Learning With Non-IID Data via Local Drift Decoupling and Correction

FedDC proposes a novel federated learning algorithm with local drift decoupling and correction.

Explainer:

[CVPR2022] FedDC: Federated Learning with Non-IID Data via Local Drift Decoupling and Correction - Zhihu

Link:

http://arxiv.org/abs/2203.11751

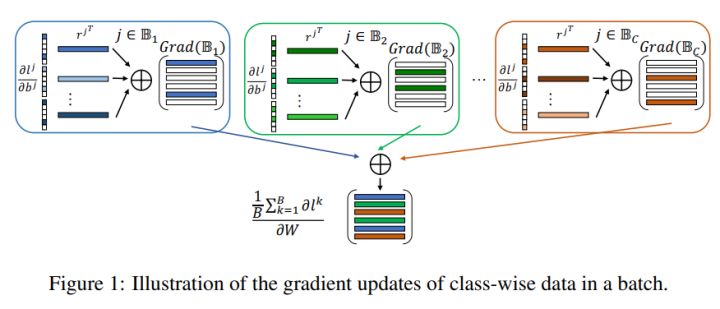

Federated Class-Incremental Learning

The Global-Local Forgetting Compensation (GLFC) model learns a global class-incremental model that alleviates catastrophic forgetting from both local and global perspectives.

Link:

http://arxiv.org/abs/2203.11473

Fine-Tuning Global Model via Data-Free Knowledge Distillation for Non-IID Federated Learning

FedFTG is a data-free knowledge distillation method that fine-tunes the global model on the server, which relieves the issues of direct model aggregation.

Link:

https://arxiv.org/abs/2203.09249

Differentially Private Federated Learning With Local Regularization and Sparsification

DP-FedAvg+BLUR+LUS studies the cause of model performance degradation in federated learning under a user-level DP guarantee and proposes two techniques, Bounded Local Update Regularization (BLUR) and Local Update Sparsification (LUS), to increase model quality without sacrificing privacy.

Link:

http://arxiv.org/abs/2203.03106
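For readers unfamiliar with the user-level DP baseline this entry builds on, the following is a minimal sketch of the generic DP-FedAvg aggregation step: each client update is clipped to an L2 norm bound, Gaussian noise scaled to that bound is added to the sum, and the result is averaged. It does not implement BLUR or LUS. The function names, the `noise_multiplier` parameter, and the plain-list representation of updates are assumptions made for illustration.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale an update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_aggregate(updates, clip_norm, noise_multiplier, rng):
    """Average clipped client updates after adding Gaussian noise to their sum.

    The noise standard deviation is noise_multiplier * clip_norm, the usual
    Gaussian-mechanism calibration for a sum with sensitivity clip_norm.
    """
    clipped = [clip_update(u, clip_norm) for u in updates]
    n = len(clipped)
    dim = len(clipped[0])
    sigma = noise_multiplier * clip_norm
    return [
        (sum(u[i] for u in clipped) + rng.gauss(0.0, sigma)) / n
        for i in range(dim)
    ]


# Hypothetical usage: two client updates, clipping bound 1.0.
rng = random.Random(0)
noisy_mean = dp_aggregate([[3.0, 4.0], [0.0, 0.5]], 1.0, 1.1, rng)
```

Clipping bounds how much any single user can move the average, which is what makes the added noise sufficient for a user-level guarantee; it is also one source of the accuracy loss that the entry above sets out to reduce.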

Auditing Privacy Defenses in Federated Learning via Generative Gradient Leakage

Generative Gradient Leakage (GGL) is a new type of leakage that validates that private training data can still be leaked under certain defense settings.

Link:

http://arxiv.org/abs/2203.15696

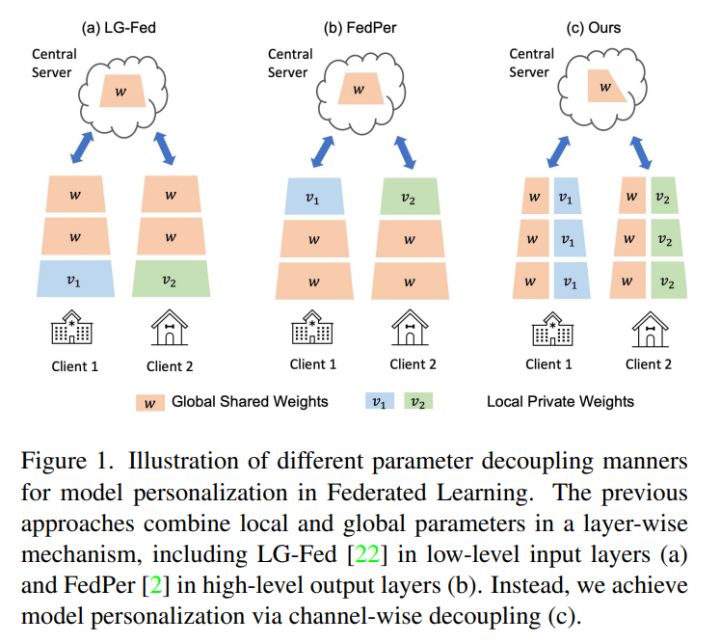

CD2-pFed: Cyclic Distillation-Guided Channel Decoupling for Model Personalization in Federated Learning

CD2-pFed is a novel Cyclic Distillation-guided Channel Decoupling framework that personalizes the global model in FL under various settings of data heterogeneity.

Link:

http://arxiv.org/abs/2203.15696

Closing the Generalization Gap of Cross-Silo Federated Medical Image Segmentation

FedSM proposes a novel training framework that avoids the client drift issue and, for the first time, closes the generalization gap relative to centralized training on medical image segmentation tasks.

Link:

http://arxiv.org/abs/2203.10144

2021

CVPR

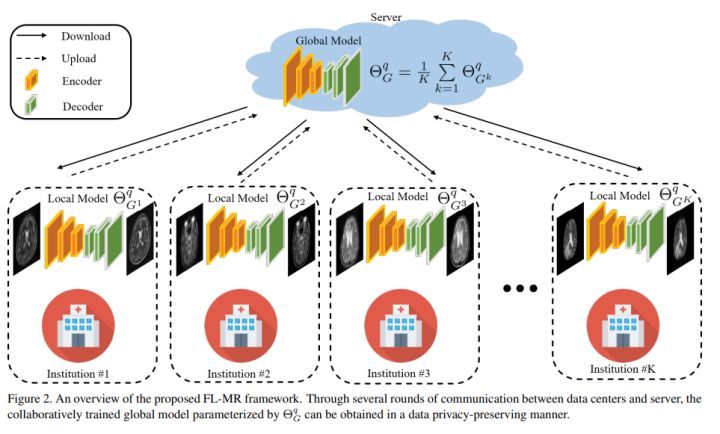

Multi-Institutional Collaborations for Improving Deep Learning-Based Magnetic Resonance Image Reconstruction Using Federated Learning

FL-MRCM proposes a federated learning (FL) based solution that takes advantage of the MR data available at different institutions while preserving patients' privacy.

Link:

https://arxiv.org/pdf/2103.02148.pdf

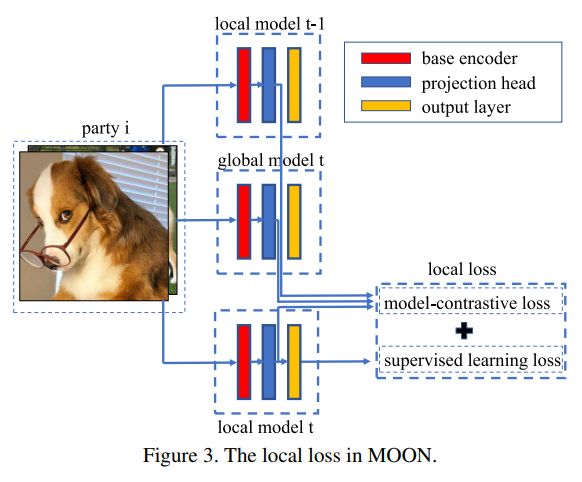

Model-Contrastive Federated Learning

MOON (model-contrastive federated learning): the key idea of MOON is to use the similarity between model representations to correct the local training of individual parties, i.e., to conduct contrastive learning at the model level.
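The model-level contrastive idea can be sketched as follows. For one input, the representation from the current local model is pulled toward the global model's representation (the positive) and pushed away from the previous local model's representation (the negative). This is a minimal pure-Python illustration, not the authors' code; the function names, the default temperature, and the plain-list vectors are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def model_contrastive_loss(z_local, z_global, z_prev, temperature=0.5):
    """Contrastive loss over representations of the same input.

    z_local:  representation from the local model being trained
    z_global: representation from the global model (positive pair)
    z_prev:   representation from the previous local model (negative pair)
    """
    pos = math.exp(cosine(z_local, z_global) / temperature)
    neg = math.exp(cosine(z_local, z_prev) / temperature)
    return -math.log(pos / (pos + neg))


# Hypothetical usage: a local representation aligned with the global one
# yields a lower loss than one aligned with the previous local model.
loss_good = model_contrastive_loss([1.0, 0.0], [1.0, 0.0], [0.0, 1.0])
loss_bad = model_contrastive_loss([1.0, 0.0], [0.0, 1.0], [1.0, 0.0])
```

In local training this term would be added, with a weighting coefficient, to the usual supervised loss.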

Explainer:

https://weisenhui.top/posts/17666.html

Link:

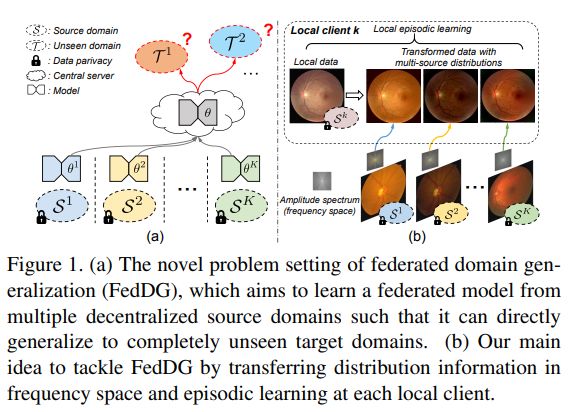

FedDG: Federated Domain Generalization on Medical Image Segmentation via Episodic Learning in Continuous Frequency Space

FedDG-ELCFS introduces a novel problem setting, federated domain generalization (FedDG), which aims to learn a federated model from multiple distributed source domains such that it can directly generalize to unseen target domains. For this problem it proposes Episodic Learning in Continuous Frequency Space (ELCFS), which enables each client to exploit multi-source data distributions under the challenging constraint of data decentralization.

Link:

https://arxiv.org/pdf/2103.06030.pdf

Soteria: Provable Defense Against Privacy Leakage in Federated Learning From Representation Perspective

Soteria proposes a defense against model inversion attacks in FL; the key idea is learning to perturb the data representation such that the quality of the reconstructed data is severely degraded while FL performance is maintained.

Link:

https://arxiv.org/pdf/2012.06043.pdf

ICCV

Federated Learning for Non-IID Data via Unified Feature Learning and Optimization Objective Alignment

FedUFO is a Unified Feature learning and Optimization objectives alignment method for non-IID FL.

Link:

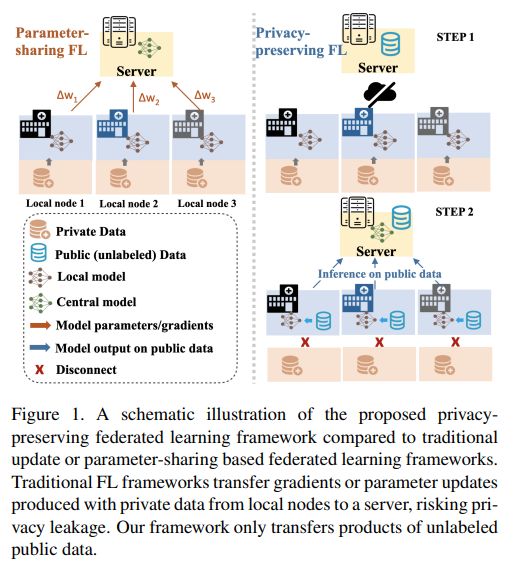

Ensemble Attention Distillation for Privacy-Preserving Federated Learning

FedAD proposes a new distillation-based FL framework that preserves privacy by design while also consuming substantially less network communication resources than current methods.

Link:

Collaborative Unsupervised Visual Representation Learning from Decentralized Data

FedU is a novel federated unsupervised learning framework.

Link:

https://arxiv.org/abs/2108.06492

MM

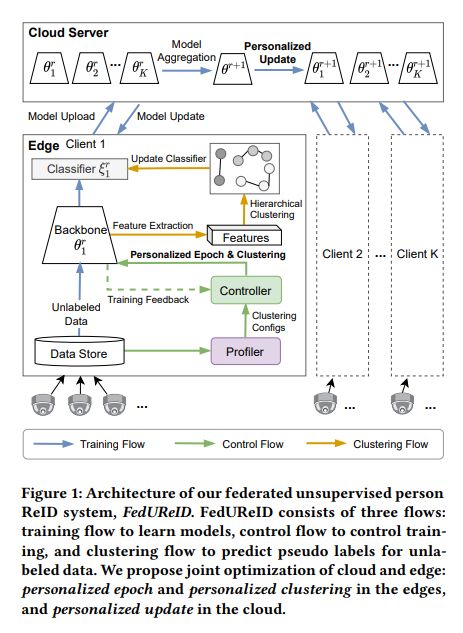

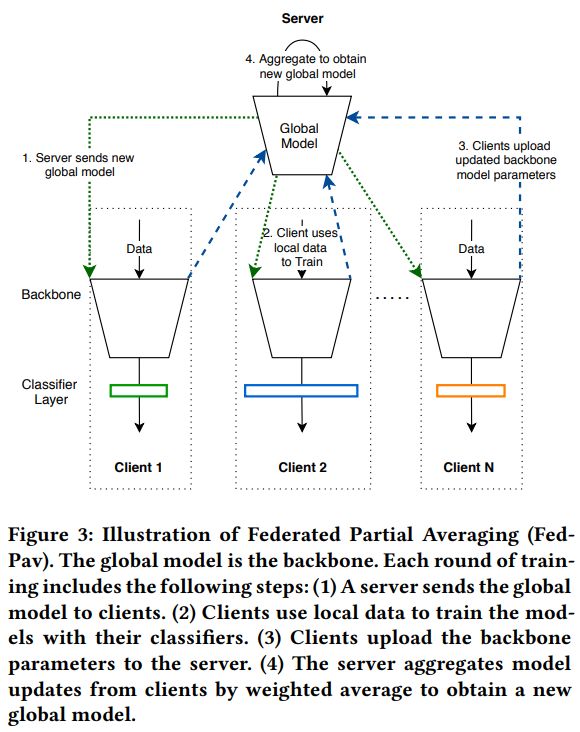

Joint Optimization in Edge-Cloud Continuum for Federated Unsupervised Person Re-identification

FedUReID is a federated unsupervised person ReID system that learns person ReID models without any labels while preserving privacy.

Link:

https://arxiv.org/abs/2108.06493

2020

ECCV

Federated Visual Classification with Real-World Data Distribution

Introduces two new large-scale datasets for species and landmark classification, with realistic per-user data splits that simulate real-world edge learning scenarios. The authors also develop two new algorithms (FedVC, FedIR) that intelligently resample and reweight over the client pool, bringing large improvements in accuracy and training stability.

Link:

https://arxiv.org/abs/2003.08082
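The reweighting side of this entry can be illustrated with class-level importance weights: each example's loss on a client is scaled by the ratio of the target class probability to that client's own class probability, so over-represented classes count less and under-represented ones count more. The sketch below is an illustration under that assumption rather than the paper's implementation; the function names and the dictionary-based inputs are hypothetical.

```python
def importance_weights(target_dist, client_counts):
    """Per-class weight: target probability / client probability.

    target_dist:   dict mapping label -> probability under the target distribution
    client_counts: dict mapping label -> number of examples on this client
    """
    total = sum(client_counts.values())
    return {
        label: target_dist[label] / (count / total)
        for label, count in client_counts.items()
        if count > 0  # classes absent from the client need no weight
    }


def reweighted_loss(per_example_losses, labels, weights):
    """Mean of the per-example losses, each scaled by its class weight."""
    weighted = [weights[y] * loss for loss, y in zip(per_example_losses, labels)]
    return sum(weighted) / len(weighted)


# Hypothetical usage: a client holding 3 examples of class 0 and 1 of class 1,
# reweighted toward a uniform target distribution.
weights = importance_weights({0: 0.5, 1: 0.5}, {0: 3, 1: 1})
loss = reweighted_loss([1.0, 1.0, 1.0, 1.0], [0, 0, 0, 1], weights)
```

With these numbers, class 0 gets a weight below 1 and class 1 a weight of 2, so the single minority example contributes as much to the client's loss as the three majority examples combined.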

MM

InvisibleFL: Federated Learning over Non-Informative Intermediate Updates against Multimedia Privacy Leakages

InvisibleFL proposes a privacy-preserving solution that avoids multimedia privacy leakages in federated learning.

Link:

https://dl.acm.org/doi/10.1145/3394171.3413923

Performance Optimization of Federated Person Re-identification via Benchmark Analysis

FedReID applies federated learning to person re-identification and optimizes its performance under the statistical heterogeneity of real-world scenarios.

Explainer:

FedReID: The First In-Depth Practice of Federated Learning for Person Re-identification - Zhihu

Link:

https://arxiv.org/abs/2008.11560

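For the full list of federated learning papers at top computer vision conferences and related information, see the link below:

References

List of papers at top computer vision conferences: https://github.com/youngfish42/Awesome-Federated-Learning-on-Graph-and-Tabular-Data#fl-in-top-cv-conferences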

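About the author:

Zhihu: 白小鱼. Currently pursuing a PhD in the Department of Computer Science at Shanghai Jiao Tong University; main research interests include federated learning and few-shot learning.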
