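(1) Recurrent neural networks (RNNs) process sequential data, for example natural language or stock-market and other financial time series.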

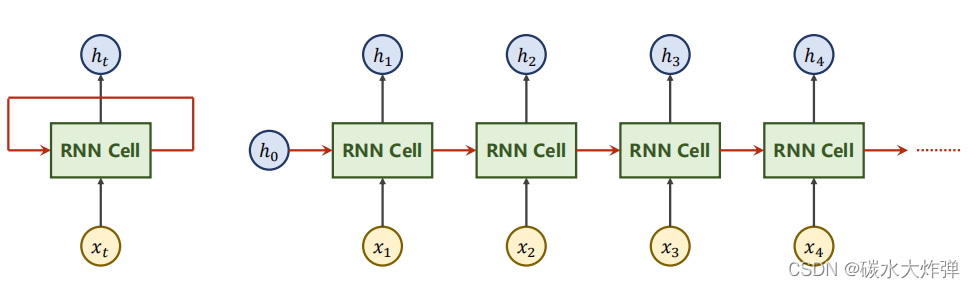

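(2) RNN structure: x1 and h0 are combined to produce h1, which is then fed into the next RNN Cell. All the RNN Cells in the figure below are the same cell, so they share one set of weights.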

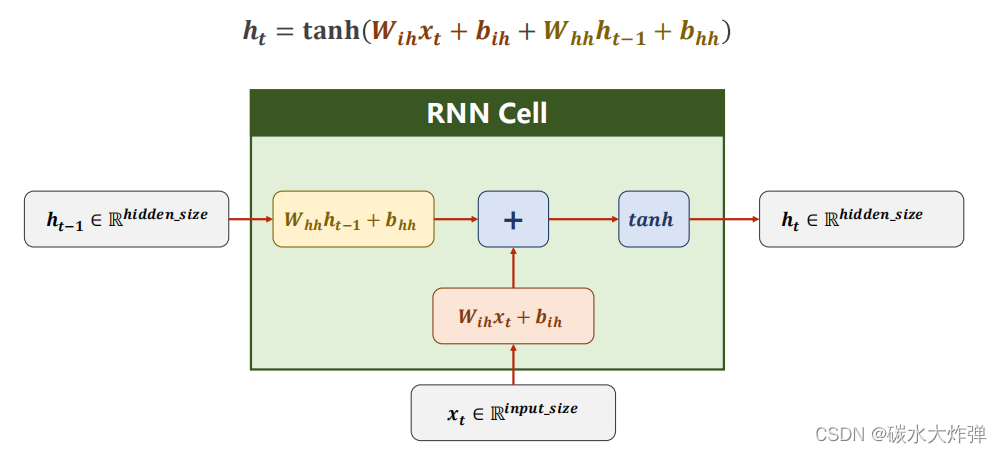

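(3) The computation inside an RNN Cell is shown in the figure below. Whh has shape hidden_size x hidden_size and Wih has shape hidden_size x input_size, so an RNN Cell is fully specified by two parameters: input_size and hidden_size.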

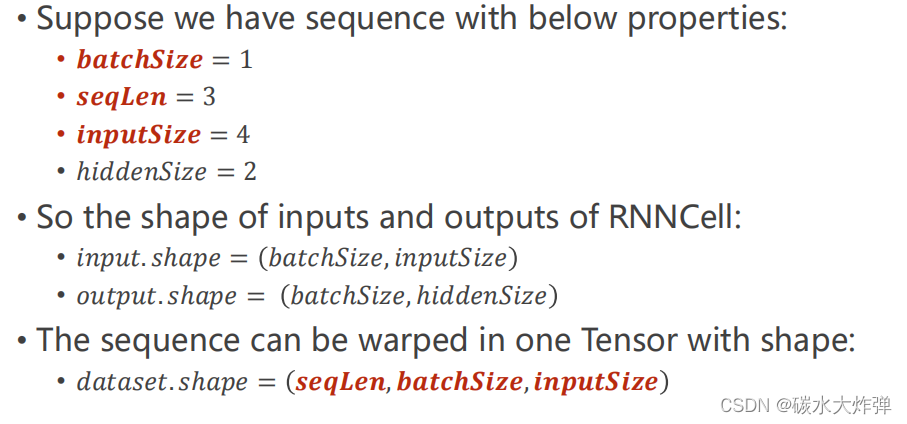
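The cell computation can be checked by hand. The sketch below assumes the figure shows the standard update h' = tanh(Wih·x + bih + Whh·h + bhh), which is the formula PyTorch documents for `torch.nn.RNNCell`; the variable names `manual` and `builtin` are only for illustration.

```python
import torch

torch.manual_seed(0)
input_size, hidden_size, batch_size = 4, 2, 1

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)
x = torch.randn(batch_size, input_size)
h = torch.zeros(batch_size, hidden_size)

# Parameter shapes follow directly from input_size and hidden_size
print(cell.weight_ih.shape)  # (hidden_size, input_size)
print(cell.weight_hh.shape)  # (hidden_size, hidden_size)

with torch.no_grad():
    # Manual update: tanh(x W_ih^T + b_ih + h W_hh^T + b_hh)
    manual = torch.tanh(x @ cell.weight_ih.T + cell.bias_ih
                        + h @ cell.weight_hh.T + cell.bias_hh)
    builtin = cell(x, h)

# True when the manual formula matches the built-in cell
print(torch.allclose(manual, builtin, atol=1e-6))
```

If the two results match, the cell really is just two linear maps followed by tanh, which is why input_size and hidden_size are enough to define it.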

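(4) Building an RNN Cell: assume batchSize=1 and seqLen=3 (the input sequence has 3 time steps), where inputSize is the input dimension and hiddenSize is the hidden-layer dimension.

Under these assumptions, the code for the RNN Cell is: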

import torch

batch_size = 1

seq_len = 3

input_size = 4

hidden_size = 2

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

# (seq, batch, features)

dataset = torch.randn(seq_len, batch_size, input_size)

hidden = torch.zeros(batch_size, hidden_size)

for idx, input in enumerate(dataset):

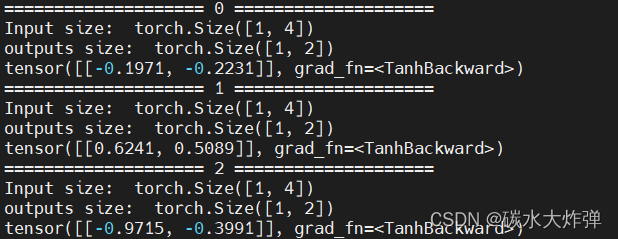

print('=' * 20, idx, '=' * 20)

print('Input size: ', input.shape)

hidden = cell(input, hidden)

print('outputs size: ', hidden.shape)

print(hidden)Input size为[1,4],hidden size为[1,2],输出结果:

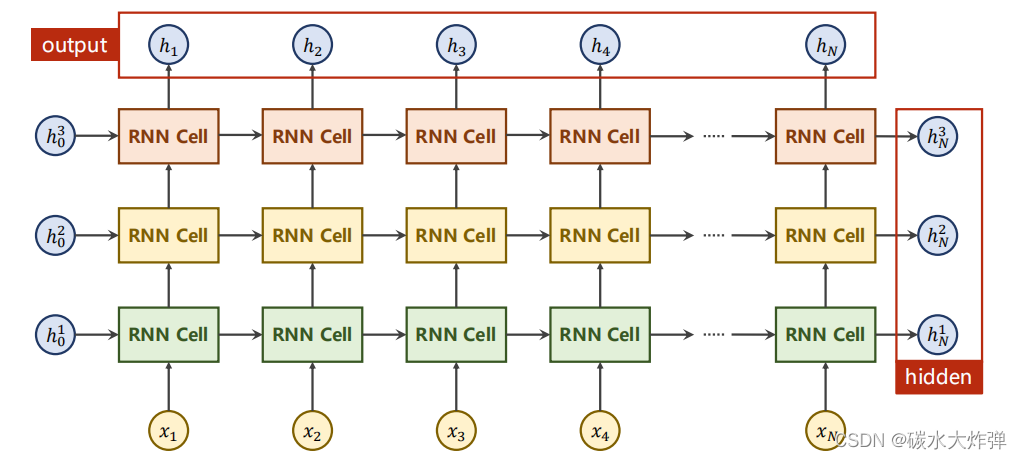

(5) Several different RNN Cells can be stacked into layers, as shown in the figure below, where each color represents one RNN Cell.

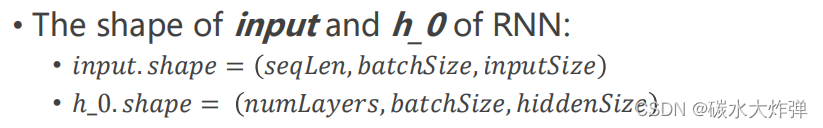

The shapes of the RNN input and h_0 are shown below; num_layers is the number of RNN layers.

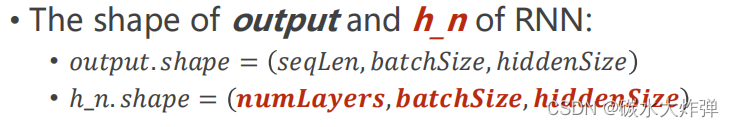

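The outputs are structured as follows: `out` has shape (seqLen, batchSize, hiddenSize) and holds the top layer's hidden state at every time step, while `hidden` has shape (numLayers, batchSize, hiddenSize) and holds each layer's final hidden state.

Under these assumptions, the code to build the RNN is: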

import torch

batch_size = 1

seq_len = 3

input_size = 4

hidden_size = 2

num_layers = 1

cell = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size,

num_layers=num_layers)

# (seqLen, batchSize, inputSize)

inputs = torch.randn(seq_len, batch_size, input_size)

hidden = torch.zeros(num_layers, batch_size, hidden_size)

out, hidden = cell(inputs, hidden)

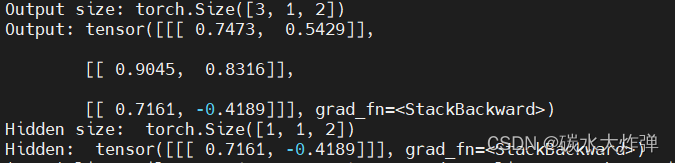

print('Output size:', out.shape)

print('Output:', out)

print('Hidden size: ', hidden.shape)

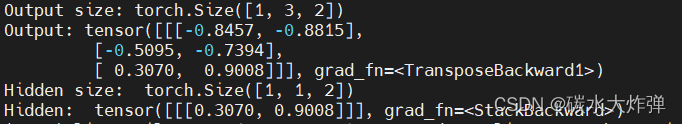

print('Hidden: ', hidden)输出结果:

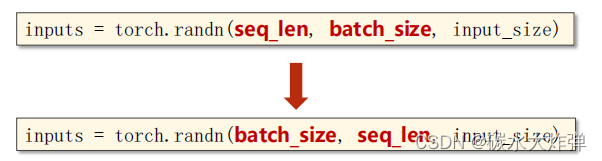
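To connect the two APIs, the sketch below copies the weights of a single-layer `torch.nn.RNN` into a `torch.nn.RNNCell` and loops over the time steps by hand. It assumes the default tanh nonlinearity and relies on the `weight_ih_l0`, `weight_hh_l0`, `bias_ih_l0`, and `bias_hh_l0` attributes that PyTorch exposes for layer 0; the names `looped` and `steps` are illustrative.

```python
import torch

torch.manual_seed(0)
seq_len, batch_size, input_size, hidden_size = 3, 1, 4, 2

rnn = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=1)
cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

# Give the cell exactly the same parameters as layer 0 of the RNN
with torch.no_grad():
    cell.weight_ih.copy_(rnn.weight_ih_l0)
    cell.weight_hh.copy_(rnn.weight_hh_l0)
    cell.bias_ih.copy_(rnn.bias_ih_l0)
    cell.bias_hh.copy_(rnn.bias_hh_l0)

inputs = torch.randn(seq_len, batch_size, input_size)
h0 = torch.zeros(1, batch_size, hidden_size)

with torch.no_grad():
    out, h_n = rnn(inputs, h0)

    # Manual loop: feed one time step at a time through the cell
    h = h0[0]
    steps = []
    for x_t in inputs:
        h = cell(x_t, h)
        steps.append(h)
    looped = torch.stack(steps)  # (seq_len, batch_size, hidden_size)

print(torch.allclose(out, looped, atol=1e-6))       # same per-step outputs
print(torch.allclose(out[-1], h_n[-1], atol=1e-6))  # last output equals final hidden state
```

With a single layer, `out` is simply the stack of hidden states from every step, and `hidden` is the last of them.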

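(6) With batch_first=True, batch_size must come first in both inputs and output. The hidden state keeps the shape (numLayers, batchSize, hiddenSize).

Testing batch_first=True: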

    import torch

    batch_size = 1
    seq_len = 3
    input_size = 4
    hidden_size = 2
    num_layers = 1

    cell = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size,
                        num_layers=num_layers, batch_first=True)

    # inputs shape with batch_first=True: (batchSize, seqLen, inputSize)
    inputs = torch.randn(batch_size, seq_len, input_size)
    # h_0 shape is unchanged: (numLayers, batchSize, hiddenSize)
    hidden = torch.zeros(num_layers, batch_size, hidden_size)

    out, hidden = cell(inputs, hidden)

    print('Output size:', out.shape)
    print('Output:', out)
    print('Hidden size: ', hidden.shape)
    print('Hidden: ', hidden)

The output shape changes from [3, 1, 2] to [1, 3, 2]:

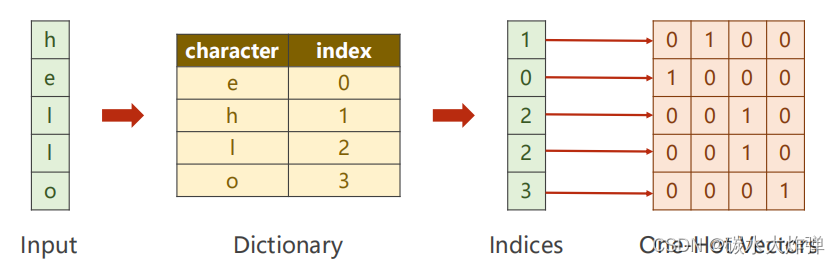
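The following sketch illustrates that batch_first only changes the layout, not the computation. It builds two RNNs with identical weights (via `load_state_dict`) and feeds them the same data in the two layouts; the names `rnn_sf` and `rnn_bf` are made up for this example, and batch_size is set to 2 so the transposition is visible.

```python
import torch

torch.manual_seed(0)
seq_len, batch_size, input_size, hidden_size = 3, 2, 4, 2

rnn_sf = torch.nn.RNN(input_size, hidden_size, num_layers=1)                    # sequence first
rnn_bf = torch.nn.RNN(input_size, hidden_size, num_layers=1, batch_first=True)  # batch first
rnn_bf.load_state_dict(rnn_sf.state_dict())  # share the same weights

x_sf = torch.randn(seq_len, batch_size, input_size)
x_bf = x_sf.transpose(0, 1).contiguous()     # (batch_size, seq_len, input_size)

with torch.no_grad():
    out_sf, h_sf = rnn_sf(x_sf)  # h_0 defaults to zeros when omitted
    out_bf, h_bf = rnn_bf(x_bf)

print(out_sf.shape, out_bf.shape)  # layouts differ in the first two dimensions
print(h_sf.shape, h_bf.shape)      # hidden-state layout is the same in both cases
print(torch.allclose(out_sf.transpose(0, 1), out_bf, atol=1e-6))
```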

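(7) One-hot vectors: N states are encoded with N bits that are each 0 or 1. Every state has its own representation in which exactly one bit is 1 and all others are 0.

For example, suppose we train a model to learn 'ohlol' from the input 'hello'. The characters must first be converted to codes: 'hello' contains 4 distinct characters, so we build a dictionary over these four characters and then represent the input 'hello' with one-hot vectors. The number of columns of the one-hot matrix is inputSize, that is, the number of distinct input characters.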

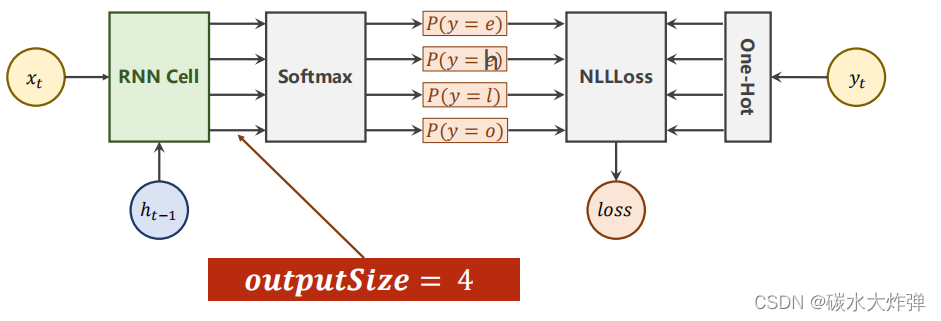
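As a small sketch of the encoding step, the dictionary and one-hot matrix for 'hello' can be built as follows. Using `sorted(set(text))` for the dictionary and `torch.nn.functional.one_hot` for the encoding are choices made for this illustration; `char2idx` is a hypothetical helper name.

```python
import torch
import torch.nn.functional as F

text = 'hello'

# Dictionary over the distinct characters, in alphabetical order
idx2char = sorted(set(text))
char2idx = {c: i for i, c in enumerate(idx2char)}

# Character indices for the input string
x_data = [char2idx[c] for c in text]

# One row per character, one column per dictionary entry
x_one_hot = F.one_hot(torch.tensor(x_data), num_classes=len(idx2char)).float()

print(idx2char)
print(x_data)
print(x_one_hot.shape)  # (seq_len, inputSize)
```

Each row of `x_one_hot` contains a single 1, and the number of columns equals the number of distinct characters.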

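Network construction: after each input passes through an RNN Cell, the output has outputSize=4, which amounts to classifying each output into one of the four characters. The activation and loss function that follow are the same as in a multi-class classification problem. The network structure is as follows:

Implementation: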

# 独热向量

import torch

input_size = 4

hidden_size = 4

batch_size = 1

idx2char = ['e', 'h', 'l', 'o']

x_data = [1, 0, 2, 2, 3]

y_data = [3, 1, 2, 3, 2]

one_hot_lookup = [[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 0, 1]]

x_one_hot = [one_hot_lookup[x] for x in x_data]

inputs = torch.Tensor(x_one_hot).view(-1, batch_size, input_size)

labels = torch.LongTensor(y_data).view(-1, 1)

#print('labels:', labels)

class Model(torch.nn.Module):

def __init__(self, input_size, hidden_size, batch_size):

super(Model, self).__init__()

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnncell = torch.nn.RNNCell(input_size=self.input_size,

hidden_size=self.hidden_size)

def forward(self, input, hidden):

hidden = self.rnncell(input, hidden)

return hidden

def init_hidden(self):

return torch.zeros(self.batch_size, self.hidden_size)

net = Model(input_size, hidden_size, batch_size)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.1)

for epoch in range(15):

loss = 0

optimizer.zero_grad()

hidden = net.init_hidden()

print('Predicted string: ', end='')

for input, label in zip(inputs, labels):

hidden = net(input, hidden)

loss += criterion(hidden, label)

_, idx = hidden.max(dim=1)

print(idx2char[idx.item()], end='')

loss.backward()

optimizer.step()

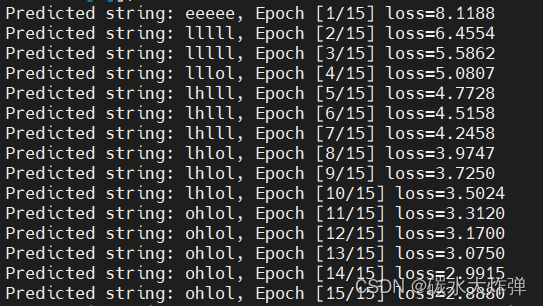

print(', Epoch [%d/15] loss=%.4f' % (epoch+1, loss.item()))输出结果:

(8) The same model implemented with torch.nn.RNN, adding the num_layers parameter:

import torch

input_size = 4

hidden_size = 4

num_layers = 1

batch_size = 1

seq_len = 5

idx2char = ['e', 'h', 'l', 'o']

x_data = [1, 0, 2, 2, 3]

y_data = [3, 1, 2, 3, 2]

one_hot_lookup = [[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 0, 1]]

x_one_hot = [one_hot_lookup[x] for x in x_data]

inputs = torch.Tensor(x_one_hot).view(seq_len, batch_size, input_size)

labels = torch.LongTensor(y_data)

class Model(torch.nn.Module):

def __init__(self, input_size, hidden_size, batch_size, num_layers=1):

super(Model, self).__init__()

self.num_layers = num_layers

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnn = torch.nn.RNN(input_size=self.input_size,

hidden_size=self.hidden_size,

num_layers=num_layers)

def forward(self, input):

hidden = torch.zeros(self.num_layers,

self.batch_size,

self.hidden_size)

out, _ = self.rnn(input, hidden)

return out.view(-1, self.hidden_size)

net = Model(input_size, hidden_size, batch_size, num_layers)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(15):

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

_, idx = outputs.max(dim=1)

idx = idx.data.numpy()

print('Predicted: ', ''.join([idx2char[x] for x in idx]), end='')

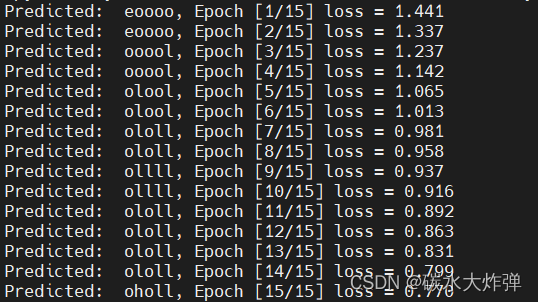

print(', Epoch [%d/15] loss = %.3f' % (epoch + 1, loss.item()))输出结果:

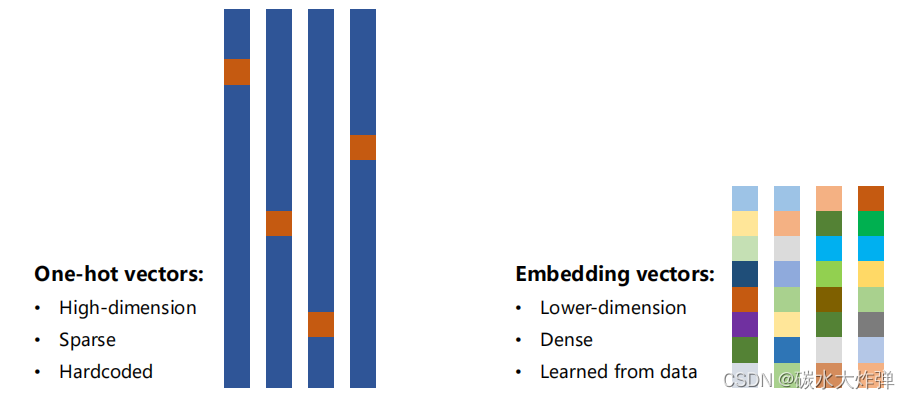

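(9) The feature matrix built from one-hot vectors is very sparse, high-dimensional, and takes up a lot of space, and it ignores any relationship between the encoded items. An embedding layer addresses these problems.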
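One way to see what an embedding layer does: looking up an index in `torch.nn.Embedding` gives the same result as multiplying the corresponding one-hot vector by the embedding weight matrix, but without ever materializing the sparse one-hot matrix. The sketch below checks this numerically; the sizes (4 characters, embedding size 10) are taken from the example in this section, and the names `looked_up` and `via_one_hot` are illustrative.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
num_chars, embedding_size = 4, 10

emb = torch.nn.Embedding(num_chars, embedding_size)
x = torch.tensor([[1, 0, 2, 2, 3]])  # (batch, seq_len), indices for 'hello'

with torch.no_grad():
    # Direct lookup: each index selects one row of emb.weight
    looked_up = emb(x)  # (batch, seq_len, embedding_size)

    # Equivalent dense computation: one-hot rows times the weight matrix
    via_one_hot = F.one_hot(x, num_classes=num_chars).float() @ emb.weight

print(looked_up.shape)
print(torch.allclose(looked_up, via_one_hot))
```

Because the rows of `emb.weight` are trainable, characters that behave similarly can end up with nearby vectors, which a fixed one-hot code cannot express.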

Implementation:

import torch

num_class = 4

input_size = 4

hidden_size = 8

embedding_size = 10

num_layers = 2

batch_size = 1

seq_len = 5

idx2char = ['e', 'h', 'l', 'o']

x_data = [[1, 0, 2, 2, 3]] # (batch, seq_len)

y_data = [3, 1, 2, 3, 2] # (batch * seq_len)

inputs = torch.LongTensor(x_data)

labels = torch.LongTensor(y_data)

class Model(torch.nn.Module):

def __init__(self):

super(Model, self).__init__()

self.emb = torch.nn.Embedding(input_size, embedding_size)

self.rnn = torch.nn.RNN(input_size=embedding_size,

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True)

self.fc = torch.nn.Linear(hidden_size, num_class)

def forward(self, x):

hidden = torch.zeros(num_layers, x.size(0), hidden_size)

x = self.emb(x) # (batch, seqLen, embeddingSize)

x, _ = self.rnn(x, hidden)

x = self.fc(x)

return x.view(-1, num_class)

net = Model()

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(15):

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

_, idx = outputs.max(dim=1)

idx = idx.data.numpy()

print('Predicted: ', ''.join([idx2char[x] for x in idx]), end='')

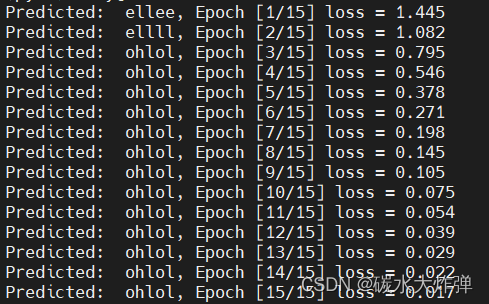

print(', Epoch [%d/15] loss = %.3f' % (epoch + 1, loss.item()))输出结果:
