Paper URL: [2211.08927] Benchmarking Graph Neural Networks for FMRI analysis (arxiv.org)

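⭐Not a survey in the strict sense; it reads more like an analysis paper.

The English is typed entirely by hand! It summarizes and paraphrases the original paper, so some spelling and grammar mistakes are probably unavoidable; corrections in the comments are welcome! This post is closer to personal notes, so read with caution.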


1. TL;DR

1.1. Takeaways

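(1) Finding someone who can explain node features in plain language is not easy (wipes away tears). (As for people who say node features are node representations, seriously, heh.)

(2) It did broaden my horizons by introducing me to AST-GCN, but... looking at the title, is this really suitable for fMRI data? Are our brains really that random? Surely the electrical signals don't do a random walk, right?

(3) So your contribution is moving each baseline over and testing it?

(4)⭐ "A common pitfall of hyperparameter tuning is to run the search based on performance on the final test set. This leads to an overly optimistic estimate of the model's generalization ability."

(5) You really like asking questions, don't you?

(6) The focus really is on comparing baselines; the authors conclude that none of the GNNs performs particularly well and that the choice of FC threshold matters a lot. But do we really need to care this much about whether a baseline works? To me, a baseline just shows what earlier work established as edible: crucian carp is edible, goldfish is not. But raw crucian carp doesn't taste good either, does it? Doesn't it all depend on how it is cooked afterwards: steamed, red-braised, braised in yellow sauce, fried, boiled, sweet-and-sour, and so on?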

1.2. Paper summary figure

2. Paragraph-by-paragraph close reading

2.1. Abstract

①They benchmark 5 GNN baselines and compare them with kernel-based and structure-agnostic (non-graph) models such as a 1D CNN. They find that the GNNs perform worse than the CNN

②They argue that GNN performance is limited by graph construction

2.2. Introduction

①GNNs are used for phenotype prediction, disease diagnosis, and task inference

②They focus on benchmarking the baselines

2.3. Background

2.3.1. Graph convolutional networks

①A brief introduction to GCN (uh.....)

2.3.2. Graph attention networks

①....

2.3.3. Graph isomorphic network

2.3.4. Spatio-temporal graph convolution network

①ST-GCNs extract temporal features with 1D convolutions, LSTMs, or similar modules, and spatial features with a GCN

2.3.5. Adaptive spatio-temporal graph convolution network

①AST-GCNs can randomly initialize the graph structure and then learn it adaptively

2.4. Methods

2.4.1. Graph creation

①The most popular way of setting node features in a static brain graph: the node's row of the Pearson correlation matrix (I have to say that, conceptually, defining it this way is really ** weird)

②The most popular way of setting edge features in a static brain graph: the Pearson correlation

③"Pooling": a threshold-ratio hyperparameter

④Node features of a dynamic graph: the time series of each ROI

⑤Edge features/pooling strategy of a dynamic graph: the same as for static graphs in ST-GCN; random initialization in AST-GCN

⑥Graph construction of 5 baselines:
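A minimal plain-Python sketch of the static-graph recipe in ① to ③: node features are the rows of the Pearson FC matrix, edge weights are the Pearson coefficients, and a sparsity ratio keeps only the strongest edges. Assumptions: `build_static_graph` is a hypothetical helper, edges are ranked by absolute correlation, and self-loops are excluded from the adjacency; the paper's exact thresholding rule may differ.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def build_static_graph(ts, sparsity):
    """Hypothetical helper. ts: one time series per ROI; sparsity in [0, 1).

    Returns (node_features, adjacency):
    - node_features[i] is row i of the Pearson FC matrix;
    - adjacency keeps the (1 - sparsity) fraction of ROI pairs with the largest |r|,
      weighted by r itself (assumption: rank by absolute value, no self-loops).
    """
    n = len(ts)
    fc = [[1.0 if i == j else pearson(ts[i], ts[j]) for j in range(n)] for i in range(n)]
    pairs = sorted(
        ((abs(fc[i][j]), i, j) for i in range(n) for j in range(i + 1, n)),
        reverse=True,
    )
    n_keep = round(len(pairs) * (1 - sparsity))
    adj = [[0.0] * n for _ in range(n)]
    for _, i, j in pairs[:n_keep]:
        adj[i][j] = adj[j][i] = fc[i][j]
    return fc, adj


# Toy example: 4 ROIs, 5 time points, 50% sparsity -> 3 of the 6 ROI pairs survive.
ts = [[1, 2, 3, 4, 5], [2, 4, 6, 8, 10.5], [5, 3, 4, 1, 2], [1, 3, 2, 5, 4]]
node_features, adjacency = build_static_graph(ts, sparsity=0.5)
```

Sweeping `sparsity` over a grid is how the FC threshold becomes a hyperparameter.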

2.4.2. Graph Neural Networks

(1)GCNs

①Introducing spectral convolution and how it evolves into spatial convolution
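As a concrete reference point for the spatial form, here is a minimal plain-Python sketch of the widely used Kipf & Welling GCN propagation rule, $H' = \mathrm{ReLU}(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2} H W)$ with $\tilde{A} = A + I$. It assumes non-negative edge weights (signed Pearson weights would need, e.g., absolute values before the square root), and `gcn_layer` is an illustrative name, not the benchmark's code.

```python
import math


def matmul(a, b):
    """Dense matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj, h, w):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 @ H @ W), assuming non-negative adj."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]  # >= 1 thanks to the self-loop
    a_hat = [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(a_hat, h), w)
    return [[max(0.0, v) for v in row] for row in out]


# Nodes 0 and 1 are connected, node 2 is isolated; 1-d features and identity weight.
adj = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
h = [[1.0], [3.0], [5.0]]
print(gcn_layer(adj, h, [[1.0]]))  # [[2.0], [2.0], [5.0]]
```

Connected nodes 0 and 1 end up with the average of their features, while the isolated node keeps its own.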

(2)GATs

①Introducing GAT

②In their experiments, they set M = 1 and use LeakyReLU
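A minimal single-head GAT layer in plain Python. This assumes M is the number of attention heads (so M = 1 means one head) and a LeakyReLU negative slope of 0.2 as in the original GAT paper; both are assumptions, and the output nonlinearity is omitted for brevity. Each node attends over its neighbours plus itself: $e_{ij} = \mathrm{LeakyReLU}(a^\top [W h_i \,\|\, W h_j])$, normalised with a softmax over $j$.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_layer(adj, h, w, a):
    """Single-head graph attention layer (illustrative sketch).

    adj: n x n adjacency (nonzero = edge); h: n x f_in features;
    w: f_in x f_out weight; a: attention vector of length 2 * f_out.
    """
    n, f_in, f_out = len(adj), len(w), len(w[0])
    wh = [[sum(h[i][k] * w[k][j] for k in range(f_in)) for j in range(f_out)] for i in range(n)]
    out = []
    for i in range(n):
        nbrs = [j for j in range(n) if adj[i][j] != 0 or j == i]  # neighbours + self-loop
        left = sum(a[k] * wh[i][k] for k in range(f_out))
        scores = [leaky_relu(left + sum(a[f_out + k] * wh[j][k] for k in range(f_out)))
                  for j in nbrs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        alpha = [e / z for e in exps]
        out.append([sum(al * wh[j][k] for al, j in zip(alpha, nbrs)) for k in range(f_out)])
    return out


adj = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
h = [[1.0], [3.0], [5.0]]
uniform = gat_layer(adj, h, [[1.0]], a=[0.0, 0.0])  # zero attention vector -> plain mean
focused = gat_layer(adj, h, [[1.0]], a=[0.0, 1.0])  # favours neighbours with larger W h_j
```

With a zero attention vector GAT reduces to mean aggregation; a learned `a` lets each node weight its neighbours unequally.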

(3)GINs

①Introducing GIN
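A minimal GIN layer sketch in plain Python, following the standard GIN update $h_v' = \mathrm{MLP}\big((1+\epsilon)\, h_v + \sum_{u \in \mathcal{N}(v)} h_u\big)$. The `toy_mlp` below is a hypothetical stand-in for a learned multi-layer perceptron, and edge weights are ignored (only the presence of an edge matters); how the benchmark handles weighted FC edges in GIN is an assumption not covered by these notes.

```python
def gin_layer(adj, h, mlp, eps=0.0):
    """GIN update: mlp((1 + eps) * h_v + sum of neighbour features), unweighted sum."""
    n = len(adj)
    out = []
    for v in range(n):
        agg = [(1 + eps) * x for x in h[v]]
        for u in range(n):
            if u != v and adj[v][u] != 0:
                agg = [s + x for s, x in zip(agg, h[u])]
        out.append(mlp(agg))
    return out


def toy_mlp(x):
    """Hypothetical stand-in for a learned MLP: one ReLU(2x - 1) layer."""
    return [max(0.0, 2 * v - 1) for v in x]


adj = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
h = [[1.0], [3.0], [5.0]]
print(gin_layer(adj, h, lambda x: x))  # [[4.0], [4.0], [5.0]]
print(gin_layer(adj, h, toy_mlp, eps=0.5))
```

The sum (rather than mean) aggregation is what gives GIN its injectivity argument; `eps` lets the node weight its own feature differently from its neighbours'.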

(4)ST-GCNs

①Node feature: whole time series

②It seems M is never defined here
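To make the "temporal feature extractor + GCN" recipe from 2.3.4 concrete, here is a minimal one-block sketch in plain Python: a "valid" 1D convolution along each ROI's time series, followed by a graph convolution applied independently at every time step. It assumes a single channel, a fixed kernel, and an already-normalised adjacency `adj_hat`; real ST-GCNs stack several such blocks with learned multi-channel kernels and nonlinearities, and the sketch makes no attempt to interpret M.

```python
def temporal_conv(x, kernel):
    """'Valid' 1D convolution along time (cross-correlation, as in deep-learning libraries)."""
    k = len(kernel)
    return [sum(kernel[j] * x[t + j] for j in range(k)) for t in range(len(x) - k + 1)]


def spatial_conv(adj_hat, signals):
    """Graph convolution at every time step: out[i][t] = sum_j adj_hat[i][j] * signals[j][t]."""
    n, steps = len(signals), len(signals[0])
    return [[sum(adj_hat[i][j] * signals[j][t] for j in range(n)) for t in range(steps)]
            for i in range(n)]


def st_block(ts, adj_hat, kernel):
    """One spatio-temporal block: temporal features first, then spatial mixing."""
    temporal = [temporal_conv(x, kernel) for x in ts]
    return spatial_conv(adj_hat, temporal)


ts = [[1, 2, 3, 4], [4, 3, 2, 1]]            # 2 ROIs, 4 time points (whole series as input)
adj_hat = [[1.0, 0.0], [0.5, 0.5]]           # toy normalised adjacency
out = st_block(ts, adj_hat, kernel=[-1, 1])  # first difference along time
```

Here ROI 1 averages its own rising-then-falling difference signal with ROI 0's, so the two cancel and its output is all zeros.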

(5)AST-GCNs

①⭐"Adaptive learning of the graph structure is a growing research area in the GNN field"
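One common way to learn the graph structure adaptively, used for example in Graph WaveNet, is $\tilde{A}_{\mathrm{adp}} = \mathrm{SoftMax}(\mathrm{ReLU}(E_1 E_2^\top))$, where $E_1, E_2$ are randomly initialised node-embedding matrices trained by backpropagation. The plain-Python sketch below shows only the forward pass; that the benchmarked AST-GCN uses exactly this formulation is an assumption, and `adaptive_adjacency` is a hypothetical name.

```python
import math
import random


def adaptive_adjacency(e1, e2):
    """Row-wise softmax of ReLU(E1 @ E2^T): a dense, learnable adjacency."""
    n = len(e1)
    out = []
    for i in range(n):
        logits = [max(0.0, sum(a * b for a, b in zip(e1[i], e2[j]))) for j in range(n)]
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]
        z = sum(exps)
        out.append([e / z for e in exps])  # each row sums to 1
    return out


rng = random.Random(0)
n_rois, dim = 4, 3
# Random initialisation: no FC matrix or threshold is needed to define the graph.
e1 = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_rois)]
e2 = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_rois)]
adj = adaptive_adjacency(e1, e2)
```

Because the graph comes from trainable embeddings, it starts out random and is shaped only by the training signal, which is exactly what the "random initialization" in 2.4.1 ⑤ refers to.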

2.4.3. Graph Readout

①They adopt MEAN, MEAN||MAX, and SUM readout methods
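The three readouts can be sketched in plain Python as follows (the mode strings are hypothetical names). MEAN||MAX concatenates the mean-pooled and max-pooled vectors, so it doubles the size of the graph embedding.

```python
def readout(h, mode="mean"):
    """Graph-level readout over node embeddings h (n_nodes x dim)."""
    dims = range(len(h[0]))
    mean = [sum(row[d] for row in h) / len(h) for d in dims]
    if mode == "mean":
        return mean
    if mode == "sum":
        return [sum(row[d] for row in h) for d in dims]
    if mode == "mean_max":  # MEAN || MAX: concatenation of the two pooled vectors
        return mean + [max(row[d] for row in h) for d in dims]
    raise ValueError(f"unknown readout mode: {mode}")


h = [[1.0, 4.0], [3.0, 0.0]]
print(readout(h, "mean"))      # [2.0, 2.0]
print(readout(h, "sum"))       # [4.0, 4.0]
print(readout(h, "mean_max"))  # [2.0, 2.0, 3.0, 4.0]
```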

2.5. Data & Experimental Setup

2.5.1. Datasets

(1)ABIDE I/II

①Sites: ABIDE I (17) + ABIDE II (19) = 29, hahahaha, so some sites took part twice

②Sample: 1207 with 558 TD and 649 ASD (the largest 9 sites)

③Preprocessing: CPAC

④Atlas: craddock-200

(2)Rest-meta-MDD

①Cohorts (?): 25

②Sample: 1453 with 628 HC and 825 MDD (the largest 9 sites)

③Preprocessing: DPARSF

④Atlas: Harvard-Oxford 112

(3)UKBioBank

①Sample: 5500 with 2750 males and 2750 females

②Atlas: AAL 116

2.5.2. Model selection & assessment

①⭐Tuning hyperparameters based on test-set performance yields an overly optimistic estimate of the model's generalization. Thus, they search hyperparameters on a validation set

②Cross-validation: 5-fold

③Data split: 85/15 training/validation split for ABIDE and MDD; training set sizes of [500, 1000, 2000, 5000] on UKBioBank

pitfall n. hidden danger; trap
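A minimal plain-Python sketch of this selection protocol, assuming 5 outer folds and an 85/15 train/validation split inside each training portion (the paper's exact nesting may differ). `cross_validate`, `fit_and_score`, and the toy `threshold_accuracy` model are hypothetical names. The point is the one the pitfall warns about: candidates are ranked only on the validation split, and each test fold is scored exactly once.

```python
import random


def k_fold_indices(n, k, rng):
    """Shuffle 0..n-1 and deal the indices into k disjoint folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cross_validate(data, candidates, fit_and_score, k=5, val_frac=0.15, seed=0):
    """Outer k-fold CV; hyperparameters are chosen on a validation split only."""
    rng = random.Random(seed)
    folds = k_fold_indices(len(data), k, rng)
    test_scores = []
    for f in range(k):
        test = [data[i] for i in folds[f]]
        rest = [data[i] for g in range(k) if g != f for i in folds[g]]
        rng.shuffle(rest)
        n_val = max(1, round(val_frac * len(rest)))
        val, train = rest[:n_val], rest[n_val:]
        # Model selection never looks at the test fold...
        best = max(candidates, key=lambda hp: fit_and_score(train, val, hp))
        # ...which is scored exactly once, with the selected hyperparameter.
        test_scores.append(fit_and_score(train, test, best))
    return test_scores


def threshold_accuracy(train, eval_set, threshold):
    """Hypothetical toy 'model': predicts x > threshold (it ignores train)."""
    return sum((x > threshold) == y for x, y in eval_set) / len(eval_set)


data = [(i / 100, i / 100 > 0.5) for i in range(100)]
scores = cross_validate(data, [0.3, 0.5, 0.7], threshold_accuracy)
```

The reported number would be the mean (and spread) of `scores`; selecting `best` on `test` instead of `val` is precisely the optimistic bias described above.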

2.5.3. Non-graph baselines

①Structure-agnostic baselines: an MLP and a support vector machine with a radial basis function kernel (SVM-rbf), trained on the lower-triangular part of each subject's static FC matrix; a 1D CNN trained on the dynamic time series
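A minimal plain-Python sketch of how the static input for the MLP and SVM-rbf baselines can be built, plus the RBF kernel the SVM uses. The helper names are hypothetical, and dropping the diagonal (always 1 for Pearson FC) is an assumption. It also shows why these baselines are high-dimensional: with the AAL 116 atlas used on UKBioBank, each subject becomes a vector of 116 × 115 / 2 = 6670 correlations.

```python
import math


def lower_triangle(fc):
    """Strictly-lower triangle of a symmetric FC matrix as a flat feature vector.

    Assumption: the diagonal (always 1 for Pearson FC) is excluded.
    """
    return [fc[i][j] for i in range(len(fc)) for j in range(i)]


def rbf_kernel(x, y, gamma):
    """RBF kernel k(x, y) = exp(-gamma * ||x - y||^2), as used by an SVM-rbf."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


fc = [[1.0, 0.2, 0.3], [0.2, 1.0, 0.4], [0.3, 0.4, 1.0]]
features = lower_triangle(fc)            # [0.2, 0.3, 0.4]
n_rois = 116                             # AAL 116, as used for UKBioBank
n_features = n_rois * (n_rois - 1) // 2  # 6670 features per subject
```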

2.6. Results

2.6.1. Disorder diagnosis

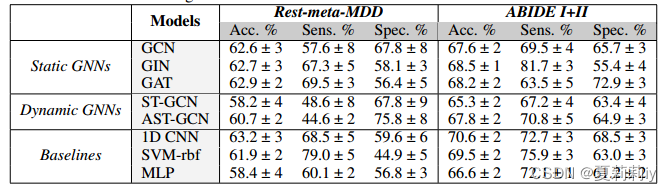

①Comparison table:

There is no clear winner, and static methods outperform dynamic ones

2.6.2. Scaling performance on the UKBioBank

①Discussing the results on UKBioBank:

2.6.3. Graph diffusion improves learning on noisy graphs

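①FC thresholds: sparsity from 50% to 95% in steps of 5%

②⭐Sparsified graphs are noisy; graph diffusion convolution (GDC) may alleviate this problem with:

$$S = \sum_{k=0}^{\infty} \theta_k T^k$$

where $\theta_k$ denotes the weighting coefficients and $T$ denotes the generalized transition matrix. There are several choices for both, e.g., $T_{\mathrm{rw}} = A D^{-1}$ or $T_{\mathrm{sym}} = D^{-1/2} A D^{-1/2}$, and heat-kernel coefficients $\theta_k = e^{-t} \frac{t^k}{k!}$ or personalized PageRank coefficients $\theta_k = \alpha (1-\alpha)^k$.

③The effect of GDC on GCN with heat-kernel coefficients and k = 2:

The model is sensitive to GDC under low sparsification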
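A minimal plain-Python sketch of GDC with heat-kernel coefficients, $S = \sum_k \theta_k T^k$ with $\theta_k = e^{-t} t^k / k!$ and $T = D^{-1/2} A D^{-1/2}$, truncated to a finite number of terms and then sparsified by dropping entries below a threshold ε. The values of `t`, `n_terms`, and `eps` are illustrative assumptions, not the paper's settings, and the sketch assumes non-negative edge weights. The reference GDC method includes further steps (e.g., self-loops and re-normalising the sparsified matrix) that are omitted here. The toy path graph shows the key effect: diffusion creates a weighted edge between nodes 0 and 2, which were not directly connected.

```python
import math


def matmul(a, b):
    """Dense matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gdc_heat(adj, t=1.5, n_terms=12, eps=1e-3):
    """Heat-kernel graph diffusion, truncated to n_terms, then eps-sparsified.

    S = sum_k theta_k T^k, theta_k = exp(-t) t^k / k!, T = D^-1/2 A D^-1/2.
    Assumes non-negative edge weights.
    """
    n = len(adj)
    deg = [sum(row) for row in adj]
    T = [[adj[i][j] / math.sqrt(deg[i] * deg[j]) if deg[i] > 0 and deg[j] > 0 else 0.0
          for j in range(n)] for i in range(n)]
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # T^0 = I
    s = [[0.0] * n for _ in range(n)]
    for k in range(n_terms):
        theta = math.exp(-t) * t ** k / math.factorial(k)
        s = [[s[i][j] + theta * power[i][j] for j in range(n)] for i in range(n)]
        power = matmul(power, T)
    # Epsilon sparsification: drop tiny diffusion weights to get a sparse graph back.
    return [[v if v >= eps else 0.0 for v in row] for row in s]


adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # path graph 0 - 1 - 2
s = gdc_heat(adj)
```

Because each entry of `s` aggregates many weighted walks rather than a single thresholded correlation, the diffused graph is smoother and less sensitive to which individual edges survived sparsification.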

2.7. Discussion

①Can't you split this into paragraphs?

②Discussions......

3. Supplementary knowledge

3.1. AST-GCN

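AST-GCN (Attention Spatial-Temporal Graph Convolutional Networks) is a spatio-temporal graph convolutional network combined with an attention mechanism. It is mainly used to process and analyze data with spatial and temporal correlations, such as traffic flow forecasting and violent behavior recognition in aerial videos. Note that this attention-based model shares its acronym with, but is not the same as, the adaptive spatio-temporal GCN benchmarked in the paper (see 2.3.5), which learns the graph structure itself.

In traffic flow forecasting, AST-GCN consists of three independent components that model the recent, daily-periodic, and weekly-periodic dependencies of traffic flow, respectively. Its main contribution is to capture spatial correlations between locations with spatial attention and temporal correlations between time steps with temporal attention. AST-GCN also includes a spatio-temporal convolution module, made up of a spatial graph convolution and a temporal convolution, and achieved strong results on real-world highway traffic flow datasets.

In violent behavior recognition from aerial videos, AST-GCN is used to address the motion blur and large scale variations of targets in aerial imaging. The method first uses a keyframe detection network for initial localization, and then extracts sequence features with the AST-GCN network to confirm the behavior.

AST-GCN offers new ideas and methods for processing and analyzing data with complex spatio-temporal correlations and has broad application prospects. For a deeper understanding of its principles, implementation details, and applications, consult the relevant papers and materials.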

3.2. graph diffusion convolution (GDC)

Further reading: Graph diffusion convolution: Graph_Diffusion_Convolution - CSDN blog

Original paper: 23c894276a2c5a16470e6a31f4618d73-Paper.pdf (neurips.cc)

4. Reference List

ElGazzar, A., Thomas, R. & Wingen, G. (2022) 'Benchmarking Graph Neural Networks for FMRI analysis', arXiv: 2211.08927.

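This paper compares the performance of 5 graph neural networks (GNNs) on fMRI analysis and finds that they underperform a conventional convolutional neural network (CNN). The authors point out that GNNs are limited by graph construction and stress that tuning hyperparameters on the test set leads to overly optimistic estimates of generalization. Graph diffusion convolution also shows promise for learning on noisy graphs.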

