GLoRIA Close Reading, 2024-03-14 (Part 1)

1. Abstract

In recent years, the growing utilization of medical imaging has placed an increasing burden on radiologists. Deep learning provides a promising solution for automatic medical image analysis and clinical decision support. However, the large-scale manually labeled datasets required to train deep neural networks are difficult and expensive to obtain for medical images. The purpose of this work is to develop label-efficient multimodal medical imaging representations by leveraging radiology reports. We propose an attention-based framework for learning global and local representations by contrasting image sub-regions and words in the paired report. In addition, we propose methods to leverage the learned representations for various downstream medical image recognition tasks with limited labels. Our results demonstrate high performance and label-efficiency for image-text retrieval, classification (fine-tuning and zero-shot settings) and segmentation.

Core idea: an efficient way to extract supervision for training the encoders → directly use the paired (text, image) data.

2. Introduction

Advancements in medical imaging technologies have revolutionized healthcare practices and improved patient outcomes. However, the growing number of imaging studies in recent years places an ever-increasing burden on radiologists, affecting the quality and speed of clinical decision making. While deep learning and computer vision provide a promising solution for automating medical image analysis, annotating medical imaging datasets requires domain expertise and is cost-prohibitive at scale. Therefore, building effective medical imaging models is hindered by the lack of large-scale manually labeled datasets.

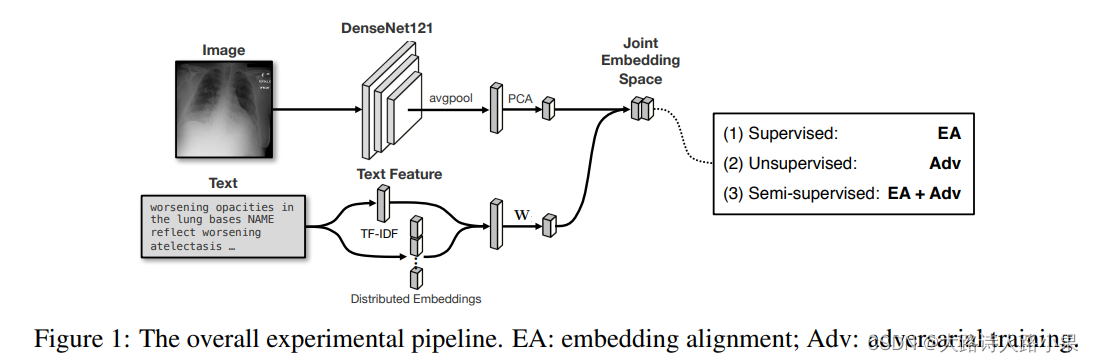

Medical images are usually accompanied by radiology reports. Several recent works use these reports to provide supervision signals and learn multimodal representations by maximizing the mutual information between the global representations of the paired image and report [13, 3, 41, 40].


The authors list several existing medical multimodal models:

[13] Hsu, T.M.H., Weng, W.H., Boag, W., McDermott, M. and Szolovits, P., 2018. Unsupervised multimodal representation learning across medical images and reports. arXiv preprint arXiv:1811.08615.

[3] Chauhan, G., Liao, R., Wells, W., Andreas, J., Wang, X., Berkowitz, S., Horng, S., Szolovits, P. and Golland, P., 2020. Joint modeling of chest radiographs and radiology reports for pulmonary edema assessment. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part II 23 (pp. 529-539). Springer International Publishing.

[41] Zhang, Z., Chen, P., Sapkota, M. and Yang, L., 2017. TandemNet: Distilling knowledge from medical images using diagnostic reports as optional semantic references. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, September 11–13, 2017, Proceedings, Part III 20 (pp. 320-328). Springer International Publishing.

[40] Zhang, Y., Jiang, H., Miura, Y., Manning, C.D. and Langlotz, C.P., 2022, December. Contrastive learning of medical visual representations from paired images and text. In Machine Learning for Healthcare Conference (pp. 2-25). PMLR.
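The works above train on global representations only. A common way to turn "maximizing mutual information" into a trainable objective is a bidirectional InfoNCE-style contrastive loss, as used in [40]. The numpy sketch below is illustrative only: the temperature of 0.1 and the helper names are assumptions, not values or code from the paper.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so that dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def log_softmax(logits):
    """Row-wise log-softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def global_contrastive_loss(img_emb, txt_emb, temperature=0.1):
    """Symmetric InfoNCE over a batch of paired global embeddings.

    img_emb, txt_emb: (B, D) arrays; row k of both comes from the same study, so the
    matching pairs lie on the diagonal of the (B, B) similarity matrix.
    """
    logits = l2_normalize(img_emb) @ l2_normalize(txt_emb).T / temperature
    diag = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits)[diag, diag].mean()    # each image must pick its report
    loss_t2i = -log_softmax(logits.T)[diag, diag].mean()  # each report must pick its image
    return 0.5 * (loss_i2t + loss_t2i)
```

Correctly paired batches give a lower loss than shuffled ones, which is the signal that pulls matching image and report embeddings together.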

However, pathology usually occupies only a small portion of a medical image, making it difficult to represent these subtle yet crucial visual cues effectively with global representations alone. This motivates learning localized features that capture fine-grained semantics in the image, in addition to global representations. The idea of learning local representations has been explored for natural images in several contexts [7, 27, 25, 4].

(Note: the authors look to the natural-image domain for methods, but those methods are not entirely suitable for medical images.)

[7] Diao, H., Zhang, Y., Ma, L. and Lu, H., 2021. Similarity reasoning and filtration for image-text matching. arXiv preprint arXiv:2101.01368.

[27] Li, K., Zhang, Y., Li, K., Li, Y. and Fu, Y., 2019. Visual semantic reasoning for image-text matching. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 4654-4662).

[25] Lee, K.H., Chen, X., Hua, G., Hu, H. and He, X., 2018. Stacked cross attention for image-text matching. In Proceedings of the European conference on computer vision (ECCV) (pp. 201-216).

[4] Chen, H., Ding, G., Liu, X., Lin, Z., Liu, J. and Han, J., 2020. IMRAM: Iterative matching with recurrent attention memory for cross-modal image-text retrieval. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 12655-12663).

These contexts include image-text retrieval and text-to-image generation; however, such works typically require pre-trained object detection models to extract localized image features, which are not readily available for medical images.

In this work, we focus on jointly learning global and local representations for medical images using the corresponding radiology reports. Specifically, we introduce GLoRIA: a framework for learning Global-Local Representations for Images using Attention mechanism by contrasting image sub-regions and words in the paired report. Instead of relying on pretrained object detectors, we learn attention weights that emphasize significant image sub-regions for a particular word to create context-aware local image representations.
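For each word, GLoRIA learns attention weights over image sub-regions and uses them to build a word-specific ("context-aware") image feature. The numpy sketch below follows that description: dot-product scores between a word and every region, a softmax over regions, and an attention-weighted sum of region features. The sharpening factor `temp=4.0` is a hypothetical value, and both inputs are assumed to be already projected to a shared dimension D.

```python
import numpy as np

def softmax(x, axis=-1):
    """Softmax with the max subtracted first for numerical stability."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def word_context_features(words, regions, temp=4.0):
    """Attention-weighted, word-specific image representations.

    words:   (W, D) word embeddings t_i
    regions: (M, D) image sub-region features v_j
    Returns (attn, context): attn is (W, M) with rows summing to 1, and
    context[i] = sum_j attn[i, j] * regions[j], shape (W, D).
    """
    scores = temp * words @ regions.T   # (W, M) word-region dot products
    attn = softmax(scores, axis=1)      # each word spreads its attention over the regions
    context = attn @ regions            # (W, D) one image feature per word
    return attn, context
```

If one region is strongly aligned with a word, that word's attention concentrates on it, so the resulting context vector mostly describes that sub-region.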

Due to the lengthy nature of medical reports, we introduce a self-attention-based image-text joint representation learning model, which is capable of multi-sentence reasoning. Furthermore, we propose a token aggregation strategy to handle abbreviations and typos common in medical reports.

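😐 😐 😐 The model can reason across multiple sentences because its text encoder is built on self-attention. Self-attention lets the model weight every position in the input sequence while processing each token, so it can capture long-range dependencies in the text. This suits long texts such as medical reports, which carry a lot of specialized information and complex sentence structure. Note that in GLoRIA the self-attention lives inside the text encoder; the link between image and text comes from the separate word-to-region attention, not from self-attention itself.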
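To make "every position can attend to every other position" concrete, here is single-head scaled dot-product self-attention in numpy. This is a simplified sketch: BERT-style encoders such as BioClinicalBERT use multiple heads, learned projections and further layers, and the random projection matrices here are purely illustrative.

```python
import numpy as np

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    x: (N, D) token embeddings; wq, wk, wv: (D, d) projection matrices.
    Each output row mixes information from all N positions, however far apart.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])              # (N, N) pairwise relevance
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)        # row-wise softmax
    return weights @ v, weights
```

Because the weight matrix is N × N, the first token of a report receives a nonzero contribution from the last token directly, without passing through intermediate positions.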

We demonstrate the generalizability of our learned representations for data-efficient image-text retrieval, classification and segmentation. We conduct experiments and evaluate our methods on three different datasets: CheXpert [16], RSNA Pneumonia [32] and SIIM Pneumothorax. Utilizing both global and local representations for image-text retrieval is non-trivial because each image-text pair has multiple representations to combine. Therefore, we introduce a similarity aggregation strategy to leverage signals from both global and local representations for retrieval.
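One way to picture similarity aggregation: compute a global cosine similarity, compute a local score that pools the per-word similarities cos(c_i, t_i) with a log-sum-exp "smooth maximum", then mix the two and rank reports by the result. This is a sketch under assumptions: the equal 0.5/0.5 weighting, `temp=5.0` and all helper names are hypothetical, and `context` is expected to be the word-to-region attention output computed for that specific image-report pair.

```python
import numpy as np

def cosine(a, b, eps=1e-8):
    """Cosine similarity along the last axis (works for vectors and row-wise on matrices)."""
    return (a * b).sum(-1) / (np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps)

def local_score(context, words, temp=5.0):
    """Smooth maximum of per-word similarities: (1/temp) * log(sum_i exp(temp * cos(c_i, t_i)))."""
    sims = cosine(context, words)                         # (W,) one similarity per word
    m = sims.max()
    return m + np.log(np.exp(temp * (sims - m)).sum()) / temp

def aggregated_similarity(img_global, txt_global, context, words, w_global=0.5):
    """Hypothetical weighted mix of the global cosine and the local word-level score."""
    return w_global * cosine(img_global, txt_global) + (1 - w_global) * local_score(context, words)

def rank_reports(img_global, candidates):
    """candidates: list of (txt_global, context, words) per report; best match first."""
    scores = [aggregated_similarity(img_global, g, c, w) for g, c, w in candidates]
    return [int(i) for i in np.argsort(scores)[::-1]]
```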

Furthermore, our localized image representations are generated using attention weights that rely on words to provide context. Thus, to leverage localized representations for classification, we generate possible textual descriptions of the severity, sub-type and location for each medical condition category. This allows us to frame the image classification task by measuring the image-text similarity and enables zero-shot classification using the learned global-local representations. Finally, experimental results on various tasks and datasets show that our GLoRIA achieves good performance with limited labels and consistently outperforms other methods in previous works.
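The zero-shot idea can be sketched in plain Python: expand each class into sentences from severity × sub-type × location, score the image against every sentence, and pick the class with the highest aggregate score. Everything concrete here is an assumption: the prompt words, averaging over prompts, and the `similarity` callable (which stands in for the learned global-local image-text score).

```python
from itertools import product

# Hypothetical prompt vocabulary; the paper builds prompts from severity, sub-type
# and location, but these specific words are illustrative only.
CLASS_PROMPTS = {
    "Atelectasis": {
        "severity": ["", "mild", "minimal"],
        "subtype": ["subsegmental atelectasis", "linear atelectasis"],
        "location": ["at the mid lung zone", "at the left lung base"],
    },
    "Pleural Effusion": {
        "severity": ["", "small", "large"],
        "subtype": ["pleural effusion"],
        "location": ["on the right", "bilaterally"],
    },
}

def generate_prompts(spec):
    """Cartesian product of severity x sub-type x location, joined into sentences."""
    combos = product(spec["severity"], spec["subtype"], spec["location"])
    return [" ".join(part for part in combo if part) for combo in combos]

def zero_shot_classify(image, class_prompts, similarity):
    """Score each class by its mean image-text similarity over prompts; return the best class."""
    scores = {}
    for name, spec in class_prompts.items():
        prompts = generate_prompts(spec)
        scores[name] = sum(similarity(image, p) for p in prompts) / len(prompts)
    return max(scores, key=scores.get), scores
```

Framing classification as text matching is what removes the need for labeled training images: adding a class only requires writing its prompts.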

3. Method (this part feels rather padded)

We propose an attention-based framework for multimodal representation learning by contrasting image sub-regions with words in the corresponding report. Our method generates context-aware local representations of images by learning attention weights that emphasize significant image sub-regions for a particular word.

- Here we first describe in Sec 3.1 the image and text encoders we use to extract features from each modality.

- In Sec. 3.2, we formalize our multimodal global-local representation learning objective.

- Finally, in Sec. 3.3, we present strategies for utilizing both global and local representations for label-efficient and zero-shot learning in various downstream tasks.

In short: the model uses attention weights to extract both global and local features, for each of the two modalities.

Given a paired input $(x_v, x_t)$, where $x_v$ denotes an image and $x_t$ is the corresponding report, we use an image encoder $E_v$ and a text encoder $E_t$ to extract global and local features from each modality. The global features contain the semantic information that summarizes the image and report.

The local image features capture the semantics in the image sub-regions, while the local text features are word-level embeddings.

| | Local | Global |
|---|---|---|
| Vision | features of image sub-regions | summary of the whole image |
| Text | word-level embeddings | summary of the whole report |

These global and local features are used to learn multimodal representations using our framework, and encoders are trained jointly with our representation learning objective. We then apply the learned representations to downstream image recognition tasks, such as retrieval, classification and segmentation.

1. Vision encoder

To construct the image encoder $E_v$, we use the ResNet-50 architecture as the backbone to extract features from the image. The global image features $f_{global} \in \mathbb{R}^{c}$ are extracted from the final adaptive average pooling layer of the ResNet-50 model, where $c$ denotes the feature dimension. We extract the local image features from an intermediate convolution layer and vectorize them to get a $c$-dimensional feature for each of $m$ image sub-regions: $f_{local} \in \mathbb{R}^{c \times m}$.
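The global/local split comes down to two array operations: averaging the last feature map over its spatial axes, and flattening an intermediate feature map so that each spatial position becomes one column (one sub-region). A minimal numpy sketch for a single image; note that the paper writes both features as $c$-dimensional, while with ResNet-50 the raw channel counts differ (2048 vs 1024), so a projection to a shared dimension is presumably applied before comparing them. That projection is omitted here.

```python
import numpy as np

def split_global_local(final_map, intermediate_map):
    """Global and local features for one image.

    final_map:        (C_g, H_g, W_g) output of the last convolution stage
    intermediate_map: (C_l, H_l, W_l) output of an intermediate convolution stage
    Returns f_global with shape (C_g,) and f_local with shape (C_l, M), M = H_l * W_l.
    """
    f_global = final_map.mean(axis=(1, 2))                        # adaptive average pool to 1x1
    f_local = intermediate_map.reshape(intermediate_map.shape[0], -1)  # column j = sub-region j
    return f_global, f_local
```

With a 299 × 299 input, ResNet-50's last stage is 2048 × 10 × 10 and layer3 is 1024 × 19 × 19, giving $m = 361$ sub-regions.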

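```python
import torch.nn as nn
from torchvision import models as models_2d

def resnet_50(pretrained=True):
    model = models_2d.resnet50(pretrained=pretrained)  # newer torchvision prefers weights=
    feature_dims = model.fc.in_features  # 2048: size of the pooled global feature
    model.fc = nn.Identity()             # drop the 1000-way ImageNet classifier
    return model, feature_dims, 1024     # 1024: layer3 channels, used for local features
```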

```text
ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  ...
        (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
      )
    )
  (avgpool): AdaptiveAvgPool2d(output_size=(1, 1))
  (fc): Linear(in_features=2048, out_features=1000, bias=True)   <-- this fully connected layer is removed
)
```

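Here I want to stress that pooling does not simply throw information away. Mathematically and from an information-theoretic point of view, the average is an attempt to represent and summarize a whole region with a single value, loosely like ViT, where positional encodings are added directly to the linear patch embeddings so that the model sees a patch's content and its position in one vector. Pooling is still lossy, though: global average pooling keeps how strongly each channel responds but discards where in the image it responds, which is exactly why the local features are taken from a layer before pooling.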
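The "what survives, what is lost" point can be checked directly: global average pooling returns the same vector for a feature map and a spatially rearranged copy of it. A small numpy illustration with a random map (shapes chosen arbitrarily):

```python
import numpy as np

def global_avg_pool(feature_map):
    """(C, H, W) -> (C,): one mean per channel, the same result as AdaptiveAvgPool2d(1)."""
    return feature_map.mean(axis=(1, 2))

rng = np.random.default_rng(0)
fmap = rng.normal(size=(4, 5, 5))
# Move the activations around spatially: flip the map upside down and left to right.
moved = fmap[:, ::-1, ::-1]
```

`global_avg_pool(fmap)` and `global_avg_pool(moved)` are identical even though the two maps differ, so per-channel evidence is kept while its location is discarded.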

Here is how the authors separate the global and local encodings:

Recall that ResNet is structured as:

| 1 | 2 | 3 | 4 |
|---|---|---|---|
| stem convolution | 4 stages of residual blocks | avgpool | fc |

```python
import torch.nn as nn

def resnet_forward(self, x, extract_features=False):
    # Resize to a fixed input size: batch x 3 x 299 x 299
    x = nn.Upsample(size=(299, 299), mode="bilinear", align_corners=True)(x)

    x = self.model.conv1(x)    # (batch_size, 64, 150, 150)
    x = self.model.bn1(x)
    x = self.model.relu(x)
    x = self.model.maxpool(x)  # (batch_size, 64, 75, 75)

    x = self.model.layer1(x)   # (batch_size, 256, 75, 75)
    x = self.model.layer2(x)   # (batch_size, 512, 38, 38)
    x = self.model.layer3(x)   # (batch_size, 1024, 19, 19)

    # This intermediate stage has not been fully convolved yet, so it holds
    # local information: 19 x 19 = 361 sub-regions with 1024 channels each
    local_features = x

    x = self.model.layer4(x)   # (batch_size, 2048, 10, 10)
    x = self.pool(x)           # (batch_size, 2048, 1, 1); self.pool: adaptive average pooling
    x = x.view(x.size(0), -1)  # (batch_size, 2048) global features

    return x, local_features
```

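At this point the input has passed through every convolution stage and the pooling layer, so `x` holds the global information.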
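The spatial sizes in the comments above follow from the standard convolution output formula, floor((n + 2p - k) / s) + 1. A plain-Python trace, assuming torchvision's ResNet-50 layout (7×7 stride-2 stem, 3×3 stride-2 max pool, and a stride-2 first block in layer2 to layer4):

```python
def conv_out(size, kernel, stride, padding):
    """Output side length of a conv/pool layer: floor((n + 2p - k) / s) + 1 (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_spatial_sizes(size=299):
    """Trace the spatial side length through a torchvision-style ResNet-50."""
    sizes = {"input": size}
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    sizes["conv1"] = size
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    sizes["maxpool"] = size
    sizes["layer1"] = size                                 # layer1 keeps stride 1
    for name in ("layer2", "layer3", "layer4"):
        # the first block of each of these stages downsamples with a stride-2 3x3 conv
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes[name] = size
    return sizes


sizes = resnet50_spatial_sizes()
num_regions = sizes["layer3"] ** 2  # m: number of local sub-regions taken from layer3
```

For a 299 input this gives 150, 75, 75, 38, 19 and 10, so $m = 361$; for the more common 224 input, layer4 would be 7 × 7.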

The image encoder is mainly evaluated with two backbone architectures: ResNet and DenseNet.

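Compared with conventional deep architectures such as VGG and ResNet, DenseNet needs fewer parameters to reach similar or better performance. This comes from its feature-reuse mechanism: each layer only needs to learn a small set of new feature maps. DenseNet's connectivity also gives gradients a direct path from the output back to earlier layers, which helps mitigate vanishing gradients and allows deeper networks.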

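ResNet-50 has about 25,557,032 parameters, while DenseNet-121 has about 7,978,856, so ResNet-50 has more than three times as many. Even so, DenseNet's dense connectivity makes efficient use of features, so it can still perform well with far fewer parameters.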

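However, no local features appear for DenseNet in the code: `densenet_forward` is only a stub. My guess is that local-feature extraction was simply not implemented for DenseNet rather than being impossible, since an intermediate dense-block output could in principle play the same role as ResNet's `layer3`.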

```python
def densenet_forward(self, x, extract_features=False):
    # Stub in the code: no global or local features are returned for DenseNet
    pass
```

2. Text encoder

Medical reports typically consist of long paragraphs and require reasoning across multiple sentences. Therefore, we utilize a self-attention-based language model to learn long-range semantic dependencies in medical reports. In particular, we use the BioClinicalBERT [1] model, pre-trained on medical texts from the MIMIC-III dataset [19], as our text encoder $E_t$ to obtain clinically aware text embeddings. We further employ word-piece tokenization to minimize out-of-vocabulary embeddings for abbreviations and typographical errors, which are common in medical reports.

(Some text preprocessing to guard against errors: sub-word tokenization of English medical text.)
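Word-piece tokenization avoids out-of-vocabulary words by splitting an unknown word into known pieces. Below is a sketch of the greedy longest-match-first procedure that BERT-style WordPiece tokenizers use; the tiny vocabulary is hypothetical (a real BioClinicalBERT vocabulary has tens of thousands of entries), and this is not the tokenizer's actual code.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece.

    Non-initial pieces carry the '##' continuation prefix. If some position
    cannot be matched by any vocabulary piece, the whole word maps to `unk`.
    """
    if len(word) > max_chars:
        return [unk]
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while start < end:                 # try the longest remaining substring first
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary for illustration only.
VOCAB = {"pneumo", "##thorax", "##tho", "##rax", "effusion", "eff", "##usion",
         "card", "##io", "##megaly", "##s"}
```

For example, "pneumothorax" becomes `["pneumo", "##thorax"]` and the unseen plural "effusions" becomes `["effusion", "##s"]` instead of a single unknown token.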

For a medical report with $W$ words, each word is tokenized into $n_i$ sub-words.
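Since each of the $W$ words yields $n_i$ pieces, the encoder sees $N = \sum_i n_i$ tokens, and word-level features have to be rebuilt from the pieces. A numpy sketch of that token aggregation, assuming `##` marks continuation pieces and that the pieces of a word are summed (summing is an assumed choice here; averaging would be an equally simple alternative):

```python
import numpy as np

def aggregate_subwords(piece_embs, pieces):
    """Merge word-piece embeddings back into word-level embeddings.

    piece_embs: (N, D) one embedding per word-piece token
    pieces:     list of N token strings; '##' marks a continuation of the previous word
    Returns (words, word_embs) with word_embs of shape (W, D), W <= N.
    """
    words, word_embs = [], []
    for tok, emb in zip(pieces, piece_embs):
        if tok.startswith("##") and words:
            words[-1] += tok[2:]                    # glue the piece onto the current word
            word_embs[-1] = word_embs[-1] + emb     # and add its embedding
        else:
            words.append(tok)
            word_embs.append(emb.copy())
    return words, np.stack(word_embs)
```

With pieces `["small", "pneumo", "##thorax"]` this returns the words `["small", "pneumothorax"]`, i.e. $N = 3$ tokens collapse to $W = 2$ word features.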

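This article presents GLoRIA, an attention-based framework that contrasts image sub-regions with words in the paired report to learn label-efficient global and local representations for medical image analysis. Using radiology reports, GLoRIA learns global and local features that transfer to various downstream medical image recognition tasks, including image-text retrieval, classification and segmentation, and it performs well even with limited labels.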

