Azure Kinect 3深度相机开发--人体姿态估计并输出各个关节点坐标

前言

本文为我第一次配置Azure Kinect相机的流程记录,参考以下4个博客:

https://blog.csdn.net/qq_48188557/article/details/120626011

https://blog.csdn.net/qq_48188557/article/details/120632907

https://blog.csdn.net/Creama_/article/details/107238475

https://blog.csdn.net/weixin_41977337/article/details/105968187

一、VS配置Azure Kinect 3开发环境(C++)

1.下载并安装Azure Kinect3 SDK

官网Azure Kinect3 SDK下载地址打开之后点如下按钮:

选择v1.4.1打开之后傻瓜式下载,一路点ok就好。这样安装完的SDK位于c盘,如想选择放在其他地方也可以再安装时对路径做调整

2.配置VS-Azure Kinect3开发环境

2.1 新建C++空项目文件

2.2 新建Azure Kinect3 props文件

打开VS中的属性管理器,选择Debug| x64,右键,选择添加新项目属性表,将其命名为Azure Kinect,保存在自己选定的文件夹下(由于后续还有几个props文件,建议单独建立文件夹一起保存)。

如果出现没有属性管理器的情况,下图为解决方案:

打开“视图”-“其他窗口”-“属性窗口”

双击生成的Azure Kinect

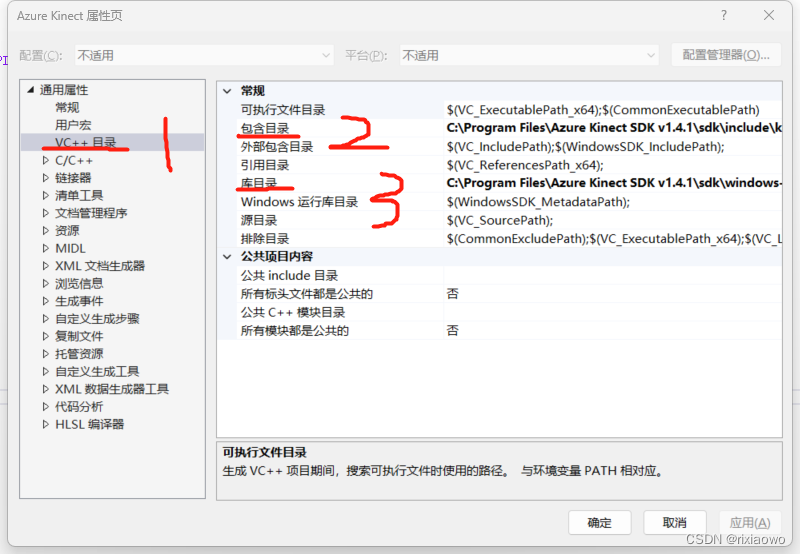

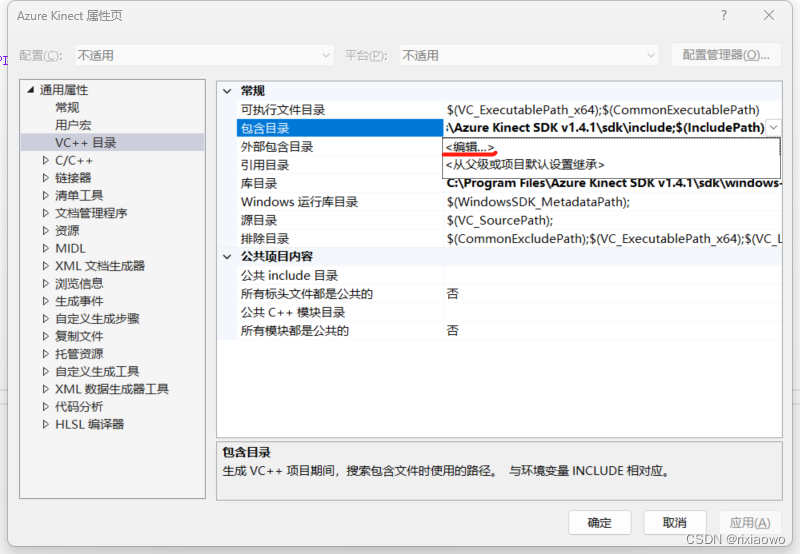

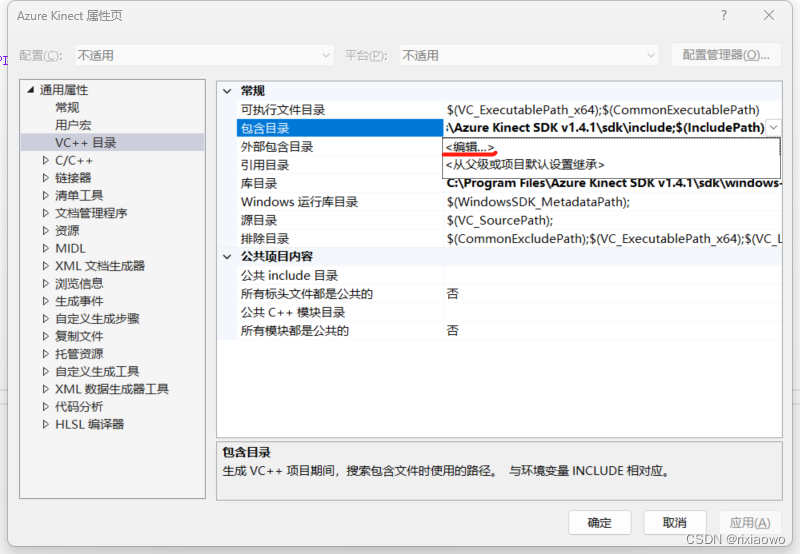

2.3 配置VC++目录

接下来打开VC++目录,在此有两步配置:

在空白区域输入:

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\include

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\include\k4a

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\include\k4arecord(如果是默认安装Azure Kinect SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

同理更改库目录,在空白区域输入:

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\windows-desktop\amd64\release\lib(如果是默认安装Azure Kinect SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

2.4 配置链接器

打开链接器-输入-附加依赖项-点击编辑

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\windows-desktop\amd64\release\lib\k4a.lib

C:\Program Files\Azure Kinect SDK v1.4.1\sdk\windows-desktop\amd64\release\lib\k4arecord.lib

(如果是默认安装Azure Kinect SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

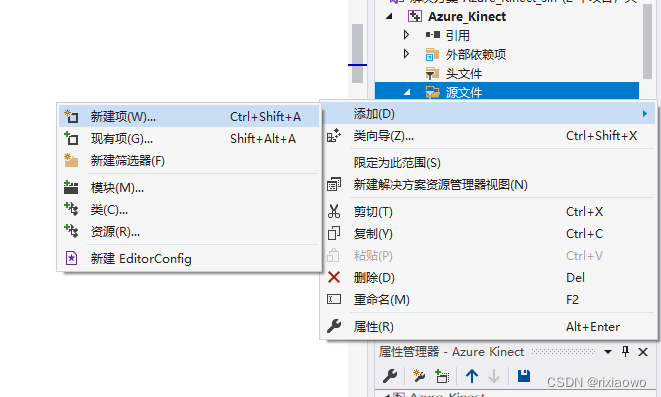

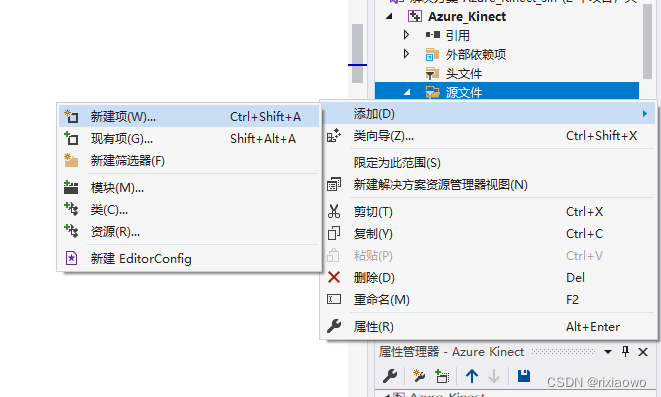

2.5 新建test文件:

#pragma comment(lib, "k4a.lib")

#include <k4a/k4a.h>

#include <stdio.h>

#include <stdlib.h>

int main()

{

uint32_t count = k4a_device_get_installed_count();

if (count == 0)

{

printf("No k4a devices attached!\n");

return 1;

}

// Open the first plugged in Kinect device

k4a_device_t device = NULL;

if (K4A_FAILED(k4a_device_open(K4A_DEVICE_DEFAULT, &device)))

{

printf("Failed to open k4a device!\n");

return 1;

}

// Get the size of the serial number

size_t serial_size = 0;

k4a_device_get_serialnum(device, NULL, &serial_size);

// Allocate memory for the serial, then acquire it

char* serial = (char*)(malloc(serial_size));

k4a_device_get_serialnum(device, serial, &serial_size);

printf("Opened device: %s\n", serial);

free(serial);

// Configure a stream of 4096x3072 BRGA color data at 15 frames per second

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

config.camera_fps = K4A_FRAMES_PER_SECOND_15;

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

config.color_resolution = K4A_COLOR_RESOLUTION_3072P;

// Start the camera with the given configuration

if (K4A_FAILED(k4a_device_start_cameras(device, &config)))

{

printf("Failed to start cameras!\n");

k4a_device_close(device);

return 1;

}

// Camera capture and application specific code would go here

// Shut down the camera when finished with application logic

k4a_device_stop_cameras(device);

k4a_device_close(device);

return 0;

}

运行之后会报错并在项目目录生成x64文件夹

3 拷贝Azure Kinect中的文件

将Azure Kinect中的文件拷贝到项目中,先找到下面这个目录:

将该目录下的所有文件选中,复制,将其拷贝到刚刚新建的项目的x64/Debug文件中

复制好文件之后重新运行test文件,在没有插相机的情况下输出

至此Azure Kinect环境配置完成。

二 Azure Kinect 3 BodyTrack环境配置

1.下载并安装Azure Kinect3 BodyTrack SDK

官网Azure Kinect3 SDK下载地址打开之后点如下按钮:

选择1.1.2-msi之后下载并傻瓜式安装,一路点ok就好。这样安装完的SDK位于c盘,如想选择放在其他地方也可以再安装时对路径做调整,因文件较大,c盘空间不足的谨慎安装于c盘

2.配置VS-Azure Kinect BodyTrack开发环境

2.1 新建C++空项目文件

2.2 新建Azure Kinect3 BodyTrack props文件

打开VS中的属性管理器,选择Debug| x64,右键,选择添加新项目属性表,将其命名为Azure Kinect BodyTrack,保存在自己选定的文件夹下(由于后续还有几个props文件,建议单独建立文件夹一起保存)。

双击生成的Azure Kinect BodyTrack

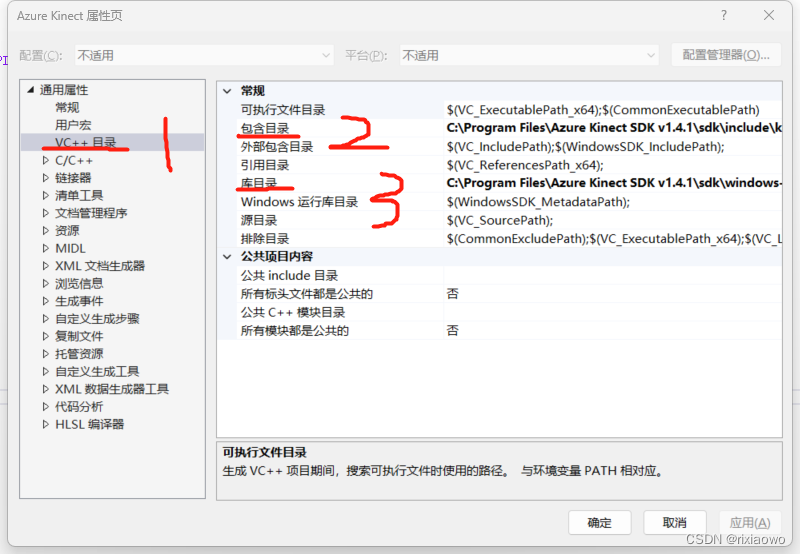

2.3 配置VC++目录

接下来打开VC++目录,在此有两步配置:

在空白区域输入:

C:\Program Files\Azure Kinect Body Tracking SDK\sdk\include

(如果是默认安装Azure Kinect BodyTrack SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

同理更改库目录:

在空白区域输入:C:\Program Files\Azure Kinect Body Tracking SDK\sdk\windows-desktop\amd64\release\lib(如果是默认安装Azure Kinect BodyTrack SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

2.4 配置链接器

打开链接器-输入-附加依赖项-点击编辑

C:\Program Files\Azure Kinect Body Tracking SDK\sdk\windows-desktop\amd64\release\lib\k4abt.lib(如果是默认安装Azure Kinect BodyTrack SDK,可以直接复制粘贴即可,改过安装路径需要视情况)

2.5 新建test文件覆盖之前的test文件做BodyTrack SDK测试:

#include <stdio.h>

#include <stdlib.h>

#include <k4a/k4a.h>

#include <k4abt.h>

#define VERIFY(result, error) \

if(result != K4A_RESULT_SUCCEEDED) \

{ \

printf("%s \n - (File: %s, Function: %s, Line: %d)\n", error, __FILE__, __FUNCTION__, __LINE__); \

exit(1); \

} \

int main()

{

k4a_device_t device = NULL;

VERIFY(k4a_device_open(0, &device), "Open K4A Device failed!");

// Start camera. Make sure depth camera is enabled.

k4a_device_configuration_t deviceConfig = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

deviceConfig.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

deviceConfig.color_resolution = K4A_COLOR_RESOLUTION_OFF;

VERIFY(k4a_device_start_cameras(device, &deviceConfig), "Start K4A cameras failed!");

k4a_calibration_t sensor_calibration;

VERIFY(k4a_device_get_calibration(device, deviceConfig.depth_mode, deviceConfig.color_resolution, &sensor_calibration),

"Get depth camera calibration failed!");

k4abt_tracker_t tracker = NULL;

k4abt_tracker_configuration_t tracker_config = K4ABT_TRACKER_CONFIG_DEFAULT;

VERIFY(k4abt_tracker_create(&sensor_calibration, tracker_config, &tracker), "Body tracker initialization failed!");

int frame_count = 0;

do

{

k4a_capture_t sensor_capture;

k4a_wait_result_t get_capture_result = k4a_device_get_capture(device, &sensor_capture, K4A_WAIT_INFINITE);

if (get_capture_result == K4A_WAIT_RESULT_SUCCEEDED)

{

frame_count++;

k4a_wait_result_t queue_capture_result = k4abt_tracker_enqueue_capture(tracker, sensor_capture, K4A_WAIT_INFINITE);

k4a_capture_release(sensor_capture); // Remember to release the sensor capture once you finish using it

if (queue_capture_result == K4A_WAIT_RESULT_TIMEOUT)

{

// It should never hit timeout when K4A_WAIT_INFINITE is set.

printf("Error! Add capture to tracker process queue timeout!\n");

break;

}

else if (queue_capture_result == K4A_WAIT_RESULT_FAILED)

{

printf("Error! Add capture to tracker process queue failed!\n");

break;

}

k4abt_frame_t body_frame = NULL;

k4a_wait_result_t pop_frame_result = k4abt_tracker_pop_result(tracker, &body_frame, K4A_WAIT_INFINITE);

if (pop_frame_result == K4A_WAIT_RESULT_SUCCEEDED)

{

// Successfully popped the body tracking result. Start your processing

size_t num_bodies = k4abt_frame_get_num_bodies(body_frame);

printf("%zu bodies are detected!\n", num_bodies);

k4abt_frame_release(body_frame); // Remember to release the body frame once you finish using it

}

else if (pop_frame_result == K4A_WAIT_RESULT_TIMEOUT)

{

// It should never hit timeout when K4A_WAIT_INFINITE is set.

printf("Error! Pop body frame result timeout!\n");

break;

}

else

{

printf("Pop body frame result failed!\n");

break;

}

}

else if (get_capture_result == K4A_WAIT_RESULT_TIMEOUT)

{

// It should never hit time out when K4A_WAIT_INFINITE is set.

printf("Error! Get depth frame time out!\n");

break;

}

else

{

printf("Get depth capture returned error: %d\n", get_capture_result);

break;

}

} while (frame_count < 100);

printf("Finished body tracking processing!\n");

k4abt_tracker_shutdown(tracker);

k4abt_tracker_destroy(tracker);

k4a_device_stop_cameras(device);

k4a_device_close(device);

return 0;

}

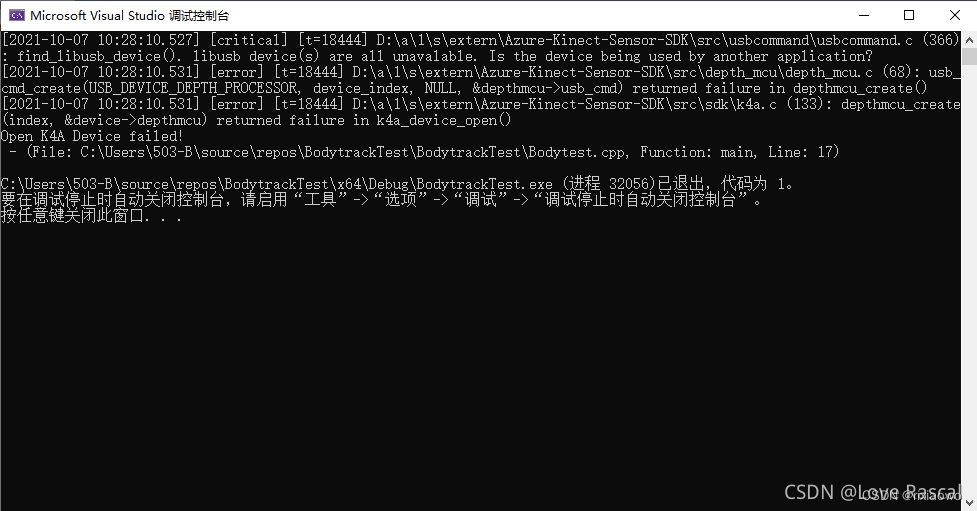

运行之后会报错并在项目目录生成x64文件夹

3 拷贝Azure Kinect BodyTrack中的文件

将Azure Kinect中的拷贝到项目中,先找到下面这个目录:

将该目录下的所有文件选中,复制,将其拷贝到刚刚新建的项目的x64/Debug文件中

复制完文件之后重新运行test文件,没插相机时输出

至此Azure Kinect BodyTrack环境配置完成。

三 OpenCV环境配置

1 OpenCV下载安装

下载之后解压到自己选择的目录,方便下文理解,我附上我的路径:D:\rixiaowo\opencv3.4.14\opencv

解压完打开文件

2 配置环境变量

这是win10的流程,因为我的电脑是win11所以此图为转载

win11环境变量在这个位置,其余与win10相同

3配置OpenCV props文件:

3.1新建OpenCV props文件:

流程如前两章所说,新建部分就不赘述了

3.2配置VC++目录包含目录、库目录和链接器:

流程也在前两章详细介绍过,因为OpenCV的路径大家都不太一样,本文贴上自己的路径

包含目录

库目录:

链接器

配置完成即可

4可能会出现的问题:opencv 报错“找不到opencv_world341d.dll ”的解决方法

转载于:https://blog.csdn.net/weixin_39354845/article/details/128461866

找到opencv的这个目录下面D:\rixiaowo\opencv3.4.14\opencv\build\x64\vc15\bin,找到

将其拷贝到C:\Windows\System32的目录下。

四 项目代码

1 完成内容介绍

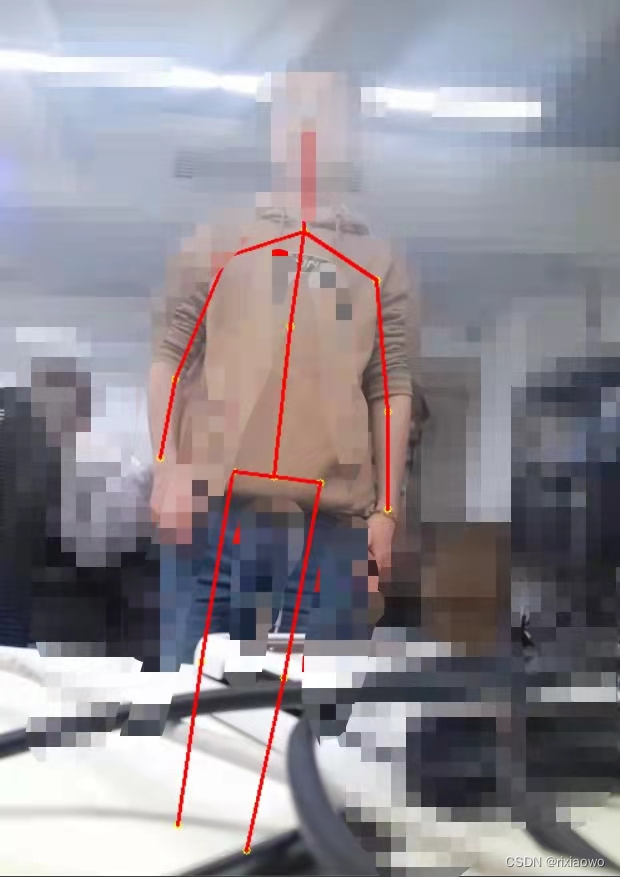

主要通过深度相机完成了对姿态点的实时估计,在可视化界面将估计的骨架点实时绘出,并同时计算各个关节的二维角度。

同时将各个关节点的二维信息和深度以及时间和关节角度保存至表格文件中。

2 代码

#define _USE_MATH_DEFINES

#include <stdio.h>

#include <stdlib.h>

#include <iostream>

#include <k4a/k4a.h>

#include <k4abt.h>

#include <math.h>

#include <fstream>

#include <chrono>

#include <thread>

#include <time.h>

#include <cmath>

using namespace std;

#pragma warning(disable:4996)

// OpenCV

#include <opencv2/opencv.hpp>

// Kinect DK

#include <k4a.hpp>

#define VERIFY(result, error) \

if(result != K4A_RESULT_SUCCEEDED) \

{ \

printf("%s \n - (File: %s, Function: %s, Line: %d)\n", error, __FILE__, __FUNCTION__, __LINE__); \

exit(1); \

} \

double get_angle(double x1, double y1, double x2, double y2, double x3, double y3)

{

double theta = atan2(x1 - x3, y1 - y3) - atan2(x2 - x3, y2 - y3);

if (theta > M_PI)

theta -= 2 * M_PI;

if (theta < -M_PI)

theta += 2 * M_PI;

theta = abs(theta * 180.0 / M_PI);

return theta;

}

double get_line_equation(double x1, double y1, double x2, double y2)

{

double k, b;

k = (y2 - y1) / (x2 - x1);

b = y1 - k * x1;

return k;

}

// 计算两条直线的夹角(弧度制)

double get_lines_angle(double k1, double k2)

{

double angle = atan(abs((k2 - k1) / (1 + k1 * k2))) * 180.0 / M_PI;

return angle;

}

string Time2Str()

{

time_t tm;

time(&tm); //获取time_t类型的当前时间

char tmp[64];

strftime(tmp, sizeof(tmp), "%Y-%m-%d %H:%M:%S", localtime(&tm));

return tmp;

}

const char* filename = "E:/bdca_project/data.csv";

int main()

{

//定义身高

k4a_device_t device = NULL;

VERIFY(k4a_device_open(0, &device), "Open K4A Device failed!");

const uint32_t device_count = k4a_device_get_installed_count();

if (1 == device_count)

{

std::cout << "Found " << device_count << " connected devices. " << std::endl;

}

else

{

std::cout << "Error: more than one K4A devices found. " << std::endl;

}

//打开设备

k4a_device_open(0, &device);

std::cout << "Done: open device. " << std::endl;

// Start camera. Make sure depth camera is enabled.

k4a_device_configuration_t deviceConfig = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

deviceConfig.depth_mode = K4A_DEPTH_MODE_NFOV_2X2BINNED;

deviceConfig.color_resolution = K4A_COLOR_RESOLUTION_720P;

deviceConfig.camera_fps = K4A_FRAMES_PER_SECOND_30;

deviceConfig.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

deviceConfig.synchronized_images_only = true;// ensures that depth and color images are both available in the capture

//开始相机

//k4a_device_start_cameras(device, &deviceConfig);

VERIFY(k4a_device_start_cameras(device, &deviceConfig), "Start K4A cameras failed!");

std::cout << "Done: start camera." << std::endl;

//查询传感器校准

k4a_calibration_t sensor_calibration;

k4a_device_get_calibration(device, deviceConfig.depth_mode, deviceConfig.color_resolution, &sensor_calibration);

VERIFY(k4a_device_get_calibration(device, deviceConfig.depth_mode, deviceConfig.color_resolution, &sensor_calibration),

"Get depth camera calibration failed!");

//创建人体跟踪器

k4abt_tracker_t tracker = NULL;

k4abt_tracker_configuration_t tracker_config = K4ABT_TRACKER_CONFIG_DEFAULT;

k4abt_tracker_create(&sensor_calibration, tracker_config, &tracker);

VERIFY(k4abt_tracker_create(&sensor_calibration, tracker_config, &tracker), "Body tracker initialization failed!");

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

std::ofstream outfile(filename, std::ios::app);

std::cout << outfile.is_open();

cv::VideoWriter writer;

std::string output_filename = "E:/bdca_project/output.avi";

int codec = cv::VideoWriter::fourcc('M', 'J', 'P', 'G');

int fps = 15;

cv::Size frame_size(1280, 720);

int frame_count = 0;

writer.open(output_filename, codec, fps, frame_size);

if (!writer.isOpened())

{

std::cerr << "Failed to open output file: " << output_filename << std::endl;

return -1;

}

while (true)

{

k4a_capture_t sensor_capture;

k4a_wait_result_t get_capture_result = k4a_device_get_capture(device, &sensor_capture, K4A_WAIT_INFINITE);

k4a_wait_result_t queue_capture_result = k4abt_tracker_enqueue_capture(tracker, sensor_capture, K4A_WAIT_INFINITE);

//获取RGB和depth图像

k4a_image_t rgbImage = k4a_capture_get_color_image(sensor_capture);

k4a_image_t depthImage = k4a_capture_get_depth_image(sensor_capture);

//RGB

cv_rgbImage_with_alpha = cv::Mat(k4a_image_get_height_pixels(rgbImage), k4a_image_get_width_pixels(rgbImage), CV_8UC4, k4a_image_get_buffer(rgbImage));

cout << k4a_image_get_height_pixels(rgbImage) << k4a_image_get_width_pixels(rgbImage) << endl;

cvtColor(cv_rgbImage_with_alpha, cv_rgbImage_no_alpha, cv::COLOR_BGRA2BGR);

//depth

cv_depth = cv::Mat(k4a_image_get_height_pixels(depthImage), k4a_image_get_width_pixels(depthImage), CV_16U, k4a_image_get_buffer(depthImage), k4a_image_get_stride_bytes(depthImage));

cv_depth.convertTo(cv_depth_8U, CV_8U, 1);

k4a_capture_release(sensor_capture); // Remember to release the sensor capture once you finish using it

if (queue_capture_result == K4A_WAIT_RESULT_TIMEOUT)

{

// It should never hit timeout when K4A_WAIT_INFINITE is set.

printf("Error! Add capture to tracker process queue timeout!\n");

}

else if (queue_capture_result == K4A_WAIT_RESULT_FAILED)

{

printf("Error! Add capture to tracker process queue failed!\n");

}

writer.write(cv_rgbImage_no_alpha);

//弹出结果

k4abt_frame_t body_frame = NULL;

k4a_wait_result_t pop_frame_result = k4abt_tracker_pop_result(tracker, &body_frame, K4A_WAIT_INFINITE);

if (pop_frame_result == K4A_WAIT_RESULT_SUCCEEDED)

{

// Successfully popped the body tracking result. Start your processing

//检测人体数

size_t num_bodies = k4abt_frame_get_num_bodies(body_frame);

for (size_t i = 0; i < num_bodies; i++)

{

//获取人体框架

k4abt_skeleton_t skeleton;

k4abt_frame_get_body_skeleton(body_frame, i, &skeleton);

k4a_float2_t P_HEAD_2D;

k4a_float2_t P_NECK_2D;

k4a_float2_t P_CHEST_2D;

k4a_float2_t P_HIP_2D;

k4a_float2_t P_SHOULDER_RIGHT_2D;

k4a_float2_t P_SHOULDER_LEFT_2D;

k4a_float2_t P_HIP_RIGHT_2D;

k4a_float2_t P_HIP_LEFT_2D;

k4a_float2_t P_KNEE_LEFT_2D;

k4a_float2_t P_KNEE_RIGHT_2D;

k4a_float2_t P_ANKLE_RIGHT_2D;

k4a_float2_t P_ANKLE_LEFT_2D;

k4a_float2_t P_ELBOW_RIGHT_2D;

k4a_float2_t P_ELBOW_LEFT_2D;

k4a_float2_t P_WRIST_RIGHT_2D;

k4a_float2_t P_WRIST_LEFT_2D;

int result;

//头部

k4abt_joint_t P_NOSE = skeleton.joints[K4ABT_JOINT_NOSE];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_NOSE.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_HEAD_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_HEAD_2D.xy.x, P_HEAD_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//颈部

k4abt_joint_t P_NECK = skeleton.joints[K4ABT_JOINT_NECK];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_NECK.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_NECK_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_NECK_2D.xy.x, P_NECK_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//胸部

k4abt_joint_t P_CHEST = skeleton.joints[K4ABT_JOINT_SPINE_CHEST];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_CHEST.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_CHEST_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_CHEST_2D.xy.x, P_CHEST_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//髋部

k4abt_joint_t P_HIP = skeleton.joints[K4ABT_JOINT_PELVIS];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_HIP.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_HIP_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_HIP_2D.xy.x, P_HIP_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右肩

k4abt_joint_t P_SHOULDER_RIGHT = skeleton.joints[K4ABT_JOINT_SHOULDER_RIGHT];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_SHOULDER_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_SHOULDER_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右髋

k4abt_joint_t P_HIP_RIGHT = skeleton.joints[K4ABT_JOINT_HIP_RIGHT];

//3D转2D,并在color中画出,并画出右肩到右髋的连线

k4a_calibration_3d_to_2d(&sensor_calibration, &P_HIP_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_HIP_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_HIP_RIGHT_2D.xy.x, P_HIP_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右膝

k4abt_joint_t P_KNEE_RIGHT = skeleton.joints[K4ABT_JOINT_KNEE_RIGHT];

//3D转2D,并在color中画出,并画出右肩到右膝、右髋到右膝的连线

k4a_calibration_3d_to_2d(&sensor_calibration, &P_KNEE_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_KNEE_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_KNEE_RIGHT_2D.xy.x, P_KNEE_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左肩

k4abt_joint_t P_SHOULDER_LEFT = skeleton.joints[K4ABT_JOINT_SHOULDER_LEFT];

//3D转2D,并在color中画出

k4a_calibration_3d_to_2d(&sensor_calibration, &P_SHOULDER_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_SHOULDER_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_SHOULDER_LEFT_2D.xy.x, P_SHOULDER_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左髋

k4abt_joint_t P_HIP_LEFT = skeleton.joints[K4ABT_JOINT_HIP_LEFT];

//3D转2D,并在color中画出,并画出左肩到左髋的连线

k4a_calibration_3d_to_2d(&sensor_calibration, &P_HIP_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_HIP_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_HIP_LEFT_2D.xy.x, P_HIP_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左膝

k4abt_joint_t P_KNEE_LEFT = skeleton.joints[K4ABT_JOINT_KNEE_LEFT];

//3D转2D,并在color中画出,并画出左肩到左膝、左髋到左膝的连线

k4a_calibration_3d_to_2d(&sensor_calibration, &P_KNEE_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_KNEE_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_KNEE_LEFT_2D.xy.x, P_KNEE_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左脚腕

k4abt_joint_t P_ANKLE_LEFT = skeleton.joints[K4ABT_JOINT_ANKLE_LEFT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_ANKLE_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_ANKLE_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_ANKLE_LEFT_2D.xy.x, P_ANKLE_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右脚腕

k4abt_joint_t P_ANKLE_RIGHT = skeleton.joints[K4ABT_JOINT_ANKLE_RIGHT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_ANKLE_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_ANKLE_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_ANKLE_RIGHT_2D.xy.x, P_ANKLE_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左手腕

k4abt_joint_t P_WRIST_LEFT = skeleton.joints[K4ABT_JOINT_WRIST_LEFT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_WRIST_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_WRIST_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_WRIST_LEFT_2D.xy.x, P_WRIST_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右手腕

k4abt_joint_t P_WRIST_RIGHT = skeleton.joints[K4ABT_JOINT_WRIST_RIGHT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_WRIST_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_WRIST_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_WRIST_RIGHT_2D.xy.x, P_WRIST_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//左手肘

k4abt_joint_t P_ELBOW_LEFT = skeleton.joints[K4ABT_JOINT_ELBOW_LEFT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_ELBOW_LEFT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_ELBOW_LEFT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_ELBOW_LEFT_2D.xy.x, P_ELBOW_LEFT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//右手肘

k4abt_joint_t P_ELBOW_RIGHT = skeleton.joints[K4ABT_JOINT_ELBOW_RIGHT];

k4a_calibration_3d_to_2d(&sensor_calibration, &P_ELBOW_RIGHT.position, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_COLOR, &P_ELBOW_RIGHT_2D, &result);

cv::circle(cv_rgbImage_no_alpha, cv::Point(P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y), 3, cv::Scalar(0, 255, 255));

//关节点连线

cv::line(cv_rgbImage_no_alpha, cv::Point(P_HEAD_2D.xy.x, P_HEAD_2D.xy.y), cv::Point(P_NECK_2D.xy.x, P_NECK_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_NECK_2D.xy.x, P_NECK_2D.xy.y), cv::Point(P_SHOULDER_LEFT_2D.xy.x, P_SHOULDER_LEFT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_NECK_2D.xy.x, P_NECK_2D.xy.y), cv::Point(P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y), cv::Point(P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y), cv::Point(P_WRIST_RIGHT_2D.xy.x, P_WRIST_RIGHT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_WRIST_LEFT_2D.xy.x, P_WRIST_LEFT_2D.xy.y), cv::Point(P_ELBOW_LEFT_2D.xy.x, P_ELBOW_LEFT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_ELBOW_LEFT_2D.xy.x, P_ELBOW_LEFT_2D.xy.y), cv::Point(P_SHOULDER_LEFT_2D.xy.x, P_SHOULDER_LEFT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_CHEST_2D.xy.x, P_CHEST_2D.xy.y), cv::Point(P_NECK_2D.xy.x, P_NECK_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_CHEST_2D.xy.x, P_CHEST_2D.xy.y), cv::Point(P_HIP_2D.xy.x, P_HIP_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_HIP_LEFT_2D.xy.x, P_HIP_LEFT_2D.xy.y), cv::Point(P_HIP_2D.xy.x, P_HIP_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_HIP_RIGHT_2D.xy.x, P_HIP_RIGHT_2D.xy.y), cv::Point(P_HIP_2D.xy.x, P_HIP_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_HIP_RIGHT_2D.xy.x, P_HIP_RIGHT_2D.xy.y), cv::Point(P_KNEE_RIGHT_2D.xy.x, P_KNEE_RIGHT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_HIP_LEFT_2D.xy.x, P_HIP_LEFT_2D.xy.y), cv::Point(P_KNEE_LEFT_2D.xy.x, P_KNEE_LEFT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_ANKLE_RIGHT_2D.xy.x, P_ANKLE_RIGHT_2D.xy.y), cv::Point(P_KNEE_RIGHT_2D.xy.x, P_KNEE_RIGHT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

cv::line(cv_rgbImage_no_alpha, cv::Point(P_ANKLE_LEFT_2D.xy.x, P_ANKLE_LEFT_2D.xy.y), cv::Point(P_KNEE_LEFT_2D.xy.x, P_KNEE_LEFT_2D.xy.y), cv::Scalar(0, 0, 255), 2);

string time = Time2Str();

outfile << time.c_str() << ",";

//输出显示,关键点坐标(skeleton.joints_HEAD->position.v为头部坐标点,数据结构float[3])

std::cout << "鼻子坐标:";

std::cout << "Joint " << i << ": (" << P_NOSE.position.v[0] << ", "

<< P_NOSE.position.v[1] << ", "

<< P_NOSE.position.v[2] << ")" << std::endl;

outfile << P_NOSE.position.v[0] << ", "

<< P_NOSE.position.v[1] << ", "

<< P_NOSE.position.v[2] << ",";

std::cout << "颈部坐标:";

std::cout << "Joint " << i << ": (" << P_NECK.position.v[0] << ", "

<< P_NECK.position.v[1] << ", "

<< P_NECK.position.v[2] << ")" << std::endl;

outfile << P_NECK.position.v[0] << ", "

<< P_NECK.position.v[1] << ", "

<< P_NECK.position.v[2] << ",";

std::cout << "胸部坐标:";

std::cout << "Joint " << i << ": (" << P_CHEST.position.v[0] << ", "

<< P_CHEST.position.v[1] << ", "

<< P_CHEST.position.v[2] << ")" << std::endl;

outfile<< P_CHEST.position.v[0] << ", "

<< P_CHEST.position.v[1] << ", "

<< P_CHEST.position.v[2] << ",";

std::cout << "髋部坐标:";

std::cout << "Joint " << i << ": (" << P_HIP.position.v[0] << ", "

<< P_HIP.position.v[1] << ", "

<< P_HIP.position.v[2] << ")" << std::endl;

outfile << P_HIP.position.v[0] << ", "

<< P_HIP.position.v[1] << ", "

<< P_HIP.position.v[2] << ",";

std::cout << "左髋坐标:";

std::cout << "Joint " << i << ": (" << P_HIP_LEFT.position.v[0] << ", "

<< P_HIP_LEFT.position.v[1] << ", "

<< P_HIP_LEFT.position.v[2] << ")" << std::endl;

outfile << P_HIP_LEFT.position.v[0] << ", "

<< P_HIP_LEFT.position.v[1] << ", "

<< P_HIP_LEFT.position.v[2] << ",";

std::cout << "右髋坐标:";

std::cout << "Joint " << i << ": (" << P_HIP_RIGHT.position.v[0] << ", "

<< P_HIP_RIGHT.position.v[1] << ", "

<< P_HIP_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_HIP_LEFT.position.v[0] << ", "

<< P_HIP_LEFT.position.v[1] << ", "

<< P_HIP_LEFT.position.v[2] << ",";

std::cout << "左膝坐标:";

std::cout << "Joint " << i << ": (" << P_KNEE_LEFT.position.v[0] << ", "

<< P_KNEE_LEFT.position.v[1] << ", "

<< P_KNEE_LEFT.position.v[2] << ")" << std::endl;

outfile << P_KNEE_LEFT.position.v[0] << ", "

<< P_KNEE_LEFT.position.v[1] << ", "

<< P_KNEE_LEFT.position.v[2] << ",";

std::cout << "右膝坐标:";

std::cout << "Joint " << i << ": (" << P_KNEE_RIGHT.position.v[0] << ", "

<< P_KNEE_RIGHT.position.v[1] << ", "

<< P_KNEE_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_KNEE_RIGHT.position.v[0] << ", "

<< P_KNEE_RIGHT.position.v[1] << ", "

<< P_KNEE_RIGHT.position.v[2] << ",";

std::cout << "左腕坐标:";

std::cout << "Joint " << i << ": (" << P_WRIST_LEFT.position.v[0] << ", "

<< P_WRIST_LEFT.position.v[1] << ", "

<< P_WRIST_LEFT.position.v[2] << ")" << std::endl;

outfile << P_WRIST_LEFT.position.v[0] << ", "

<< P_WRIST_LEFT.position.v[1] << ", "

<< P_WRIST_LEFT.position.v[2] << ",";

std::cout << "右腕坐标:";

std::cout << "Joint " << i << ": (" << P_WRIST_RIGHT.position.v[0] << ", "

<< P_WRIST_RIGHT.position.v[1] << ", "

<< P_WRIST_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_WRIST_RIGHT.position.v[0] << ", "

<< P_WRIST_RIGHT.position.v[1] << ", "

<< P_WRIST_RIGHT.position.v[2] << ",";

std::cout << "左肘坐标:";

std::cout << "Joint " << i << ": (" << P_ELBOW_LEFT.position.v[0] << ", "

<< P_ELBOW_LEFT.position.v[1] << ", "

<< P_ELBOW_LEFT.position.v[2] << ")" << std::endl;

outfile << P_ELBOW_LEFT.position.v[0] << ", "

<< P_ELBOW_LEFT.position.v[1] << ", "

<< P_ELBOW_LEFT.position.v[2] << ",";

std::cout << "右肘坐标:";

std::cout << "Joint " << i << ": (" << P_ELBOW_RIGHT.position.v[0] << ", "

<< P_ELBOW_RIGHT.position.v[1] << ", "

<< P_ELBOW_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_ELBOW_RIGHT.position.v[0] << ", "

<< P_ELBOW_RIGHT.position.v[1] << ", "

<< P_ELBOW_RIGHT.position.v[2] << ",";

std::cout << "左脚腕坐标:";

std::cout << "Joint " << i << ": (" << P_ANKLE_LEFT.position.v[0] << ", "

<< P_ANKLE_LEFT.position.v[1] << ", "

<< P_ANKLE_LEFT.position.v[2] << ")" << std::endl;

outfile << "(" << P_ANKLE_LEFT.position.v[0] << ", "

<< P_ANKLE_LEFT.position.v[1] << ", "

<< P_ANKLE_LEFT.position.v[2] << ",";

std::cout << "右脚腕坐标:";

std::cout << "Joint " << i << ": (" << P_ANKLE_RIGHT.position.v[0] << ", "

<< P_ANKLE_RIGHT.position.v[1] << ", "

<< P_ANKLE_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_ANKLE_LEFT.position.v[0] << ", "

<< P_ANKLE_LEFT.position.v[1] << ", "

<< P_ANKLE_LEFT.position.v[2] << ",";

std::cout << "右肩坐标:";

std::cout << "Joint " << i << ": (" << P_SHOULDER_RIGHT.position.v[0] << ", "

<< P_SHOULDER_RIGHT.position.v[1] << ", "

<< P_SHOULDER_RIGHT.position.v[2] << ")" << std::endl;

outfile << P_SHOULDER_RIGHT.position.v[0] << ", "

<< P_SHOULDER_RIGHT.position.v[1] << ", "

<< P_SHOULDER_RIGHT.position.v[2] << ",";

std::cout << "左肩坐标:";

std::cout << "Joint " << i << ": (" << P_SHOULDER_LEFT.position.v[0] << ", "

<< P_SHOULDER_LEFT.position.v[1] << ", "

<< P_SHOULDER_LEFT.position.v[2] << ")" << std::endl;

outfile << P_SHOULDER_RIGHT.position.v[0] << ", "

<< P_SHOULDER_RIGHT.position.v[1] << ", "

<< P_SHOULDER_RIGHT.position.v[2] << ",";

// 左肘, 右肘角度

double left_elbow_angle = get_angle(P_WRIST_LEFT_2D.xy.x, P_WRIST_LEFT_2D.xy.y, P_ELBOW_LEFT_2D.xy.x, P_ELBOW_LEFT_2D.xy.y, P_SHOULDER_LEFT_2D.xy.x, P_SHOULDER_LEFT_2D.xy.y);

double right_elbow_angle = get_angle(P_WRIST_RIGHT_2D.xy.x, P_WRIST_RIGHT_2D.xy.y, P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y, P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y);

//左膝,右膝角度

double left_knee_angle = get_angle(P_HIP_LEFT_2D.xy.x, P_HIP_LEFT_2D.xy.y, P_KNEE_LEFT_2D.xy.x, P_KNEE_LEFT_2D.xy.y, P_ANKLE_LEFT_2D.xy.x, P_ANKLE_LEFT_2D.xy.y);

double right_knee_angle = get_angle(P_HIP_RIGHT_2D.xy.x, P_HIP_RIGHT_2D.xy.y, P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y, P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y);

//左肩,右肩角度

double k1 = get_line_equation(P_NECK_2D.xy.x, P_NECK_2D.xy.y, P_CHEST_2D.xy.x, P_CHEST_2D.xy.y);

double k2 = get_line_equation(P_SHOULDER_LEFT_2D.xy.x, P_SHOULDER_LEFT_2D.xy.y, P_ELBOW_LEFT_2D.xy.x, P_ELBOW_LEFT_2D.xy.y);

double k3 = get_line_equation(P_SHOULDER_RIGHT_2D.xy.x, P_SHOULDER_RIGHT_2D.xy.y, P_ELBOW_RIGHT_2D.xy.x, P_ELBOW_RIGHT_2D.xy.y);

double left_shoulder_angle = get_lines_angle(k1, k2);

double right_shoulder_angle = get_lines_angle(k1, k3);

outfile << left_elbow_angle << "," << right_elbow_angle << "," << left_knee_angle << "," << right_knee_angle << ","

<< left_shoulder_angle << "," << right_shoulder_angle << endl;

}

}

//std::this_thread::sleep_for(std::chrono::seconds(5));

// show image

imshow("color", cv_rgbImage_no_alpha);

//imshow("depth", cv_depth_8U);

cv::waitKey(1);

k4a_image_release(rgbImage);

}

writer.release();

outfile.flush();

outfile.close();

printf("Finished body tracking processing!\n");

k4abt_tracker_shutdown(tracker);

k4abt_tracker_destroy(tracker);

k4a_device_stop_cameras(device);

k4a_device_close(device);

return 0;

}

3 项目完成之后的一些效果展示

1492

1492

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?