PyTorch Example: Dataset and DataLoader

Version information

- PyTorch: 1.12.1
- Python: 3.7.13

Imports

import torch

from torch.utils.data import TensorDataset

from torch.utils.data import DataLoader

import pandas as pd

Raw data

train_path = "./data/kaggle_house_pred_train.csv"

train_data = pd.read_csv(train_path)

train_data[:10]

Building the Dataset

class HouseDataset(TensorDataset):
    def __init__(self, file_path):
        data = pd.read_csv(file_path)
        self.len = data.shape[0]
        # Skip the first column; the last column is used as the label
        features = self.__prehandle_X(data.iloc[:, 1:-1])
        labels = data.iloc[:, [-1]]
        self.X_data = torch.tensor(features.values, dtype=torch.float32)
        self.y_data = torch.tensor(labels.values, dtype=torch.float32)

    def __getitem__(self, index):
        return self.X_data[index], self.y_data[index]

    def __len__(self):
        return self.len

    def __prehandle_X(self, origin_features):
        """
        Preprocess the feature data
        """
        features = origin_features.drop(labels=[
            'BsmtFinSF2', 'MasVnrArea', '2ndFlrSF', 'LowQualFinSF', 'BsmtFullBath',
            'BsmtHalfBath', 'HalfBath', 'WoodDeckSF', 'EnclosedPorch', '3SsnPorch',
            'ScreenPorch', 'PoolArea', 'MiscVal'
        ], axis=1)
        # Standardize the numeric columns, then fill missing values with 0
        numeric_features = features.dtypes[features.dtypes != 'object'].index
        features[numeric_features] = features[numeric_features].apply(
            lambda x: (x - x.mean()) / (x.std())
        ).fillna(0)
        # One-hot encode the categorical columns; dummy_na=True adds a column for missing values.
        # dtype=float keeps the frame numeric on newer pandas, where dummy columns default to bool.
        features = pd.get_dummies(features, dummy_na=True, dtype=float)
        return features

    def get_X_shape1(self):
        # Number of feature columns after preprocessing
        return self.X_data.shape[1]
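To make the preprocessing step easier to follow, here is a minimal sketch of the same two operations (standardization followed by one-hot encoding) on a toy frame. The column values are made up for illustration and are not taken from the Kaggle file, and `select_dtypes` is used here as an assumed, equivalent way to pick the numeric columns.

```python
import pandas as pd

# Hypothetical toy data: one numeric column and one categorical column,
# each with a missing value.
toy = pd.DataFrame({
    "LotArea": [100.0, 200.0, None, 300.0],
    "Street": ["Pave", "Grvl", None, "Pave"],
})

# Standardize numeric columns: subtract the mean, divide by the standard deviation.
numeric_cols = toy.select_dtypes(include="number").columns
toy[numeric_cols] = toy[numeric_cols].apply(
    lambda x: (x - x.mean()) / x.std()
).fillna(0)  # after standardization, 0 corresponds to the column mean

# One-hot encode the remaining columns; dummy_na=True adds an indicator
# column for missing values.
encoded = pd.get_dummies(toy, dummy_na=True, dtype=float)
print(encoded)
```

Each categorical column is expanded into one indicator column per category plus one for missing values, which is why the feature count after preprocessing is read from the tensor (via `get_X_shape1`) rather than from the CSV header.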

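- Writing your own dataset class (here a subclass of TensorDataset; the base class `torch.utils.data.Dataset` is the more usual parent for this) means that, with a large amount of data, you do not have to load everything at once: a record is loaded only when `__getitem__` is called.
- The HouseDataset example above still loads all the data up front (sticking to a single pattern makes it easier to remember).
- If you load all the data up front anyway, there is also a simpler way to write it, as follows: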

# prehandle_X stands for the same preprocessing as HouseDataset.__prehandle_X,
# written as a standalone function
features = prehandle_X(train_data.iloc[:, 1:-1])
train_features = torch.tensor(features.values, dtype=torch.float32)
train_labels = torch.tensor(train_data.iloc[:, [-1]].values, dtype=torch.float32)
dataset = TensorDataset(train_features, train_labels)
# batch_size is set in the training section
train_dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)
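Returning to the first point in the list, lazy loading could look roughly like the sketch below. It is an illustration under stated assumptions, not the approach used in this post: `LazyCsvDataset` is a hypothetical class, the CSV is assumed to contain only numeric columns with the label last, and the demo file is generated on the fly.

```python
import os
import tempfile

import pandas as pd
import torch
from torch.utils.data import DataLoader, Dataset


class LazyCsvDataset(Dataset):
    """Hypothetical dataset that reads a single CSV row per __getitem__ call."""

    def __init__(self, file_path):
        self.file_path = file_path
        # Count the data rows once (total lines minus the header) without storing them.
        with open(file_path) as f:
            self.len = sum(1 for _ in f) - 1

    def __len__(self):
        return self.len

    def __getitem__(self, index):
        # Keep the header (line 0), skip the first `index` data rows, read one row.
        row = pd.read_csv(self.file_path, skiprows=range(1, index + 1), nrows=1)
        values = torch.tensor(row.values[0], dtype=torch.float32)
        return values[:-1], values[-1:]  # features, label


# Small demo file with made-up numbers.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.csv")
pd.DataFrame({
    "x1": [1.0, 2.0, 3.0],
    "x2": [4.0, 5.0, 6.0],
    "y": [10.0, 20.0, 30.0],
}).to_csv(demo_path, index=False)

lazy_dataset = LazyCsvDataset(demo_path)
loader = DataLoader(lazy_dataset, batch_size=2, shuffle=False)
for X_batch, y_batch in loader:
    print(X_batch.shape, y_batch.shape)
```

Re-reading the file on every access is slow, so this only pays off when the data does not fit in memory. Note also that preprocessing such as standardization needs statistics over the whole dataset, so in a lazy setup those would have to be computed ahead of time.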

Building the model

class MyModel(torch.nn.Module):
    def __init__(self, in_features):
        super(MyModel, self).__init__()
        self.linear1 = torch.nn.Linear(in_features, 128)
        self.linear2 = torch.nn.Linear(128, 64)
        self.linear3 = torch.nn.Linear(64, 1)
        self.relu = torch.nn.ReLU()

    def forward(self, X):
        output = self.linear1(X)
        output = self.relu(output)
        output = self.linear2(output)
        output = self.relu(output)
        output = self.linear3(output)
        return output

Training

learning_rate = 0.005
epoch_num = 100
batch_size = 64

dataset = HouseDataset(train_path)
train_dataloader = DataLoader(dataset=dataset, batch_size=batch_size, shuffle=True)

mymodel = MyModel(in_features=dataset.get_X_shape1())
optimizer = torch.optim.Adam(mymodel.parameters(), lr=learning_rate)
loss = torch.nn.MSELoss()

train_ls = []
for epoch in range(epoch_num):
    for X_train, y_train in train_dataloader:
        y_pred = mymodel(X_train)
        l = loss(y_pred, y_train)
        optimizer.zero_grad()
        l.backward()
        optimizer.step()
    # Record one value per epoch: the loss of the last batch
    train_ls.append(l.item())
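The loop above stores one loss value per epoch, taken from the final batch, which can be noisy. A common variant is to record the mean loss over all samples in the epoch. The sketch below shows that variant on synthetic stand-in data with a single linear layer; the data, model size, and hyperparameters are assumptions chosen only to keep the example self-contained.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic stand-in data (hypothetical): the target is the sum of the features.
X = torch.randn(256, 8)
y = X.sum(dim=1, keepdim=True)
loader = DataLoader(TensorDataset(X, y), batch_size=64, shuffle=True)

model = torch.nn.Linear(8, 1)
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)
loss_fn = torch.nn.MSELoss()

epoch_losses = []
for epoch in range(20):
    running_loss, seen = 0.0, 0
    for X_batch, y_batch in loader:
        batch_loss = loss_fn(model(X_batch), y_batch)
        optimizer.zero_grad()
        batch_loss.backward()
        optimizer.step()
        # Weight each batch by its size so a smaller final batch is not over-counted.
        running_loss += batch_loss.item() * X_batch.shape[0]
        seen += X_batch.shape[0]
    epoch_losses.append(running_loss / seen)

print(epoch_losses[0], epoch_losses[-1])
```

The resulting list has one entry per epoch, so it can be plotted against the epoch index in the same way as `train_ls`.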

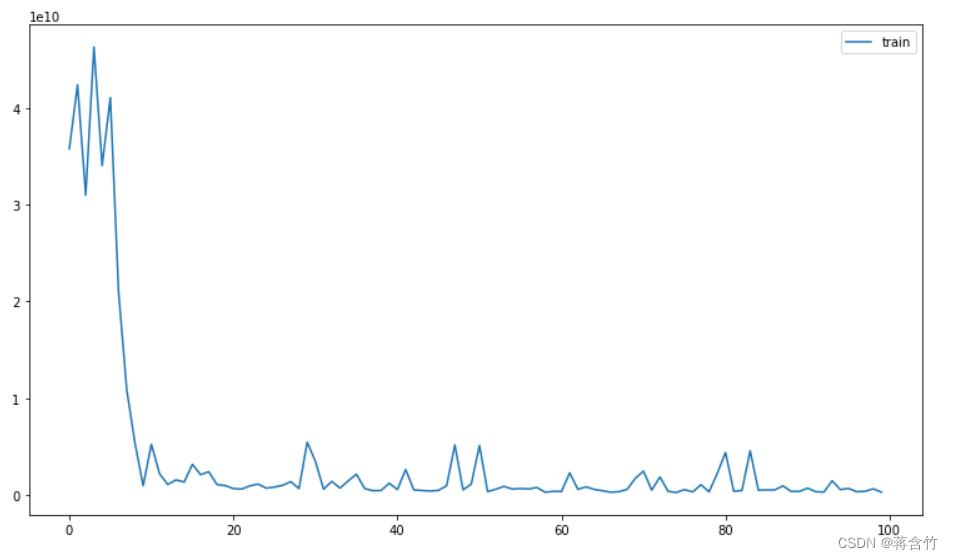

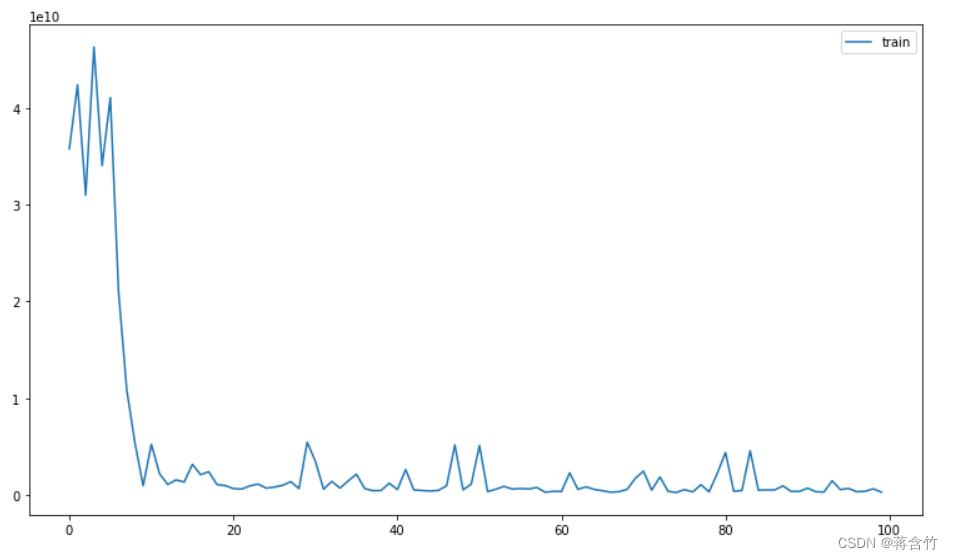

Plotting the curve: loss versus epoch

import matplotlib.pyplot as plt

plt.figure(figsize=(12.8, 7.2))

plt.plot(range(0, epoch_num), train_ls, label="train")

plt.legend()

plt.show()
