目录

说明:本内容为个人自用学习笔记,整理自b站西湖大学赵世钰老师的【强化学习的数学原理】课程,特别感谢老师分享讲解如此清楚的课程。

两个概念(optimal state value and optimal policy)+一个工具(Bellman optimality equation )

1. 最优策略(optimal policy)的定义

通过state value 评估一个 policy的好坏,如果两个策略

π

1

,

π

2

\pi_1, \pi_2

π1,π2 满足以下式子,则认为策略

π

1

\pi_1

π1 比

π

2

\pi_2

π2 更好

v

π

1

(

s

)

≥

v

π

2

(

s

)

f

o

r

a

l

l

s

∈

S

v_{\pi_1}(s) \ge v_{\pi_2}(s) \quad for\,all\, s \in S

vπ1(s)≥vπ2(s)foralls∈S

最优策略的定义:

A policy π ∗ \pi^* π∗ is optimal if v π ∗ ≥ v π ( s ) v_{\pi^{*}} \ge v_{\pi}(s) vπ∗≥vπ(s) for any other policy π \pi π .

四个问题:策略是否存在;是否唯一;随机还是确定;如何得到;

2. Bellman optimal policy(BOE)

-

elementwise form

v ( s ) = max π ∑ a π ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + γ ∑ s ′ p ( s ′ ∣ s , a ) v π ( s ′ ) ) , ∀ s ∈ S . = max π ∑ a π ( a ∣ s ) q ( s , a ) \begin{aligned} v (s) & = \max\limits_{\pi}\sum\limits_{a} \pi(a|s) \left( \sum\limits_{r} p(r|s, a)r + \gamma \sum\limits_{s'} p(s'|s,a) v_\pi (s') \right), \quad \forall s \in S. \\ & = \max\limits_{\pi}\sum\limits_{a} \pi(a|s)q(s,a) \end{aligned} v(s)=πmaxa∑π(a∣s)(r∑p(r∣s,a)r+γs′∑p(s′∣s,a)vπ(s′)),∀s∈S.=πmaxa∑π(a∣s)q(s,a)- 已知 p ( r ∣ s , a ) p(r|s, a) p(r∣s,a) , p ( s ′ ∣ s , a ) p(s'|s,a) p(s′∣s,a) ,未知需求 v π ( s ) v_\pi (s) vπ(s) , v π ( s ′ ) v_\pi (s') vπ(s′)

- bellman equation 是依赖于给定的 π \pi π ,bellman optimal eqation 未给定

-

matrix-vector form

v = max π ( r π + γ P π v ) \begin{aligned} v_ = \max\limits_{\pi}(r_{\pi}+\gamma P_{\pi}v) \end{aligned} v=πmax(rπ+γPπv)- 如何求解,方程是否有解,解是否唯一,和最优策略的关系

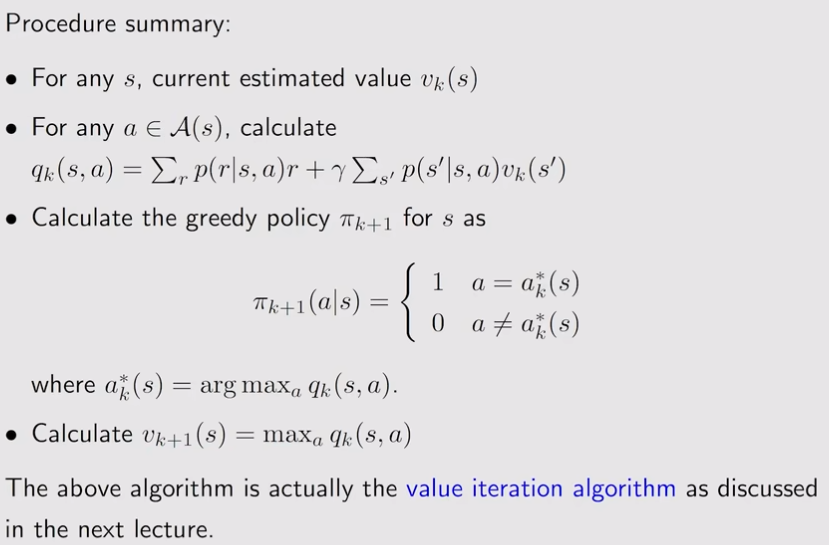

3. Rewrite Equation

v ( s ) = max π ∑ a π ( a ∣ s ) q ( s , a ) = max a ∈ A ( s ) q ( s , a ) a ∗ = arg max a q ( s , a ) π ( a ∣ s ) = { 1 a = a ∗ 0 a ≠ a ∗ v (s) = \max\limits_{\pi}\sum\limits_{a} \pi(a|s)q(s,a)= \max\limits_{a\in \mathcal{A}(s)}q(s,a)\\ a^* = \arg\max\limits_{a}q(s,a) \\ \pi(a|s)= \left\{ \begin{array}{ll} 1 & \textrm{$a=a^*$} \\ 0 &\textrm{$a \neq a^* $} \end{array} \right. v(s)=πmaxa∑π(a∣s)q(s,a)=a∈A(s)maxq(s,a)a∗=argamaxq(s,a)π(a∣s)={10a=a∗a=a∗

Let

f

(

v

)

:

=

max

π

(

r

π

+

γ

P

π

v

)

f(v) := \max\limits_{\pi}(r_\pi + \gamma P_\pi v)

f(v):=πmax(rπ+γPπv)

则 bellman optimal equation 成为(固定v,求解)

v

=

f

(

v

)

v = f(v)

v=f(v)

其中,

[

f

(

v

)

]

s

=

max

a

π

(

a

∣

s

)

q

(

s

,

a

)

s

∈

S

[f(v)]_s = \max \limits_{a} \pi(a|s)q(s,a)\quad s \in S

[f(v)]s=amaxπ(a∣s)q(s,a)s∈S

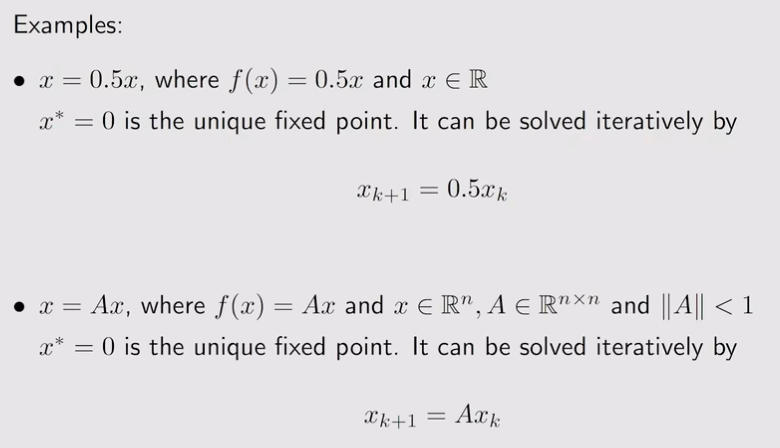

4. Contraction Mapping Theorem

For any equation that has the form of x = f ( x ) x=f(x) x=f(x) , if f f f is a contarction mapping, then

- Existence: there exists a fixed point x ∗ x^* x∗ satisfying f ( x ∗ ) = x ∗ f(x^*)=x^* f(x∗)=x∗

- Uniqueeness: The fixed point x ∗ x^* x∗ is unique

- Algorithm: Consider a sequence { x k } \{x_k\} {xk} where x k + 1 = f ( x k ) x_{k+1}=f(x_k) xk+1=f(xk) then x k → x ∗ x_k \rightarrow x^* xk→x∗ as k → ∞ k\rightarrow\infty k→∞ . Moreover, the convergence rate is exponentially.

5. Solution

v = f ( v ) = max π ( r π + γ P π v ) v = f(v) =\max\limits_{\pi}(r_\pi + \gamma P_\pi v) v=f(v)=πmax(rπ+γPπv)

f ( v ) f(v) f(v) is contraction mapping.

根据 Contraction Mapping Theorem可以得出:

- bellman 最优方程存在最优解且解唯一。

- 给定任意的 v 0 v_0 v0,最终 v k v_k vk 会以指数速度收敛于 v ∗ v^* v∗ ,收敛速度取决于 γ \gamma γ

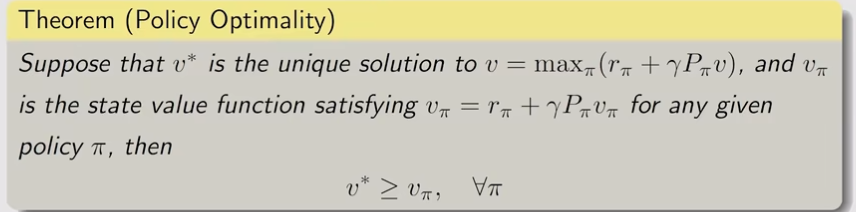

6. Analyzing optimal policies

v ( s ) = max π ∑ a π ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + γ ∑ s ′ p ( s ′ ∣ s , a ) v π ( s ′ ) ) , ∀ s ∈ S . v (s)= \max\limits_{\pi}\sum\limits_{a} \pi(a|s) \left( \sum\limits_{r} p(r|s, a)r + \gamma \sum\limits_{s'} p(s'|s,a) v_\pi (s') \right), \quad \forall s \in S. \\ v(s)=πmaxa∑π(a∣s)(r∑p(r∣s,a)r+γs′∑p(s′∣s,a)vπ(s′)),∀s∈S.

- 最优策略的影响因素

- Reward design: r(r → \rightarrow → ar+b 不会改变最优策略,Absolute reward values is not matter, it’s their relative values!)

- System model: p ( r ∣ s , a ) p(r|s, a) p(r∣s,a) , p ( s ′ ∣ s , a ) p(s'|s,a) p(s′∣s,a)

- Discount rate: γ \gamma γ (越小越近视,关注当前的收益)

- γ \gamma γ 决定了Agent选择最短路径。

1677

1677

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?