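In object detection, cost and application constraints often rule out simply raising the camera resolution. Even with a high-resolution camera, a large field of view can still leave the accuracy too low. This is where sub-pixel techniques come in. A sub-pixel technique subdivides the space between two adjacent pixels, so that edge points are located at sub-pixel coordinates (that is, floating-point coordinates). Existing techniques typically achieve 2× or 4× subdivision, and the best ones go further. Sub-pixel edge detection can therefore save cost while improving measurement accuracy.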

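Common sub-pixel techniques include gray-level moments, Hu moments, spatial moments and Zernike moments. After reading several papers and comparing accuracy, run time and implementation difficulty, I chose Zernike moments as the sub-pixel edge detection algorithm for my project. I will cover the theory of Zernike-moment edge detection in detail in the next post. Here I only outline the steps and implement them with OpenCV.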

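The overall procedure is as follows.

Step 1: choose the template size and compute each template's coefficients from the Zernike moment formulas. I chose a 7×7 template (larger templates are more accurate but slower). This requires seven Zernike templates: M00, M11R, M11I, M20, M31R, M31I and M40.

Step 2: preprocess the input image, for example by filtering and binarization. A Canny edge detection pass can also be applied.

Step 3: convolve the preprocessed image with each of the seven templates to obtain seven Zernike moment images.

Step 4: multiply the moments from Step 3 by the angle correction factor. This is needed because the Zernike edge model relies on the rotation invariance of Zernike moments to reduce every edge to a straight line x = n, so the result has to be rotated back.

Step 5: compute the distance parameter l and the gray-level step parameter k, and use k and l to decide whether the point is an edge point.

An OpenCV-based implementation follows.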
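Step 1 can be reproduced numerically. The sketch below is my own illustration, not necessarily how the tables in the code were produced. It assumes the following convention:

- The 7×7 window is mapped onto [-1, 1] × [-1, 1], with x pointing right (columns) and y pointing down (rows).
- Each template entry is the integral of the real or imaginary part of the conjugated Zernike basis function over the part of that pixel that lies inside the unit circle.
- The (n+1)/π normalization factor is omitted.

Under this convention, a full interior pixel of M00 has area (2/7)² ≈ 0.0816 and the center of M20 comes out at about -0.0794, matching the tables. The function name `zernike_templates` and the supersampling parameter `sub` are hypothetical.

```python
import numpy as np


def zernike_templates(n=7, sub=100):
    """Approximate the seven n x n Zernike templates by supersampling (sketch).

    Assumed convention: the window is mapped onto [-1, 1]^2, x points right
    (columns), y points down (rows), and each entry integrates the conjugated
    basis function over the part of the pixel inside the unit circle,
    without the (n+1)/pi factor.
    """
    h = 2.0 / n                                       # pixel side in unit-disk units
    centers = (np.arange(n) - (n - 1) / 2) * h        # pixel centers in [-1, 1]
    offs = ((np.arange(sub) + 0.5) / sub - 0.5) * h   # sample points inside one pixel
    dA = (h / sub) ** 2                               # area represented by one sample

    names = ["M00", "M11R", "M11I", "M20", "M31R", "M31I", "M40"]
    tpl = {name: np.zeros((n, n)) for name in names}
    for r in range(n):
        for c in range(n):
            # y varies along rows, x along columns
            y, x = np.meshgrid(centers[r] + offs, centers[c] + offs, indexing="ij")
            rho2 = x ** 2 + y ** 2
            inside = rho2 <= 1.0                      # only samples inside the unit circle
            basis = {
                "M00": np.ones_like(x),               # V00 = 1
                # conj(V11) = x - i*y_up; y points down, so Re = x, Im = y
                "M11R": x,
                "M11I": y,
                "M20": 2 * rho2 - 1,                  # V20 = 2 rho^2 - 1
                # conj(V31) = (3 rho^2 - 2) * (x - i*y_up)
                "M31R": (3 * rho2 - 2) * x,
                "M31I": (3 * rho2 - 2) * y,
                "M40": 6 * rho2 ** 2 - 6 * rho2 + 1,  # V40 = 6 rho^4 - 6 rho^2 + 1
            }
            for name in names:
                tpl[name][r, c] = basis[name][inside].sum() * dA
    return tpl


templates = zernike_templates()
print(np.round(templates["M00"], 4))
```

Printing the other templates the same way lets you compare them with the hard-coded arrays, or generate templates for a different size `n`.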

For the theory, see: Zernike矩之边缘检测(附源码) ("Edge detection with Zernike moments, with source code"), xiaoluo91's column, CSDN blog.

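The Python code below is for reference only. The theory referenced above covers the original Zernike moment method, whereas the code implements an improved version. It follows Gao Shiyi (高世一), 基于Zernike正交矩的图像亚像素边缘检测算法改进 ("Improved image sub-pixel edge detection algorithm based on Zernike orthogonal moments").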
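For orientation, below are the quantities the code evaluates at every candidate pixel (row i, column j), written out from the code itself. The notation is mine, not the paper's:

- Z denotes the output of `filter2D` with the template of the same name.
- N = `g_N` = 7.
- k_t = 20 is the threshold `k_value`.

$$
\theta = \operatorname{atan2}\left(Z_{31}^{I}, Z_{31}^{R}\right), \qquad
Z'_{11} = Z_{11}^{R}\cos\theta + Z_{11}^{I}\sin\theta, \qquad
Z'_{31} = Z_{31}^{R}\cos\theta + Z_{31}^{I}\sin\theta
$$

$$
l_1 = \sqrt{\frac{5Z_{40} + 3Z_{20}}{8Z_{20}}}, \qquad
l_2 = \sqrt{\frac{5Z'_{31} + Z'_{11}}{6Z'_{11}}}, \qquad
l = \frac{l_1 + l_2}{2}, \qquad
k = \frac{3Z'_{11}}{2\left(1 - l_2^{2}\right)^{3/2}}
$$

A pixel is accepted when $k \ge k_t$ and $|l_2 - l_1| \le \sqrt{2}/N$. Its sub-pixel position is then

$$
x_s = j + \frac{N l}{2}\cos\theta, \qquad y_s = i + \frac{N l}{2}\sin\theta
$$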

```python
import time

import cv2
import matplotlib.pyplot as plt
import numpy as np

g_N = 7  # template size: 7x7

# The seven 7x7 Zernike moment templates
M00 = np.array([0, 0.0287, 0.0686, 0.0807, 0.0686, 0.0287, 0,
                0.0287, 0.0815, 0.0816, 0.0816, 0.0816, 0.0815, 0.0287,
                0.0686, 0.0816, 0.0816, 0.0816, 0.0816, 0.0816, 0.0686,
                0.0807, 0.0816, 0.0816, 0.0816, 0.0816, 0.0816, 0.0807,
                0.0686, 0.0816, 0.0816, 0.0816, 0.0816, 0.0816, 0.0686,
                0.0287, 0.0815, 0.0816, 0.0816, 0.0816, 0.0815, 0.0287,
                0, 0.0287, 0.0686, 0.0807, 0.0686, 0.0287, 0]).reshape((7, 7))

M11R = np.array([0, -0.015, -0.019, 0, 0.019, 0.015, 0,
                 -0.0224, -0.0466, -0.0233, 0, 0.0233, 0.0466, 0.0224,
                 -0.0573, -0.0466, -0.0233, 0, 0.0233, 0.0466, 0.0573,
                 -0.069, -0.0466, -0.0233, 0, 0.0233, 0.0466, 0.069,
                 -0.0573, -0.0466, -0.0233, 0, 0.0233, 0.0466, 0.0573,
                 -0.0224, -0.0466, -0.0233, 0, 0.0233, 0.0466, 0.0224,
                 0, -0.015, -0.019, 0, 0.019, 0.015, 0]).reshape((7, 7))

M11I = np.array([0, -0.0224, -0.0573, -0.069, -0.0573, -0.0224, 0,
                 -0.015, -0.0466, -0.0466, -0.0466, -0.0466, -0.0466, -0.015,
                 -0.019, -0.0233, -0.0233, -0.0233, -0.0233, -0.0233, -0.019,
                 0, 0, 0, 0, 0, 0, 0,
                 0.019, 0.0233, 0.0233, 0.0233, 0.0233, 0.0233, 0.019,
                 0.015, 0.0466, 0.0466, 0.0466, 0.0466, 0.0466, 0.015,
                 0, 0.0224, 0.0573, 0.069, 0.0573, 0.0224, 0]).reshape((7, 7))

M20 = np.array([0, 0.0225, 0.0394, 0.0396, 0.0394, 0.0225, 0,
                0.0225, 0.0271, -0.0128, -0.0261, -0.0128, 0.0271, 0.0225,
                0.0394, -0.0128, -0.0528, -0.0661, -0.0528, -0.0128, 0.0394,
                0.0396, -0.0261, -0.0661, -0.0794, -0.0661, -0.0261, 0.0396,
                0.0394, -0.0128, -0.0528, -0.0661, -0.0528, -0.0128, 0.0394,
                0.0225, 0.0271, -0.0128, -0.0261, -0.0128, 0.0271, 0.0225,
                0, 0.0225, 0.0394, 0.0396, 0.0394, 0.0225, 0]).reshape((7, 7))

M31R = np.array([0, -0.0103, -0.0073, 0, 0.0073, 0.0103, 0,
                 -0.0153, -0.0018, 0.0162, 0, -0.0162, 0.0018, 0.0153,
                 -0.0223, 0.0324, 0.0333, 0, -0.0333, -0.0324, 0.0223,
                 -0.0190, 0.0438, 0.0390, 0, -0.0390, -0.0438, 0.0190,
                 -0.0223, 0.0324, 0.0333, 0, -0.0333, -0.0324, 0.0223,
                 -0.0153, -0.0018, 0.0162, 0, -0.0162, 0.0018, 0.0153,
                 0, -0.0103, -0.0073, 0, 0.0073, 0.0103, 0]).reshape(7, 7)

M31I = np.array([0, -0.0153, -0.0223, -0.019, -0.0223, -0.0153, 0,
                 -0.0103, -0.0018, 0.0324, 0.0438, 0.0324, -0.0018, -0.0103,
                 -0.0073, 0.0162, 0.0333, 0.039, 0.0333, 0.0162, -0.0073,
                 0, 0, 0, 0, 0, 0, 0,
                 0.0073, -0.0162, -0.0333, -0.039, -0.0333, -0.0162, 0.0073,
                 0.0103, 0.0018, -0.0324, -0.0438, -0.0324, 0.0018, 0.0103,
                 0, 0.0153, 0.0223, 0.0190, 0.0223, 0.0153, 0]).reshape(7, 7)

M40 = np.array([0, 0.013, 0.0056, -0.0018, 0.0056, 0.013, 0,
                0.0130, -0.0186, -0.0323, -0.0239, -0.0323, -0.0186, 0.0130,
                0.0056, -0.0323, 0.0125, 0.0406, 0.0125, -0.0323, 0.0056,
                -0.0018, -0.0239, 0.0406, 0.0751, 0.0406, -0.0239, -0.0018,
                0.0056, -0.0323, 0.0125, 0.0406, 0.0125, -0.0323, 0.0056,
                0.0130, -0.0186, -0.0323, -0.0239, -0.0323, -0.0186, 0.0130,
                0, 0.013, 0.0056, -0.0018, 0.0056, 0.013, 0]).reshape(7, 7)


def zernike_detection(path):
    # Step 2: preprocessing (grayscale, median blur, adaptive threshold)
    img = cv2.imread(path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    blur_img = cv2.medianBlur(img, 13)
    c_img = cv2.adaptiveThreshold(blur_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                  cv2.THRESH_BINARY_INV, 7, 4)

    # Step 3: one filter2D per template. filter2D correlates (the kernel is not
    # flipped), so each output pixel is the moment of the 7x7 window around it.
    ZerImgM00 = cv2.filter2D(c_img, cv2.CV_64F, M00)
    ZerImgM11R = cv2.filter2D(c_img, cv2.CV_64F, M11R)
    ZerImgM11I = cv2.filter2D(c_img, cv2.CV_64F, M11I)
    ZerImgM20 = cv2.filter2D(c_img, cv2.CV_64F, M20)
    ZerImgM31R = cv2.filter2D(c_img, cv2.CV_64F, M31R)
    ZerImgM31I = cv2.filter2D(c_img, cv2.CV_64F, M31I)
    ZerImgM40 = cv2.filter2D(c_img, cv2.CV_64F, M40)

    point_temporary_x = []
    point_temporary_y = []
    # Candidate pixels: every (column, row) where the M00 response is non-zero
    scatter_arr = cv2.findNonZero(ZerImgM00).reshape(-1, 2)

    for idx in scatter_arr:
        j, i = idx  # j: column, i: row
        # Step 4: edge orientation and angle-corrected (rotated) moments
        theta_temporary = np.arctan2(ZerImgM31I[i][j], ZerImgM31R[i][j])
        rotated_z11 = np.sin(theta_temporary) * ZerImgM11I[i][j] + np.cos(theta_temporary) * ZerImgM11R[i][j]
        rotated_z31 = np.sin(theta_temporary) * ZerImgM31I[i][j] + np.cos(theta_temporary) * ZerImgM31R[i][j]

        # Step 5: two estimates of the distance l, and the step height k.
        # A negative argument to sqrt yields NaN (with a RuntimeWarning);
        # NaN fails the comparisons below, so such pixels are skipped.
        l_method1 = np.sqrt((5 * ZerImgM40[i][j] + 3 * ZerImgM20[i][j]) / (8 * ZerImgM20[i][j]))
        l_method2 = np.sqrt((5 * rotated_z31 + rotated_z11) / (6 * rotated_z11))
        l = (l_method1 + l_method2) / 2
        k = 3 * rotated_z11 / (2 * (1 - l_method2 ** 2) ** 1.5)
        # h = (ZerImgM00[i][j] - k * np.pi / 2 + k * np.arcsin(l_method2)
        #      + k * l_method2 * (1 - l_method2 ** 2) ** 0.5) / np.pi

        k_value = 20.0            # threshold on the step height k
        l_value = 2 ** 0.5 / g_N  # allowed disagreement between the two l estimates
        absl = np.abs(l_method2 - l_method1)

        if k >= k_value and absl <= l_value:
            # Move from the pixel center along the edge normal by N*l/2 pixels
            y = i + g_N * l * np.sin(theta_temporary) / 2
            x = j + g_N * l * np.cos(theta_temporary) / 2
            point_temporary_x.append(x)
            point_temporary_y.append(y)

    return point_temporary_x, point_temporary_y


path = 'E:/program/image/lo.jpg'  # change this to your own image path!

time1 = time.time()
point_temporary_x, point_temporary_y = zernike_detection(path)
time2 = time.time()
print(time2 - time1)  # run time in seconds
```

The lists point_temporary_x and point_temporary_y hold the detected sub-pixel points.
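The loop above handles one candidate pixel at a time in Python, which can be slow on large images. The sketch below computes the same Steps 4 and 5 for all candidates at once with NumPy array operations. It is not the author's code, and the function name `zernike_points_vectorized` and its signature are hypothetical. It takes the seven float moment images (for example `ZerImgM00` … `ZerImgM40`) and uses `np.nonzero` in place of `cv2.findNonZero`; both list candidates in row-major order.

```python
import numpy as np


def zernike_points_vectorized(z00, z11r, z11i, z20, z31r, z31i, z40,
                              n=7, k_value=20.0):
    """Steps 4-5 for every candidate pixel at once (hypothetical helper).

    Each argument is a float moment image of the same shape.
    Returns the sub-pixel x and y coordinates as 1-D arrays.
    """
    rows, cols = np.nonzero(z00)  # candidates, row-major like findNonZero
    r11, i11 = z11r[rows, cols], z11i[rows, cols]
    r31, i31 = z31r[rows, cols], z31i[rows, cols]
    m20, m40 = z20[rows, cols], z40[rows, cols]

    theta = np.arctan2(i31, r31)
    s, c = np.sin(theta), np.cos(theta)
    rot11 = s * i11 + c * r11  # angle-corrected Z11
    rot31 = s * i31 + c * r31  # angle-corrected Z31

    # Invalid values (sqrt of negatives, 0/0) become NaN; NaN compares False,
    # so those pixels are dropped exactly as in the per-pixel loop
    with np.errstate(divide="ignore", invalid="ignore"):
        l1 = np.sqrt((5 * m40 + 3 * m20) / (8 * m20))
        l2 = np.sqrt((5 * rot31 + rot11) / (6 * rot11))
        l = (l1 + l2) / 2
        k = 3 * rot11 / (2 * (1 - l2 ** 2) ** 1.5)
        keep = (k >= k_value) & (np.abs(l2 - l1) <= 2 ** 0.5 / n)

    x = cols[keep] + n * l[keep] * c[keep] / 2
    y = rows[keep] + n * l[keep] * s[keep] / 2
    return x, y
```

With the moment images from `zernike_detection()`, the call would be `x, y = zernike_points_vectorized(ZerImgM00, ZerImgM11R, ZerImgM11I, ZerImgM20, ZerImgM31R, ZerImgM31I, ZerImgM40)`. Its speed has not been measured against the loop here.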

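As a beginner, I did not know how to display sub-pixel points on an image (if anyone knows how, please teach me!), so I plotted them as a scatter plot instead: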

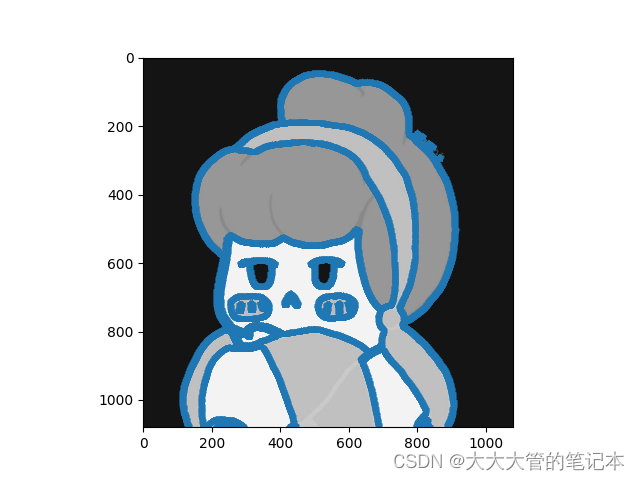

Original image:

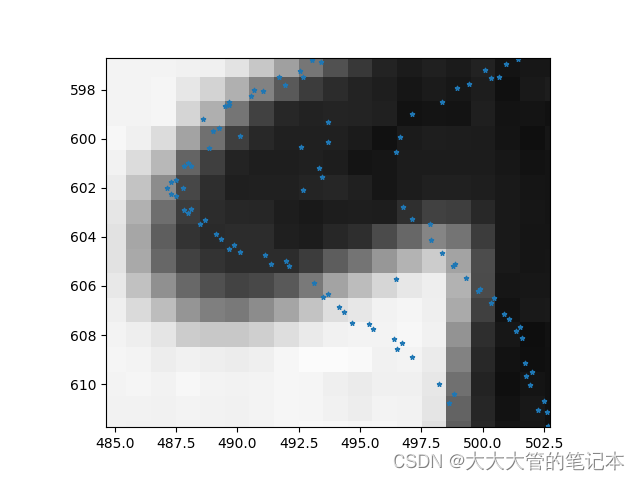

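Sub-pixel scatter plot:

Double edges appear, as shown below:

They arise because the edge line differs in gray level from the background on both of its sides. (My guess:) detecting directly on a binary image should not produce double edges.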

-----------------------------------------------2022.10.31-----------------------------------------------

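With some expert help, I managed to display the sub-pixel points on the image! The result:

The figure below shows the edges at the corner of the right eye: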

The complete code is the first listing with the display code below appended to it:

```python
import cv2
import matplotlib.pyplot as plt
import numpy as np

# g_N, the templates M00 ... M40, zernike_detection(), path and the timing
# code are identical to the first listing above and are omitted here.

# gray: the image the detection was run on
gray = cv2.imread(path, 0)
plt.imshow(gray, cmap="gray")

# point: the detected sub-pixel points (row 0 = x, row 1 = y)
point = np.array([point_temporary_x, point_temporary_y])

# s: marker size of the displayed points
plt.scatter(point[0, :], point[1, :], s=10, marker="*")
plt.show()
```

-----------------------------------------------2024.04.26-------------------------------------------

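Many people have asked me how to get rid of the double edges, and I don't have a really good solution. I did come up with a workaround, though. It may not be sound in theory, but it does remove the double edges in practice. The idea is simple: after edge detection, keep only the edge points whose sub-pixel offset from their pixel is less than 1.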
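The filter just described can also be applied as a separate post-processing step, once each sub-pixel point (x, y) and the pixel (j, i) it came from are known. The sketch below is a hypothetical helper, not the author's code. It uses the symmetric form |x - j| < 1 and |y - i| < 1. The listing that follows instead checks only the upper bound of each offset, so the two can give different results.

```python
import numpy as np


def keep_small_offsets(x, y, cols, rows, max_offset=1.0):
    """Keep sub-pixel points lying within max_offset pixels of their source pixel.

    x, y: sub-pixel coordinates; cols, rows: the pixel (j, i) each point came from.
    Symmetric variant of the double-edge workaround (hypothetical helper).
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    dx = x - np.asarray(cols, float)
    dy = y - np.asarray(rows, float)
    keep = (np.abs(dx) < max_offset) & (np.abs(dy) < max_offset)
    return x[keep], y[keep]


# The second point lies 1.5 px below its source pixel (row 6), so it is dropped
x, y = keep_small_offsets([10.3, 20.0], [5.2, 7.5], [10, 20], [5, 6])
print(x, y)
```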

I also found that Canny is slightly faster than median blur + adaptive thresholding, so I switched to it. Take whichever version you need.

Updated code:

```python
import time

import cv2
import matplotlib.pyplot as plt
import numpy as np

# g_N = 7 and the seven templates M00 ... M40 are identical to the first
# listing above; copy them here.


def zernike_detection(path):
    img = cv2.imread(path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # blur_img = cv2.medianBlur(img, 3)
    # c_img = cv2.adaptiveThreshold(blur_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 7, 4)
    # Preprocessing is now a Canny edge map instead of median blur + adaptive threshold
    c_img = cv2.Canny(img, 0, 255)

    ZerImgM00 = cv2.filter2D(c_img, cv2.CV_64F, M00)
    ZerImgM11R = cv2.filter2D(c_img, cv2.CV_64F, M11R)
    ZerImgM11I = cv2.filter2D(c_img, cv2.CV_64F, M11I)
    ZerImgM20 = cv2.filter2D(c_img, cv2.CV_64F, M20)
    ZerImgM31R = cv2.filter2D(c_img, cv2.CV_64F, M31R)
    ZerImgM31I = cv2.filter2D(c_img, cv2.CV_64F, M31I)
    ZerImgM40 = cv2.filter2D(c_img, cv2.CV_64F, M40)

    point_temporary_x = []
    point_temporary_y = []
    scatter_arr = cv2.findNonZero(ZerImgM00).reshape(-1, 2)

    for idx in scatter_arr:
        j, i = idx  # j: column, i: row
        theta_temporary = np.arctan2(ZerImgM31I[i][j], ZerImgM31R[i][j])
        rotated_z11 = np.sin(theta_temporary) * ZerImgM11I[i][j] + np.cos(theta_temporary) * ZerImgM11R[i][j]
        rotated_z31 = np.sin(theta_temporary) * ZerImgM31I[i][j] + np.cos(theta_temporary) * ZerImgM31R[i][j]

        l_method1 = np.sqrt((5 * ZerImgM40[i][j] + 3 * ZerImgM20[i][j]) / (8 * ZerImgM20[i][j]))
        l_method2 = np.sqrt((5 * rotated_z31 + rotated_z11) / (6 * rotated_z11))
        l = (l_method1 + l_method2) / 2
        k = 3 * rotated_z11 / (2 * (1 - l_method2 ** 2) ** 1.5)
        # h = (ZerImgM00[i][j] - k * np.pi / 2 + k * np.arcsin(l_method2)
        #      + k * l_method2 * (1 - l_method2 ** 2) ** 0.5) / np.pi

        k_value = 20.0
        l_value = 2 ** 0.5 / g_N
        absl = np.abs(l_method2 - l_method1)

        # Offset from the pixel center along the edge normal
        dy = g_N * l * np.sin(theta_temporary) / 2
        dx = g_N * l * np.cos(theta_temporary) / 2
        # Double-edge workaround: additionally require the offsets to be < 1.
        # Only the upper bound is checked, so negative offsets of any size
        # still pass; use abs(dx) < 1 and abs(dy) < 1 for a symmetric bound.
        if k >= k_value and absl <= l_value and dy < 1 and dx < 1:
            point_temporary_x.append(j + dx)
            point_temporary_y.append(i + dy)

    return point_temporary_x, point_temporary_y


path = 'E:/program/image/lo.jpg'  # change this to your own image path!

time1 = time.time()
point_temporary_x, point_temporary_y = zernike_detection(path)
time2 = time.time()
print(time2 - time1)  # run time in seconds

# gray: background for the plot, here the Canny edge map of the input image
gray = cv2.imread(path, 0)
gray = cv2.Canny(gray, 0, 255)
plt.imshow(gray, cmap="gray")

# point: the detected sub-pixel points (row 0 = x, row 1 = y)
point = np.array([point_temporary_x, point_temporary_y])

# s: marker size of the displayed points
plt.scatter(point[0, :], point[1, :], s=10, marker="*")
plt.show()
```

Adapted from: OpenCV实现基于Zernike矩的亚像素边缘检测 ("Sub-pixel edge detection based on Zernike moments with OpenCV"), zilanpotou182's blog, CSDN.
