Purpose:

DataLayer reads the contents of a database into the corresponding Blobs, one batch at a time.

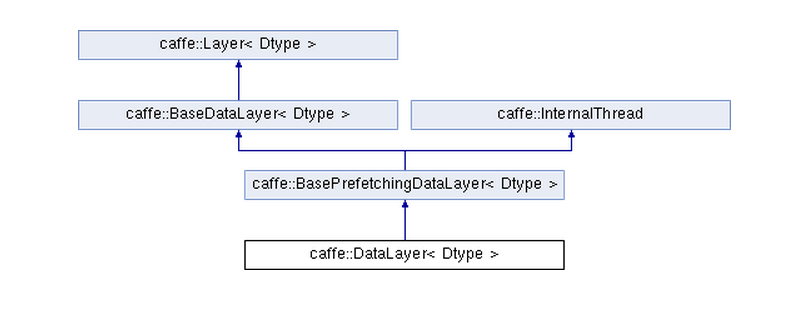

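First, look at its inheritance hierarchy.

Note that DataLayer does not inherit directly from BaseDataLayer. It inherits from BasePrefetchingDataLayer, which in turn derives from BaseDataLayer and InternalThread. The reason is that DataLayer reads from the database in parallel, so it needs a separate thread to prefetch the next batch ahead of time.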
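The sketch below is a minimal illustration of the prefetching idea. It makes three substitutions:

- `std::thread` takes the place of Caffe's InternalThread.
- A `std::vector<float>` takes the place of `prefetch_data_`.
- The class names `PrefetchingSource` and `CountingSource` are hypothetical.

The method names follow `CreatePrefetchThread()`, `JoinPrefetchThread()` and `InternalThreadEntry()`. `Forward()` roughly follows the prefetching forward pass: wait for the background batch, copy it out, then immediately start prefetching the next one.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical stand-in for BasePrefetchingDataLayer: std::vector<float>
// plays the role of prefetch_data_, std::thread the role of InternalThread.
class PrefetchingSource {
 public:
  explicit PrefetchingSource(int batch_size) : prefetch_(batch_size) {
    assert(batch_size > 0);
  }
  virtual ~PrefetchingSource() { JoinPrefetchThread(); }

  // Like CreatePrefetchThread(): start filling the next batch in the background.
  void CreatePrefetchThread() {
    thread_ = std::thread(&PrefetchingSource::InternalThreadEntry, this);
  }
  // Like JoinPrefetchThread(): block until the background batch is complete.
  void JoinPrefetchThread() {
    if (thread_.joinable()) thread_.join();
  }
  // Roughly what the forward pass does: wait for the prefetched batch,
  // copy it out, then immediately start prefetching the next one.
  std::vector<float> Forward() {
    JoinPrefetchThread();
    std::vector<float> top = prefetch_;
    CreatePrefetchThread();
    return top;
  }

 protected:
  // Runs on the worker thread; subclasses (like DataLayer) read data here.
  virtual void InternalThreadEntry() = 0;
  std::vector<float> prefetch_;

 private:
  std::thread thread_;
};

// Hypothetical DataLayer analogue that fills each batch with a running counter.
class CountingSource : public PrefetchingSource {
 public:
  explicit CountingSource(int batch_size) : PrefetchingSource(batch_size) {}
  // Join here, as DataLayer's destructor does, so the worker never runs
  // InternalThreadEntry() on a partially destroyed object.
  ~CountingSource() override { JoinPrefetchThread(); }

 protected:
  void InternalThreadEntry() override {
    for (float& v : prefetch_) v = static_cast<float>(next_++);
  }

 private:
  int next_ = 0;
};
```

To use it, construct a `CountingSource`, call `CreatePrefetchThread()` once to start the first prefetch, then call `Forward()` once per iteration. With a batch size of 3, the first two calls return {0, 1, 2} and {3, 4, 5}. The derived destructor joins the thread for the same reason `DataLayer::~DataLayer()` calls `JoinPrefetchThread()`.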

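DataLayer has two pointer members that hold the database and its cursor:

```cpp
shared_ptr<db::DB> db_;
shared_ptr<db::Cursor> cursor_;
```

From its base class BasePrefetchingDataLayer it inherits the following members. They hold the prefetched data, the prefetched labels, and the transformed data, respectively:

```cpp
protected:
  Blob<Dtype> prefetch_data_;
  Blob<Dtype> prefetch_label_;
  Blob<Dtype> transformed_data_;
```

From BaseDataLayer it inherits the flag that says whether labels are produced:

```cpp
bool output_labels_;
```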
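DataLayer uses `cursor_` only through a few calls: `value()`, `Next()`, `valid()` and `SeekToFirst()`. The Caffe cursor interface also has `key()`.

The sketch below is a hypothetical in-memory backend; `InMemoryDB` and `InMemoryCursor` are not Caffe classes. It mimics those calls over a `std::map`, so records come back in sorted key order, which is how LevelDB and LMDB iterate.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical in-memory backend exposing the cursor calls DataLayer uses.
// A std::map keeps records sorted by key, like LevelDB/LMDB iteration.
class InMemoryCursor {
 public:
  // The cursor refers to the DB's records, so it must not outlive the DB.
  explicit InMemoryCursor(const std::map<std::string, std::string>& records)
      : records_(records), it_(records_.begin()) {}
  void SeekToFirst() { it_ = records_.begin(); }
  void Next() {
    assert(valid());
    ++it_;
  }
  // key()/value() may only be called while valid() is true.
  std::string key() const { return it_->first; }
  std::string value() const { return it_->second; }
  bool valid() const { return it_ != records_.end(); }

 private:
  const std::map<std::string, std::string>& records_;
  std::map<std::string, std::string>::const_iterator it_;
};

class InMemoryDB {
 public:
  void Put(const std::string& key, const std::string& value) {
    records_[key] = value;
  }
  // Like db::DB::NewCursor(), returns a new cursor the caller takes ownership
  // of (DataLayer stores it with cursor_.reset(...)).
  InMemoryCursor* NewCursor() const { return new InMemoryCursor(records_); }

 private:
  std::map<std::string, std::string> records_;
};
```

In use, wrap the result the way DataLayerSetUp() does: `std::shared_ptr<InMemoryCursor> cursor(db.NewCursor());`. Then iterate with `value()` and `Next()` until `valid()` returns false.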

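The member function InternalThreadEntry() does the actual reading. In it, the following pointers refer to the buffers that receive the batch:

```cpp
Dtype* top_data = this->prefetch_data_.mutable_cpu_data();
Dtype* top_label = NULL;  // suppress warnings about uninitialized variables
```

top_label is set to prefetch_label_.mutable_cpu_data() only when output_labels_ is true.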
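Stripped of Caffe types, the loop in InternalThreadEntry() amounts to the sketch below. It assumes two hypothetical stand-ins:

- A `Record` struct plays the role of an already decoded and transformed Datum.
- A `std::vector<Record>` plays the role of the database and cursor.

Labels are written only when the label pointer is non-null, mirroring the `top_label`/`output_labels_` logic. Reading wraps around at the end, as the cursor does.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical record: a flattened image plus its label (stand-in for Datum).
struct Record {
  std::vector<float> pixels;
  int label;
};

// Fills `data` with batch_size records read in order starting at `pos`.
// Labels are written only if `labels` is non-null (like output_labels_).
// Returns the read position for the next batch.
std::size_t FillBatch(const std::vector<Record>& db, std::size_t pos,
                      int batch_size, float* data, float* labels) {
  assert(!db.empty());
  const std::size_t item_size = db[0].pixels.size();
  for (int item_id = 0; item_id < batch_size; ++item_id) {
    const Record& r = db[pos];
    assert(r.pixels.size() == item_size);
    // Start of this item's slot, like prefetch_data_.offset(item_id).
    float* dst = data + item_id * item_size;
    for (std::size_t i = 0; i < item_size; ++i) dst[i] = r.pixels[i];
    if (labels != nullptr) labels[item_id] = static_cast<float>(r.label);
    ++pos;                          // like cursor_->Next()
    if (pos == db.size()) pos = 0;  // like !valid() -> SeekToFirst()
  }
  return pos;
}
```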

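As usual, each database record is first parsed into a Datum. Each item is then written directly into its slot in prefetch_data_. transformed_data_, which holds a single item, is re-pointed at that slot with set_cpu_data(), so the transformer writes in place without an extra copy:

```cpp
Datum datum;
datum.ParseFromString(cursor_->value());
// ...
int offset = this->prefetch_data_.offset(item_id);
this->transformed_data_.set_cpu_data(top_data + offset);
// ...
top_label[item_id] = datum.label();
```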
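`Blob::offset(n, c, h, w)` returns the flat index ((n * C + c) * H + h) * W + w of an NCHW blob. So `offset(item_id)` is where item `item_id` starts.

The sketch below uses two hypothetical helpers: `MiniBlob`, which is not Caffe's Blob, and `FakeTransform()`, a stand-in for `data_transformer_->Transform()`. It shows why re-pointing `transformed_data_` is enough: the transform writes straight into the batch buffer.

```cpp
#include <cassert>
#include <vector>

// Hypothetical minimal NCHW blob view (not Caffe's Blob); `data` is not owned.
struct MiniBlob {
  int num, channels, height, width;
  float* data;
  // Same formula as Blob::offset(n, c, h, w).
  int offset(int n, int c = 0, int h = 0, int w = 0) const {
    assert(n >= 0 && n < num && c >= 0 && c < channels);
    assert(h >= 0 && h < height && w >= 0 && w < width);
    return ((n * channels + c) * height + h) * width + w;
  }
};

// Stand-in for data_transformer_->Transform(): writes one item into `view`.
void FakeTransform(float value, MiniBlob* view) {
  assert(view->data != nullptr);
  const int count = view->channels * view->height * view->width;
  for (int i = 0; i < count; ++i) view->data[i] = value;
}
```

For a 2 x 3 x 2 x 2 batch, `offset(1)` is 12. Aliasing a one-item view at `data + offset(1)` and "transforming" into it fills elements 12 to 23 of the shared storage.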

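Reading from the database also goes through the cursor. cursor_->Next() advances to the next record, and once the cursor is no longer valid it is rewound with cursor_->SeekToFirst(). Note that records are always read in the order they are stored. The only randomness on the read side is the optional rand_skip, which skips a random number of records once during setup. You must therefore make sure the data is already in random order when you write it into the database.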
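Because the read order is fixed, shuffling has to happen when the database is written. Caffe's convert_imageset tool has a `--shuffle` flag for this.

The sketch below illustrates the idea; it is not that tool's code. `ShuffledKeys()` is a hypothetical helper with three steps:

1. Shuffle (filename, label) pairs with a seeded `std::mt19937`.
2. Prefix each key with a zero-padded index.
3. Rely on that prefix so the database's sorted key order equals the shuffled order.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <random>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: shuffle once, then key each record by its position
// so that sorted key order (how the DB iterates) equals the shuffled order.
std::vector<std::pair<std::string, int>> ShuffledKeys(
    std::vector<std::pair<std::string, int>> items, unsigned seed) {
  std::mt19937 rng(seed);
  std::shuffle(items.begin(), items.end(), rng);
  std::vector<std::pair<std::string, int>> keyed;
  for (std::size_t i = 0; i < items.size(); ++i) {
    char prefix[16];
    std::snprintf(prefix, sizeof(prefix), "%08zu_", i);  // e.g. "00000003_"
    keyed.emplace_back(prefix + items[i].first, items[i].second);
  }
  // Zero-padded prefixes keep keys sorted in write order.
  assert(std::is_sorted(keyed.begin(), keyed.end()));
  return keyed;
}
```

A fixed seed makes the shuffle reproducible, which helps when a dataset has to be rebuilt identically.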

=============

Source code (data_layer.cpp):

```cpp
#include <opencv2/core/core.hpp>
#include <stdint.h>

#include <string>
#include <vector>

#include "caffe/common.hpp"
#include "caffe/data_layers.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"
#include "caffe/util/benchmark.hpp"
#include "caffe/util/io.hpp"
#include "caffe/util/math_functions.hpp"
#include "caffe/util/rng.hpp"

namespace caffe {

template <typename Dtype>
DataLayer<Dtype>::~DataLayer<Dtype>() {
  this->JoinPrefetchThread();
}

template <typename Dtype>
void DataLayer<Dtype>::DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
  // Initialize DB
  db_.reset(db::GetDB(this->layer_param_.data_param().backend()));
  db_->Open(this->layer_param_.data_param().source(), db::READ);
  cursor_.reset(db_->NewCursor());

  // Check if we should randomly skip a few data points
  if (this->layer_param_.data_param().rand_skip()) {
    unsigned int skip = caffe_rng_rand() %
                        this->layer_param_.data_param().rand_skip();
    LOG(INFO) << "Skipping first " << skip << " data points.";
    while (skip-- > 0) {
      cursor_->Next();
    }
  }
  // Read a data point, and use it to initialize the top blob.
  Datum datum;
  datum.ParseFromString(cursor_->value());

  bool force_color = this->layer_param_.data_param().force_encoded_color();
  if ((force_color && DecodeDatum(&datum, true)) ||
      DecodeDatumNative(&datum)) {
    LOG(INFO) << "Decoding Datum";
  }
  // image
  int crop_size = this->layer_param_.transform_param().crop_size();
  if (crop_size > 0) {
    top[0]->Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), crop_size, crop_size);
    this->prefetch_data_.Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), crop_size, crop_size);
    this->transformed_data_.Reshape(1, datum.channels(), crop_size, crop_size);
  } else {
    top[0]->Reshape(
        this->layer_param_.data_param().batch_size(), datum.channels(),
        datum.height(), datum.width());
    this->prefetch_data_.Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), datum.height(), datum.width());
    this->transformed_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
  }
  LOG(INFO) << "output data size: " << top[0]->num() << ","
      << top[0]->channels() << "," << top[0]->height() << ","
      << top[0]->width();
  // label
  if (this->output_labels_) {
    vector<int> label_shape(1, this->layer_param_.data_param().batch_size());
    top[1]->Reshape(label_shape);
    this->prefetch_label_.Reshape(label_shape);
  }
}

// This function is used to create a thread that prefetches the data.
template <typename Dtype>
void DataLayer<Dtype>::InternalThreadEntry() {
  CPUTimer batch_timer;
  batch_timer.Start();
  double read_time = 0;
  double trans_time = 0;
  CPUTimer timer;
  CHECK(this->prefetch_data_.count());
  CHECK(this->transformed_data_.count());

  // Reshape on single input batches for inputs of varying dimension.
  const int batch_size = this->layer_param_.data_param().batch_size();
  const int crop_size = this->layer_param_.transform_param().crop_size();
  bool force_color = this->layer_param_.data_param().force_encoded_color();
  if (batch_size == 1 && crop_size == 0) {
    Datum datum;
    datum.ParseFromString(cursor_->value());
    if (datum.encoded()) {
      if (force_color) {
        DecodeDatum(&datum, true);
      } else {
        DecodeDatumNative(&datum);
      }
    }
    this->prefetch_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
    this->transformed_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
  }

  Dtype* top_data = this->prefetch_data_.mutable_cpu_data();
  Dtype* top_label = NULL;  // suppress warnings about uninitialized variables

  if (this->output_labels_) {
    top_label = this->prefetch_label_.mutable_cpu_data();
  }
  for (int item_id = 0; item_id < batch_size; ++item_id) {
    timer.Start();
    // get a blob
    Datum datum;
    datum.ParseFromString(cursor_->value());

    cv::Mat cv_img;
    if (datum.encoded()) {
      if (force_color) {
        cv_img = DecodeDatumToCVMat(datum, true);
      } else {
        cv_img = DecodeDatumToCVMatNative(datum);
      }
      if (cv_img.channels() != this->transformed_data_.channels()) {
        LOG(WARNING) << "Your dataset contains encoded images with mixed "
        << "channel sizes. Consider adding a 'force_color' flag to the "
        << "model definition, or rebuild your dataset using "
        << "convert_imageset.";
      }
    }
    read_time += timer.MicroSeconds();
    timer.Start();

    // Apply data transformations (mirror, scale, crop...)
    int offset = this->prefetch_data_.offset(item_id);
    this->transformed_data_.set_cpu_data(top_data + offset);
    if (datum.encoded()) {
      this->data_transformer_->Transform(cv_img, &(this->transformed_data_));
    } else {
      this->data_transformer_->Transform(datum, &(this->transformed_data_));
    }
    if (this->output_labels_) {
      top_label[item_id] = datum.label();
    }
    trans_time += timer.MicroSeconds();
    // go to the next iter
    cursor_->Next();
    if (!cursor_->valid()) {
      DLOG(INFO) << "Restarting data prefetching from start.";
      cursor_->SeekToFirst();
    }
  }
  batch_timer.Stop();
  DLOG(INFO) << "Prefetch batch: " << batch_timer.MilliSeconds() << " ms.";
  DLOG(INFO) << " Read time: " << read_time / 1000 << " ms.";
  DLOG(INFO) << "Transform time: " << trans_time / 1000 << " ms.";
}

INSTANTIATE_CLASS(DataLayer);
REGISTER_LAYER_CLASS(Data);

}  // namespace caffe
```
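The reshaping in DataLayerSetUp() follows a simple rule. Here it is restated as a standalone sketch; `ComputeShapes` and `DataShapes` are hypothetical names.

- With crop_size > 0, the data blobs are batch_size x C x crop_size x crop_size.
- Otherwise they are batch_size x C x H x W, taken from the first record.
- transformed_data_ always has num = 1.
- The label blob is 1-D with batch_size entries.

Because the shape comes from the first record only, inputs of varying size are handled only when batch_size == 1 && crop_size == 0. In that case InternalThreadEntry() reshapes from each new record.

```cpp
#include <array>
#include <cassert>

using Shape = std::array<int, 4>;  // N, C, H, W

// Hypothetical summary of the shapes DataLayerSetUp() assigns.
struct DataShapes {
  Shape top_data;     // top[0] and prefetch_data_
  Shape transformed;  // transformed_data_ (always a single item)
  int label_count;    // top[1] / prefetch_label_, 1-D of length batch_size
};

DataShapes ComputeShapes(int batch_size, int channels, int height, int width,
                         int crop_size) {
  assert(batch_size > 0 && channels > 0);
  // A positive crop_size overrides the record's own height and width.
  const int h = crop_size > 0 ? crop_size : height;
  const int w = crop_size > 0 ? crop_size : width;
  return DataShapes{{batch_size, channels, h, w}, {1, channels, h, w},
                    batch_size};
}
```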

The corresponding header declarations (from data_layers.hpp):

```cpp
template <typename Dtype>
class BaseDataLayer : public Layer<Dtype> {
 public:
  explicit BaseDataLayer(const LayerParameter& param);
  virtual ~BaseDataLayer() {}
  // LayerSetUp: implements common data layer setup functionality, and calls
  // DataLayerSetUp to do special data layer setup for individual layer types.
  // This method may not be overridden except by the BasePrefetchingDataLayer.
  virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {}
  // Data layers have no bottoms, so reshaping is trivial.
  virtual void Reshape(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {}

  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {}
  virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {}

 protected:
  TransformationParameter transform_param_;
  shared_ptr<DataTransformer<Dtype> > data_transformer_;
  bool output_labels_;
};

template <typename Dtype>
class BasePrefetchingDataLayer :
    public BaseDataLayer<Dtype>, public InternalThread {
 public:
  explicit BasePrefetchingDataLayer(const LayerParameter& param)
      : BaseDataLayer<Dtype>(param) {}
  virtual ~BasePrefetchingDataLayer() {}
  // LayerSetUp: implements common data layer setup functionality, and calls
  // DataLayerSetUp to do special data layer setup for individual layer types.
  // This method may not be overridden.
  void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  virtual void CreatePrefetchThread();
  virtual void JoinPrefetchThread();
  // The thread's function
  virtual void InternalThreadEntry() {}

 protected:
  Blob<Dtype> prefetch_data_;
  Blob<Dtype> prefetch_label_;
  Blob<Dtype> transformed_data_;
};

template <typename Dtype>
// ...
```
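The header comments describe a template-method split. LayerSetUp() does the setup common to all data layers and then calls the DataLayerSetUp() hook that each subclass overrides.

Below is a hypothetical skeleton of that split; `BaseDataLayerSketch` and `DataLayerSketch` are not Caffe classes. The real base class does more, such as creating `data_transformer_`. One piece is an assumption: deriving `output_labels_` from the number of top blobs. The base implementation that would set it is not part of this excerpt.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical skeleton of the LayerSetUp()/DataLayerSetUp() split.
class BaseDataLayerSketch {
 public:
  virtual ~BaseDataLayerSketch() {}
  // Common setup; non-virtual here so subclasses cannot replace it.
  void LayerSetUp(int num_tops) {
    assert(num_tops == 1 || num_tops == 2);
    // Assumption: a second top blob means the layer should emit labels.
    output_labels_ = (num_tops != 1);
    DataLayerSetUp();  // subclass-specific setup hook
  }
  bool output_labels() const { return output_labels_; }

 protected:
  virtual void DataLayerSetUp() {}
  bool output_labels_ = false;
};

// Hypothetical subclass that records which setup steps it performed.
class DataLayerSketch : public BaseDataLayerSketch {
 public:
  std::vector<std::string> steps;

 protected:
  void DataLayerSetUp() override {
    steps.push_back("open db, reshape data blobs");
    if (output_labels_) steps.push_back("reshape label blob");
  }
};
```

Making LayerSetUp() non-virtual in the sketch is one way to enforce the header's "may not be overridden" comment. Subclasses can customize only through DataLayerSetUp().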

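This article analyzes the function and implementation details of DataLayer in the Caffe framework. It covers how DataLayer reads database contents into Blobs batch by batch, and the threading strategy it uses to read data efficiently. It introduces DataLayer's member variables and functions, and explains the data reading, preprocessing and transformation process in detail.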
