What is throttling?

Throttling is a flow control feature that limits access toresource to a certain number of times. Once the upper limit orthreshold is reached, access to resource is rejected. A ban listcan be used to record such failed access, so that within a timewindow, access to the same resource is also denied. It providesprivilege based access control and shield resource against DDOSattack.

In API gateway, throttling is basically used to limit APIaccess based on subscription tier. Eg: gold tier allows 1000 APIaccess per limit. Throttling can be single tier (Eg: per userbased, per IP address based) or multi-tier (per user per APIendpoint). In both cases, throttling can be viewed as a tuple (key,upper limit). For the multi-tier case, the key can be viewed as apath. Eg, User id + “/” + ipaddress

Throttlingwithin VM

Counter based implementation

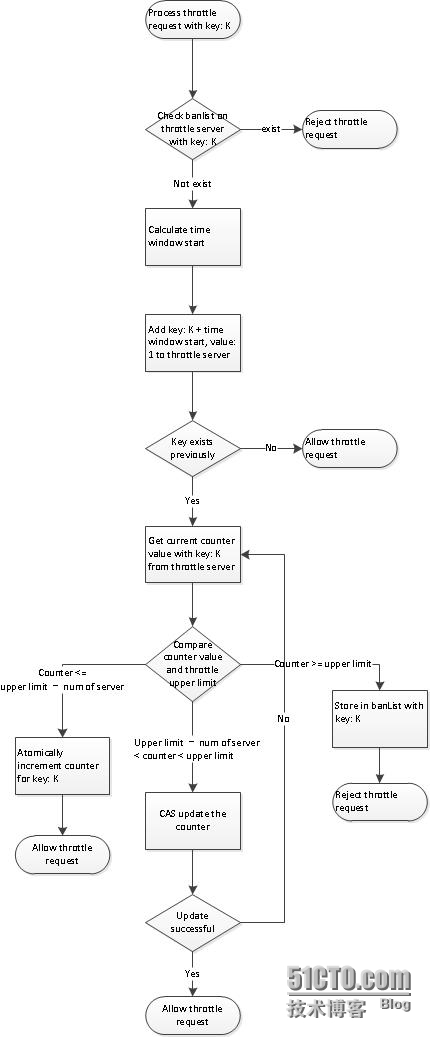

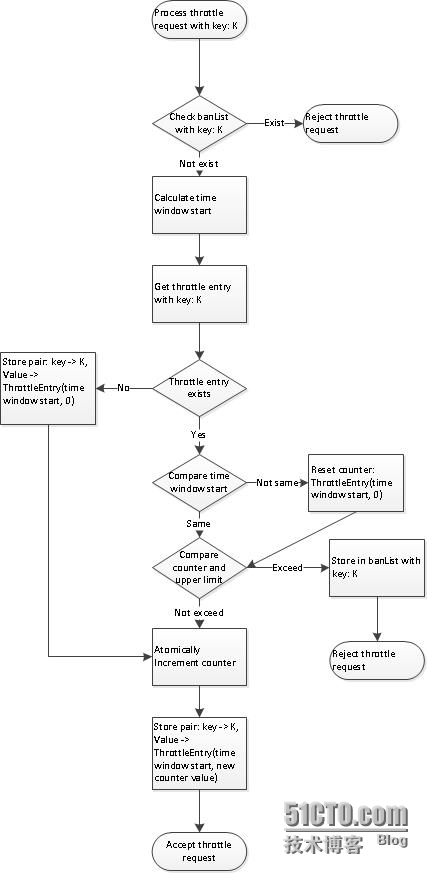

The time line is divided into aseries of time windows. A counter is maintained for the each timewindow.When new request comes in, the correct time window start isretrieved and counter is incremented. Request is denied if counterreaches limit and throttle key is added to the ban list. Thecounter is reset at the end of current time window

Refer to the followingdiagram

Time window approach is quitesimple to implement: a map is good to capture throttlinginformation and simplicity implies less calculationoverhead.

However, it subjects to aproblem known as access spike. Consider the followingscenario

Throttling criteria: 1000request per minute

Current time window: 15:00 –15:01

For the first 30 second withincurrent time window, no request

For the last 30 second withincurrent time window, there are 999 requests. All requests passthrottling

Now it starts next time window:15:01 – 15:02

For the first 30 second withincurrent time window, there are 999 requests. All requests passthrottling

Now if you considered the timewindow from second 30 15:00 to second 30 15:01, it accepts 1998requests, almost double the throttling limit!

Given the above explanation,choice of time window is a fairly important aspect toconsider:

If time window is too large,the access spike problem will manifest itself more.

If time window is too small,time of throttling calculation becomes non-trivial compares to thetime window and accuracy will be compromised. Currently, wenormally use per-second throttling, this is usually well- balancedbetween accuracy and access spike issue

Queue based implementation

An alternative is queue basedapproach. For each key, a sorted queue is maintained to recordrequest time. When new request comes in, we need to look back thequeue, sum up all request times that with range of current time andcurrent time – time window size to gets the total number ofrequest. This is compared against throttling limit to allow/denyaccess.

At backend, there is anotherhousekeeping thread that cleans up queues for older request timesthat are earlier than current time – time window size.

Refer to the followingdiagram

Queue based implementation isnot subject to access spike mentioned above. But calculation costis higher due to the need to iterate through the queue. The queueneeds to be locked for multi-threaded access. This impactsperformance and throughput

Scalability consideration:

Within a single VM, scalabilityissue mainly arises from concurrency and lock contention bydifferent threads. Carefully chosen data structure could reducelock usage and improve performance. For counter based approach,Concurrent hashmap is used to implement key to counter mapping, andjava atomicInteger is used to implement counter. For queue basedapproach, ConcurrentSkipList is used to implement the sorted queue.

Throttling incluster environment

The simple problem becomes moreintriguing in a cluster environment. API requests may be handled bydifferent members in a cluster, and the total number of requestshould not exceed throttling limit. Currently WSO2 API gateway usespeer to peer cluster synchronization approach, where throttlingdata on one cluster member is asynchronously replicated to othermembers.

This approach is flawed due tothe following reasons:

Large number of messagesexchanged among cluster members: On receiving eachthrottling request, data is synchronized to all cluster members.Given average M throttling request on each node and N clustermembers, the total number of message is M*N

To make the situation worse,throttling data is stored in axis2 message context and the wholecontext is replicated. Under peak load, number of throttle keysstored on each node will be huge and throttle data will have alarge memory footprint, this makes serialization/deserializationand transferring of the message across networkexpensive.

Throttling accuracy is notguaranteed.

This is mainly due to networktransfer latency of throttling data, consider the followingscenario:

10 requests are allowed perminute

Node 1 received 5 requests andnode 2 received 4 requests previously and both nodes aresynchronized, so counter for node 1 and node 2 are both set to9

Now Node 1 receives6th request for current time window

Node 1 replicate throttlingdata to node 2

Node 2 received 5threquest for current time window, however this is before lateststate of node 1 is replicated to node 2, node 2 checks localcounter and allows the request

There are totally 11 requestsreceived within time window and exceeds throttling limit!

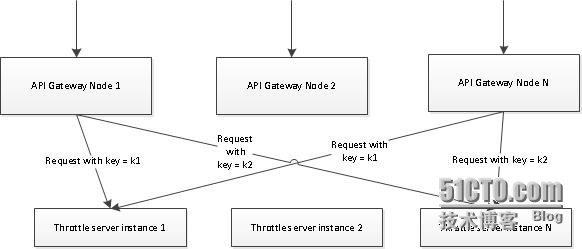

The proposed solution is to usea centralized throttle server to handle access request for thewhole cluster, compared with synchronization approach, it onlysends 1 message to throttling server for each request, resulting in much lessnetwork overhead.

From implementationperspective, we need a centralized key value store of highperformance; key is the concatenation of throttle key and starttime of current time window, value is counter. Banlist is also keptin the store.

We choose memcached or redis ascandidate key value store

Memcached has better readperformance and slightly better write performance compared toRedis, especially for highly concurrent access. Redis providecluster synchronization support and more flexible data structure.So in our case, both redis throttle and memcached throttle areimplemented, but memcached throttle is more preferred. Refer to

http://blog.sina.com.cn/s/blog_72995dcc01018qkf.html

http://iyunlin.com/thread/200319for various comparison between memcached and redis

CAS (compare and swap) isanother feature necessary to ensure accuracy of counter. A nodereceives throttle request will retrieve counter from server,compare it with throttle limit. Before counter is incremented andwritten to server, it may have been updated by another node. CASallowed us to detect such data contention and to avoid writingstale value. In this case, client is responsible for retrievecounter again and retry. Luckily both redis and memcached supportsCAS operation

Counter reset at end of timewindow is handled by key expiration. Each key’s expiration time isset to time window length, so there is no need to explicitly removekey from store at the end of each time window. Note that the smallestexpiration time for memcached is 1 second, which implies that wecannot do accurate throttling at millisecond level.

A single centralized throttlemay become a bottleneck on heavy load if all throttling request ishandled by it. Ideally, the centralized throttle can be a clustertoo and throttle request can be distributed among the cluster.So whichserver should handle a particular throttle request? We use hashpartition: calculate a hash value of the throttle key (murmur hashalgorithm is used), divide by number of throttle servers. The modis index of server. Alternatively, consistent hash can be used.Thisensures that same throttle key always hits the same server and wedon’t have to worry about distributing the key value store amongservers. Afew limitations: We have not considered data replication andbackup, as memcached does not support that. If one throttle serveris down, data stored on it is lost and we will not auto fallback toanther server. Load distribution isnot even as some key may be accessed more frequently than another,implying that the corresponding sever will take more load.

Further optimization

During implementation, we made some observations that can improveperformance further:

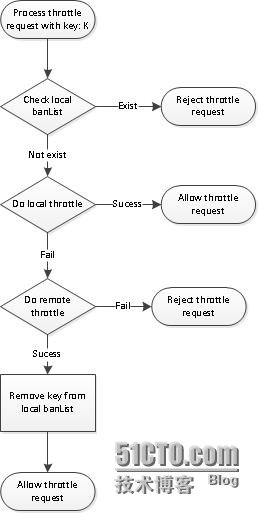

Ban list can be stored incentral throttle server as well as API gateway server. Key affinitysaves network bandwidth and is effective against DDOS attack. If akey is rejected, it reaches central throttle server only for thefirst time and the key is added to gateway server’s local ban list.Subsequently, throttle request for the same key is directlyrejected by gateway server and does not reach central throttleserver.

The counter can be distributedbetween central throttle server and API gateway server.

Say throttle limit = 1000requests per min with 20 API gateway server in a cluster, we couldallocate a quota of 40 as local throttle limit for each gatewayserver. So that the first 40 throttle requests are handled locally.Note that this is a heuristic approach on the premise that requestfor a particular throttle key is distributed evenly to all gatewaycluster node, and this is meaningful only if throttle limit is fairlarge.

CAS can be expensive. Weobserve that under high concurrency scenario, CAS can fail easilyand need to retry for many times. Each CAS attempt sends anadditional request to central throttle server and degradesperformance drastically. So we should try to reduce CAS usage muchas possible. Consider the following scenario

Throttle limit = 1000 requestsper min with 20 API gateway server in a cluster

The first 980 request is safewithout using CAS. A simple atomic increment operation will do.Contention only arises when we are near throttle limit. We shoulduse CAS only when counter >= 980. To further reduce contention,we can sleep a random short time before each CAS attempt

1074

1074

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?