Here are a few comments and disclaimers to start with. First, benchmarks are challenging. They are challenging to measure, but the real issue is that we often forget WHY we are benchmarking something. We'll take a complex multi-machine financial system and suddenly we're hyper-focused on a bunch of serialization code that we're convinced is THE problem. "If I can fix this serialization by writing a 10,000 iteration for loop and getting it down to x milliseconds, it'll be SMOOOOOOTH sailing."

Second, this isn't a benchmarking blog post. Don't point at this blog post and say "see! Library X is better than library Y! And .NET is better than Java!" Instead, consider this a cautionary tale and a series of general guidelines. I'm just using this anecdote to illustrate these points.

- Are you 100% sure what you're measuring?

- Have you run a profiler like the Visual Studio profiler, ANTS, or dotTrace?

- Are you considering warm-up time? Throwing out outliers? Are your results statistically significant?

- Are the libraries you're using optimized for your use case? Are you sure what your use case is?

A bad benchmark

A reader sent me an email recently with concerns about serialization in .NET. They had read some very old blog posts from 2009 about perf that included charts and graphs, and then ran some tests of their own. They were seeing serialization times (for tens of thousands of items) over 700ms and sizes of nearly 2 megs. The tests included serialization of their typical data structures in both C# and Java across a number of different serialization libraries and techniques, including their company's custom serialization, .NET binary DataContract serialization, and JSON.NET. One serialization format was small (1.8 MB for a large structure) and one was fast (94ms), but there was no clear winner. This reader was at their wit's end and had decided, more or less, that .NET must not be up for the task.

To me, this benchmark didn't smell right. It wasn't clear what was being measured. It wasn't clear if it was being accurately measured, but more specifically, the overarching conclusion of ".NET is slow" wasn't reasonable given the data.

Hm. So .NET can't serialize a few tens of thousands of data items quickly? I know it can.

Related Links: Create benchmarks and results that have value and Responsible benchmarking by @Kellabyte

I am no expert, but I poked around at this code.

First: Are we measuring correctly?

The tests were using DateTime.UtcNow, which isn't advisable for timing code.

    startTime = DateTime.UtcNow;
    resultData = TestSerialization(foo);
    endTime = DateTime.UtcNow;

Do not use DateTime.Now or DateTime.UtcNow for measuring things where any kind of precision matters. DateTime doesn't have enough precision for this; its resolution is tied to the system clock, and it is said to be accurate only to about 30ms.
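To get a feel for the difference on your own machine, here is a minimal sketch. It uses only the base class library; the class and method names (ClockResolution, SmallestDateTimeStep, StopwatchTickNanoseconds) are invented for this illustration. It prints the smallest step it happens to observe between consecutive DateTime.UtcNow reads next to the tick length that Stopwatch reports. The numbers vary by operating system and runtime, so treat them as a rough indication only.

```csharp
using System;
using System.Diagnostics;

public static class ClockResolution
{
    // Smallest non-zero step observed between consecutive DateTime.UtcNow reads.
    // Returns TimeSpan.Zero if the clock never advanced during sampling.
    public static TimeSpan SmallestDateTimeStep(int samples = 1000000)
    {
        long smallest = long.MaxValue;
        DateTime previous = DateTime.UtcNow;
        for (int i = 0; i < samples; i++)
        {
            DateTime now = DateTime.UtcNow;
            long delta = (now - previous).Ticks;
            if (delta > 0 && delta < smallest) smallest = delta;
            previous = now;
        }
        return smallest == long.MaxValue ? TimeSpan.Zero : TimeSpan.FromTicks(smallest);
    }

    // Nominal length of one Stopwatch tick in nanoseconds, from its reported frequency.
    public static double StopwatchTickNanoseconds()
    {
        return 1000000000.0 / Stopwatch.Frequency;
    }

    public static void Main()
    {
        Console.WriteLine("Stopwatch.IsHighResolution: {0}", Stopwatch.IsHighResolution);
        Console.WriteLine("Stopwatch tick: {0:F1} ns", StopwatchTickNanoseconds());
        Console.WriteLine("Smallest DateTime.UtcNow step seen: {0:F4} ms",
            SmallestDateTimeStep().TotalMilliseconds);
    }
}
```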

DateTime represents a date and a time. It's not a high-precision timer or Stopwatch.

In short, "what time is it?" and "how long did that take?" are completely different questions; don't use a tool designed to answer one question to answer the other.

And as Raymond Chen says:

"Precision is not the same as accuracy. Accuracy is how close you are to the correct answer; precision is how much resolution you have for that answer."

So, use a Stopwatch when you need a stopwatch. In fact, before I switched the sample to Stopwatch I was getting numbers in milliseconds like 90, 106, 103, 165, 94, and after switching to Stopwatch the results were 99, 94, 95, 95, 94. There's much less jitter.

    Stopwatch sw = new Stopwatch();
    sw.Start();
    // stuff
    sw.Stop();
    // sw.Elapsed (a TimeSpan) now holds the measured duration
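Building on the Stopwatch snippet, here is one possible sketch of a tiny measurement loop that also covers the warm-up and outlier questions from the list at the top of this post. The names (MiniBench, Measure, Median) and the default counts are assumptions made for illustration, and the workload in Main is a placeholder, not the reader's serialization code.

```csharp
using System;
using System.Diagnostics;
using System.Linq;

public static class MiniBench
{
    // Runs the action a few times untimed (JIT, caches), then times each run separately.
    public static double[] Measure(Action action, int warmups = 3, int runs = 20)
    {
        for (int i = 0; i < warmups; i++) action();

        var samples = new double[runs];
        for (int i = 0; i < runs; i++)
        {
            var sw = Stopwatch.StartNew();
            action();
            sw.Stop();
            samples[i] = sw.Elapsed.TotalMilliseconds;
        }
        return samples;
    }

    // The median is less sensitive to a single slow outlier than the mean.
    public static double Median(double[] samples)
    {
        if (samples.Length == 0) throw new ArgumentException("no samples");
        double[] sorted = samples.OrderBy(x => x).ToArray();
        int mid = sorted.Length / 2;
        return sorted.Length % 2 == 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0;
    }

    public static void Main()
    {
        // Placeholder workload; substitute the code under test.
        double[] samples = Measure(() => string.Join(",", Enumerable.Range(0, 50000)));
        Console.WriteLine("min {0:F2} ms, median {1:F2} ms, max {2:F2} ms",
            samples.Min(), Median(samples), samples.Max());
    }
}
```

For the DateTime-based timings quoted above (90, 106, 103, 165, 94), the mean is 111.6 ms while the median is 103 ms, which shows how a single outlier drags the mean upward.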

You might also want to pin your process to a single CPU core if you're trying to get an accurate throughput measurement. It shouldn't matter, since Stopwatch uses the Win32 QueryPerformanceCounter internally (the source for the .NET Stopwatch Class is here), but there were some issues on old systems when you'd start on one processor and stop on another.

    // One Core: the affinity value is a bitmask, so 1 selects only the first logical processor
    var p = Process.GetCurrentProcess();
    p.ProcessorAffinity = (IntPtr)1;

If you don't use Stopwatch, look for a simple and well-thought-of benchmarking library.

Second: Doing the math

In the code sample I was given, about 10 lines of code were the thing being measured, and 735 lines were the "harness" to collect and display the data from the benchmark. Perhaps you've seen things like this before? It's fair to say that the benchmark can get lost in the harness.

Have a listen to my recent podcast with Matt Warren on "Performance as a Feature", take a look at Matt's performance blog, and be sure to pick up Ben Watson's recent book, "Writing High Performance .NET Code".

Also note that Matt is currently exploring creating a mini-benchmark harness on GitHub. Matt's system is rather promising and would have a [Benchmark] attribute within a test.

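To give a rough idea of what an attribute-driven harness can look like, here is a hypothetical sketch. It is not Matt's code: the BenchmarkAttribute type, the AttributeHarness runner, and the sample workload are all defined here purely for illustration. The runner uses reflection to find methods tagged [Benchmark] and times them with Stopwatch.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Reflection;

// Hypothetical marker attribute, defined here for illustration only.
[AttributeUsage(AttributeTargets.Method)]
public sealed class BenchmarkAttribute : Attribute { }

public class SerializationBenchmarks
{
    [Benchmark]
    public void JoinNumbers() { string.Join(",", Enumerable.Range(0, 10000)); }

    public void NotABenchmark() { }
}

public static class AttributeHarness
{
    // Finds public parameterless instance methods tagged [Benchmark], times each one,
    // and returns the names of the methods it ran.
    public static string[] Run(Type type, int runs = 10)
    {
        object instance = Activator.CreateInstance(type);
        MethodInfo[] methods = type.GetMethods(BindingFlags.Public | BindingFlags.Instance)
            .Where(m => m.GetCustomAttribute<BenchmarkAttribute>() != null
                        && m.GetParameters().Length == 0)
            .ToArray();

        foreach (MethodInfo method in methods)
        {
            method.Invoke(instance, null); // warm-up run, not timed
            var sw = Stopwatch.StartNew();
            for (int i = 0; i < runs; i++) method.Invoke(instance, null);
            sw.Stop();
            // Note: the reflection call overhead is included in this figure.
            Console.WriteLine("{0}: {1:F3} ms/run", method.Name, sw.Elapsed.TotalMilliseconds / runs);
        }
        return methods.Select(m => m.Name).ToArray();
    }

    public static void Main()
    {
        Run(typeof(SerializationBenchmarks));
    }
}
```

The appeal of this shape is that the thing being measured stays a plain method, and the harness code lives in one reusable place instead of growing around each benchmark.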

Consider using an existing harness for small benchmarks. One is SimpleSpeedTester from Yan Cui. It makes nice tables and does a lot of the tedious work for you. Here's a screenshot I ~~stole~~ borrowed from Yan's blog.

Something a bit more advanced to explore is HdrHistogram, a library "designed for recording histograms of value measurements in latency and performance sensitive applications." It's also on GitHub and includes Java, C, and C# implementations.
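The reason to look at a distribution rather than a single average is that tail latencies often tell a different story. The sketch below does not use HdrHistogram's API; it is a plain nearest-rank percentile over a sorted array, with names (LatencySummary, Percentile) chosen for illustration, just to show the kind of question such a library answers at much larger scale.

```csharp
using System;
using System.Linq;

public static class LatencySummary
{
    // Nearest-rank percentile: the smallest sample such that at least
    // p percent of all samples are less than or equal to it.
    public static double Percentile(double[] samples, double p)
    {
        if (samples.Length == 0) throw new ArgumentException("no samples");
        if (p <= 0 || p > 100) throw new ArgumentOutOfRangeException("p");
        double[] sorted = samples.OrderBy(x => x).ToArray();
        int rank = (int)Math.Ceiling(p / 100.0 * sorted.Length);
        return sorted[rank - 1];
    }

    public static void Main()
    {
        // The DateTime-based timings quoted earlier in this post, in milliseconds.
        double[] ms = { 90, 106, 103, 165, 94 };
        Console.WriteLine("p50 = {0} ms, p99 = {1} ms", Percentile(ms, 50), Percentile(ms, 99));
    }
}
```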

And seriously. Use a profiler.

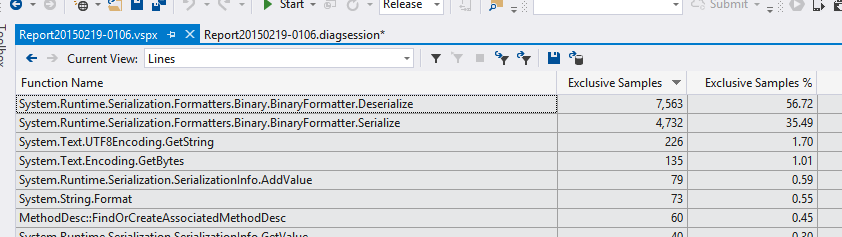

Third: Have you run a profiler?

Use the Visual Studio Profiler, or get a trial of the Redgate ANTS Performance Profiler or the JetBrains dotTrace profiler.

Where is our application spending its time? Surprise! I think we've all seen people write complex benchmarks and poke at a black box rather than simply running a profiler.

Aside: Are there newer/better/understood ways to solve this?

This is my opinion, but I think it's a decent one and there are numbers to back it up. Some of the .NET serialization code is pretty old, written in 2003 or 2005, and may not be taking advantage of new techniques or knowledge. Plus, it's rather flexible "make it work for everyone" code, as opposed to very narrowly purposed code.

People have different serialization needs. You can't serialize something as XML and expect it to be small and tight. You likely can't serialize a structure as JSON and expect it to be as fast as a packed binary serializer.

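As a quick way to see the format trade-off for yourself, here is a small sketch that writes the same list with the framework's DataContractSerializer (XML) and DataContractJsonSerializer (JSON) and compares the byte counts. The DataItem fields are assumptions made for this example, since the reader's real types were not shown, and the exact sizes will depend entirely on your data.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;

[DataContract]
public class DataItem // hypothetical shape, for illustration only
{
    [DataMember] public int Id { get; set; }
    [DataMember] public string Name { get; set; }
    [DataMember] public double Price { get; set; }
}

public static class PayloadSizes
{
    public static List<DataItem> MakeItems(int count)
    {
        return Enumerable.Range(0, count)
            .Select(i => new DataItem { Id = i, Name = "item" + i, Price = i * 1.5 })
            .ToList();
    }

    // Size in bytes of the list written as DataContract XML.
    public static long XmlBytes(List<DataItem> items)
    {
        using (var ms = new MemoryStream())
        {
            new DataContractSerializer(typeof(List<DataItem>)).WriteObject(ms, items);
            return ms.Length;
        }
    }

    // Size in bytes of the same list written as DataContract JSON.
    public static long JsonBytes(List<DataItem> items)
    {
        using (var ms = new MemoryStream())
        {
            new DataContractJsonSerializer(typeof(List<DataItem>)).WriteObject(ms, items);
            return ms.Length;
        }
    }

    public static void Main()
    {
        List<DataItem> items = MakeItems(10000);
        Console.WriteLine("XML:  {0} bytes", XmlBytes(items));
        Console.WriteLine("JSON: {0} bytes", JsonBytes(items));
    }
}
```

With element names repeated as opening and closing tags for every item, the XML output for a shape like this should come out noticeably larger than the JSON, and a packed binary format would typically be smaller still.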

Measure your code, consider your requirements, and step back and consider all options.

Fourth: Newer .NET Serializers to Consider

Now that I had a sense of what was happening and how to measure the timing, it was clear these serializers didn't meet this reader's goals. Some of them are old, as I mentioned, so what other newer, more sophisticated options exist?

There are two really nice specialized serializers to watch: Jil from Kevin Montrose and protobuf-net from Marc Gravell. Both are extraordinary libraries, and protobuf-net's breadth of target framework scope and build system are a joy to behold. There are also other impressive serializers in ServiceStack.NET, which supports not only JSON but also JSV and CSV.

Protobuf-net - protocol buffers for .NET

Protocol buffers are a data structure format from Google, and protobuf-net is a high performance .NET implementation of protocol buffers. Think of it like XML, but smaller and faster. It can also serialize across languages. From their site:

Protocol buffers have many advantages over XML for serializing structured data. Protocol buffers:

- are simpler

- are 3 to 10 times smaller

- are 20 to 100 times faster

- are less ambiguous

- generate data access classes that are easier to use programmatically

It was easy to add. There are lots of options and ways to decorate your data structures, but in essence:

    var r = ProtoBuf.Serializer.Deserialize<List<DataItem>>(memInStream);

The numbers I got with protobuf-net were exceptional and in this case packed the data tightly and quickly, taking just 49ms.

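For context, a fuller round trip with protobuf-net might look like the sketch below. It assumes the protobuf-net NuGet package is referenced; the DataItem fields and the ProtoRoundTrip helper are hypothetical, since the reader's actual types were not shown. The [ProtoContract] and [ProtoMember] attributes are how the data structure gets decorated, with each member given a stable numeric tag.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using ProtoBuf; // from the protobuf-net NuGet package

[ProtoContract]
public class DataItem // hypothetical fields, for illustration only
{
    [ProtoMember(1)] public int Id { get; set; }
    [ProtoMember(2)] public string Name { get; set; }
    [ProtoMember(3)] public double Price { get; set; }
}

public static class ProtoRoundTrip
{
    // Serializes the list into a byte array using the protobuf wire format.
    public static byte[] ToBytes(List<DataItem> items)
    {
        using (var ms = new MemoryStream())
        {
            Serializer.Serialize(ms, items);
            return ms.ToArray();
        }
    }

    // Reads the list back from the bytes produced by ToBytes.
    public static List<DataItem> FromBytes(byte[] payload)
    {
        using (var memInStream = new MemoryStream(payload))
        {
            return Serializer.Deserialize<List<DataItem>>(memInStream);
        }
    }

    public static void Main()
    {
        var items = new List<DataItem>
        {
            new DataItem { Id = 1, Name = "first", Price = 9.5 },
            new DataItem { Id = 2, Name = "second", Price = 12.25 }
        };
        byte[] payload = ToBytes(items);
        List<DataItem> copy = FromBytes(payload);
        Console.WriteLine("{0} bytes, {1} items read back", payload.Length, copy.Count);
    }
}
```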

JIL - Json Serializer for .NET using Sigil

Jil is a JSON serializer that is less flexible than Json.NET and makes those small sacrifices in the name of raw speed. From their site:

Flexibility and "nice to have" features are explicitly discounted in the pursuit of speed.

It's also worth pointing out that some serializers work over the whole string in memory, while others like Json.NET and DataContractSerializer work over a stream. That means you'll want to consider the size of what you're serializing when choosing a library.

Jil is impressive in a number of ways, but particularly in that it dynamically emits a custom serializer (much like the XmlSerializers of old).

Jil is trivial to use. It just worked. I plugged it into this sample and it brought my basic serialization times down to 84ms.

    result = Jil.JSON.Deserialize<Foo>(jsonData);
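A complete round trip could look something like this minimal sketch. It assumes the Jil NuGet package is referenced; the Foo properties and the JilRoundTrip helper are made up for illustration, as the original Foo type was not shown.

```csharp
using System;
using Jil; // from the Jil NuGet package

public class Foo // hypothetical shape, for illustration only
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class JilRoundTrip
{
    // Serializes to a JSON string held entirely in memory.
    public static string ToJson(Foo value)
    {
        return JSON.Serialize(value);
    }

    // Parses a JSON string back into a Foo.
    public static Foo FromJson(string jsonData)
    {
        return JSON.Deserialize<Foo>(jsonData);
    }

    public static void Main()
    {
        string jsonData = ToJson(new Foo { Id = 42, Name = "example" });
        Foo result = FromJson(jsonData);
        Console.WriteLine("{0} -> Id={1}, Name={2}", jsonData, result.Id, result.Name);
    }
}
```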

Conclusion: Here's the thing about benchmarks. It depends.

What are you measuring? Why are you measuring it? Does the technique you're using handle your use case? Are you serializing one large object or thousands of small ones?

James Newton-King made this excellent point to me:

"[There's a] meta-problem around benchmarking. Micro-optimization and caring about performance when it doesn't matter is something devs are guilty of. Documentation, developer productivity, and flexibility are more important than a 100th of a millisecond."

In fact, James pointed out this old (but recently fixed) ASP.NET bug on Twitter. It's a performance bug that is significant, but was totally overshadowed by the time spent on the network.

"This bug backs up the idea that many developers care about performance where it doesn't matter https://t.co/LH4WR1nit9"

James Newton-King (@JamesNK), February 13, 2015

Thanks to Marc Gravell and James Newton-King for their time helping with this post.

What are your benchmarking tips and tricks? Sound off in the comments!
