Deep Convolutional Adversarial Neural Networks Basics, Part 6: GANs Disadvantages and Bias

A Comprehensive Evaluation of GANs

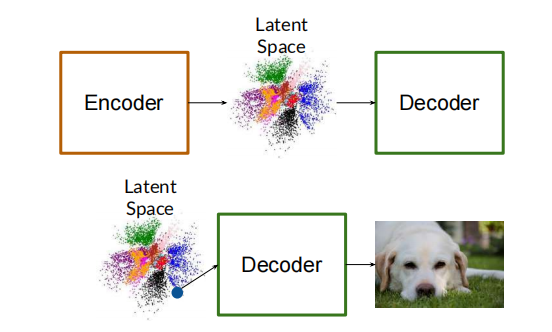

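A generative adversarial network (GAN) is an unsupervised learning method in which two neural networks learn by playing a game against each other. It was proposed by Ian Goodfellow et al. in 2014.[1] A GAN consists of a generator network and a discriminator network. The generator takes random samples from a latent space as input, and its output should imitate the real samples in the training set as closely as possible. The discriminator receives either real samples or generator outputs, and its goal is to tell the generator's outputs apart from the real samples as reliably as possible, while the generator tries to fool the discriminator. The two networks compete and keep adjusting their parameters until, ideally, the discriminator can no longer tell whether the generator's output is real.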
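To make the adversarial game concrete, the sketch below evaluates the minimax value function from the original GAN paper, V(D, G) = E_x~p_data[log D(x)] + E_z[log(1 − D(G(z)))], where the second term is an expectation over the generator's distribution p_g. The two discrete distributions `p_data` and `p_g` are made-up assumptions for illustration. The script checks that, for a fixed generator, the optimal discriminator D*(x) = p_data(x) / (p_data(x) + p_g(x)) gives V = −log 4 + 2·JSD(p_data ‖ p_g), and that it scores at least as well as a discriminator that always outputs 0.5.

```python
import math

# Toy discrete distributions over three outcomes (made-up values, full support).
p_data = {0: 0.5, 1: 0.3, 2: 0.2}
p_g = {0: 0.2, 1: 0.3, 2: 0.5}


def value(d, p_data, p_g):
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))] on a discrete support."""
    return sum(p_data[x] * math.log(d[x]) + p_g[x] * math.log(1.0 - d[x]) for x in p_data)


def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator."""
    return {x: p_data[x] / (p_data[x] + p_g[x]) for x in p_data}


def jsd(p, q):
    """Jensen-Shannon divergence between two discrete distributions."""
    m = {x: 0.5 * (p[x] + q[x]) for x in p}

    def kl(a, b):
        return sum(a[x] * math.log(a[x] / b[x]) for x in a if a[x] > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


d_star = optimal_discriminator(p_data, p_g)
print("V at D*:       ", value(d_star, p_data, p_g))
print("-log 4 + 2*JSD:", -math.log(4) + 2 * jsd(p_data, p_g))
print("V at D = 0.5:  ", value({x: 0.5 for x in p_data}, p_data, p_g))
```

When p_g equals p_data, the JSD term vanishes and V(D*) drops to −log 4, which is the point where the discriminator can do no better than guessing.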

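GANs are a genuinely revolutionary type of neural network, some of the AI products built on them are great fun, and they are expected to grow into a very large market. At the purely technical level, however, they still have many shortcomings that need to be solved.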

Advantages and Disadvantages of GANs

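Advantages:

- Produces highly realistic images

- Fast inference: once trained, generating a sample takes only a forward pass through the generator

Disadvantages:

- Lack of an intrinsic, intuitive evaluation metric

- Training is often unstable

- Limited control over the type and appearance of the generated images

- Inversion (mapping an image back to its latent vector) is not straightforward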
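Training instability is easiest to see on a toy two-player game rather than a full GAN. The sketch below is an illustrative assumption, not a GAN: it runs simultaneous gradient descent/ascent on min_x max_y f(x, y) = x·y, whose only equilibrium is (0, 0). Instead of converging, the iterates spiral outward, a small-scale version of the oscillation that makes adversarial training fragile.

```python
import math


def simultaneous_gda(x, y, lr, steps):
    """Player x minimises f(x, y) = x * y, player y maximises it; both update at the same time."""
    history = [(x, y)]
    for _ in range(steps):
        grad_x = y  # df/dx
        grad_y = x  # df/dy
        x, y = x - lr * grad_x, y + lr * grad_y  # descent for x, ascent for y
        history.append((x, y))
    return history


hist = simultaneous_gda(1.0, 1.0, lr=0.1, steps=100)
radii = [math.hypot(x, y) for x, y in hist]
print(f"distance from equilibrium: start {radii[0]:.3f}, end {radii[-1]:.3f}")
```

Each step multiplies the distance from (0, 0) by sqrt(1 + lr²), so a smaller learning rate only slows the divergence. Alternating the updates (letting y see the new x) keeps the iterates on a bounded orbit, but they still never settle at the equilibrium.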

Future Directions for GANs

Combining variational autoencoders (VAEs), flow models, and GANs

Fairness and Bias in GANs

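Because of the distribution of the training data, design choices made in the system, and other factors, GANs inevitably exhibit some degree of unfairness and bias.

Therefore, when GANs are applied, how fairness is defined and how that goal is reached are very important questions.

Eliminating bias and ensuring fairness need attention at every step of model design; only then can the final model be kept as fair as possible.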

● Bias can be introduced into a model at each step of the process

● Awareness and mitigation of bias is vital to responsible use of AI and, especially, state-of-the-art GANs
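One simple way to start checking a GAN for the kind of bias described above is to compare how often a protected attribute appears among generated samples with a reference rate. The sketch below assumes you already have an attribute label for each generated sample (for example from a separate attribute classifier, which is hypothetical here); the labels and the 50/50 reference are made up for illustration.

```python
from collections import Counter


def attribute_distribution(labels):
    """Fraction of samples carrying each attribute value."""
    counts = Counter(labels)
    total = len(labels)
    return {value: count / total for value, count in counts.items()}


def max_gap_from_reference(labels, reference):
    """Largest absolute difference between observed and reference attribute rates."""
    observed = attribute_distribution(labels)
    return max(abs(observed.get(v, 0.0) - p) for v, p in reference.items())


# Hypothetical attribute labels predicted for 10 generated images.
generated = ["A"] * 8 + ["B"] * 2
reference = {"A": 0.5, "B": 0.5}
print(attribute_distribution(generated))
print(max_gap_from_reference(generated, reference))
```

A large gap only flags a skew; deciding whether the reference rate is the right target is exactly the fairness-definition question raised above.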

References

1. (Optional Notebook) Score-based Generative Modeling

Please note that this is an optional notebook meant to introduce more advanced concepts. If you’re up for a challenge, take a look and don’t worry if you can’t follow everything. There is no code to implement—only some cool code for you to learn and run!


This is a hitchhiker’s guide to score-based generative models, a family of approaches based on estimating gradients of the data distribution. They have obtained high-quality samples comparable to those of GANs without requiring adversarial training, and some consider them the new contender to GANs.
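Score-based models estimate the score ∇ₓ log p(x) and then draw samples with Langevin dynamics. As a minimal sketch, assume the score is known exactly: for a 1-D Gaussian N(μ, σ²) it is −(x − μ)/σ². In an actual score-based model a trained network would replace the hypothetical `gaussian_score` helper; the step size, step count, and number of chains are illustrative assumptions.

```python
import random
import statistics


def gaussian_score(x, mu, sigma):
    """grad_x log N(x; mu, sigma^2)."""
    return -(x - mu) / sigma ** 2


def langevin_sample(score, x0, step, n_steps, rng):
    """Unadjusted Langevin dynamics: x <- x + (step / 2) * score(x) + sqrt(step) * noise."""
    x = x0
    for _ in range(n_steps):
        x = x + 0.5 * step * score(x) + (step ** 0.5) * rng.gauss(0.0, 1.0)
    return x


rng = random.Random(0)  # fixed seed for reproducibility
mu, sigma = 3.0, 1.0
samples = [
    langevin_sample(lambda x: gaussian_score(x, mu, sigma), 0.0, 0.1, 500, rng)
    for _ in range(500)
]
print(statistics.mean(samples), statistics.stdev(samples))
```

Every chain starts at 0, yet the samples end up centred near μ = 3: the score term pulls x toward high-density regions, and the noise term keeps the chains from collapsing onto a single point.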


2. Fairness Definitions

To understand some of the existing definitions of fairness and their relationships, please read the following paper and view the Google glossary entry for fairness:

- Fairness Definitions Explained (Verma and Rubin, 2018): https://fairware.cs.umass.edu/papers/Verma.pdf

- Machine Learning Glossary: Fairness (2020): https://developers.google.com/machine-learning/glossary/fairness
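Two definitions that appear in these readings, demographic parity and equal opportunity, can be computed directly from a classifier's predictions. The sketch below computes the demographic parity difference (gap in positive-prediction rates between groups) and the equal opportunity difference (gap in true positive rates). The labels, predictions, and group names are made up for illustration.

```python
def positive_rate(preds):
    return sum(preds) / len(preds)


def demographic_parity_difference(y_pred, groups):
    """Max gap in P(y_hat = 1 | group) across groups."""
    rates = [
        positive_rate([p for p, g in zip(y_pred, groups) if g == grp])
        for grp in set(groups)
    ]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    """Max gap in true positive rate P(y_hat = 1 | y = 1, group) across groups.

    Assumes every group has at least one positive example.
    """
    tprs = []
    for grp in set(groups):
        preds_on_positives = [p for t, p, g in zip(y_true, y_pred, groups) if g == grp and t == 1]
        tprs.append(positive_rate(preds_on_positives))
    return max(tprs) - min(tprs)


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(y_pred, groups))
print(equal_opportunity_difference(y_true, y_pred, groups))
```

The two numbers can disagree on other data, which is one reason the readings stress that fairness definitions are not interchangeable.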

3. A Survey on Bias and Fairness in Machine Learning

To understand the complex nature of bias and fairness, please read the following paper describing ways they exist in artificial intelligence and machine learning:

A Survey on Bias and Fairness in Machine Learning (Mehrabi, Morstatter, Saxena, Lerman, and Galstyan, 2019): https://arxiv.org/abs/1908.09635

4. Finding Bias

Now that you’ve seen how complex fairness is, how do you find bias in existing material (models, datasets, frameworks, etc.) and how can you prevent it? These two readings offer some insight into how bias was detected and some avenues where it may have been introduced.

- Does Object Recognition Work for Everyone? (DeVries, Misra, Wang, and van der Maaten, 2019): https://arxiv.org/abs/1906.02659

- What a machine learning tool that turns Obama white can (and can’t) tell us about AI bias (Vincent, 2020): https://www.theverge.com/21298762/face-depixelizer-ai-machine-learning-tool-pulse-stylegan-obama-bias
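A common first step when looking for bias, in the spirit of the object-recognition study above (which compares accuracy across income levels and regions), is disaggregated evaluation: report the metric separately for each subgroup instead of one aggregate number. The sketch below does this for accuracy; the records and group names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical (group, true label, predicted label) records.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]
per_group = accuracy_by_group(records)
overall = sum(y == p for _, y, p in records) / len(records)
print(per_group, overall, max(per_group.values()) - min(per_group.values()))
```

The single overall accuracy hides the fact that one group fares noticeably worse than the other.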

5. (Optional Notebook) GAN Debiasing

Please note that this is an optional notebook that is meant to introduce more advanced concepts, if you’re up for a challenge. So, don’t worry if you don’t completely follow every step!

Notebook link: https://colab.research.google.com/github/https-deeplearning-ai/GANs-Public/blob/master/C2W2_GAN_Debiasing_(Optional).ipynb

Fair Attribute Classification through Latent Space De-biasing. Vikram V. Ramaswamy, Sunnie S. Y. Kim, Olga Russakovsky. CVPR 2021.

Fairness in visual recognition is becoming a prominent and critical topic of discussion as recognition systems are deployed at scale in the real world. Models trained from data in which target labels are correlated with protected attributes (e.g., gender, race) are known to learn and perpetuate those correlations.

In this notebook, you will learn about Fair Attribute Classification through Latent Space De-biasing (Ramaswamy et al. 2020) that introduces a method for training accurate target classifiers while mitigating biases that stem from these correlations. Specifically, this work uses GANs to generate realistic-looking images and perturb these images in the underlying latent space to generate training data that is balanced for each protected attribute. They augment the original dataset with this perturbed generated data, and empirically demonstrate that target classifiers trained on the augmented dataset exhibit a number of both quantitative and qualitative benefits.
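The latent-space step can be sketched with plain vectors. Assume two linear hyperplanes in latent space, (w_t, b_t) for the target attribute and (w_g, b_g) for the protected attribute, with unit-norm normals. For a latent code z we want a z′ that keeps the target score w_tᵀz + b_t unchanged while negating the protected-attribute score. Moving along w_g − (w_gᵀw_t)w_t gives z′ = z − 2(w_gᵀz + b_g)/(1 − (w_gᵀw_t)²) · (w_g − (w_gᵀw_t)w_t). This is our own derivation under those assumptions; see the paper and notebook for the exact procedure. The 3-D latent space and hyperplane values are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def perturb_latent(z, w_t, w_g, b_g):
    """Return z' with the same target score and the negated protected-attribute score.

    Assumes w_t and w_g are unit vectors that are not parallel (|w_g . w_t| < 1).
    """
    c = dot(w_g, w_t)
    alpha = -2.0 * (dot(w_g, z) + b_g) / (1.0 - c * c)
    direction = [g - c * t for g, t in zip(w_g, w_t)]  # orthogonal to w_t
    return [zi + alpha * di for zi, di in zip(z, direction)]


# Hypothetical 3-D latent space and hyperplanes (real latent codes are much larger).
w_t, b_t = normalize([1.0, 0.5, 0.0]), 0.1
w_g, b_g = normalize([0.3, 1.0, 0.2]), -0.2
z = [0.4, -1.2, 0.7]
z_prime = perturb_latent(z, w_t, w_g, b_g)
print(dot(w_t, z) + b_t, dot(w_t, z_prime) + b_t)  # target score: unchanged
print(dot(w_g, z) + b_g, dot(w_g, z_prime) + b_g)  # protected score: sign flipped
```

Roughly, as we understand the paper, images generated from z and z′ are then given the same target label, so each pair contributes balanced examples of the protected attribute to the augmented training set.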

6. Works Cited

All of the resources cited in Course 2 Week 2, in one place. You are encouraged to explore these papers/sites if they interest you, especially because this is an important topic to understand. They are listed in the order they appear in the lessons.

From the videos:

- Hyperspherical Variational Auto-Encoders (Davidson, Falorsi, De Cao, Kipf, and Tomczak, 2018): https://arxiv.org/abs/1804.00891

- Generating Diverse High-Fidelity Images with VQ-VAE-2 (Razavi, van den Oord, and Vinyals, 2019): https://arxiv.org/abs/1906.00446

- Conditional Image Generation with PixelCNN Decoders (van den Oord et al., 2016): https://arxiv.org/abs/1606.05328

- Glow: Better Reversible Generative Models (Dhariwal and Kingma, 2018): https://openai.com/blog/glow/

- Machine Bias (Angwin, Larson, Mattu, and Kirchner, 2016): https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

- Fairness Definitions Explained (Verma and Rubin, 2018): https://fairware.cs.umass.edu/papers/Verma.pdf

- Does Object Recognition Work for Everyone? (DeVries, Misra, Wang, and van der Maaten, 2019): https://arxiv.org/abs/1906.02659

- PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models (Menon, Damian, Hu, Ravi, and Rudin, 2020): https://arxiv.org/abs/2003.03808

- What a machine learning tool that turns Obama white can (and can’t) tell us about AI bias (Vincent, 2020): https://www.theverge.com/21298762/face-depixelizer-ai-machine-learning-tool-pulse-stylegan-obama-bias

From the notebook:

- Mitigating Unwanted Biases with Adversarial Learning (Zhang, Lemoine, and Mitchell, 2018): https://m-mitchell.com/papers/Adversarial_Bias_Mitigation.pdf

- Tutorial on Fairness Accountability Transparency and Ethics in Computer Vision at CVPR 2020 (Gebru and Denton, 2020): https://sites.google.com/view/fatecv-tutorial/schedule?authuser=0

- Machine Learning Glossary: Fairness (2020): https://developers.google.com/machine-learning/glossary/fairness

- CelebFaces Attributes Dataset (CelebA): http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html

7. (Optional Notebook) NeRF: Neural Radiance Fields

Please note that this is an optional notebook meant to introduce more advanced concepts. If you’re up for a challenge, take a look and don’t worry if you can’t follow everything. There is no code to implement—only some cool code for you to learn and run!


In this notebook, you’ll learn how to use Neural Radiance Fields to generate new views of a complex 3D scene from a set of input views, as first proposed in NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis (Mildenhall et al. 2020). Though 2D GANs have seen success in high-resolution image synthesis, NeRF has quickly become a popular technique for building high-resolution 3D-aware GANs.
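At the core of NeRF is a differentiable volume-rendering step. A network predicts a density σᵢ and a color cᵢ at sample points along each camera ray, and the pixel color is Ĉ = Σᵢ Tᵢ(1 − exp(−σᵢδᵢ))cᵢ, where δᵢ is the spacing between samples and Tᵢ = exp(−Σⱼ<ᵢ σⱼδⱼ) is the transmittance. The sketch below implements only this compositing for a single ray with grayscale colors. The sample values are made up, and a real implementation would use RGB values and batched tensors.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray using the NeRF quadrature rule.

    sigmas: densities, colors: scalar (grayscale) colors, deltas: distances between samples.
    """
    pixel = 0.0
    transmittance = 1.0  # T_1 = 1: nothing blocks the ray before the first sample
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        pixel += transmittance * alpha * color
        transmittance *= 1.0 - alpha  # now equals exp(-sum of sigma_j * delta_j so far)
    return pixel


# A nearly empty first sample, a dense second sample, and a thin third sample.
print(render_ray([0.0, 50.0, 5.0], [0.2, 0.9, 0.4], [0.1, 0.1, 0.1]))
```

The result is dominated by the dense second sample, because almost no light survives past it to reach the third one. Because every operation is differentiable, the same rule lets NeRF train its network from a reconstruction loss on pixel colors.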
