gst-launch-1.0 nvurisrcbin uri=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 ! fakesink

gst-launch-1.0 nvurisrcbin uri=rtsp://xx ! fakesink

gst-launch-1.0 uridecodebin uri=rtsp://xx ! fakesink

gst-launch-1.0 uridecodebin uri=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 ! fakesink

gst-launch-1.0 rtspsrc location=rtsp://xx/media/video1 protocols=4 tcp-timeout=80000000 short-header=1 \
! decodebin ! queue ! nvvideoconvert ! videoconvert ! x264enc ! h264parse ! matroskamux ! filesink location=rtsp.mp4

deepstream-test1

Sample of how to use DeepStream elements for a single H.264 stream: filesrc → decode → nvstreammux → nvinfer (primary detector) → nvdsosd → renderer. This app uses resnet10.caffemodel for detection.

Single source, primary inference only, displayed directly

Single source, primary inference only, saved to an H.264 file
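The deepstream-test1 element chain described above can be approximated with gst-launch-1.0. The following is a minimal sketch, not the app's actual code: it only builds and prints the pipeline string. The sample stream path, the config file name dstest1_pgie_config.txt, the output name out.h264, and the two sink tails (nveglglessink for display, nvv4l2h264enc for saving) are assumptions and may need adjusting for your DeepStream version and platform.

```shell
#!/bin/sh
# Sketch: build a gst-launch-1.0 pipeline string that mirrors deepstream-test1.
# Assumed values (not from the original notes): sample path, config name, sinks.
STREAM=${STREAM:-/opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264}
PGIE_CONFIG=${PGIE_CONFIG:-./dstest1_pgie_config.txt}

build_test1_pipeline() {
    # $1 selects the pipeline tail: "display" renders, "file" saves H.264.
    case "$1" in
        display)
            # On Jetson, older releases may need nvegltransform before this sink.
            sink="nveglglessink" ;;
        file)
            # Convert the OSD output, re-encode, and write an elementary stream.
            sink="nvvideoconvert ! nvv4l2h264enc ! h264parse ! filesink location=out.h264" ;;
        *)
            echo "unknown mode: $1" >&2
            return 1 ;;
    esac
    # filesrc -> decode -> nvstreammux -> nvinfer (primary) -> nvdsosd -> sink
    echo "filesrc location=$STREAM ! h264parse ! nvv4l2decoder ! mux.sink_0 nvstreammux name=mux batch-size=1 width=1280 height=720 ! nvinfer config-file-path=$PGIE_CONFIG ! nvvideoconvert ! nvdsosd ! $sink"
}

# Dry run: print the command instead of executing it.
echo "gst-launch-1.0 -e $(build_test1_pipeline display)"
```

To actually run a variant, pass the string unquoted so the shell splits it into arguments, for example: gst-launch-1.0 -e $(build_test1_pipeline file). The -e flag asks gst-launch-1.0 to send EOS on interrupt so the output file is closed cleanly.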

deepstream-test2

Sample of how to use DeepStream elements for a single H.264 stream: filesrc → decode → nvstreammux → nvinfer (primary detector) → nvtracker → nvinfer (three secondary classifiers) → nvdsosd → renderer. This app uses resnet10.caffemodel for detection and three classifier models (Car Color, Make, and Model).

Single source, multi-stage inference (primary detector plus three secondary classifiers), displayed directly

gst-launch command:

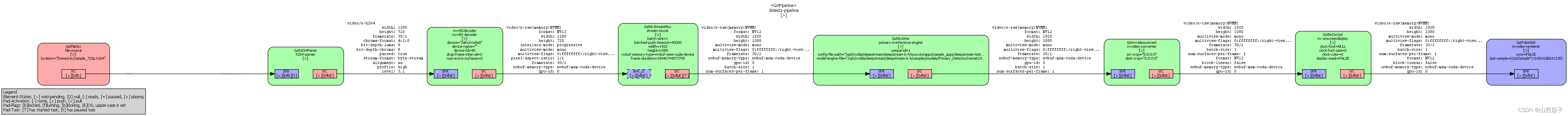

gst-launch-1.0 filesrc location=/opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! mux.sink_0 \
nvstreammux name=mux batch-size=1 width=1280 height=720 \
! nvinfer config-file-path=./dstest2_pgie_config.txt \
! nvtracker tracker-width=640 tracker-height=384 ll-lib-file=/opt/nvidia/deepstream/deepstream/lib/libnvds_nvmultiobjecttracker.so enable-batch-process=1 ll-config-file=../../../../samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml \
! nvinfer config-file-path=./dstest2_sgie1_config.txt \
! nvinfer config-file-path=./dstest2_sgie2_config.txt \
! nvinfer config-file-path=./dstest2_sgie3_config.txt \
! nvvideoconvert ! 'video/x-raw(memory:NVMM),format=RGBA' ! nvdsosd ! nvvideoconvert ! fakesink

deepstream-test3

In newer versions, you can set export NVDS_TEST3_PERF_MODE=1 to skip displaying the results.

Builds on deepstream-test1 (simple test application 1) to demonstrate how to:

• Use multiple sources in the pipeline.

• Use a uridecodebin to accept any type of input (e.g. RTSP/File), any GStreamer supported container format, and any codec.

• Configure Gst-nvstreammux to generate a batch of frames and infer on it for better resource utilization.

• Extract the stream metadata, which contains useful information about the frames in the batched buffer.

Multiple sources, primary inference only, displayed directly
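The multi-source batching idea listed above can be sketched as a gst-launch-1.0 pipeline in which each uridecodebin feeds its own nvstreammux request pad and the batch size matches the number of sources. This is an untested illustration rather than the deepstream-test3 source: the helper name build_test3_pipeline, the config file name dstest3_pgie_config.txt, the 1920x1080 muxer resolution, the single-row tiler layout, and the fakesink are all assumptions. The script only prints the command.

```shell
#!/bin/sh
# Sketch: compose a multi-source pipeline in the spirit of deepstream-test3.
# Assumed values: config file name, muxer resolution, 1xN tiler layout, fakesink.
PGIE_CONFIG=${PGIE_CONFIG:-./dstest3_pgie_config.txt}

build_test3_pipeline() {
    # Each argument is one input URI (file:// or rtsp://).
    if [ "$#" -eq 0 ]; then
        echo "need at least one URI" >&2
        return 1
    fi
    n=$#
    sources=""
    i=0
    for uri in "$@"; do
        # One uridecodebin per source, linked to its own muxer sink pad.
        sources="$sources uridecodebin uri=$uri ! mux.sink_$i"
        i=$((i + 1))
    done
    # The muxer batches one frame per source; nvinfer runs on the whole batch.
    echo "nvstreammux name=mux batch-size=$n width=1920 height=1080 ! nvinfer config-file-path=$PGIE_CONFIG batch-size=$n ! nvmultistreamtiler rows=1 columns=$n ! nvvideoconvert ! nvdsosd ! fakesink$sources"
}

SAMPLE=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4
# Dry run: print the command for one file source and one RTSP source.
echo "gst-launch-1.0 $(build_test3_pipeline "$SAMPLE" rtsp://xx)"
```

Keeping batch-size equal to the source count lets the muxer emit a full batch without waiting on its timeout. For live RTSP inputs, additional nvstreammux settings may be needed depending on the DeepStream release.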
