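This post has two parts:

1 An overview of commonly used Keras API functions

2 Keras code examples

[keras] Saving and loading models, Model class methods, and printing per-layer weights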

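1. Saving a model

model.save() saves the network architecture, the weights, and the optimizer state. The equivalent function form is keras.models.save_model(model, filepath).

model.save_weights() saves only the weights.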
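The difference between the two calls can be sketched as follows. This is a minimal illustration, not code from the original post: the tiny model, the layer names, and the temporary file names are all assumptions. The weights file uses the `.weights.h5` suffix because some newer Keras releases require it, and older ones accept it as an ordinary HDF5 name.

```python
import os
import tempfile

import numpy as np
from keras.layers import Dense, Input
from keras.models import Model, load_model

# Tiny functional model; the layer sizes and names are arbitrary.
inputs = Input(shape=(4,))
hidden = Dense(8, activation='relu', name='hidden')(inputs)
outputs = Dense(2, name='out')(hidden)
model = Model(inputs=inputs, outputs=outputs)
model.compile(optimizer='sgd', loss='mean_squared_error')

tmp_dir = tempfile.mkdtemp()
full_path = os.path.join(tmp_dir, 'full_model.h5')
weights_path = os.path.join(tmp_dir, 'only.weights.h5')

model.save(full_path)             # architecture + weights + optimizer state
model.save_weights(weights_path)  # weights only

# A full save can be restored without rebuilding the architecture in code.
restored = load_model(full_path)

x = np.random.rand(3, 4).astype('float32')
same_output = np.allclose(model.predict(x, verbose=0),
                          restored.predict(x, verbose=0), atol=1e-6)
print('restored model matches:', same_output)
```

The weights-only file is useful when the architecture is already defined in code; the full save is the more convenient choice for resuming training later.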

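2. Loading a model

from keras.models import load_model

load_model() can only load files written by model.save() (or save_model()); it restores both the architecture and the weights.

model.load_weights(filepath, by_name=False)

load_weights() is the counterpart of save_weights(). Before loading weights, the model must already be built with a matching architecture. Compiling is not needed for the load itself, but it is needed before training or evaluating, for example: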

metrics = ['accuracy']
if self.nb_classes >= 10:
    metrics.append('top_k_categorical_accuracy')

# self.input_shape = (seq_length, features_length)
self.model, self.original_model = self.zf_model()
optimizer = SGD(lr=1e-3)

# compile() must run before fit()/evaluate(); load_weights() only needs the model to be built
self.model.compile(loss='categorical_crossentropy', optimizer=optimizer,
                   metrics=metrics)
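As a minimal sketch of the save_weights()/load_weights() pairing, the snippet below loads weights into a freshly built model that was never compiled and checks that both models predict the same values. The `build_model` helper, the layer names, and the file name are hypothetical and exist only for this illustration.

```python
import os
import tempfile

import numpy as np
from keras.layers import Dense, Input
from keras.models import Model


def build_model():
    """Hypothetical helper: returns a fresh, uncompiled two-layer model."""
    inputs = Input(shape=(4,))
    hidden = Dense(8, activation='relu', name='hidden')(inputs)
    outputs = Dense(3, activation='softmax', name='out')(hidden)
    return Model(inputs=inputs, outputs=outputs)


# Save the weights of one randomly initialized model.
source = build_model()
weights_path = os.path.join(tempfile.mkdtemp(), 'demo.weights.h5')
source.save_weights(weights_path)

# A second model with the same architecture starts with different random weights.
target = build_model()
target.load_weights(weights_path)  # no compile() call before this

x = np.random.rand(5, 4).astype('float32')
weights_match = np.allclose(source.predict(x, verbose=0),
                            target.predict(x, verbose=0), atol=1e-6)
print('predictions match after load_weights:', weights_match)
```

With by_name=True, load_weights() instead matches layers by their names, which helps when only part of the architecture is shared.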

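3. Sequential and functional models

A Sequential model can have only a single input and a single output; a functional model can have multiple inputs and outputs.

Because of inheritance (Model inherits from Container, which in turn inherits from Layer), a model object has all the methods of Container and Layer, so any method of these classes can be called on the model object.

For the detailed differences, see the Keras documentation: https://keras.io/zh/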
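The contrast between the two model types can be sketched as below. The layer sizes, input shapes, and names (`input_a`, `input_b`, `score`, `label`) are assumptions chosen only for illustration.

```python
import numpy as np
from keras.layers import Dense, Input, concatenate
from keras.models import Model, Sequential

# Sequential: one linear stack with one input and one output.
seq_model = Sequential([
    Dense(8, activation='relu', input_shape=(4,)),
    Dense(1),
])

# Functional: two inputs are merged, then split into two outputs.
input_a = Input(shape=(4,), name='input_a')
input_b = Input(shape=(6,), name='input_b')
branch_a = Dense(8, activation='relu')(input_a)
branch_b = Dense(8, activation='relu')(input_b)
merged = concatenate([branch_a, branch_b])
score = Dense(1, name='score')(merged)
label = Dense(3, activation='softmax', name='label')(merged)
func_model = Model(inputs=[input_a, input_b], outputs=[score, label])

batch_a = np.random.rand(2, 4).astype('float32')
batch_b = np.random.rand(2, 6).astype('float32')

seq_out = seq_model.predict(batch_a, verbose=0)
score_out, label_out = func_model.predict([batch_a, batch_b], verbose=0)
print(seq_out.shape, score_out.shape, label_out.shape)
```

The functional model takes a list of input arrays and returns one array per output, in the order they were passed to Model().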

Attributes of the Container class

These are attributes, not methods.

name

inputs

outputs

input_layers

output_layers

input_spec

trainable (boolean)

input_shape

output_shape

inbound_nodes: list of nodes

outbound_nodes: list of nodes

trainable_weights (list of variables)

non_trainable_weights (list of variables)

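layer.get_weights() returns the weights as unnamed NumPy arrays, and Model.get_weights() is the concatenation of those per-layer lists, also without names. To reach the underlying backend variables (and their names), use layer.weights.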

for layer in model.layers:
    for weight in layer.weights:
        print(weight.name, weight.shape)

# Prints the name and shape of every weight, layer by layer, for example:

block14_sepconv1/pointwise_kernel:0 (1, 1, 1024, 1536)

block14_sepconv1_bn/gamma:0 (1536,)

block14_sepconv1_bn/beta:0 (1536,)

block14_sepconv1_bn/moving_mean:0 (1536,)

conv_att/bias:0 (5,)

linear_1/kernel:0 (2048, 256)

linear_1/bias:0 (256,)

linear_2/kernel:0 (2048, 256)

linear_2/bias:0 (256,)

linear_3/kernel:0 (2048, 256)

linear_3/bias:0 (256,)

linear_4/kernel:0 (2048, 256)

linear_4/bias:0 (256,)

linear_5/kernel:0 (2048, 256)

linear_5/bias:0 (256,)

rgb_softmax/kernel:0 (1280, 60)

rgb_softmax/bias:0 (60,)

from keras.applications.vgg16 import VGG16

# model.layers ,layer.weights

model = VGG16()

names = [weight.name for layer in model.layers for weight in layer.weights]

weights = model.get_weights()

for name, weight in zip(names, weights):
    print(name, weight.shape)
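VGG16() downloads pretrained weights on first use, so the same relationship can be checked on a small model instead. The sketch below, with an assumed two-layer model, suggests that Model.get_weights() is the per-layer get_weights() lists joined in layer order.

```python
import numpy as np
from keras.layers import Dense, Input
from keras.models import Model

# Small stand-in model; sizes and layer names are arbitrary.
inputs = Input(shape=(4,))
hidden = Dense(8, activation='relu', name='hidden')(inputs)
outputs = Dense(2, name='out')(hidden)
model = Model(inputs=inputs, outputs=outputs)

# Collect the arrays layer by layer, then compare with the model-level list.
per_layer = [w for layer in model.layers for w in layer.get_weights()]
flat = model.get_weights()

same = len(per_layer) == len(flat) and all(
    np.array_equal(a, b) for a, b in zip(per_layer, flat))
shapes = [w.shape for w in flat]
print(same, shapes)
```

Each Dense layer contributes a kernel followed by a bias, which is why the shapes alternate between 2-D and 1-D.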

--------------------- Case 1 ------------------

[Keras] Saving and loading weights; Model and Layer methods (code)

from keras.models import Model

from keras.layers import Dense, Input

import numpy as np

import matplotlib.pyplot as plt

#construct model

data_input = Input((1,),dtype='float32',name='input_data')

x = Dense(100, activation = 'relu', name='layer1')(data_input)

x = Dense(32, activation = 'tanh', name='layer2')(x)

data_output = Dense(1, activation='tanh', name='output_data')(x)

model = Model(inputs=data_input, outputs=data_output)

model.compile(optimizer='rmsprop', loss='mse', metrics=['accuracy'])

#print model

print('models layers:',model.layers)

print('models config:',model.get_config())

# summary() prints the table itself and returns None, so call it directly

model.summary()

#get layers by name

layer1 = model.get_layer(name='layer1')

layer1_W_pro = layer1.get_weights()

layer2 = model.get_layer(name='layer2')

layer2_W_pro = layer2.get_weights()

#train data

dataX = np.linspace(-2 * np.pi,2 * np.pi, 1000)

dataX = np.reshape(dataX, [len(dataX), 1])

noise = np.random.rand(len(dataX), 1) * 0.1

dataY = np.sin(dataX) + noise

model.fit(dataX, dataY, epochs=10, batch_size=10, shuffle=True, verbose = 1)

predictY = model.predict(dataX, batch_size=1)

score = model.evaluate(dataX, dataY, batch_size=10)

print(score)

#get layer weights after training

layer1_W_end = layer1.get_weights()

#layer1_W_end - layer1_W_pro

layer2_W_end = layer2.get_weights()

#layer2_W_end - layer2_W_pro

#plot

fig, ax = plt.subplots()

ax.plot(dataX, dataY, 'b-')

ax.plot(dataX, predictY, 'r.')

ax.set(xlabel="x", ylabel="y=f(x)", title="y = sin(x), red: predicted data, blue: true data")

ax.grid(True)

plt.savefig('d:\\test.eps', format='eps', dpi=1000)

plt.show()

#save weight

model.save_weights('d:\\test.hdf5')

#create new model

data_input1 = Input((1,),dtype='float32',name='input_data1')

x1 = Dense(100, activation = 'relu', name='layer11')(data_input1)

x1 = Dense(32, activation = 'tanh', name='layer21')(x1)

data_output1 = Dense(1, activation='tanh', name='output_data')(x1)

model1 = Model(inputs=data_input1, outputs=data_output1)

model1.load_weights('d:\\test.hdf5')

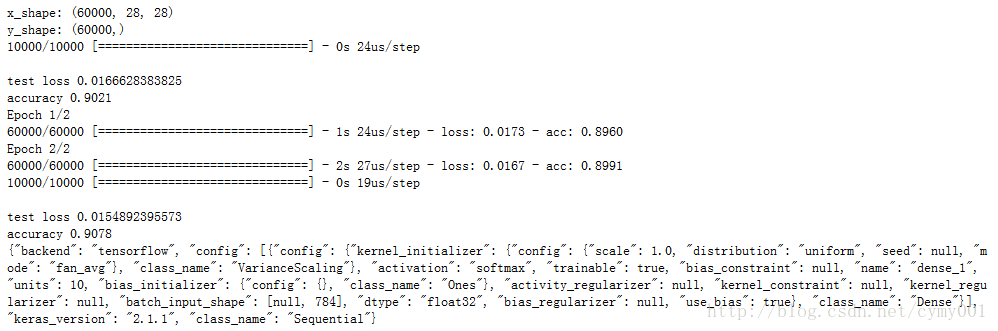
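The commented-out expressions such as `layer1_W_end - layer1_W_pro` in the script above would not run as written, because get_weights() returns a Python list of arrays and lists do not support subtraction. A minimal sketch of a per-array comparison follows; the `weight_deltas` helper and the stand-in arrays are hypothetical, used here in place of real get_weights() output.

```python
import numpy as np


def weight_deltas(before, after):
    """Hypothetical helper: largest absolute change in each weight array."""
    return [float(np.max(np.abs(a - b))) for b, a in zip(before, after)]


# Stand-ins for layer.get_weights() output: [kernel, bias].
before = [np.zeros((1, 3)), np.zeros(3)]
after = [np.array([[0.5, -0.25, 0.0]]), np.array([0.1, 0.0, -0.2])]

deltas = weight_deltas(before, after)
print(deltas)
```

In the script above, the same helper could be called as `weight_deltas(layer1_W_pro, layer1_W_end)` to see how far training moved each array.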

----------------------------- Case 2: MNIST experiment ---------------------------------

Train the model for the first time and save it

import numpy as np
from keras.datasets import mnist
from keras.utils import np_utils
from keras.models import Sequential
from keras.layers import Dense
from keras.optimizers import SGD

# Load the data
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# (60000, 28, 28)
print('x_shape:', x_train.shape)
# (60000,)
print('y_shape:', y_train.shape)
# (60000, 28, 28) -> (60000, 784), scaled to [0, 1]
x_train = x_train.reshape(x_train.shape[0], -1) / 255.0
x_test = x_test.reshape(x_test.shape[0], -1) / 255.0
# Convert the labels to one-hot format
y_train = np_utils.to_categorical(y_train, num_classes=10)
y_test = np_utils.to_categorical(y_test, num_classes=10)

# Create the model: 784 input neurons, 10 output neurons
model = Sequential([
    Dense(units=10, input_dim=784, bias_initializer='one', activation='softmax')
])

# Define the optimizer
sgd = SGD(lr=0.2)

# Set the optimizer and loss function, and compute accuracy during training
model.compile(
    optimizer=sgd,
    loss='mse',
    metrics=['accuracy'],
)

# Train the model
model.fit(x_train, y_train, batch_size=64, epochs=5)

# Evaluate the model
loss, accuracy = model.evaluate(x_test, y_test)
print('\ntest loss', loss)
print('accuracy', accuracy)

# Save the model
model.save('model.h5')  # HDF5 file; requires h5py (pip install h5py)

Load the previously trained model and continue training

import numpy as np
from keras.datasets import mnist
from keras.utils import np_utils
from keras.models import Sequential
from keras.layers import Dense
from keras.optimizers import SGD
from keras.models import load_model

# Load the data
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# (60000, 28, 28)
print('x_shape:', x_train.shape)
# (60000,)
print('y_shape:', y_train.shape)
# (60000, 28, 28) -> (60000, 784), scaled to [0, 1]
x_train = x_train.reshape(x_train.shape[0], -1) / 255.0
x_test = x_test.reshape(x_test.shape[0], -1) / 255.0
# Convert the labels to one-hot format
y_train = np_utils.to_categorical(y_train, num_classes=10)
y_test = np_utils.to_categorical(y_test, num_classes=10)

# Load the saved model
model = load_model('model.h5')

# Evaluate the loaded model before any further training
loss, accuracy = model.evaluate(x_test, y_test)
print('\ntest loss', loss)
print('accuracy', accuracy)

# Continue training
model.fit(x_train, y_train, batch_size=64, epochs=2)

# Evaluate again
loss, accuracy = model.evaluate(x_test, y_test)
print('\ntest loss', loss)
print('accuracy', accuracy)

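# Save the weights, then load them back
model.save_weights('my_model_weights.h5')
model.load_weights('my_model_weights.h5')

# Save the architecture, then rebuild it from JSON
# (the JSON string holds only the architecture; the rebuilt model has freshly initialized weights)
from keras.models import model_from_json
json_string = model.to_json()
model = model_from_json(json_string)
print(json_string)

Original post: https://blog.csdn.net/u013608336/article/details/82664529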
