Chapter 6 Support Vector Machines

6.1 Margin and Support Vectors

The equation of a hyperplane can be written as:

$$
w^Tx+b = 0 \tag{6.1}
$$

Derivation of (6.1)

The sample data set:

$$
\begin{pmatrix}
x_1^0 & x_2^0 & x_3^0 & \dots & x_m^0 \\
x_1^1 & x_2^1 & x_3^1 & \dots & x_m^1 \\
x_1^2 & x_2^2 & x_3^2 & \dots & x_m^2 \\
x_1^3 & x_2^3 & x_3^3 & \dots & x_m^3 \\
\vdots & \vdots & \vdots & & \vdots \\
x_1^n & x_2^n & x_3^n & \dots & x_m^n
\end{pmatrix}
$$

where $x_m^n$ denotes the $n$-th feature of the $m$-th sample, and a constant feature $x^0 = 1$ is added to every sample. The labels are

$$
\begin{pmatrix} y_1, y_2, y_3, \dots, y_m \end{pmatrix}
$$

where $y_m$ denotes the label of the $m$-th sample. The weights are

$$
w^T = \begin{pmatrix} w_0, w_1, w_2, w_3, \dots, w_n \end{pmatrix}
$$

where $w$ is the normal vector of the hyperplane and determines its direction, and $w_0 x^0 = b$ is the displacement term, which determines the distance between the hyperplane and the origin.

In what follows the hyperplane is denoted by $(w,b)$. The distance from any point $x$ in the sample space to the hyperplane $(w,b)$ can be written as

$$
r = \frac{|w^Tx+b|}{||w||} \tag{6.2}
$$

Derivation of (6.2)

How is the distance $d$ computed in the two-dimensional plane? By the point-to-line distance formula: the distance from a point $(x,y)$ to the line $Ax+By+C=0$ is

$$
d=\frac{|Ax+By+C|}{\sqrt{A^2+B^2}}
$$
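The point-to-line formula is the two-dimensional special case of (6.2) with $w=(A,B)$ and $b=C$. A minimal Python sketch of that correspondence follows; the function name `distance_to_hyperplane` and the sample numbers are illustrative assumptions, not part of the original notes.

```python
import math

def distance_to_hyperplane(w, b, x):
    """Distance r = |w^T x + b| / ||w|| from point x to the hyperplane (w, b), as in (6.2)."""
    dot = sum(wi * xi for wi, xi in zip(w, x))
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(dot + b) / norm

# Two-dimensional special case: the line 3x + 4y - 5 = 0 and the point (3, 4).
# Here w = (A, B) = (3, 4) and b = C = -5, so d = |9 + 16 - 5| / 5.
d_2d = distance_to_hyperplane([3.0, 4.0], -5.0, [3.0, 4.0])

# The same function works unchanged in three dimensions.
d_3d = distance_to_hyperplane([1.0, 2.0, 2.0], 0.0, [1.0, 1.0, 1.0])
```

Nothing in the function depends on the dimension, which is the point of writing the distance in terms of $w$ and $b$.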

Extending this to $n$-dimensional space: writing $x_b$ for the sample augmented with the constant feature $x^0=1$, the hyperplane $w^Tx_b=0$ becomes $w^Tx+b=0$, and the denominator becomes the norm of the normal vector:

$$
||w|| = \sqrt{w_1^2+w_2^2 +\cdots + w_n^2}
$$

Suppose the hyperplane $(w,b)$ classifies the training samples correctly, i.e. for $(x_i,y_i)\in D$: if $y_i = +1$ then $w^Tx_i + b > 0$; if $y_i = -1$ then $w^Tx_i + b < 0$. After a suitable rescaling of $w$ and $b$ we can then require

$$
\begin{cases} w^Tx_i+b \geq +1, & y_i = +1 \\ w^Tx_i+b \leq -1, & y_i = -1 \end{cases} \tag{6.3}
$$

Derivation of (6.3)

The distance from any point $x$ in the space to the hyperplane is

$$
\frac{|w^Tx+b|}{||w||}, \qquad ||w|| = \sqrt{w_1^2+w_2^2 +\cdots + w_n^2}
$$

Let $d$ be this distance for the training samples closest to the hyperplane. Every correctly classified sample then satisfies

$$
\begin{cases} \frac{w^Tx_i+b }{||w||}\geq d & \forall y_i=1 \\ \frac{w^Tx_i+b}{||w||}\leq -d & \forall y_i=-1 \end{cases} \Longrightarrow \begin{cases} w_d^T x_i + b_d\geq 1 & \forall y_i=1 \\ w_d^T x_i+ b_d \leq -1 & \forall y_i=-1 \end{cases}
$$

where both sides have been divided by $d$, i.e.

$$
\begin{cases} w_d^T = \frac{w^T}{||w||\,d}\\ b_d = \frac{b}{||w||\,d} \end{cases}
$$

Scaling $w$ and $b$ by the same positive constant does not move the hyperplane, so the decision boundary can equally be written as

$$
w_d^Tx+b_d=0
$$

Renaming $w_d \to w$ and $b_d \to b$ gives

$$
w^Tx+b=0, \qquad \begin{cases} w^T x_i + b\geq 1 & \forall y_i=1 \\ w^T x_i+ b \leq -1 & \forall y_i=-1 \end{cases}
$$
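The rescaling step can be checked numerically. The sketch below is an illustration under assumed toy data; the helper names `functional_margin` and `rescale_to_unit_margin` are hypothetical. It divides $(w,b)$ by the smallest value of $y_i(w^Tx_i+b)$, which equals $||w||\,d$, so that the closest sample ends up exactly on $y_i(w^Tx_i+b)=1$.

```python
def functional_margin(w, b, x, y):
    """y * (w^T x + b) for one labelled sample."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)

def rescale_to_unit_margin(w, b, samples):
    """Divide (w, b) by the smallest functional margin, which equals ||w|| * d."""
    min_margin = min(functional_margin(w, b, x, y) for x, y in samples)
    if min_margin <= 0:
        raise ValueError("the hyperplane does not separate the samples")
    return [wi / min_margin for wi in w], b / min_margin

# Assumed toy data: three samples separated by the hyperplane 2 * x1 = 0.
samples = [([2.0, 1.0], +1), ([-3.0, 0.0], -1), ([4.0, 4.0], +1)]
w, b = [2.0, 0.0], 0.0

w_d, b_d = rescale_to_unit_margin(w, b, samples)
margins = [functional_margin(w_d, b_d, x, y) for x, y in samples]
```

After rescaling, every entry of `margins` is at least 1 and the smallest one is exactly 1, which is what (6.3) asks for.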

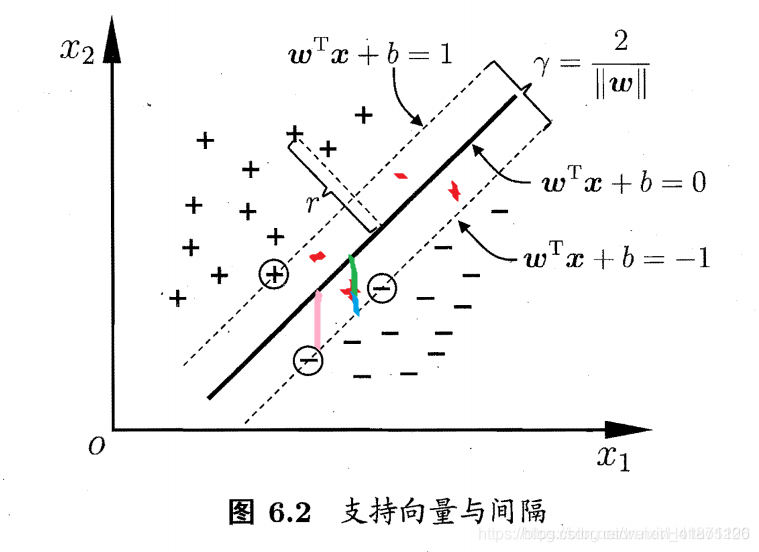

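The training samples closest to the hyperplane make the equalities in (6.3) hold; they are called "support vectors". The sum of the distances from two support vectors of different classes to the hyperplane is:

$$
\gamma =\frac{2}{||w||} \tag{6.4}
$$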

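Derivation of (6.4)

The support vectors on one side of the hyperplane lie on the boundary

$$
w^T x_i + b = 1
$$

By the formula for the distance between two parallel lines, the distance from the hyperplane $w^Tx+b=0$ to this boundary is

$$
\frac{1}{||w||}
$$

The boundary $w^Tx_i+b=-1$ on the other side lies at the same distance, so the two distances add up to $\gamma = \frac{2}{||w||}$.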

To find the separating hyperplane with the "maximum margin", we look for parameters $w$ and $b$ that satisfy the constraints in (6.3) and make $\gamma$ as large as possible, i.e.

$$
\max_{w,b}\ \frac{2}{||w||} \tag{6.5}
$$

$$
\text{s.t.}\quad y_i(w^Tx_i+b) \geq 1,\quad i=1,2,\dots,m
$$
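To make (6.5) concrete, the following sketch compares a few hand-picked candidate hyperplanes on assumed toy data: it keeps those that satisfy the constraints and picks the one with the largest margin. This is only a brute-force illustration of the objective, not a solver; the names `margin` and `is_feasible` are hypothetical.

```python
import math

def margin(w):
    """gamma = 2 / ||w||, as in (6.4)."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def is_feasible(w, b, samples, tol=1e-12):
    """Check the constraints y_i (w^T x_i + b) >= 1 of (6.5) for every sample."""
    return all(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) >= 1.0 - tol
        for x, y in samples
    )

# Assumed toy data and three candidate (w, b) pairs pointing in the same direction.
samples = [([1.0, 1.0], +1), ([2.0, 2.0], +1), ([-1.0, -1.0], -1)]
candidates = [([1.0, 1.0], 0.0), ([0.5, 0.5], 0.0), ([0.25, 0.25], 0.0)]

feasible = [(w, b) for w, b in candidates if is_feasible(w, b, samples)]
best_w, best_b = max(feasible, key=lambda wb: margin(wb[0]))
```

Shrinking $w$ widens the margin until a constraint becomes tight; the third candidate has the largest $2/||w||$ but violates the constraints, so the second one wins.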

Clearly, to maximize the margin $\gamma$ we only need to maximize $\frac{2}{||w||}$, which is equivalent to minimizing $||w||$ (the factor $\frac{1}{2}$ and the square are added only for computational convenience):

$$
\min_{w,b}\ \frac{1}{2} ||w||^2 \tag{6.6}
$$

$$
\text{s.t.}\quad y_i(w^Tx_i+b) \geq 1,\quad i=1,2,\dots,m
$$

6.2 The Dual Problem

Solving (6.6) yields the model corresponding to the maximum-margin separating hyperplane:

$$
f(x) = w^Tx+b \tag{6.7}
$$

Applying the method of Lagrange multipliers to (6.6) yields its "dual problem". Specifically, adding a Lagrange multiplier $\alpha_i \geq 0$ to each constraint of (6.6), the Lagrangian of the problem can be written as:

$$
L(w,b,\alpha) = \frac{1}{2}||w||^2+\sum_{i=1}^m\alpha_i(1-y_i(w^Tx_i+b)) \tag{6.8}
$$

Derivation of (6.8)

Here $\alpha = (\alpha_1;\alpha_2;\dots;\alpha_m)$. We set the partial derivatives of $L(w,b,\alpha)$ with respect to $w$ and $b$ to zero. First expand the Lagrangian:

$$
\begin{aligned}
L(w,b,\alpha) &= \frac{1}{2}||w||^2+\sum_{i=1}^m\alpha_i(1-y_i(w^Tx_i+b)) \\
&= \frac{1}{2}||w||^2+\sum_{i=1}^m(\alpha_i-\alpha_iy_iw^Tx_i-\alpha_iy_ib)\\
&= \frac{1}{2}||w||^2+\sum_{i=1}^m\alpha_i -\sum_{i=1}^m\alpha_iy_iw^Tx_i -\sum_{i=1}^m\alpha_iy_ib
\end{aligned}
$$

(1) Take the partial derivatives with respect to $w$ and $b$:

$$
\frac {\partial L}{\partial w}=w - \sum_{i=1}^{m}\alpha_iy_ix_i = 0 \Longrightarrow w=\sum_{i=1}^{m}\alpha_iy_ix_i
$$

$$
\frac {\partial L}{\partial b}=-\sum_{i=1}^{m}\alpha_iy_i = 0 \Longrightarrow \sum_{i=1}^{m}\alpha_iy_i = 0
$$

$$
w = \sum_{i=1}^m\alpha_iy_ix_i \tag{6.9}
$$

$$
0=\sum_{i=1}^m\alpha_iy_i \tag{6.10}
$$
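Equations (6.9) and (6.10) translate directly into code. The sketch below is a minimal illustration; the two-sample data set and the multipliers `alpha` are hand-picked assumptions (they happen to be the hard-margin solution for this toy case), and the function names are hypothetical.

```python
def weights_from_alpha(alpha, X, y):
    """w = sum_i alpha_i * y_i * x_i, as in (6.9)."""
    w = [0.0] * len(X[0])
    for a, x, yi in zip(alpha, X, y):
        for k, xk in enumerate(x):
            w[k] += a * yi * xk
    return w

def equality_constraint_holds(alpha, y, tol=1e-9):
    """sum_i alpha_i * y_i = 0, as in (6.10)."""
    return abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol

# Assumed toy problem: one positive and one negative sample.
X = [[1.0, 1.0], [-1.0, -1.0]]
y = [1, -1]
alpha = [0.25, 0.25]

w = weights_from_alpha(alpha, X, y)
ok = equality_constraint_holds(alpha, y)
```

With these values $w=(0.5, 0.5)$, so both samples sit exactly on $y_i\,w^Tx_i = 1$ with $b=0$.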

$$
\max_{\alpha}\ \sum_{i=1}^m\alpha_i - \frac{1}{2}\sum_{i = 1}^m\sum_{j=1}^m\alpha_i \alpha_j y_iy_jx_i^Tx_j \tag{6.11}
$$

Derivation of (6.11)

Substituting (6.9) into (6.8) eliminates $w$ and $b$ from $L(w,b,\alpha)$; taking the constraint (6.10) into account then gives the dual problem of (6.6):

$$
\begin{aligned}
L(w,b,\alpha) &=\frac {1}{2}w^Tw+\sum _{i=1}^m\alpha_i[1-y_i(w^Tx_i+b)]\\
&=\frac {1}{2}w^Tw+\sum _{i=1}^m\alpha_i - \sum _{i=1}^m\alpha_iy_iw^Tx_i-\sum _{i=1}^m\alpha_iy_ib\\
&=\frac {1}{2}w^T\sum _{i=1}^m\alpha_iy_ix_i-w^T\sum _{i=1}^m\alpha_iy_ix_i+\sum _{i=1}^m\alpha_i -\sum _{i=1}^m\alpha_iy_ib\\
&=-\frac {1}{2}w^T\sum _{i=1}^m\alpha_iy_ix_i+\sum _{i=1}^m\alpha_i -b\sum _{i=1}^m\alpha_iy_i\\
&=-\frac {1}{2}\Big(\sum_{i=1}^{m}\alpha_iy_ix_i\Big)^T\Big(\sum _{j=1}^m\alpha_jy_jx_j\Big)+\sum _{i=1}^m\alpha_i -b\sum _{i=1}^m\alpha_iy_i\\
&=-\frac {1}{2}\sum_{i=1}^{m}\alpha_iy_ix_i^T\sum _{j=1}^m\alpha_jy_jx_j+\sum _{i=1}^m\alpha_i \qquad \Big(\text{since } \sum_{i=1}^{m}\alpha_iy_i = 0\Big)\\
&=\sum _{i=1}^m\alpha_i-\frac {1}{2}\sum_{i=1 }^{m}\sum_{j=1}^{m}\alpha_i\alpha_jy_iy_jx_i^Tx_j
\end{aligned}
$$

This expression is maximized over $\alpha$ subject to

$$
\text{s.t.} \quad \sum_{i=1}^m \alpha_i y_i = 0
$$

$$
\alpha _i \geq 0,\quad i=1,2,\dots,m
$$

Substituting (6.9) into $f(x)$ gives:

$$
f(x) = w^Tx+b = \sum_{i=1}^{m}\alpha_iy_ix_i^Tx+b \tag{6.12}
$$
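Both the dual objective (6.11) and the dual form of the model (6.12) touch the data only through inner products $x_i^Tx_j$. The sketch below evaluates them on an assumed two-sample problem; `alpha` and `b` are hand-picked values for this toy case and the function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def dual_objective(alpha, X, y):
    """sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j x_i^T x_j, as in (6.11)."""
    m = len(X)
    quad = sum(
        alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
        for i in range(m)
        for j in range(m)
    )
    return sum(alpha) - 0.5 * quad

def decision_function(alpha, X, y, b, x):
    """f(x) = sum_i alpha_i y_i x_i^T x + b, as in (6.12)."""
    return sum(a * yi * dot(xi, x) for a, xi, yi in zip(alpha, X, y)) + b

# Assumed toy problem and multipliers.
X = [[1.0, 1.0], [-1.0, -1.0]]
y = [1, -1]
alpha = [0.25, 0.25]
b = 0.0

dual_value = dual_objective(alpha, X, y)
primal_value = 0.5 * dot([0.5, 0.5], [0.5, 0.5])  # 1/2 ||w||^2 with w from (6.9)
f_new = decision_function(alpha, X, y, b, [2.0, 0.0])
```

For this toy case the dual value equals the primal value $\frac{1}{2}||w||^2$, and `f_new` agrees with $w^Tx+b$ computed from the explicit $w$.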

The $\alpha_i$ solved from the dual problem (6.11) are the Lagrange multipliers in (6.8), each corresponding to one training sample $(x_i ,y_i)$. Since (6.6) has inequality constraints, the process above must satisfy the KKT (Karush-Kuhn-Tucker) conditions, i.e.:

$$
\begin{cases} \alpha_i \geq 0; \\ y_if(x_i)-1 \geq 0; \\ \alpha_i(y_if(x_i) -1) =0. \end{cases} \tag{6.13}
$$

Using the SMO algorithm and fixing all parameters other than $\alpha_i,\alpha_j$, the constraint (6.10) becomes:

$$
\alpha_iy_i + \alpha_jy_j = c,\quad \alpha_i \geq 0 ,\quad \alpha_j\geq 0 \tag{6.14}
$$

where

$$
c = -\sum_{k\ne i,j}\alpha_ky_k \tag{6.15}
$$

is the constant that keeps $\sum_{i=1}^m\alpha_iy_i=0$ satisfied. Using

$$
\alpha_iy_i+\alpha_jy_j = c \tag{6.16}
$$

to eliminate $\alpha_j$ from (6.11) leaves a quadratic programming problem in the single variable $\alpha_i$.

For any support vector $(x_s,y_s)$ we have

$$
\begin{cases} w^T x_s + b=1 & \forall y_s=1 \\ w^T x_s+ b= -1 & \forall y_s=-1 \end{cases} \Longrightarrow y_sf(x_s)= 1
$$

that is,

$$
y_s\Big(\sum_{i\in S}\alpha_iy_ix_i^Tx_s+b\Big) = 1 \tag{6.17}
$$

where $S=\{i \mid \alpha_i>0,\ i=1,2,\dots,m\}$ is the index set of all support vectors.

Derivation from (6.17) to (6.18)

Multiply both sides of (6.17) by $y_s$:

$$
y_s^2\Big(\sum_{i\in S}\alpha_iy_ix_i^Tx_s+b\Big) = y_s,\quad \text{where } y_s^2 =1
$$

Solving for $b$ and averaging over all support vectors gives

$$
b = \frac{1}{|S|}\sum_{s\in S}\Big(y_s - \sum_{i\in S}\alpha _iy_ix_i^Tx_s\Big) \tag{6.18}
$$
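The averaging in (6.18) can be sketched as follows. The data and multipliers are assumed toy values (the hard-margin solution for two points, worked out by hand), and `bias_from_support_vectors` is a hypothetical helper name.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def bias_from_support_vectors(alpha, X, y, tol=1e-8):
    """b = 1/|S| * sum_{s in S} (y_s - sum_{i in S} alpha_i y_i x_i^T x_s), as in (6.18)."""
    S = [i for i, a in enumerate(alpha) if a > tol]  # indices of the support vectors
    total = 0.0
    for s in S:
        total += y[s] - sum(alpha[i] * y[i] * dot(X[i], X[s]) for i in S)
    return total / len(S)

# Assumed toy problem: positive sample at (2, 2), negative sample at the origin.
X = [[2.0, 2.0], [0.0, 0.0]]
y = [1, -1]
alpha = [0.25, 0.25]

b = bias_from_support_vectors(alpha, X, y)
```

Here $w=(0.5,0.5)$ by (6.9), and the computed $b=-1$ places the hyperplane halfway between the two samples.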

6.3 Kernel Functions

Let $\phi(x)$ denote the feature vector obtained by mapping $x$ into a suitable higher-dimensional space. The model for the separating hyperplane in that feature space is

$$
f(x) = w^T\phi(x) +b \tag{6.19}
$$

$$
\min_{w,b}\ \frac{1}{2}||w||^2 \tag{6.20}
$$

$$
\text{s.t.} \quad y_i(w^T\phi(x_i)+ b)\geq 1,\quad i = 1,2,\dots,m
$$

Its dual problem is

$$
\max_{\alpha}\ \sum_{i=1}^m\alpha_i - \frac{1}{2}\sum_{i=1}^m\sum_{j=1}^m\alpha_i\alpha_jy_iy_j\phi(x_i)^T\phi(x_j) \tag{6.21}
$$

$$
\text{s.t.} \quad \sum_{i=1}^m\alpha_iy_i = 0
$$

$$
\alpha_i\geq 0,\quad i =1,2,\dots,m
$$

Background reading: positive semidefinite and positive definite matrices.

$$
\kappa(x_i,x_j) =\langle \phi(x_i),\phi(x_j) \rangle = \phi(x_i)^T\phi(x_j) \tag{6.22}
$$

$$
\max_{\alpha}\ \sum_{i=1}^m\alpha_i - \frac{1}{2}\sum_{i=1}^m\sum_{j=1}^m\alpha_i\alpha_jy_iy_j\kappa(x_i,x_j) \tag{6.23}
$$

$$
\text{s.t.} \quad \sum_{i=1}^m\alpha_iy_i =0
$$

$$
\alpha_i \geq 0,\quad i =1,2,\dots,m
$$

$$
\begin{aligned}
f(x) &=w^T\phi(x) +b \\
&=\sum_{i=1}^m\alpha_iy_i\phi(x_i)^T\phi(x) +b \\
&=\sum_{i=1}^m\alpha_iy_i\kappa(x,x_i) +b
\end{aligned} \tag{6.24}
$$
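The identity (6.22) behind (6.24) can be checked on a small example. The sketch below assumes a degree-2 polynomial kernel $\kappa(x,z)=(x^Tz)^2$ on two-dimensional inputs, for which one explicit feature map is $\phi(x)=(x_1^2,\sqrt{2}x_1x_2,x_2^2)$; this particular kernel is chosen only for illustration and does not appear in the notes above.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel (x^T z)^2, computed in the input space."""
    return dot(x, z) ** 2

def phi(x):
    """Explicit feature map of poly2_kernel for two-dimensional x."""
    return [x[0] ** 2, math.sqrt(2.0) * x[0] * x[1], x[1] ** 2]

x, z = [1.0, 2.0], [3.0, 4.0]
via_kernel = poly2_kernel(x, z)          # one inner product in 2 dimensions
via_features = dot(phi(x), phi(z))       # inner product in the 3-dimensional feature space
```

Both routes give the same number, but the kernel never builds $\phi(x)$; that is why (6.23) and (6.24) are written in terms of $\kappa$ only.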

Kernel functions can also be obtained by combining functions, for example:

- If $\kappa_1$ and $\kappa_2$ are kernel functions, then for any positive numbers $\gamma_1$, $\gamma_2$ their linear combination

$$
\gamma_1\kappa_1+\gamma_2\kappa_2 \tag{6.25}
$$

is also a kernel function;

- If $\kappa_1$ and $\kappa_2$ are kernel functions, then their direct product

$$
\kappa_1\otimes\kappa_2(x,z) = \kappa_1(x,z)\kappa_2(x,z) \tag{6.26}
$$

is also a kernel function;

- If $\kappa_1$ is a kernel function, then for any function $g(x)$,

$$
\kappa(x,z)=g(x)\kappa_1(x,z)g(z) \tag{6.27}
$$

is also a kernel function.
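The three combination rules can be spot-checked numerically. The sketch below builds combined kernels from a linear kernel and a Gaussian (RBF) kernel, both assumed here as base kernels for illustration, and evaluates the quadratic form $c^TKc$ of the resulting kernel matrix for a few coefficient vectors. A non-negative value for a handful of vectors is consistent with positive semidefiniteness but is of course not a proof.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def linear_kernel(x, z):
    return dot(x, z)

def rbf_kernel(x, z, sigma=1.0):
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def weighted_sum(k1, k2, g1, g2):
    """(6.25): g1 * k1 + g2 * k2 with g1, g2 > 0."""
    return lambda x, z: g1 * k1(x, z) + g2 * k2(x, z)

def product(k1, k2):
    """(6.26): pointwise product of two kernels."""
    return lambda x, z: k1(x, z) * k2(x, z)

def rescale(k1, g):
    """(6.27): g(x) * k1(x, z) * g(z)."""
    return lambda x, z: g(x) * k1(x, z) * g(z)

def gram(kernel, X):
    return [[kernel(a, b) for b in X] for a in X]

def quadratic_form(K, c):
    n = len(c)
    return sum(c[i] * K[i][j] * c[j] for i in range(n) for j in range(n))

X = [[0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
kernels = [
    weighted_sum(linear_kernel, rbf_kernel, 0.5, 2.0),
    product(linear_kernel, rbf_kernel),
    rescale(rbf_kernel, lambda x: 1.0 + x[0]),
]
coeffs = [[1.0, -1.0, 0.5], [0.0, 2.0, -3.0], [-1.0, -1.0, 1.0]]
values = [quadratic_form(gram(k, X), c) for k in kernels for c in coeffs]
```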

6.4 Soft Margin and Regularization

$$
y_i(w^Tx_i+b) \geq 1,\quad i=1,2,\dots,m \tag{6.28}
$$

$$
\min_{w,b}\ \frac{1}{2} ||w||^2+C\sum_{i=1}^{m}l_{0/1}(y_i(w^Tx_i+b)-1) \tag{6.29}
$$

$$
l_{0/1}(z)=\begin{cases} 1, & z<0 \\ 0, & \text{otherwise} \end{cases} \tag{6.30}
$$

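Building on (6.6), the samples that violate the constraint are brought into the objective through the $l_{0/1}$ loss function, which allows a small number of samples not to satisfy the constraint.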

Hinge loss:

$$
l_{hinge}(z)=\max(0,1-z) \tag{6.31}
$$

Exponential loss:

$$
l_{exp}(z)=\exp(-z) \tag{6.32}
$$

Logistic loss:

$$
l_{log}(z)=\log(1+\exp(-z)) \tag{6.33}
$$
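The 0/1 loss (6.30) and the three surrogate losses (6.31)-(6.33) are one-liners in code. The sketch below is a plain transcription; the function names and the sample points `zs` are illustrative choices, and the natural logarithm is assumed for (6.33).

```python
import math

def loss_01(z):
    """(6.30): 1 if z < 0, else 0."""
    return 1.0 if z < 0 else 0.0

def loss_hinge(z):
    """(6.31): max(0, 1 - z)."""
    return max(0.0, 1.0 - z)

def loss_exp(z):
    """(6.32): exp(-z)."""
    return math.exp(-z)

def loss_log(z):
    """(6.33): log(1 + exp(-z)), natural logarithm assumed."""
    return math.log(1.0 + math.exp(-z))

zs = [-2.0, -0.5, 0.0, 0.5, 2.0]
table = [(z, loss_01(z), loss_hinge(z), loss_exp(z), loss_log(z)) for z in zs]

# On these points the hinge and exponential losses never fall below the 0/1 loss.
hinge_bounds_01 = all(loss_hinge(z) >= loss_01(z) for z in zs)
exp_bounds_01 = all(loss_exp(z) >= loss_01(z) for z in zs)
```

Unlike the 0/1 loss, all three surrogates are continuous and convex in $z$, which is what makes the resulting optimization problem tractable.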

Replacing $l_{0/1}$ with the hinge loss turns (6.29) into

$$
\min_{w,b}\ \frac{1}{2} ||w||^2+C\sum_{i=1}^{m}\max(0,1-y_i(w^Tx_i+b)) \tag{6.34}
$$
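The hinge-loss objective can be evaluated directly for a given $(w,b)$. The sketch below does this on assumed toy data; `soft_margin_objective` is a hypothetical helper that also returns the per-sample hinge terms so that one can see which samples contribute to the penalty.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def soft_margin_objective(w, b, X, y, C):
    """1/2 ||w||^2 + C * sum_i max(0, 1 - y_i (w^T x_i + b)), plus the per-sample terms."""
    hinge_terms = [max(0.0, 1.0 - yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]
    return 0.5 * dot(w, w) + C * sum(hinge_terms), hinge_terms

# Assumed toy data: the second sample lies inside the margin,
# the fourth is on the wrong side of the hyperplane.
X = [[2.0, 0.0], [0.5, 0.0], [-1.0, 0.0], [0.5, 0.0]]
y = [1, 1, -1, -1]
w, b, C = [1.0, 0.0], 0.0, 1.0

objective, hinge_terms = soft_margin_objective(w, b, X, y, C)
```

Samples with $y_i(w^Tx_i+b)\geq 1$ contribute nothing; the others are penalized in proportion to how far they fall short, weighted by $C$.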

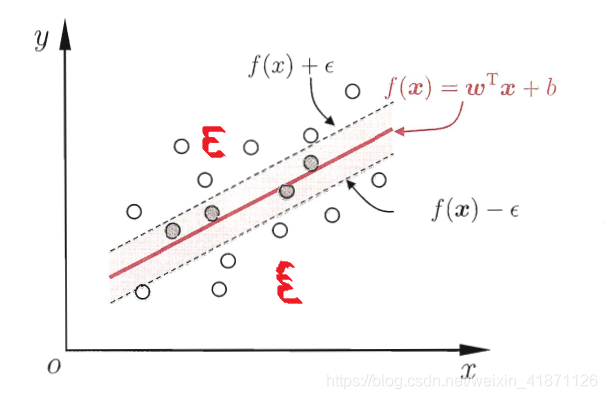

Introduce "slack variables" $\varepsilon_i \geq 0$. To make this easier to see, the figure marks in red four points lying inside the margin; the length of the pink segment is the functional margin (here 1), the blue segment is $\varepsilon$, and the green segment is $1-\varepsilon$. With these, (6.34) can be rewritten as

$$
\min_{w,b,\varepsilon_i}\ \frac{1}{2} ||w||^2+C\sum_{i=1}^{m}\varepsilon_i \tag{6.35}
$$

$$
\text{s.t.}\quad y_i(w^Tx_i+b) \geq 1-\varepsilon_i,\quad i=1,2,\dots,m
$$

$$
\varepsilon_i \geq 0, \quad i= 1,2,\dots,m.
$$

$$
L(w,b,\alpha,\varepsilon ,\mu) = \frac{1}{2}||w||^2+C\sum_{i=1}^m \varepsilon_i +\sum_{i=1}^m \alpha_i(1-\varepsilon_i-y_i(w^Tx_i+b))-\sum_{i=1}^m\mu_i \varepsilon_i \tag{6.36}
$$

Because there are two sets of constraints here, we introduce two sets of Lagrange multipliers, $\alpha_i \geq 0$ and $\mu_i \geq 0$.

Take the partial derivatives with respect to $w,b,\varepsilon_i$ and set them to zero:

$$
\frac {\partial L}{\partial w}=w - \sum_{i=1}^{m}\alpha_iy_ix_i = 0 \Longrightarrow w=\sum_{i=1}^{m}\alpha_iy_ix_i \tag{6.37}
$$

$$
\frac {\partial L}{\partial b}=-\sum_{i=1}^{m}\alpha_iy_i=0 \Longrightarrow \sum_{i=1}^{m}\alpha_iy_i = 0 \tag{6.38}
$$

$$
\frac{\partial L}{\partial \varepsilon_i}=C-\alpha_i -\mu_i = 0 \Longrightarrow C=\alpha_i +\mu_i \tag{6.39}
$$

Substituting (6.37)-(6.39) into (6.36) gives the dual problem of (6.35). (Such pairing is pervasive in optimization; in linear programming, for example, every problem has a companion problem, the first being called the primal problem and the second the dual problem.)

$$
\begin{aligned}
L(w,b,\alpha,\varepsilon ,\mu) &= \frac{1}{2}||w||^2+C\sum_{i=1}^m \varepsilon_i+\sum_{i=1}^m \alpha_i(1-\varepsilon_i-y_i(w^Tx_i+b))-\sum_{i=1}^m\mu_i \varepsilon_i \\
&=\frac{1}{2}||w||^2+\sum_{i=1}^m\alpha_i(1-y_i(w^Tx_i+b))+C\sum_{i=1}^m \varepsilon_i-\sum_{i=1}^m \alpha_i \varepsilon_i-\sum_{i=1}^m\mu_i \varepsilon_i \\
&= -\frac {1}{2}\sum_{i=1}^{m}\alpha_iy_ix_i^T\sum _{j=1}^m\alpha_jy_jx_j+\sum _{i=1}^m\alpha_i +\sum_{i=1}^m (C-\alpha_i-\mu_i)\varepsilon_i \\
&=\sum _{i=1}^m\alpha_i-\frac {1}{2}\sum_{i=1 }^{m}\sum_{j=1}^{m}\alpha_i\alpha_jy_iy_jx_i^Tx_j
\end{aligned}
$$

The third line reuses the hard-margin computation for the first two terms, and the last line uses $C-\alpha_i-\mu_i=0$ from (6.39). The dual problem is therefore

$$
\max_{\alpha}\ \sum _{i=1}^m\alpha_i-\frac {1}{2}\sum_{i=1 }^{m}\sum_{j=1}^{m}\alpha_i\alpha_jy_iy_jx_i^Tx_j \tag{6.40}
$$

$$
\text{s.t.}\quad \sum_{i=1}^m \alpha_i y_i=0
$$

$$
0 \leq\alpha_i \leq C, \quad i=1,2,\dots ,m
$$

The upper bound $\alpha_i \leq C$ follows from $C=\alpha_i+\mu_i$ together with $\mu_i \geq 0$.
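The only difference between (6.40) and the hard-margin dual is the box constraint on $\alpha_i$. A minimal sketch of a feasibility check, with the multipliers $\mu_i$ recovered from (6.39), follows; the numbers are assumed for illustration and the function names are hypothetical.

```python
def soft_margin_dual_feasible(alpha, y, C, tol=1e-9):
    """Check 0 <= alpha_i <= C and sum_i alpha_i y_i = 0, the constraints of (6.40)."""
    in_box = all(-tol <= a <= C + tol for a in alpha)
    balanced = abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol
    return in_box and balanced

def mu_from_alpha(alpha, C):
    """mu_i = C - alpha_i, from (6.39)."""
    return [C - a for a in alpha]

# Assumed multipliers for four samples with C = 1.
alpha = [0.0, 0.5, 1.0, 0.5]
y = [1, 1, -1, 1]
C = 1.0

feasible = soft_margin_dual_feasible(alpha, y, C)
mu = mu_from_alpha(alpha, C)
```

A multiplier at the upper bound ($\alpha_i=C$) forces $\mu_i=0$, which is the case in which the slack variable is allowed to be positive.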

KKT conditions. As a brief reminder: for an inequality constraint written as $g \geq 0$ with Lagrange multiplier $\alpha$, the KKT conditions require

$$
\begin{cases} \alpha \geq0 \\ g \geq 0 \\ \alpha g=0 \end{cases}
$$

For the soft-margin problem this gives

$$
\begin{cases} \alpha_i \geq0, \quad \mu_i \geq0 ,\\ y_if(x_i)-1+\varepsilon_i \geq0,\\ \alpha_i(y_if(x_i)-1+\varepsilon_i)=0,\\ \varepsilon_i \geq0, \quad \mu_i \varepsilon_i=0. \end{cases} \tag{6.41}
$$

Abstracting over the different loss functions gives the general form:

$$
\min_{f}\ \Omega(f)+C\sum_{i=1}^ml(f(x_i),y_i) \tag{6.42}
$$

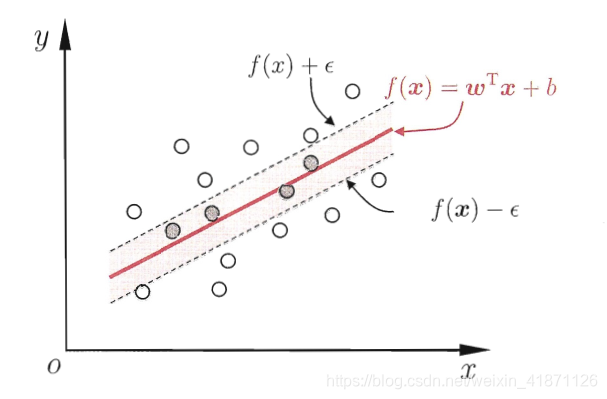

6.5 Support Vector Regression

A brief remark on how to view classification versus regression: the classification loss (the 0/1 loss) is either 1 or 0, whereas a regression loss takes continuous values. Support vector regression tolerates a deviation of at most $\epsilon$ between $f(x)$ and $y$ (read (6.43) and (6.44) together with the figure):

$$
\min_{w,b}\ \frac{1}{2} ||w||^2+C\sum_{i=1}^{m}l_{\epsilon}(f(x_i)-y_i) \tag{6.43}
$$

$$
l_{\epsilon}(z)= \begin{cases} 0, & \text{if } |z| \leq \epsilon \\ |z|-\epsilon, & \text{otherwise} \end{cases} \tag{6.44}
$$
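The $\epsilon$-insensitive loss (6.44) and the objective (6.43) can be sketched as below for a linear model $f(x)=w^Tx+b$. The data, `eps` and `C` are assumed toy values and the function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def eps_insensitive_loss(z, eps):
    """(6.44): 0 inside the tube |z| <= eps, |z| - eps outside."""
    return max(0.0, abs(z) - eps)

def svr_objective(w, b, X, y, C, eps):
    """(6.43): 1/2 ||w||^2 + C * sum_i l_eps(f(x_i) - y_i) with f(x) = w^T x + b."""
    losses = [eps_insensitive_loss(dot(w, xi) + b - yi, eps) for xi, yi in zip(X, y)]
    return 0.5 * dot(w, w) + C * sum(losses), losses

# Assumed one-dimensional toy data.
X = [[1.0], [2.0], [3.0]]
y = [1.05, 2.5, 2.0]
objective, losses = svr_objective([1.0], 0.0, X, y, C=1.0, eps=0.1)
```

The first sample lies inside the $\epsilon$-tube and costs nothing; the other two are charged only for the part of the residual that exceeds $\epsilon$.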

As the figure shows, we introduce slack variables $\varepsilon_i ,\hat\varepsilon_i$ on the two sides of the margin; that is, on top of the tolerance $\epsilon$ we add a further "allowance" on each side:

$$
\min_{w,b,\varepsilon_i,\hat \varepsilon_i}\ \frac{1}{2} ||w||^2+C\sum_{i=1}^{m}(\varepsilon_i+\hat \varepsilon_i) \tag{6.45}
$$

$$
\text{s.t.}\quad f(x_i)-y_i\leq\epsilon+\varepsilon_i,
$$

$$
y_i-f(x_i) \leq\epsilon+\hat\varepsilon_i,
$$

$$
\varepsilon_i \geq0,\quad \hat\varepsilon_i \geq 0,\quad i=1,2,\dots,m.
$$

Introduce Lagrange multipliers $\mu_i \geq0,\ \hat\mu_i\geq0$ for the two slack variables and $\alpha_i\geq0,\ \hat\alpha_i\geq0$ for the two constraints:

$$
\begin{aligned}
L(w,b,\alpha,\hat\alpha,\varepsilon,\hat\varepsilon ,\mu,\hat\mu) &= \frac{1}{2}||w||^2+C\sum_{i=1}^m (\varepsilon_i+\hat\varepsilon_i)-\sum_{i=1}^m\mu_i \varepsilon_i-\sum_{i=1}^m\hat\mu_i \hat\varepsilon_i \\
&\quad +\sum_{i=1}^m \alpha_i(f(x_i)-y_i-\epsilon-\varepsilon_i)+\sum_{i=1}^m\hat\alpha_i(y_i-f(x_i)-\epsilon-\hat\varepsilon_i)
\end{aligned} \tag{6.46}
$$

Take the partial derivatives of $L(w,b,\alpha,\hat\alpha,\varepsilon,\hat\varepsilon ,\mu,\hat\mu)$ with respect to $w,b,\varepsilon_i,\hat\varepsilon_i$ and set them to zero:

$$
\frac{\partial L}{\partial w}=w+\sum_{i=1}^m\alpha_ix_i-\sum_{i=1}^m\hat\alpha_ix_i=0 \Longrightarrow w=\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i \tag{6.47}
$$

$$
\frac{\partial L}{\partial b}=\sum_{i=1}^m\alpha_i -\sum_{i=1}^m\hat\alpha_i=0 \Longrightarrow 0=\sum_{i=1}^m(\hat\alpha_i-\alpha_i) \tag{6.48}
$$

$$
\frac{\partial L}{\partial \varepsilon_i}=C-\alpha_i -\mu_i = 0 \Longrightarrow C=\alpha_i +\mu_i \tag{6.49}
$$

$$
\frac{\partial L}{\partial \hat\varepsilon_i}=C-\hat\alpha_i -\hat\mu_i = 0 \Longrightarrow C=\hat\alpha_i +\hat\mu_i \tag{6.50}
$$

Substituting (6.47)-(6.50) into (6.46) gives the dual problem of SVR. Writing $f(x_i)=w^Tx_i+b$ and collecting the terms in each slack variable:

$$
\begin{aligned}
L &= \frac{1}{2}w^Tw+\sum_{i=1}^m(C-\alpha_i-\mu_i)\varepsilon_i+\sum_{i=1}^m(C-\hat\alpha_i-\hat\mu_i)\hat\varepsilon_i \\
&\quad+\sum_{i=1}^m\alpha_i(w^Tx_i+b-y_i-\epsilon)+\sum_{i=1}^m\hat\alpha_i(y_i-w^Tx_i-b-\epsilon)\\
&=\frac{1}{2}w^Tw+\sum_{i=1}^m(\alpha_i-\hat\alpha_i)w^Tx_i+b\sum_{i=1}^m(\alpha_i-\hat\alpha_i)+\sum_{i=1}^m[y_i(\hat\alpha_i-\alpha_i)-\epsilon(\hat\alpha_i+\alpha_i)]\\
&=\sum_{i=1}^m[y_i(\hat\alpha_i-\alpha_i)-\epsilon(\hat\alpha_i+\alpha_i)]+\frac{1}{2}w^T\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i-w^T\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i\\
&=\sum_{i=1}^m[y_i(\hat\alpha_i-\alpha_i)-\epsilon(\hat\alpha_i+\alpha_i)]-\frac{1}{2}w^T\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i\\
&=\sum_{i=1}^m[y_i(\hat\alpha_i-\alpha_i)-\epsilon(\hat\alpha_i+\alpha_i)]-\frac{1}{2}\sum_{i=1}^m\sum_{j=1}^m(\hat\alpha_i-\alpha_i)(\hat\alpha_j-\alpha_j)x_i^Tx_j
\end{aligned}
$$

The second equality drops the slack terms by (6.49) and (6.50); the third drops the term in $b$ by (6.48) and uses (6.47) to write $w^Tw=w^T\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i$.

$$
\max_{\alpha,\hat\alpha}\ \sum_{i=1}^m[y_i(\hat\alpha_i-\alpha_i)-\epsilon(\hat\alpha_i+\alpha_i)]-\frac{1}{2}\sum_{i=1}^m\sum_{j=1}^m(\hat\alpha_i-\alpha_i)(\hat\alpha_j-\alpha_j)x_i^Tx_j \tag{6.51}
$$

$$
\text{s.t.}\quad \sum_{i=1}^m(\hat\alpha_i-\alpha_i)=0
$$

$$
0\leq\alpha_i,\hat\alpha_i\leq C
$$

The process above must satisfy the KKT conditions, i.e.

$$
\begin{cases} \alpha_i(f(x_i)-y_i-\epsilon-\varepsilon_i)=0, \\ \hat\alpha_i(y_i-f(x_i)-\epsilon-\hat\varepsilon_i)=0,\\ \alpha_i\hat\alpha_i=0,\quad \varepsilon_i\hat\varepsilon_i=0,\\ (C-\alpha_i)\varepsilon_i=0,\quad (C-\hat\alpha_i)\hat\varepsilon_i=0. \end{cases} \tag{6.52}
$$

Substitute (6.47) into (6.7), i.e. substitute

$$
w=\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i \tag{6.47}
$$

into

$$
f(x) = w^Tx+b \tag{6.7}
$$

which gives the SVR solution

$$
f(x)=\sum_{i=1}^m(\hat\alpha_i-\alpha_i)x_i^Tx+b \tag{6.53}
$$

For any sample with $0<\alpha_i<C$, the KKT conditions (6.52) give $\varepsilon_i=0$, and hence

$$
b=y_i+\epsilon-\sum_{j=1}^m(\hat\alpha_j-\alpha_j)x_j^Tx_i \tag{6.54}
$$

With a feature map $\phi$, (6.47) becomes

$$
w=\sum_{i=1}^m(\hat\alpha_i-\alpha_i)\phi (x_i) \tag{6.55}
$$

and the SVR model can be written as

$$
f(x)=\sum_{i=1}^m(\hat\alpha_i-\alpha_i)\kappa(x,x_i)+b \tag{6.56}
$$

where $\kappa(x_i,x_j)=\phi(x_i)^T \phi(x_j)$ is the kernel function.
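Prediction with (6.56) and the bias formula (6.54) can be sketched as follows. The multipliers below are hand-picked to be consistent with the KKT conditions on a two-sample toy set; they are assumptions for illustration rather than the output of a solver, and the function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def linear_kernel(x, z):
    return dot(x, z)

def svr_predict(alpha, alpha_hat, X, b, x, kernel=linear_kernel):
    """f(x) = sum_i (alpha_hat_i - alpha_i) * kappa(x, x_i) + b, as in (6.56)."""
    return sum((ah - a) * kernel(x, xi) for a, ah, xi in zip(alpha, alpha_hat, X)) + b

def svr_bias(alpha, alpha_hat, X, y, eps, i, kernel=linear_kernel):
    """(6.54), valid for an index i with 0 < alpha_i < C."""
    s = sum((ah - a) * kernel(xj, X[i]) for a, ah, xj in zip(alpha, alpha_hat, X))
    return y[i] + eps - s

# Assumed toy data and multipliers (C = 1, eps = 0.1).
X = [[0.0], [2.0]]
y = [-0.1, 2.1]
alpha, alpha_hat = [0.5, 0.0], [0.0, 0.5]
eps = 0.1

b = svr_bias(alpha, alpha_hat, X, y, eps, i=0)
pred = svr_predict(alpha, alpha_hat, X, b, [3.0])
```

With the linear kernel this reproduces (6.53); swapping in another kernel function changes only the `kernel` argument.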

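6.6 Kernel Methods [Equations 6.57-6.70]

The core idea is to map the data into a high-dimensional space in the hope that the data are better separated there; a kernel function is a way to compute inner products in that space. In other words, the essence of kernel methods is the inner product, and the inner product in turn defines a notion of similarity.

Reproducing kernel Hilbert space (RKHS): let $H$ be the RKHS associated with the kernel $\kappa$, and let $||h||_H$ denote the norm of $h$ in $H$. The representer theorem states that, for any monotonically increasing function $\Omega$ and any non-negative loss function $\ell$, the optimization problem

$$
\min_{h \in H}\ F(h) = \Omega(||h||_H)+\ell(h(x_1),h(x_2),\dots, h(x_m)) \tag{6.57}
$$

always has a solution of the form

$$
h^*(x) = \sum_{i=1}^m\alpha_i\kappa(x,x_i) \tag{6.58}
$$

For the proof, see Wikipedia.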

Map the samples into a high-dimensional space and perform linear discriminant analysis there:

$$
h(x) =w^T\phi(x) \tag{6.59}
$$

$$
\max_{w}\ J(w) = \frac{w^TS_b^ \phi w}{w^TS_w^\phi w} \tag{6.60}
$$

$$
\mu_i^\phi = \frac{1}{m_i}\sum_{x\in X_i}\phi(x) \tag{6.61}
$$

$$
S_b^\phi =(\mu_1^\phi - \mu_0^\phi )(\mu_1^\phi - \mu_0^\phi )^T \tag{6.62}
$$

$$
S_w^\phi =\sum_{i=0}^{1}\sum_{x\in X_i}(\phi (x)- \mu_i^\phi )(\phi (x)- \mu_i^\phi )^T \tag{6.63}
$$

$$
h(x) = \sum_{i=1}^m\alpha_i\kappa(x,x_i) = \sum_{i=1}^m\alpha_i\phi (x)^T\phi (x_i) =w^T\phi(x) \tag{6.64}
$$

$$
w = \sum_{i=1}^m\alpha_i\phi (x_i) \tag{6.65}
$$

Derivation of (6.65)

$$
h(x) = \sum_{i=1}^m\alpha_i\phi (x)^T\phi (x_i) =w^T\phi(x)
$$

Since $\phi(x)^T\phi(x_i)=\phi(x_i)^T\phi(x)$, comparing the two sides gives $w=\sum_{i=1}^m\alpha_i\phi(x_i)$.

Let $K$ be the kernel matrix with $K_{ij}=\kappa(x_i,x_j)$, and let $l_i \in \{0,1\}^{m}$ be the indicator vector of class $i$. Then

$$
\hat{\mu}_0 =\frac{1}{m_0}Kl_0 \tag{6.66}
$$

$$
\hat{\mu}_1 =\frac{1}{m_1}Kl_1 \tag{6.67}
$$

$$
M=(\hat{\mu}_0-\hat{\mu}_1)(\hat{\mu}_0-\hat{\mu}_1)^T \tag{6.68}
$$

$$
N = KK^T-\sum_{i=0}^1m_i\hat{\mu}_i\hat{\mu}_i^T \tag{6.69}
$$

These play the roles of $S_b^\phi$ and $S_w^\phi$ in the sense that $w^TS_b^\phi w=\alpha^TM\alpha$ and $w^TS_w^\phi w=\alpha^TN\alpha$, so (6.60) is equivalent to

$$
\max_{\alpha}\ J(\alpha)= \frac{\alpha^TM\alpha}{\alpha^TN\alpha} \tag{6.70}
$$
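The quantities in (6.66)-(6.70) can be computed from the kernel matrix alone. The sketch below evaluates $J(\alpha)$ for a given $\alpha$ on an assumed one-dimensional toy set with a linear kernel, so that the result can be compared by hand with ordinary LDA; all names and numbers are illustrative assumptions, and no maximization over $\alpha$ is attempted.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def kernel_matrix(X, kernel):
    return [[kernel(a, b) for b in X] for a in X]

def klda_objective(K, labels, alpha):
    """J(alpha) = (alpha^T M alpha) / (alpha^T N alpha), with M and N as in (6.68) and (6.69)."""
    m = len(K)
    mu, size = {}, {}
    for c in (0, 1):
        indicator = [1.0 if l == c else 0.0 for l in labels]
        size[c] = sum(indicator)
        # (6.66) / (6.67): mu_hat_c = K l_c / m_c
        mu[c] = [dot(row, indicator) / size[c] for row in K]
    diff = [a - b for a, b in zip(mu[0], mu[1])]
    numerator = dot(alpha, diff) ** 2                      # alpha^T M alpha
    kt_alpha = [sum(K[i][j] * alpha[i] for i in range(m)) for j in range(m)]
    denominator = dot(kt_alpha, kt_alpha) - sum(           # alpha^T N alpha
        size[c] * dot(alpha, mu[c]) ** 2 for c in (0, 1)
    )
    return numerator / denominator

# Assumed toy data: two classes on a line, linear kernel.
X = [[0.0], [1.0], [3.0], [4.0]]
labels = [0, 0, 1, 1]
K = kernel_matrix(X, dot)
alpha = [0.0, 1.0, 0.0, 0.0]   # corresponds to w = phi(x_2) = 1 via (6.65)

J = klda_objective(K, labels, alpha)
```

With the linear kernel and $w=1$, the projected class means are 0.5 and 3.5 and the within-class scatter is 1, so $J=9$, matching what ordinary LDA gives on the raw data.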
