Original article: http://www.learnopengles.com/android-lesson-four-introducing-basic-texturing/

Android Lesson Four: Introducing Basic Texturing

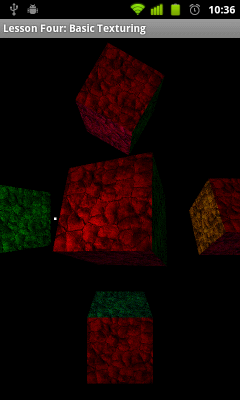

This is the fourth tutorial in our Android series. In this lesson, we’re going to add to what we learned in lesson three and learn how to add texturing. We’ll look at how to read an image from the application resources, load this image into OpenGL ES, and display it on the screen.

Follow along with me and you’ll understand basic texturing in no time flat!

Assumptions and prerequisites

Each lesson in this series builds on the lesson before it. This lesson is an extension of lesson three, so please be sure to review that lesson before continuing on. Here are the previous lessons in the series:

- Android Lesson One: Getting Started

- Android Lesson Two: Ambient and Diffuse Lighting

- Android Lesson Three: Moving to Per-Fragment Lighting

The basics of texturing

The art of texture mapping (along with lighting) is one of the most important parts of building up a realistic-looking 3D world. Without texture mapping, everything is smoothly shaded and looks quite artificial, like an old console game from the 90s.

The first games to start heavily using textures, such as Doom and Duke Nukem 3D, were able to greatly enhance the realism of the gameplay through the added visual impact — these were games that could start to truly scare us if played at night in the dark.

Here’s a look at a scene, without and with texturing:

In the image on the left, the scene is lit with per-pixel lighting and colored, but otherwise it appears very smooth. There are not many places in real life where we would walk into a room full of smooth-shaded objects like this cube.

In the image on the right, the same scene has now also been textured. The ambient lighting has also been increased, because the use of textures darkens the overall scene; this was done so you can also see the effects of texturing on the side cubes. The cubes have the same number of polygons as before, but they appear a lot more detailed with the new texture. For those who are curious, the texture source is from public domain textures.

Texture coordinates

In OpenGL, texture coordinates are often written as (s, t) instead of (x, y). An (s, t) pair identifies a texel on the texture, which is then mapped to the polygon. Another thing to note is that these texture coordinates are like other OpenGL coordinates: the t (or y) axis points upwards, so values get higher the higher you go.

In most computer images, the y axis points downwards. This means that the top-left corner of the image is (0, 0), and the y values increase the lower you go. In other words, the y axis is flipped between OpenGL’s coordinate system and most computer images, and this is something you need to take into account.
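
As a small illustration of the flip, here is a sketch in plain Java. The class and method names are made up for this example and are not part of the lesson’s code. It converts a normalized image-space vertical coordinate, where 0 is the top row, into a t coordinate under the upward-pointing convention described above.

```java
/** Hypothetical helper, not part of the lesson's source. */
final class TexCoordFlip {
    private TexCoordFlip() {}

    /** Converts a normalized image-space v (0 = top, 1 = bottom) to an upward-pointing t (0 = bottom, 1 = top). */
    static float flipV(float v) {
        return 1.0f - v;
    }

    /** Flips every second value (the vertical component) in an interleaved (s, t) array. */
    static float[] flipAll(float[] st) {
        float[] out = st.clone();
        for (int i = 1; i < out.length; i += 2) {
            out[i] = 1.0f - out[i];
        }
        return out;
    }
}
```

The horizontal component is left alone, since both conventions agree that s (or x) grows to the right.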

The basics of texture mapping

In this lesson, we will look at regular 2D textures (GL_TEXTURE_2D) with red, green, and blue color information (GL_RGB). OpenGL ES also offers other texture modes that let you do different and more specialized effects. We’ll look at point sampling using GL_NEAREST. GL_LINEAR and mip-mapping will be covered in a future lesson.

Let’s start getting into the code and see how to start using basic texturing in Android!

Vertex shader

We’re going to take our per-pixel lighting shader from the previous lesson, and add texturing support. Here are the new changes:

    attribute vec2 a_TexCoordinate; // Per-vertex texture coordinate information we will pass in.

    ...

    varying vec2 v_TexCoordinate;   // This will be passed into the fragment shader.

    ...

    // Pass through the texture coordinate.
    v_TexCoordinate = a_TexCoordinate;

In the vertex shader, we add a new attribute of type vec2 (a vector with two components) that will take in texture coordinate information as input. This will be per-vertex, like the position, color, and normal data. We also add a new varying that will pass this data through to the fragment shader via linear interpolation across the surface of the triangle.
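
To make “linear interpolation across the surface of the triangle” more concrete, the following plain-Java sketch blends three per-vertex texture coordinates using barycentric weights. It is only an illustration: the class name is hypothetical, and it ignores the perspective correction that real GPUs also apply.

```java
/** Hypothetical illustration of how a varying is blended across a triangle. */
final class VaryingInterpolation {
    private VaryingInterpolation() {}

    /**
     * Blends three (s, t) pairs with barycentric weights, where w0 + w1 + w2 = 1.
     * Each tcN argument is a two-element array {s, t}.
     */
    static float[] interpolate(float[] tc0, float[] tc1, float[] tc2,
                               float w0, float w1, float w2) {
        return new float[] {
            w0 * tc0[0] + w1 * tc1[0] + w2 * tc2[0],
            w0 * tc0[1] + w1 * tc1[1] + w2 * tc2[1]
        };
    }
}
```

A fragment that sits exactly on a vertex has a weight of 1 for that vertex and receives its coordinate unchanged; fragments in between receive a weighted mix of all three.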

Fragment shader

    uniform sampler2D u_Texture;    // The input texture.

    ...

    varying vec2 v_TexCoordinate;   // Interpolated texture coordinate per fragment.

    ...

    // Add attenuation.
    diffuse = diffuse * (1.0 / (1.0 + (0.10 * distance)));

    ...

    // Add ambient lighting
    diffuse = diffuse + 0.3;

    ...

    // Multiply the color by the diffuse illumination level and texture value to get final output color.
    gl_FragColor = (v_Color * diffuse * texture2D(u_Texture, v_TexCoordinate));

We add a new uniform of type sampler2D to represent the actual texture data (as opposed to texture coordinates). The varying passes in the interpolated texture coordinates from the vertex shader, and we call texture2D(texture, textureCoordinate) to read in the value of the texture at the current coordinate. We then take this value and multiply it with the other terms to get the final output color.

Adding in a texture this way darkens the overall scene somewhat, so we also boost up the ambient lighting a bit and reduce the lighting attenuation.
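
The darkening is easy to see if the final multiply is written out on the CPU. The sketch below is a plain-Java approximation of the last lines of the fragment shader; the class and method names are hypothetical, and colors are assumed to be RGBA arrays with components in [0, 1].

```java
/** Hypothetical CPU-side version of the fragment shader's lighting and final multiply. */
final class FragmentColor {
    private FragmentColor() {}

    /** Diffuse term with the lesson's attenuation factor (0.10) and ambient boost (0.3). */
    static float lighting(float lambert, float distance) {
        float diffuse = Math.max(lambert, 0.0f);
        diffuse = diffuse * (1.0f / (1.0f + (0.10f * distance)));
        return diffuse + 0.3f;
    }

    /** Component-wise product of vertex color, lighting level, and texel (all RGBA). */
    static float[] shade(float[] vertexColor, float diffuse, float[] texel) {
        float[] out = new float[4];
        for (int i = 0; i < 4; i++) {
            out[i] = vertexColor[i] * diffuse * texel[i];
        }
        return out;
    }
}
```

Because every texel component is at most 1.0, multiplying by the texel can only keep a color the same or make it darker, which is why the ambient term is raised to compensate.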

Loading in the texture from an image file

    public static int loadTexture(final Context context, final int resourceId)
    {
        final int[] textureHandle = new int[1];

        GLES20.glGenTextures(1, textureHandle, 0);

        if (textureHandle[0] != 0)
        {
            final BitmapFactory.Options options = new BitmapFactory.Options();
            options.inScaled = false;   // No pre-scaling

            // Read in the resource
            final Bitmap bitmap = BitmapFactory.decodeResource(context.getResources(), resourceId, options);

            // Bind to the texture in OpenGL
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureHandle[0]);

            // Set filtering
            GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);
            GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_NEAREST);

            // Load the bitmap into the bound texture.
            GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);

            // Recycle the bitmap, since its data has been loaded into OpenGL.
            bitmap.recycle();
        }

        if (textureHandle[0] == 0)
        {
            throw new RuntimeException("Error loading texture.");
        }

        return textureHandle[0];
    }

This bit of code will read in a graphics file from your Android res folder and load it into OpenGL. I’ll explain what each part does.

We first need to ask OpenGL to create a new handle for us. This handle serves as a unique identifier, and we use it whenever we want to refer to the same texture in OpenGL.

    final int[] textureHandle = new int[1];

    GLES20.glGenTextures(1, textureHandle, 0);

The OpenGL method can be used to generate multiple handles at the same time; here we generate just one.

Once we have a texture handle, we use it to load the texture. First, we need to get the texture in a format that OpenGL will understand. We can’t just feed it raw data from a PNG or JPG, because it won’t understand that. The first step that we need to do is to decode the image file into an Android Bitmap object:

    final BitmapFactory.Options options = new BitmapFactory.Options();
    options.inScaled = false;   // No pre-scaling

    // Read in the resource
    final Bitmap bitmap = BitmapFactory.decodeResource(context.getResources(), resourceId, options);

By default, Android applies pre-scaling to bitmaps depending on the resolution of your device and which resource folder you placed the image in. We don’t want Android to scale our bitmap at all, so to be sure, we set inScaled to false.
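
To give a feel for what pre-scaling would do to a texture, here is a rough plain-Java sketch of the size calculation. The class name is hypothetical and the exact rounding Android uses is an assumption here; the point is only that the decoded size is multiplied by the ratio of screen density to resource-folder density.

```java
/** Hypothetical illustration of density pre-scaling; the rounding used here is an assumption. */
final class DensityScaling {
    private DensityScaling() {}

    /** Approximate decoded size of one bitmap dimension when pre-scaling is left enabled. */
    static int scaledSize(int sourcePixels, int resourceDensityDpi, int screenDensityDpi) {
        float scale = (float) screenDensityDpi / resourceDensityDpi;
        return Math.round(sourcePixels * scale);
    }
}
```

Under these assumptions, a 512-pixel-wide image stored for a 160 dpi density and decoded on a 240 dpi screen would come out 768 pixels wide, so the texture would no longer match the dimensions of the file you authored.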

    // Bind to the texture in OpenGL
    GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureHandle[0]);

    // Set filtering
    GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);
    GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_NEAREST);

We then bind to the texture and set a couple of parameters. Binding to a texture tells OpenGL that subsequent OpenGL calls should affect this texture. We set the default filters to GL_NEAREST, which is the quickest and also the roughest form of filtering. All it does is pick the nearest texel at each point in the screen, which can lead to graphical artifacts and aliasing.

- GL_TEXTURE_MIN_FILTER — This tells OpenGL what type of filtering to apply when drawing the texture smaller than the original size in pixels.

- GL_TEXTURE_MAG_FILTER — This tells OpenGL what type of filtering to apply when magnifying the texture beyond its original size in pixels.
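
To see what “pick the nearest texel” means in practice, here is a small CPU-side model of point sampling in plain Java. It is a simplified sketch, not how a GPU is implemented: the class name is hypothetical, the texture is a tiny 2D int array, and s and t are assumed to lie in [0, 1].

```java
/** Hypothetical CPU-side model of GL_NEAREST sampling on a small texture. */
final class NearestSampler {
    private NearestSampler() {}

    /**
     * Returns the texel whose cell contains (s, t).
     * texels is indexed as texels[row][column], with s and t in [0, 1].
     */
    static int sample(int[][] texels, float s, float t) {
        int height = texels.length;
        int width = texels[0].length;
        // Scale to texel space, then clamp so that s = 1.0 or t = 1.0 stays in range.
        int column = Math.min((int) (s * width), width - 1);
        int row = Math.min((int) (t * height), height - 1);
        return texels[row][column];
    }
}
```

Note how a tiny change in s, from just under 0.5 to just over it on a two-texel-wide texture, jumps straight from one texel to the next with no blending. That abrupt switch is the source of the blocky look and aliasing mentioned above.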

    // Load the bitmap into the bound texture.
    GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);

    // Recycle the bitmap, since its data has been loaded into OpenGL.
    bitmap.recycle();

Android has a very useful utility to load bitmaps directly into OpenGL. Once you’ve read in a resource into a Bitmap object, GLUtils.texImage2D() will take care of the rest. Here’s the method signature:

public static void texImage2D (int target, int level, Bitmap bitmap, int border)

We want a regular 2D texture, so we pass in GL_TEXTURE_2D as the first parameter. The second parameter is for mip-mapping, and lets you specify the image to use at each level. We’re not using mip-mapping here, so we pass 0, which is the base level. We then pass in the bitmap, and since we’re not using a border, we pass in 0 for the last parameter.

We then call recycle() on the original bitmap, which is an important step to free up memory. The texture has been loaded into OpenGL, so we don’t need to keep a copy of it lying around. Yes, Android apps run under a Dalvik VM that performs garbage collection, but Bitmap objects contain data that resides in native memory and they take a few cycles to be garbage collected if you don’t recycle them explicitly. This means that you could actually crash with an out of memory error if you forget to do this, even if you no longer hold any references to the bitmap.

Applying the texture to our scene

First, we need to add various members to the class to hold stuff we need for our texture:

    /** Store our model data in a float buffer. */
    private final FloatBuffer mCubeTextureCoordinates;

    /** This will be used to pass in the texture. */
    private int mTextureUniformHandle;

    /** This will be used to pass in model texture coordinate information. */
    private int mTextureCoordinateHandle;

    /** Size of the texture coordinate data in elements. */
    private final int mTextureCoordinateDataSize = 2;

    /** This is a handle to our texture data. */
    private int mTextureDataHandle;

We basically need to add new members to track what we added to the shaders, as well as hold a reference to our texture.

Defining the texture coordinates

We define our texture coordinates in the constructor:

    // S, T (or X, Y)
    // Texture coordinate data.
    // Because images have a Y axis pointing downward (values increase as you move down the image) while
    // OpenGL has a Y axis pointing upward, we adjust for that here by flipping the Y axis.
    // What's more is that the texture coordinates are the same for every face.
    final float[] cubeTextureCoordinateData =
    {
        // Front face
        0.0f, 0.0f,
        0.0f, 1.0f,
        1.0f, 0.0f,
        0.0f, 1.0f,
        1.0f, 1.0f,
        1.0f, 0.0f,

        ...

The coordinate data might look a little confusing here. If you go back and look at how the position points are defined in Lesson 3, you’ll see that we define two triangles per face of the cube. The points are defined like this:

    (Triangle 1)
    Upper-left,
    Lower-left,
    Upper-right,

    (Triangle 2)
    Lower-left,
    Lower-right,
    Upper-right

The texture coordinates are pretty much the position coordinates for the front face, but with the Y axis flipped to compensate for the fact that in graphics images, the Y axis points in the opposite direction of OpenGL’s Y axis.
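
Since the code comment says the texture coordinates are the same for every face, one way to build the full array is to repeat the front-face data six times. The sketch below does that in plain Java; the class is hypothetical, and it assumes the elided faces really do reuse the same six (s, t) pairs in the same vertex order.

```java
/** Hypothetical helper that repeats one face's texture coordinates for all six cube faces. */
final class CubeTexCoords {
    private CubeTexCoords() {}

    /** (s, t) pairs for one face, in the same vertex order as the position data. */
    static final float[] FACE = {
        0.0f, 0.0f,   // Triangle 1: upper-left
        0.0f, 1.0f,   //             lower-left
        1.0f, 0.0f,   //             upper-right
        0.0f, 1.0f,   // Triangle 2: lower-left
        1.0f, 1.0f,   //             lower-right
        1.0f, 0.0f    //             upper-right
    };

    /** Builds the full array: 6 faces x 6 vertices x 2 components = 72 floats. */
    static float[] allFaces() {
        float[] all = new float[6 * FACE.length];
        for (int face = 0; face < 6; face++) {
            System.arraycopy(FACE, 0, all, face * FACE.length, FACE.length);
        }
        return all;
    }
}
```

Writing the array out by hand, as the lesson does, has the same result; generating it just makes the repetition explicit.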

Setting up the texture

We load the texture in the onSurfaceCreated() method.

    @Override
    public void onSurfaceCreated(GL10 glUnused, EGLConfig config)
    {
        ...

        // Enable texture mapping
        GLES20.glEnable(GLES20.GL_TEXTURE_2D);

        ...

        mProgramHandle = ShaderHelper.createAndLinkProgram(vertexShaderHandle, fragmentShaderHandle,
                new String[] {"a_Position", "a_Color", "a_Normal", "a_TexCoordinate"});

        ...

        // Load the texture
        mTextureDataHandle = TextureHelper.loadTexture(mActivityContext, R.drawable.bumpy_bricks_public_domain);

We enable 2D texture mapping, pass in “a_TexCoordinate” as a new attribute to bind to in our shader program, and load in our texture using the loadTexture() method we created above. Note that the glEnable(GL_TEXTURE_2D) call is a holdover from the fixed-function pipeline: in OpenGL ES 2.0, texturing is controlled by the shaders and GL_TEXTURE_2D is not a valid argument to glEnable(), so the call can be left out.

Using the texture

We also add some code to the onDrawFrame(GL10 glUnused) method.

We get the shader locations for the texture data and texture coordinates. In OpenGL, textures need to be bound to texture units before they can be used in rendering. A texture unit is what reads in the texture and actually passes it through the shader so it can be displayed on the screen. Different graphics chips have a different number of texture units, so you’ll need to check if additional texture units exist before using them.

First, we tell OpenGL that we want to set the active texture unit to the first unit, texture unit 0. Our call to glBindTexture() will then automatically bind the texture to the first texture unit. Finally, we tell OpenGL that we want to bind the first texture unit to the mTextureUniformHandle, which refers to “u_Texture” in the fragment shader.

In short:

- Set the active texture unit.

- Bind a texture to this unit.

- Assign this unit to a texture uniform in the fragment shader.

Repeat for as many textures as you need.
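
The three steps above, together with the location lookups, can be sketched with the standard GLES20 calls as follows. This is a minimal sketch rather than the lesson’s exact code: the helper class and method are hypothetical (in the lesson these calls sit directly in onDrawFrame()), it only compiles against the Android SDK, and it assumes it runs on the GL thread with the shader program already in use.

```java
import android.opengl.GLES20;

/** Hypothetical helper; in the lesson these calls live directly in onDrawFrame(). */
final class TextureBinding {
    private TextureBinding() {}

    /**
     * Binds textureDataHandle to texture unit 0 and points the "u_Texture" sampler at that unit.
     * Returns the location of the "a_TexCoordinate" attribute so the caller can feed it data.
     */
    static int bindTexture(int programHandle, int textureDataHandle) {
        // Look up the shader locations for the sampler uniform and the coordinate attribute.
        final int textureUniformHandle = GLES20.glGetUniformLocation(programHandle, "u_Texture");
        final int textureCoordinateHandle = GLES20.glGetAttribLocation(programHandle, "a_TexCoordinate");

        // 1. Set the active texture unit to texture unit 0.
        GLES20.glActiveTexture(GLES20.GL_TEXTURE0);

        // 2. Bind the texture to the active unit.
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureDataHandle);

        // 3. Tell the sampler uniform to read from texture unit 0.
        GLES20.glUniform1i(textureUniformHandle, 0);

        return textureCoordinateHandle;
    }
}
```

Note that glUniform1i() takes the unit index (0), not the GL_TEXTURE0 constant and not the texture handle; mixing these up is a common source of black textures.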

Further exercises

Once you’ve made it this far, you’re done! Surely that wasn’t as bad as you expected… or was it?

As your next exercise, try to add multi-texturing by loading in another texture, binding it to another unit, and using it in the shader.

Review

Here is a review of the full shader code, as well as a new helper function that we added to read in the shader code from the resource folder instead of storing it as a Java String:

Vertex shader

    uniform mat4 u_MVPMatrix;       // A constant representing the combined model/view/projection matrix.
    uniform mat4 u_MVMatrix;        // A constant representing the combined model/view matrix.

    attribute vec4 a_Position;      // Per-vertex position information we will pass in.
    attribute vec4 a_Color;         // Per-vertex color information we will pass in.
    attribute vec3 a_Normal;        // Per-vertex normal information we will pass in.
    attribute vec2 a_TexCoordinate; // Per-vertex texture coordinate information we will pass in.

    varying vec3 v_Position;        // This will be passed into the fragment shader.
    varying vec4 v_Color;           // This will be passed into the fragment shader.
    varying vec3 v_Normal;          // This will be passed into the fragment shader.
    varying vec2 v_TexCoordinate;   // This will be passed into the fragment shader.

    // The entry point for our vertex shader.
    void main()
    {
        // Transform the vertex into eye space.
        v_Position = vec3(u_MVMatrix * a_Position);

        // Pass through the color.
        v_Color = a_Color;

        // Pass through the texture coordinate.
        v_TexCoordinate = a_TexCoordinate;

        // Transform the normal's orientation into eye space.
        v_Normal = vec3(u_MVMatrix * vec4(a_Normal, 0.0));

        // gl_Position is a special variable used to store the final position.
        // Multiply the vertex by the matrix to get the final point in normalized screen coordinates.
        gl_Position = u_MVPMatrix * a_Position;
    }

Fragment shader

    precision mediump float;        // Set the default precision to medium. We don't need as high of a
                                    // precision in the fragment shader.
    uniform vec3 u_LightPos;        // The position of the light in eye space.
    uniform sampler2D u_Texture;    // The input texture.

    varying vec3 v_Position;        // Interpolated position for this fragment.
    varying vec4 v_Color;           // This is the color from the vertex shader interpolated across the
                                    // triangle per fragment.
    varying vec3 v_Normal;          // Interpolated normal for this fragment.
    varying vec2 v_TexCoordinate;   // Interpolated texture coordinate per fragment.

    // The entry point for our fragment shader.
    void main()
    {
        // Will be used for attenuation.
        float distance = length(u_LightPos - v_Position);

        // Get a lighting direction vector from the light to the vertex.
        vec3 lightVector = normalize(u_LightPos - v_Position);

        // Calculate the dot product of the light vector and vertex normal. If the normal and light vector are
        // pointing in the same direction then it will get max illumination.
        float diffuse = max(dot(v_Normal, lightVector), 0.0);

        // Add attenuation.
        diffuse = diffuse * (1.0 / (1.0 + (0.10 * distance)));

        // Add ambient lighting
        diffuse = diffuse + 0.3;

        // Multiply the color by the diffuse illumination level and texture value to get final output color.
        gl_FragColor = (v_Color * diffuse * texture2D(u_Texture, v_TexCoordinate));
    }

How to read in the shader from a text file in the raw resources folder

    public static String readTextFileFromRawResource(final Context context,
            final int resourceId)
    {
        final InputStream inputStream = context.getResources().openRawResource(
                resourceId);
        final InputStreamReader inputStreamReader = new InputStreamReader(
                inputStream);
        final BufferedReader bufferedReader = new BufferedReader(
                inputStreamReader);

        String nextLine;
        final StringBuilder body = new StringBuilder();

        try
        {
            while ((nextLine = bufferedReader.readLine()) != null)
            {
                body.append(nextLine);
                body.append('\n');
            }
        }
        catch (IOException e)
        {
            return null;
        }

        return body.toString();
    }
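
The helper above never closes its reader and relies on the platform’s default character set. As one possible variation (not the lesson’s code), the sketch below reads from any InputStream, closes it with try-with-resources, and decodes the text as UTF-8. The class and method names are hypothetical, and it rethrows read failures instead of returning null, which is a design choice you may or may not want.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

/** Hypothetical variant of the helper that works on any InputStream. */
final class TextStreams {
    private TextStreams() {}

    /** Reads the whole stream as UTF-8 text, ending every line with a newline character. */
    static String readAll(InputStream inputStream) {
        final StringBuilder body = new StringBuilder();
        // try-with-resources closes the reader (and the underlying stream) on every path.
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(inputStream, StandardCharsets.UTF_8))) {
            String nextLine;
            while ((nextLine = reader.readLine()) != null) {
                body.append(nextLine).append('\n');
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return body.toString();
    }
}
```

On Android, the stream would come from context.getResources().openRawResource(resourceId), exactly as in the helper above.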
