文章目录

1. 论文总述

这篇也是基于CNN的光流估计work,ECCV2020的best paper。

本文主要是在计算两帧图像特征的相似性时构建了一个4D的 correlation volumes,然后还参考了传统光流算法的迭代优化思想,逐步地一点一点的对flow map进行优化!! 实现上是用的循环单元GRU(这个后续我得细看下实现)

重要: RAFT以高分辨率维护和更新单个固定的光流场。

大家都推荐RAFT模型来做光流估计,甚至可以利用它来做光流的GT,因为它的泛化性比较强,我在自己的特定数据集下也测试过RAFT的性能,预测出的flow map确实挺好的。

另一个优势就是RAFT的训练速度比较快,模型也比较小,但推理速度并没有宣传的那么快。

针对RAFT有两个解读的比较好的博客,推荐给大家:

RAFT consists of three main components: (1) a feature encoder that extracts a feature vector for each pixel; (2) a correlation layer that produces a 4D

correlation volume for all pairs of pixels, with subsequent pooling to produce

lower resolution volumes; (3) a recurrent GRU-based update operator that retrieves values from the correlation volumes and iteratively updates a flow field

initialized at zero. Fig. 1 illustrates the design of RAFT.

2. RAFT结构被传统算法所启发

The RAFT architecture is motivated by traditional optimization-based approaches. The feature encoder extracts per-pixel features. The correlation layer

computes visual similarity between pixels. The update operator mimics the steps

of an iterative optimization algorithm. But unlike traditional approaches, features and motion priors are not handcrafted but learned—learned by the feature

encoder and the update operator respectively.

Our approach can be viewed as learning to optimize: our network uses a

large number of update blocks to emulate the steps of a first-order optimization

algorithm. However, unlike prior work, we never explicitly define a gradient with

respect to some optimization objective. Instead, our network retrieves features

from correlation volumes to propose the descent direction.

3. RAFT的三个重大创新点

The design of RAFT draws inspiration from many existing works but is substantially novel. First, RAFT maintains and updates a single fixed flow field at

high resolution. This is different from the prevailing coarse-to-fine design in prior

work [42,49,22,23,50], where flow is first estimated at low resolution and upsampled and refined at high resolution. By operating on a single high-resolution flow

field, RAFT overcomes several limitations of a coarse-to-fine cascade: the diffi-

culty of recovering from errors at coarse resolutions, the tendency to miss small

fast-moving objects, and the many training iterations (often over 1M) typically

required for training a multi-stage cascade .(高分辨率 可以 减少训练时的迭代次数??)

Second, the update operator of RAFT is recurrent and lightweight. Many

recent works [24,42,49,22,25] have included some form of iterative refinement,

but do not tie the weights across iterations [42,49,22] and are therefore limited

to a fixed number of iterations. To our knowledge, IRR [24] is the only deep

learning approach [24] that is recurrent. It uses FlowNetS [15] or PWC-Net [42]

as its recurrent unit. When using FlowNetS, it is limited by the size of the

network (38M parameters) and is only applied up to 5 iterations. When using

PWC-Net, iterations are limited by the number of pyramid levels. In contrast,

our update operator has only 2.7M parameters and can be applied 100+ times

during inference without divergence.

Third, the update operator has a novel design, which consists of a convolutional GRU that performs lookups on 4D multi-scale correlation volumes; in

contrast, refinement modules in prior work typically use only plain convolution

or correlation layers.

4. 光流算法需要解决的难点

Optical flow is the task of estimating per-pixel motion between video frames.

It is a long-standing vision problem that remains unsolved. The best systems

are limited by difficulties including fast-moving objects, occlusions, motion blur,

and textureless surfaces.

5. 传统光流算法简介及缺点

Optical flow has traditionally been approached as a hand-crafted optimization problem over the space of dense displacement fields between a pair of images [21,51,13]. Generally, the optimization objective defines a trade-off between

a data term which encourages the alignment of visually similar image regions

and a regularization term which imposes priors on the plausibility of motion.

Such an approach has achieved considerable success, but further progress has

appeared challenging, due to the difficulties in hand-designing an optimization

objective that is robust to a variety of corner cases.

6. Fast DIS 有可能存在的问题

To ensure a smooth objective function, a first order

Taylor approximation is used to model the data term. As a result, they only work

well for small displacements. To handle large displacements, the coarse-to-fine

strategy is used, where an image pyramid is used to estimate large displacements

at low resolution, then small displacements refined at high resolution. But this

coarse-to-fine strategy may miss small fast-moving objects and have difficulty

recovering from early mistakes (前面错了,后面不好找补)

7. 本文相近work

More closely related to our approach is IRR[24], which builds off of the

FlownetS and PWC-Net architecture but shares weights between refinement

networks. When using FlowNetS, it is limited by the size of the network (38M

parameters) and is only applied up to 5 iterations. When using PWC-Net, iterations are limited by the number of pyramid levels. In contrast, we use a much

simpler refinement module (2.7M parameters) which can be applied for 100+ iterations during inference without divergence.

Our method also shares similarites

with Devon [31], namely the construction of the cost volume without warping

and fixed resolution updates. However, Devon does not have any recurrent unit.

It also differs from ours regarding large displacements. Devon handles large displacements using a dilated cost volume while our approach pools the correlation

volume at multiple resolutions.

8. Building correlation volumes

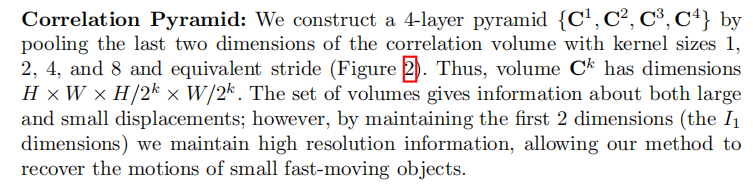

同时构建了一个correlation 金字塔,注意:构建这个金字塔的时候,保持前两维不变,只减小后两维的分辨率!!

计算完correlation pyramid之后,有一个Correlation Lookup操作,look up多次,但是使用的是同一个correlation pyramid,look up简单来说就是: 根据先前计算出来的flow map将image1 进行warp,然后每个像素在warp_image1 的对应像素位置 取一个邻域(半径为r,是一个超参,作者自己设置的),将这个邻域 concat为一个feature??

计算完correlation pyramid之后,有一个Correlation Lookup操作,look up多次,但是使用的是同一个correlation pyramid,look up简单来说就是: 根据先前计算出来的flow map将image1 进行warp,然后每个像素在warp_image1 的对应像素位置 取一个邻域(半径为r,是一个超参,作者自己设置的),将这个邻域 concat为一个feature??

建议还是看论文,这段论文里讲的比较清楚!!

9. Update

10. 一个新颖的利用卷积层去学习的上采样操作

Upsampling: The network outputs optical flow at 1/8 resolution. We upsample

the optical flow to full resolution by taking the full resolution flow at each pixel

to be the convex combination of a 3x3 grid of its coarse resolution neighbors. We

use two convolutional layers to predict a H/8×W/8×(8×8×9) mask and perform

softmax over the weights of the 9 neighbors. The final high resolution flow field is

found by using the mask to take a weighted combination over the neighborhood,

then permuting and reshaping to a H × W × 2 dimensional flow field. This layer

can be directly implemented in PyTorch using the unfold function.

11. 模型指标对比

12. 消融实验

消融实验这块也最好看一下paper中的解释。

Features for Refinement: We compute visual similarity by building a correlation volume between all pairs of pixels. In this experiment, we try replacing

the correlation volume with a warping layer, which uses the current estimate

of optical flow to warp features from I2 onto I1 and then estimates the residual displacement. While warping is still competitive with prior work on Sintel,

correlation performs significantly better, especially on KITTI.

参考文献

- ECCV 2020最佳论文讲了啥?作者为ImageNet一作、李飞飞高徒邓嘉

- RAFT论文笔记

- (论文解读)RAFT: Recurrent All-Pairs Field Transforms for Optical Flow

- 《RAFT:Recurrent All-Pairs Field Transforms for Optical Flow》论文笔记

- https://github.com/princeton-vl/RAFT

- http://hci-benchmark.iwr.uni-heidelberg.de/(HD1K数据集)

343

343

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?