Python+OpenCV: Scale-Invariant Feature Transform (SIFT)

Theory

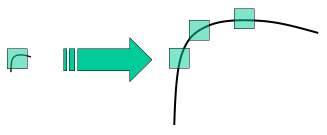

A corner that fits inside a small window in a small image looks flat when the image is zoomed in and viewed through the same window. So the Harris corner detector is not scale invariant.

The SIFT algorithm consists of five main steps:

1. Scale-space Extrema Detection

From the image above, it is obvious that we can't use the same window to detect keypoints at different scales.

It works for small corners, but detecting larger corners requires larger windows. For this, scale-space filtering is used.

In it, the Laplacian of Gaussian (LoG) is computed for the image with various σ values.

LoG acts as a blob detector that detects blobs of various sizes as σ changes.

In short, σ acts as a scaling parameter.

For example, in the image above, a Gaussian kernel with low σ gives a high value for the small corner, while a Gaussian kernel with high σ fits the larger corner well.

So we can find the local maxima across scale and space, which gives a list of (x, y, σ) values; each one means there is a potential keypoint at (x, y) at scale σ.

But LoG is a little costly, so the SIFT algorithm uses the Difference of Gaussians (DoG), which is an approximation of LoG.

The Difference of Gaussians is obtained as the difference between Gaussian blurs of an image with two different σ values, say σ and kσ.
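As a rough illustration of that definition, the sketch below blurs a toy image at σ and kσ and subtracts the two results. The helper names (`gaussian_kernel_1d`, `gaussian_blur`, `difference_of_gaussians`), the 3σ kernel truncation and the edge-replicated borders are assumptions made for this example, not part of the original tutorial. In practice the blurring would more likely be done with OpenCV's `GaussianBlur`.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Return a normalized 1-D Gaussian kernel truncated at about 3 sigma (assumed cutoff)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image, sigma):
    """Blur a 2-D float image with a separable Gaussian, replicating the border pixels."""
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image, radius, mode="edge")
    # Filter along rows first, then along columns.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def difference_of_gaussians(image, sigma=1.6, k=np.sqrt(2)):
    """DoG response: the blur at k*sigma minus the blur at sigma."""
    image = image.astype(np.float64)
    return gaussian_blur(image, k * sigma) - gaussian_blur(image, sigma)


# Toy input: a single bright 5x5 blob on a dark background.
image = np.zeros((41, 41))
image[18:23, 18:23] = 1.0
dog = difference_of_gaussians(image)
print(dog.shape, dog[20, 20])
```

The response at the blob centre is negative here because the wider blur spreads the blob out more than the narrower one does.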

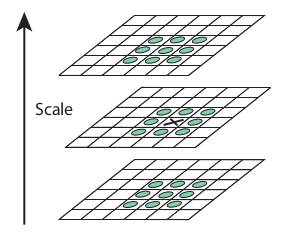

This process is repeated for different octaves of the image in the Gaussian pyramid. It is represented in the image below:

Once the DoG images are found, they are searched for local extrema over scale and space.

For example, one pixel in an image is compared with its 8 neighbours as well as 9 pixels in the next scale and 9 pixels in the previous scale.

If it is a local extremum, it is a potential keypoint. It basically means that the keypoint is best represented at that scale.

It is shown in the image below:

Regarding the parameters, the paper gives some empirical data, which can be summarized as: number of octaves = 4, number of scale levels = 5, initial σ = 1.6, k = √2, etc. as optimal values.
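The neighbour comparison described above (8 pixels in the same scale plus 9 in each adjacent scale, 26 in total) can be sketched as follows. This is a minimal, unoptimized illustration: the function names and the `(scale, y, x)` layout of `dog_stack` are assumptions for the example, and a real implementation would also apply the thresholds from the next step.

```python
import numpy as np


def is_local_extremum(dog_stack, s, y, x):
    """True if dog_stack[s, y, x] is strictly above or strictly below all 26 neighbours."""
    cube = dog_stack[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2]
    center = dog_stack[s, y, x]
    # Index 13 is the centre of a flattened 3x3x3 cube; drop it to keep only the neighbours.
    neighbours = np.delete(cube.ravel(), 13)
    return bool(np.all(center > neighbours) or np.all(center < neighbours))


def find_extrema(dog_stack):
    """Scan every interior (scale, y, x) position of a stack of DoG images."""
    scales, height, width = dog_stack.shape
    return [(s, y, x)
            for s in range(1, scales - 1)
            for y in range(1, height - 1)
            for x in range(1, width - 1)
            if is_local_extremum(dog_stack, s, y, x)]


# Toy stack of three DoG images with one isolated peak in the middle scale.
stack = np.zeros((3, 5, 5))
stack[1, 2, 2] = 1.0
print(find_extrema(stack))
```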

2. Keypoint Localization

Once potential keypoint locations are found, they have to be refined to get more accurate results.

A Taylor series expansion of the scale space is used to get a more accurate location of each extremum, and if the intensity at that extremum is less than a threshold value (0.03 as per the paper), it is rejected.

This threshold is called contrastThreshold in OpenCV.

DoG has a higher response for edges, so edges also need to be removed.

For this, a concept similar to the Harris corner detector is used. A 2x2 Hessian matrix (H) is used to compute the principal curvatures.

We know from the Harris corner detector that for edges, one eigenvalue is much larger than the other.

So here a simple function of the ratio of the two eigenvalues is used. If this ratio is greater than a threshold, called edgeThreshold in OpenCV, the keypoint is discarded.

This eliminates low-contrast keypoints and edge keypoints, and what remains are strong interest points.
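The edge check can be sketched without computing eigenvalues explicitly. Lowe's paper tests Tr(H)² / Det(H) < (r + 1)² / r, where r is the largest allowed ratio between the two principal curvatures. The function name, the finite-difference Hessian and the default r = 10 (the value used in the paper and the OpenCV default for edgeThreshold) are details added for this illustration rather than taken from the text above.

```python
import numpy as np


def passes_edge_test(dog, y, x, edge_threshold=10.0):
    """Return True if the DoG point at (y, x) does not look like an edge."""
    # Second derivatives from finite differences form the 2x2 Hessian.
    dxx = dog[y, x + 1] - 2.0 * dog[y, x] + dog[y, x - 1]
    dyy = dog[y + 1, x] - 2.0 * dog[y, x] + dog[y - 1, x]
    dxy = (dog[y + 1, x + 1] - dog[y + 1, x - 1]
           - dog[y - 1, x + 1] + dog[y - 1, x - 1]) / 4.0
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:
        # Curvatures of opposite sign (or a flat direction): reject the point.
        return False
    r = edge_threshold
    return bool(trace * trace / det < (r + 1.0) ** 2 / r)


# Toy surfaces: a round blob (similar curvature in x and y) and a ridge (almost flat in y).
yy, xx = np.mgrid[-2:3, -2:3].astype(float)
blob = -(xx ** 2 + yy ** 2)
ridge = -(xx ** 2) - 0.01 * yy ** 2
print(passes_edge_test(blob, 2, 2), passes_edge_test(ridge, 2, 2))
```

The trace-and-determinant form avoids an eigenvalue decomposition, since only the ratio of the two curvatures matters.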

3. Orientation Assignment

Now an orientation is assigned to each keypoint to achieve invariance to image rotation.

A neighbourhood is taken around the keypoint location depending on the scale, and the gradient magnitude and direction are calculated in that region.

An orientation histogram with 36 bins covering 360 degrees is created. (It is weighted by gradient magnitude and by a Gaussian-weighted circular window with σ equal to 1.5 times the scale of the keypoint.)

The highest peak in the histogram is taken, and any peak above 80% of it is also considered when calculating the orientation.

This creates keypoints with the same location and scale but different directions, which contributes to the stability of matching.
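A simplified version of the histogram step might look like the sketch below. It keeps the 36 bins, the magnitude weighting and the 80% rule from the description, but leaves out the Gaussian window and any sub-bin peak interpolation. The function name and the choice to report bin centres are assumptions for the example.

```python
import numpy as np


def dominant_orientations(magnitude, angle_deg, num_bins=36, peak_ratio=0.8):
    """Return the bin-centre angles (degrees) of all peaks >= peak_ratio * highest peak."""
    bin_width = 360.0 / num_bins
    bins = np.floor((angle_deg % 360.0) / bin_width).astype(int) % num_bins
    # Each gradient votes for its orientation bin with a weight equal to its magnitude.
    hist = np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=num_bins)
    # A peak is a bin larger than both of its circular neighbours.
    left, right = np.roll(hist, 1), np.roll(hist, -1)
    is_peak = (hist > left) & (hist > right) & (hist >= peak_ratio * hist.max())
    return [float((i + 0.5) * bin_width) for i in np.flatnonzero(is_peak)]


# Toy gradients: two strong directions and one weak one.
magnitude = np.array([1.0, 0.9, 0.1])
angle_deg = np.array([5.0, 95.0, 200.0])
orientations = dominant_orientations(magnitude, angle_deg)
print(orientations)
```

Each returned angle would produce its own keypoint at the same location and scale.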

4. Keypoint Descriptor

Now the keypoint descriptor is created.

A 16x16 neighbourhood around the keypoint is taken.

It is divided into 16 sub-blocks of 4x4 size.

For each sub-block, an 8-bin orientation histogram is created.

So a total of 128 bin values are available.

It is represented as a vector to form the keypoint descriptor.

In addition to this, several measures are taken to achieve robustness against illumination changes, rotation, etc.
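The 16 × 8 = 128 layout can be made concrete with a toy descriptor built from precomputed gradient magnitudes and angles. This is only a sketch of the bookkeeping: the function name is hypothetical, and the refinements described in the paper (rotating the patch to the keypoint orientation, Gaussian weighting, interpolation between bins) are left out. The final normalization to unit length is one of the illumination measures from Lowe's paper, added here as an assumption about what "several measures" includes.

```python
import numpy as np


def toy_descriptor(magnitude, angle_deg):
    """Build a 128-value vector from 16x16 arrays of gradient magnitude and angle."""
    if magnitude.shape != (16, 16) or angle_deg.shape != (16, 16):
        raise ValueError("expected 16x16 magnitude and angle arrays")
    # 8 orientation bins of 45 degrees each.
    bins = np.floor((angle_deg % 360.0) / 45.0).astype(int) % 8
    histograms = []
    for cy in range(0, 16, 4):          # 4 rows of sub-blocks
        for cx in range(0, 16, 4):      # 4 columns of sub-blocks
            cell_bins = bins[cy:cy + 4, cx:cx + 4].ravel()
            cell_mag = magnitude[cy:cy + 4, cx:cx + 4].ravel()
            histograms.append(np.bincount(cell_bins, weights=cell_mag, minlength=8))
    vector = np.concatenate(histograms)  # 16 sub-blocks x 8 bins = 128 values
    norm = np.linalg.norm(vector)
    # Unit-length normalization cancels a uniform scaling of the gradient magnitudes.
    return vector / norm if norm > 0 else vector


rng = np.random.default_rng(0)
magnitude = rng.random((16, 16)) + 0.1
angle_deg = rng.random((16, 16)) * 360.0
descriptor = toy_descriptor(magnitude, angle_deg)
print(descriptor.shape)
```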

5. Keypoint Matching

Keypoints between two images are matched by identifying their nearest neighbours.

But in some cases, the second-closest match may be very near to the first.

This may happen due to noise or other reasons.

In that case, the ratio of the closest distance to the second-closest distance is taken.

If it is greater than 0.8, the match is rejected.

As per the paper, this eliminates around 90% of false matches while discarding only 5% of correct matches.

So this is a summary of the SIFT algorithm.
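The ratio test itself is only a few lines. The sketch below uses a brute-force nearest-neighbour search over plain NumPy arrays so that it stays self-contained; the function name and the toy 2-D "descriptors" are made up for the example. With real SIFT descriptors, the two nearest neighbours could instead come from OpenCV's `BFMatcher.knnMatch` with `k=2`.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Return (index_a, index_b) pairs whose closest/second-closest distance ratio is below `ratio`.

    desc_b must contain at least two descriptors.
    """
    matches = []
    for i, d in enumerate(desc_a):
        distances = np.linalg.norm(desc_b - d, axis=1)   # Euclidean distance to every candidate
        nearest, second = np.argsort(distances)[:2]
        # Keep the match only if the best candidate is clearly better than the runner-up.
        if distances[nearest] < ratio * distances[second]:
            matches.append((i, int(nearest)))
    return matches


# Toy descriptors: the first query has one clear match, the second is ambiguous.
desc_b = np.array([[0.0, 0.0], [10.0, 0.0], [10.1, 0.0]])
desc_a = np.array([[0.1, 0.0], [10.05, 0.0]])
matches = ratio_test_matches(desc_a, desc_b)
print(matches)
```

The second query sits between two almost identical candidates, so its ratio is close to 1 and the match is dropped.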

For more details and understanding, reading the original paper is highly recommended.

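Remember one thing: this algorithm was patented, so for a long time it was only included in the opencv contrib repo. The patent expired in March 2020, and since OpenCV 4.4.0 SIFT is part of the main repository.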

SIFT in OpenCV

####################################################################################################
# Scale-Invariant Feature Transform (SIFT) keypoint detection
import cv2 as lmc_cv  # assumption: lmc_cv is the author's alias for the cv2 module
import numpy as np
from matplotlib import pyplot


def lmc_cv_image_sift_detection():
    """
    Purpose: detect SIFT (Scale-Invariant Feature Transform) keypoints and display them.
    """
    stacking_images = []
    image_file_name = ['D:/99-Research/Python/Image/Castle01.jpg',
                       'D:/99-Research/Python/Image/Castle02.jpg',
                       'D:/99-Research/Python/Image/Castle03.jpg',
                       'D:/99-Research/Python/Image/Castle04.jpg']
    for i in range(len(image_file_name)):
        # Read the image (OpenCV loads it as BGR) and convert it to RGB for matplotlib
        image = lmc_cv.imread(image_file_name[i])
        image = lmc_cv.cvtColor(image, lmc_cv.COLOR_BGR2RGB)
        result_image = image.copy()
        # The image is RGB at this point, so convert with COLOR_RGB2GRAY (not COLOR_BGR2GRAY)
        gray_image = lmc_cv.cvtColor(image, lmc_cv.COLOR_RGB2GRAY)

        # Find SIFT keypoints and compute their descriptors
        sift = lmc_cv.SIFT_create()
        # keypoints = sift.detect(gray_image, None)
        keypoints, descriptors = sift.detectAndCompute(gray_image, None)

        # In the Python API, the drawing flags are named:
        #   cv.DRAW_MATCHES_FLAGS_DEFAULT,
        #   cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS,
        #   cv.DRAW_MATCHES_FLAGS_DRAW_OVER_OUTIMG,
        #   cv.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS
        result_image = lmc_cv.drawKeypoints(gray_image, keypoints, result_image,
                                            flags=lmc_cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

        # Stack the input image and the result side by side
        stacking_image = np.hstack((image, result_image))
        stacking_images.append(stacking_image)

    # Display the images
    for i in range(len(stacking_images)):
        pyplot.figure('SIFT Keypoints Detection %d' % (i + 1))
        pyplot.subplot(1, 1, 1)
        pyplot.imshow(stacking_images[i], 'gray')
        pyplot.title('SIFT Keypoints Detection')
        pyplot.xticks([])
        pyplot.yticks([])
    pyplot.show()

    # Close all figures if 'q' is pressed
    if ord("q") == (lmc_cv.waitKey(0) & 0xFF):
        # Destroy the windows
        pyplot.close('all')
    return
