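Below we use multiclass logistic regression for multiclass classification. The main difference between this model and linear regression is that the output layer has several nodes, one per class, instead of a single node.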

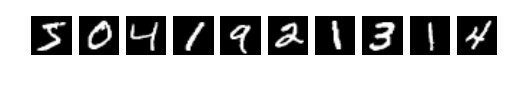
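To make the change in output shape concrete, here is a minimal sketch in plain Python rather than MXNet, so it runs without any dependencies. The helper name `linear_outputs` and the toy sizes (2 inputs, 3 classes) are assumptions made for illustration and do not come from the original post.

```python
# Illustrative sketch: the same linear layer with one output node
# (linear regression) and with several output nodes (multiclass model).
# `linear_outputs` and all numbers below are hypothetical.

def linear_outputs(x, W, b):
    """Return x.W + b for one sample x, where W has one row per input."""
    return [
        sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
        for j in range(len(b))
    ]

x = [1.0, 2.0]                      # one sample with 2 input features

# Linear regression: a single output node.
W_reg = [[0.5], [-1.0]]             # shape (2, 1)
b_reg = [0.1]
y_reg = linear_outputs(x, W_reg, b_reg)

# Multiclass logistic regression: one score per class.
W_cls = [[0.5, 0.0, 1.0],
         [-1.0, 2.0, 0.0]]          # shape (2, 3)
b_cls = [0.1, 0.0, -0.5]
scores = linear_outputs(x, W_cls, b_cls)

print(len(y_reg), len(scores))
```

The class scores are not yet probabilities; a softmax step turns them into a distribution over classes.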

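A common dataset for demonstrating this model is MNIST, the handwritten digit recognition dataset.

Here we use a slightly more complex dataset, FashionMNIST (https://github.com/zalandoresearch/fashion-mnist, http://fashion-mnist.s3-website.eu-central-1.amazonaws.com/). It closely resembles MNIST, but the items to classify are articles of clothing rather than digits. We download the data automatically through gluon's data.vision module.

Get the data: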

from mxnet import gluon
from mxnet import ndarray as nd

def transform(data, label):
    return data.astype('float32')/255, label.astype('float32')

mnist_train = gluon.data.vision.FashionMNIST(train=True, transform=transform)
mnist_test = gluon.data.vision.FashionMNIST(train=False, transform=transform)

data, label = mnist_train[0]
('example shape: ', data.shape, 'label:', label)

Output:

('example shape: ', (28, 28, 1), 'label:', 2.0)

Plot the first nine samples (the slice 0:9 excludes index 9):

import matplotlib.pyplot as plt

def show_images(images):
    n = images.shape[0]
    _, figs = plt.subplots(1, n, figsize=(15, 15))
    for i in range(n):
        figs[i].imshow(images[i].reshape((28, 28)).asnumpy())
        figs[i].axes.get_xaxis().set_visible(False)
        figs[i].axes.get_yaxis().set_visible(False)
    plt.show()

def get_text_labels(label):
    text_labels = [
        't-shirt', 'trouser', 'pullover', 'dress', 'coat',
        'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot'
    ]
    return [text_labels[int(i)] for i in label]

data, label = mnist_train[0:9]
show_images(data)
print(get_text_labels(label))
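The labels arrive as floats (the transform casts them to float32), which is why the lookup converts each one with int(). The following standalone sketch, in plain Python with hypothetical label values, shows the mapping and why a float cannot index a list directly. The name `to_text` is an assumption used only for this illustration.

```python
# Illustrative sketch of the label lookup, without MXNet.
# The label values below are made up for the example.
text_labels = [
    't-shirt', 'trouser', 'pullover', 'dress', 'coat',
    'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot'
]

def to_text(labels):
    """Map numeric class ids (possibly floats) to class names."""
    return [text_labels[int(i)] for i in labels]

names = to_text([2.0, 9.0, 0.0])
print(names)  # ['pullover', 'ankle boot', 't-shirt']

# A float is not a valid list index, so the int() cast is required.
try:
    text_labels[2.0]
    float_index_fails = False
except TypeError:
    float_index_fails = True
```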

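# Read the data in mini-batches
batch_size = 256
train_data = gluon.data.DataLoader(mnist_train, batch_size, shuffle=True)
test_data = gluon.data.DataLoader(mnist_test, batch_size, shuffle=False)

# Each 28x28x1 image is flattened into 784 inputs; there are 10 classes
num_inputs = 784
num_outputs = 10

W = nd.random_normal(shape=(num_inputs, num_outputs))
b = nd.random_normal(shape=num_outputs)
params = [W, b]

# Allocate gradient buffers so autograd can differentiate with respect to W and b
for param in params:
    param.attach_grad()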
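The constants 784 and 10 follow from the data: each sample has shape (28, 28, 1) and there are ten clothing classes. As a quick sanity check, this small sketch derives the input size and counts the trainable parameters. The variable names other than num_inputs and num_outputs are assumptions introduced here.

```python
# Derive the layer sizes from the sample shape reported by the dataset.
height, width, channels = 28, 28, 1
num_inputs = height * width * channels   # pixels per flattened image
num_outputs = 10                          # one output per class

num_weights = num_inputs * num_outputs    # entries in W
num_biases = num_outputs                  # entries in b
total_params = num_weights + num_biases

print(num_inputs, total_params)  # 784 7850
```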

Define the model, the cross-entropy loss function, and the accuracy computation:

from mxnet import nd

def softmax(X):
    exp = nd.exp(X)
    # Assuming exp is a matrix, sum over each row and keep axis 1,
    # i.e. return a matrix of shape (nrows, 1)
    partition = exp.sum(axis=1, keepdims=True)
    return exp / partition

X = nd.random_normal(shape=(2,5))
X_prob = softmax(X)
print(X_prob)
print(X_prob.sum(axis=1))
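One caveat with the softmax above is that exponentiating large scores can overflow. A common remedy, not used in the original post, is to subtract the row maximum before exponentiating, which leaves the result unchanged. The sketch below illustrates this in plain Python for a single row. The function names are assumptions, and in an array library the naive version would typically produce inf or nan rather than raise an exception.

```python
import math

def softmax_naive(row):
    """Direct translation of exp(x) / sum(exp(x)) for one row."""
    exps = [math.exp(v) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_stable(row):
    """Same function, but shifted by the row maximum to avoid overflow."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

small = [1.0, 2.0, 3.0]
large = [1000.0, 1001.0, 1002.0]   # same differences, much larger values

probs_small = softmax_stable(small)
probs_large = softmax_stable(large)

# The naive version fails on the large scores in plain Python.
try:
    softmax_naive(large)
    naive_overflowed = False
except OverflowError:
    naive_overflowed = True

print(sum(probs_small), naive_overflowed)
```

Because softmax depends only on differences between scores, both inputs give the same probabilities in the stable version.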

def net(X):
    return softmax(nd.dot(X.reshape((-1,num_inputs)), W) + b)

def cross_entropy(yhat, y):
    return - nd.pick(nd.log(yhat), y)
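The loss picks, for each sample, the log-probability assigned to the true class and negates it, so a confident correct prediction costs little and a confident wrong one costs a lot. Here is a small worked example in plain Python with made-up probabilities. It mirrors the pick-then-negate logic but is only a sketch, not the MXNet implementation.

```python
import math

def cross_entropy(prob_rows, labels):
    """Per-sample loss: -log of the probability given to the true class."""
    return [-math.log(row[int(label)]) for row, label in zip(prob_rows, labels)]

# Hypothetical predicted distributions for two samples and three classes.
yhat = [[0.1, 0.7, 0.2],
        [0.8, 0.1, 0.1]]
y = [1.0, 2.0]   # true classes, stored as floats like the dataset labels

losses = cross_entropy(yhat, y)
print(losses)
```

The first sample gives probability 0.7 to its true class and incurs a loss of about 0.357. The second gives only 0.1 and incurs about 2.303.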

def accuracy(output, label):
    return nd.mean(output.argmax(axis=1)==label).asscalar()

def evaluate_accuracy(data_iterator, net):
    acc = 0.
    for data, label in data_iterator:
        output = net(data)
        acc += accuracy(output, label)
    return acc / len(data_iterator)

evaluate_accuracy(test_data, net)
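Note that evaluate_accuracy averages the per-batch accuracies, so a smaller final batch counts as much as a full one. With 10,000 test images and a batch size of 256, the last batch would hold only 16 images, assuming the loader keeps the incomplete batch. The sketch below uses made-up counts to show how the two ways of averaging can differ.

```python
# Hypothetical per-batch results: (number of correct predictions, batch size).
batches = [(200, 256), (8, 16)]

# Mean of per-batch accuracies: every batch counts equally.
mean_of_batch_acc = sum(c / n for c, n in batches) / len(batches)

# Sample-weighted accuracy: every example counts equally.
weighted_acc = sum(c for c, _ in batches) / sum(n for _, n in batches)

print(mean_of_batch_acc, weighted_acc)
```

With 40 batches instead of two the gap is much smaller, so the simple average is a reasonable approximation here.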

import sys
sys.path.append('..')
from utils import SGD
from mxnet import autograd

learning_rate = .1

for epoch in range(5):
    train_loss = 0.
    train_acc = 0.
    for data, label in train_data:
        with autograd.record():
            output = net(data)
            loss = cross_entropy(output, label)
        loss.backward()
        # Average the gradient so the learning rate is less sensitive to the batch size
        SGD(params, learning_rate/batch_size)

        train_loss += nd.mean(loss).asscalar()
        train_acc += accuracy(output, label)

    test_acc = evaluate_accuracy(test_data, net)
    print("Epoch %d. Loss: %f, Train acc %f, Test acc %f" % (
        epoch, train_loss/len(train_data), train_acc/len(train_data), test_acc))
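The SGD helper is imported from a local utils module that is not shown here. The sketch below assumes it performs the plain update param = param - lr * grad, which is a guess at its behaviour rather than its confirmed source. It also illustrates why the loop divides the learning rate by the batch size: calling backward on a vector of per-sample losses accumulates the sum of their gradients, and scaling the step by 1/batch_size is the same as stepping along the mean gradient. The name `sgd_step` and all numbers are hypothetical.

```python
def sgd_step(params, grads, lr):
    """Assumed update rule: move each parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

batch_size = 4
learning_rate = 0.1

# Made-up per-example gradients for a model with two parameters.
per_example_grads = [[1.0, -2.0], [3.0, 0.0], [-1.0, 2.0], [1.0, 4.0]]

summed_grad = [sum(g[k] for g in per_example_grads) for k in range(2)]
mean_grad = [s / batch_size for s in summed_grad]

params = [0.5, -0.5]

# Summed gradient with a scaled-down learning rate ...
step_a = sgd_step(params, summed_grad, learning_rate / batch_size)
# ... matches the mean gradient with the original learning rate.
step_b = sgd_step(params, mean_grad, learning_rate)

print(step_a, step_b)
```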

data, label = mnist_test[0:9]
show_images(data)

print('true labels')
print(get_text_labels(label))

predicted_labels = net(data).argmax(axis=1)
print('predicted labels')
print(get_text_labels(predicted_labels.asnumpy()))

# The same model built with Gluon's high-level API
import sys
sys.path.append('..')
import utils

batch_size = 256
train_data, test_data = utils.load_data_fashion_mnist(batch_size)

from mxnet import gluon

net = gluon.nn.Sequential()
with net.name_scope():
    net.add(gluon.nn.Flatten())
    net.add(gluon.nn.Dense(10))
net.initialize()

Softmax and cross-entropy loss function, and the optimizer:

softmax_cross_entropy = gluon.loss.SoftmaxCrossEntropyLoss()

trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': 0.1})
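Note that the Gluon network ends with a plain Dense layer and has no softmax of its own, because the loss object takes raw scores and applies softmax and cross-entropy together. Fusing the two steps allows a log-sum-exp formulation that stays finite even for extreme scores, whereas taking the log of a separately computed probability could yield log(0). The following plain-Python sketch illustrates the idea. It is an assumption about the general technique, not Gluon's actual code, and the function names and scores are made up.

```python
import math

def log_softmax(row):
    """log(softmax(row)) computed with the log-sum-exp trick."""
    m = max(row)
    lse = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - lse for v in row]

def softmax_cross_entropy(score_rows, labels):
    """Per-sample loss computed directly from raw scores."""
    return [-log_softmax(row)[int(label)]
            for row, label in zip(score_rows, labels)]

scores = [[2.0, 1.0, 0.1],
          [1000.0, 0.0, -1000.0]]   # the second row has extreme values
labels = [0, 1]

losses = softmax_cross_entropy(scores, labels)
print(losses)
```

The second sample's loss is large but finite, which keeps gradients usable during training.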

Training:

from mxnet import ndarray as nd
from mxnet import autograd

for epoch in range(5):
    train_loss = 0.
    train_acc = 0.
    for data, label in train_data:
        with autograd.record():
            output = net(data)
            loss = softmax_cross_entropy(output, label)
        loss.backward()
        trainer.step(batch_size)

        train_loss += nd.mean(loss).asscalar()
        train_acc += utils.accuracy(output, label)

    test_acc = utils.evaluate_accuracy(test_data, net)
    print("Epoch %d. Loss: %f, Train acc %f, Test acc %f" % (
        epoch, train_loss/len(train_data), train_acc/len(train_data), test_acc))

data, label = mnist_test[0:9]
show_images(data)

print('true labels')
print(get_text_labels(label))

predicted_labels = net(data).argmax(axis=1)
print('predicted labels')
print(get_text_labels(predicted_labels.asnumpy()))
