VOC data format

---VOC2007

+---Annotations (annotation files saved by labelImg)

+---ImageSets

| +---Layout

| \---Main (test.txt, train.txt, trainval.txt, val.txt, generated by a script)

+---JPEGImages (all images)

+---SegmentationClass

\---SegmentationObject
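The four split files under ImageSets/Main can be produced with a short script. The following is a minimal sketch, not the original author's script: the function name `write_splits`, the 80/10/10 ratios, and the fixed seed are all assumptions chosen for illustration.

```python
import os
import random


def write_splits(annotations_dir, main_dir, train_ratio=0.8, val_ratio=0.1, seed=0):
    """Write train/val/trainval/test id lists from the XML files in annotations_dir.

    The ratios and the seed are illustrative assumptions.
    """
    ids = sorted(os.path.splitext(n)[0] for n in os.listdir(annotations_dir)
                 if n.endswith('.xml'))
    random.Random(seed).shuffle(ids)
    n_train = int(len(ids) * train_ratio)
    n_val = int(len(ids) * val_ratio)
    splits = {
        'train': ids[:n_train],
        'val': ids[n_train:n_train + n_val],
        'test': ids[n_train + n_val:],
    }
    splits['trainval'] = splits['train'] + splits['val']
    os.makedirs(main_dir, exist_ok=True)
    for name, split_ids in splits.items():
        # One image id per line, as the conversion scripts expect.
        with open(os.path.join(main_dir, name + '.txt'), 'w') as f:
            f.write('\n'.join(split_ids) + ('\n' if split_ids else ''))
    return splits
```

A call such as `write_splits('VOCdevkit/VOC2007/Annotations', 'VOCdevkit/VOC2007/ImageSets/Main')` would match the directory tree above.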

YOLO data format

Mask R-CNN data format

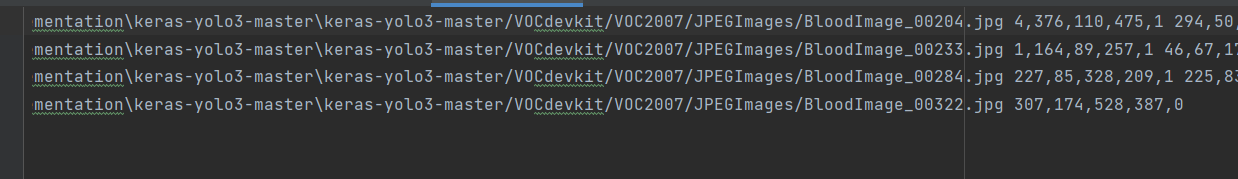

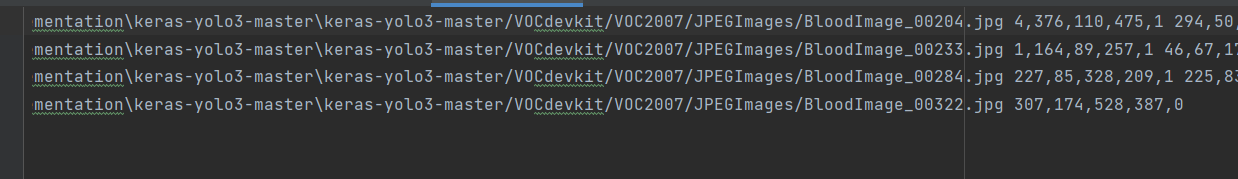

Converting VOC format to YOLO

import xml.etree.ElementTree as ET
from os import getcwd

sets = [('2007', 'train'), ('2007', 'val'), ('2007', 'test')]
classes = ["WBC", "RBC"]


def convert_annotation(year, image_id, list_file):
    """Append every usable box of one image as ' xmin,ymin,xmax,ymax,class_id'."""
    tree = ET.parse('VOCdevkit/VOC%s/Annotations/%s.xml' % (year, image_id))
    root = tree.getroot()

    for obj in root.iter('object'):
        # Treat a missing <difficult> tag as 0.
        difficult = obj.findtext('difficult', default='0')
        cls = obj.find('name').text
        # Skip unknown classes and objects flagged as difficult.
        if cls not in classes or int(difficult) == 1:
            continue
        cls_id = classes.index(cls)
        xmlbox = obj.find('bndbox')
        b = (int(xmlbox.find('xmin').text), int(xmlbox.find('ymin').text),
             int(xmlbox.find('xmax').text), int(xmlbox.find('ymax').text))
        list_file.write(" " + ",".join(str(a) for a in b) + ',' + str(cls_id))


wd = getcwd()

for year, image_set in sets:
    ids_path = 'VOCdevkit/VOC%s/ImageSets/Main/%s.txt' % (year, image_set)
    with open(ids_path) as f:
        image_ids = f.read().strip().split()
    # One output line per image: absolute image path followed by its boxes.
    with open('%s_%s.txt' % (year, image_set), 'w') as list_file:
        for image_id in image_ids:
            list_file.write('%s/VOCdevkit/VOC%s/JPEGImages/%s.jpg' % (wd, year, image_id))
            convert_annotation(year, image_id, list_file)
            list_file.write('\n')
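Each line written by the script above has the form `image_path xmin,ymin,xmax,ymax,class_id ...`. Reading such a line back could look like the sketch below, which assumes the image path contains no spaces; `parse_annotation_line` is a hypothetical helper name, not part of the original scripts.

```python
def parse_annotation_line(line):
    """Split 'path x1,y1,x2,y2,cls ...' into (path, [(x1, y1, x2, y2, cls), ...])."""
    parts = line.split()
    image_path = parts[0]
    # Every remaining field is one box: four pixel coordinates plus the class id.
    boxes = [tuple(int(v) for v in part.split(',')) for part in parts[1:]]
    return image_path, boxes
```

An image without any usable object yields an empty box list, which a training loader may want to filter out.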

Converting Mask R-CNN JSON format to YOLO

import glob
import json
import os

classes = ["Landslide"]

# Every PNG under Data/ is expected to have a JSON file with the same stem.
image_ids = glob.glob(r"Data/*png")
print(image_ids)


def convert_annotation(image_id, list_file):
    """Append the boxes stored in <image_id>.json as ' xmin,ymin,xmax,ymax,class_id'."""
    with open('%s.json' % image_id) as jsonfile:
        in_file = json.load(jsonfile)

    for shape in in_file["shapes"]:
        cls = shape["label"]
        if cls not in classes:
            print("cls not in classes")
            continue
        # Assumes a two-point rectangle: points[0] is the top-left corner
        # and points[1] the bottom-right corner.
        points = shape["points"]
        xmin, ymin = int(points[0][0]), int(points[0][1])
        xmax, ymax = int(points[1][0]), int(points[1][1])
        cls_id = classes.index(cls)
        b = (xmin, ymin, xmax, ymax)
        list_file.write(" " + ",".join(str(a) for a in b) + ',' + str(cls_id))


with open('train.txt', 'w') as list_file:
    for image_path in image_ids:
        # splitext strips only the final extension, so other dots in the path are safe.
        image_id = os.path.splitext(image_path)[0]
        list_file.write('%s.png' % image_id)
        convert_annotation(image_id, list_file)
        list_file.write('\n')
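The script above reads only `points[0]` and `points[1]`, which fits rectangle shapes drawn from the top-left to the bottom-right corner. For polygon shapes, or rectangles drawn from another corner, the box can instead be taken as the min/max over all points. A sketch under that assumption; `shape_to_bbox` is a hypothetical helper:

```python
def shape_to_bbox(points):
    """Return (xmin, ymin, xmax, ymax) as ints for a list of [x, y] points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    # int() truncates toward zero, matching the int(points[...]) calls in the script.
    return int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys))
```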

Converting YOLO format to VOC format
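No script is listed for this direction. The box arithmetic alone might be sketched as follows, assuming YOLO labels store normalized `(x_center, y_center, width, height)` and VOC stores pixel `(xmin, ymin, xmax, ymax)`. The name `yolo_to_voc` is hypothetical, and writing the XML file itself is left out.

```python
def yolo_to_voc(size, box):
    """size = (image_width, image_height); box = normalized (x, y, w, h)."""
    img_w, img_h = size
    x, y, w, h = box
    xmin = (x - w / 2.0) * img_w
    xmax = (x + w / 2.0) * img_w
    ymin = (y - h / 2.0) * img_h
    ymax = (y + h / 2.0) * img_h

    def clamp(value, upper):
        # Round to whole pixels and keep the result inside the image.
        return max(0, min(int(round(value)), upper))

    return clamp(xmin, img_w), clamp(ymin, img_h), clamp(xmax, img_w), clamp(ymax, img_h)
```

For a 640x480 image, the label `0.3125 0.5 0.3125 0.5` maps back to the pixel box `(100, 120, 300, 360)`.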

Converting VOC format to YoloLabel and computing anchor boxes

Converting VOC format to YoloLabel

import os
import xml.etree.ElementTree as ET


def convert(size, box):
    """Convert a pixel box (xmin, xmax, ymin, ymax) to normalized YOLO (x, y, w, h).

    size is (image_width, image_height).
    """
    x_center = (box[0] + box[1]) / 2.0
    y_center = (box[2] + box[3]) / 2.0
    x = x_center / size[0]
    y = y_center / size[1]
    w = (box[1] - box[0]) / size[0]
    h = (box[3] - box[2]) / size[1]
    return (x, y, w, h)


def convert_annotation(xml_files_path, save_txt_files_path, classes):
    """Write one YOLO label file per VOC XML file, one 'class_id x y w h' line per object."""
    os.makedirs(save_txt_files_path, exist_ok=True)
    xml_files = [name for name in os.listdir(xml_files_path) if name.endswith('.xml')]
    print(xml_files)
    for xml_name in xml_files:
        print(xml_name)
        xml_file = os.path.join(xml_files_path, xml_name)
        out_txt_path = os.path.join(save_txt_files_path, os.path.splitext(xml_name)[0] + '.txt')
        tree = ET.parse(xml_file)
        root = tree.getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)

        with open(out_txt_path, 'w') as out_txt_f:
            for obj in root.iter('object'):
                # Treat a missing <difficult> tag as 0.
                difficult = obj.findtext('difficult', default='0')
                cls = obj.find('name').text
                if cls not in classes or int(difficult) == 1:
                    continue
                cls_id = classes.index(cls)
                xmlbox = obj.find('bndbox')
                # Note the order (xmin, xmax, ymin, ymax), which is what convert() expects.
                b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                     float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
                print(w, h, b)
                bb = convert((w, h), b)
                out_txt_f.write(str(cls_id) + " " + " ".join(str(a) for a in bb) + '\n')


if __name__ == "__main__":
    classes1 = ['WBC', 'RBC']
    xml_files1 = r'VOCdevkit/VOC2007/Annotations/'
    save_txt_files1 = r'YoloLabel'
    convert_annotation(xml_files1, save_txt_files1, classes1)
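Before clustering anchors on the generated label files, it can help to confirm that every line really is `class_id x y w h` with normalized values. A small sanity check might look like this; `check_label_line` is a hypothetical helper, and the rule that width and height must be positive is an assumption about what counts as a valid box.

```python
def check_label_line(line, num_classes):
    """Return True if line is 'class_id x y w h' with values normalized to [0, 1]."""
    fields = line.split()
    if len(fields) != 5:
        return False
    try:
        cls_id = int(fields[0])
        x, y, w, h = (float(v) for v in fields[1:])
    except ValueError:
        return False
    return (0 <= cls_id < num_classes
            and all(0.0 <= v <= 1.0 for v in (x, y, w, h))
            and w > 0 and h > 0)
```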

Computing anchor boxes for YOLOv3

import argparse
import os
import random

import numpy as np

parser = argparse.ArgumentParser(description='Generate anchor boxes for YOLOv3.\n')
parser.add_argument('--input_annotation_txt_dir', default="YoloLabel", required=False, type=str,
                    help='directory holding the label txt files (avoid non-ASCII characters in the path)')
parser.add_argument('--output_anchors_txt', required=False, default='generated_anchors/anchors/anchors.txt', type=str,
                    help='output text file that stores the anchor boxes')
parser.add_argument('--input_num_anchors', required=False, default=9, type=int,
                    help='number of clusters (anchor boxes) to compute')
parser.add_argument('--input_cfg_width', required=False, default=416, type=int, help="width in the cfg file")
parser.add_argument('--input_cfg_height', required=False, default=416, type=int, help="height in the cfg file")
args = parser.parse_args()


def IOU(annotation_array, centroids):
    """IoU between one box and every centroid, with all boxes aligned at the same corner.

    annotation_array: (w, h) of a single annotated box.
    centroids: cluster centres, ndarray of shape (num, 2).
    """
    similarities = []
    w, h = annotation_array
    for centroid in centroids:
        c_w, c_h = centroid
        if c_w >= w and c_h >= h:
            similarity = w * h / (c_w * c_h)
        elif c_w >= w and c_h <= h:
            similarity = w * c_h / (w * h + (c_w - w) * c_h)
        elif c_w <= w and c_h >= h:
            similarity = c_w * h / (w * h + (c_h - h) * c_w)
        else:
            similarity = (c_w * c_h) / (w * h)
        similarities.append(similarity)
    return np.array(similarities, np.float32)


def k_means(annotations_array, centroids, eps=0.00005, iterations=200000):
    """k-means clustering with distance 1 - IoU; centroids is updated in place.

    annotations_array: (w, h) of all N annotated boxes, ndarray of shape (N, 2).
    centroids: initial cluster centres, ndarray of shape (num, 2).
    """
    N = annotations_array.shape[0]
    num = centroids.shape[0]
    distance_sum_pre = -1
    assignments_pre = -1 * np.ones(N, dtype=np.int64)
    iteration = 0

    while True:
        iteration += 1
        distances = []
        for i in range(N):
            distance = 1 - IOU(annotations_array[i], centroids)
            distances.append(distance)
        distances_array = np.array(distances, np.float32)
        assignments = np.argmin(distances_array, axis=1)
        distances_sum = np.sum(distances_array)

        centroid_sums = np.zeros(centroids.shape, np.float32)
        for i in range(N):
            centroid_sums[assignments[i]] += annotations_array[i]
        for j in range(num):
            count = np.sum(assignments == j)
            # Keep the previous centre for an empty cluster instead of dividing by zero.
            if count > 0:
                centroids[j] = centroid_sums[j] / count

        diff = abs(distances_sum - distance_sum_pre)
        # avg_IOU is the mean over all box/centroid pairs, not only the assigned pairs.
        print("iteration: {},distance: {}, diff: {}, avg_IOU: {}\n".format(
            iteration, distances_sum, diff, np.sum(1 - distances_array) / (N * num)))

        if (assignments == assignments_pre).all():
            print("Stopping: cluster assignments did not change between two iterations\n")
            break
        if diff < eps:
            print("Stopping: change in total distance is below eps\n")
            break
        if iteration > iterations:
            print("Stopping: maximum number of iterations reached\n")
            break

        distance_sum_pre = distances_sum
        assignments_pre = assignments.copy()


if __name__ == '__main__':
    num_clusters = args.input_num_anchors
    names = os.listdir(args.input_annotation_txt_dir)

    annotations_w_h = []
    for name in names:
        txt_path = os.path.join(args.input_annotation_txt_dir, name)
        with open(txt_path, 'r') as f:
            for line in f:
                fields = line.split()
                if len(fields) != 5:
                    continue  # skip blank or malformed lines
                # Label line layout: class_id x y w h.
                w, h = fields[3:]
                annotations_w_h.append((float(w), float(h)))

    annotations_array = np.array(annotations_w_h, dtype=np.float32)
    N = annotations_array.shape[0]

    # Pick random boxes as the initial cluster centres.
    random_indices = [random.randrange(N) for i in range(num_clusters)]
    centroids = annotations_array[random_indices]

    k_means(annotations_array, centroids, 0.00005, 200000)

    # Sort the anchors by width before writing them out.
    widths = centroids[:, 0]
    sorted_indices = np.argsort(widths)
    anchors = centroids[sorted_indices]

    out_dir = os.path.dirname(args.output_anchors_txt)
    if out_dir:
        os.makedirs(out_dir, exist_ok=True)
    with open(args.output_anchors_txt, 'w') as f_anchors:
        for anchor in anchors:
            # Scale the normalized width/height to the network input size.
            f_anchors.write('%d,%d' % (int(anchor[0] * args.input_cfg_width),
                                       int(anchor[1] * args.input_cfg_height)))
            f_anchors.write('\n')
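The four branches of `IOU` above all describe the same quantity: with both boxes anchored at one corner, the intersection is `min(w, c_w) * min(h, c_h)` and the union is the two areas minus that intersection. A compact sketch of this equivalent form for a single pair, written in plain Python; `wh_iou` is a hypothetical name, not part of the script:

```python
def wh_iou(box, centroid):
    """IoU of two boxes given as (w, h), both anchored at the same corner."""
    w, h = box
    c_w, c_h = centroid
    inter = min(w, c_w) * min(h, c_h)
    union = w * h + c_w * c_h - inter
    return inter / union
```

For example, a 2x2 box inside a 4x4 centroid gives 4/16, and a 2x4 box against a 4x2 centroid gives 4/12, which matches the second branch of `IOU`.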

Converting mask + image to Mask R-CNN JSON format

import base64
import io
import json
import os

import numpy as np
from PIL import Image
from pycococreatortools import pycococreatortools

ROOT_DIR = r'D:\zhaozheng\projects\Landslide segementation\Related\JiShunping_2020\datasets\Bijie_landslide_dataset\Bijie-landslide-dataset\landslide'
IMAGE_DIR = os.path.join(ROOT_DIR, "image")
ANNOTATION_DIR = os.path.join(ROOT_DIR, "mask")


def img_tobyte(img_pil):
    """Encode a PIL image as a base64 PNG string for the imageData field."""
    ENCODING = 'utf-8'
    img_byte = io.BytesIO()
    img_pil.save(img_byte, format='PNG')
    binary_str2 = img_byte.getvalue()
    imageData = base64.b64encode(binary_str2)
    base64_string = imageData.decode(ENCODING)
    return base64_string


annotation_files = os.listdir(ANNOTATION_DIR)

for annotation_filename in annotation_files:
    coco_output = {
        "version": "4.6.0",
        "flags": {},
        "fillColor": [255, 0, 0, 128],
        "lineColor": [0, 255, 0, 128],
        "imagePath": {},
        "shapes": [],
        "imageData": {}}

    print(annotation_filename)
    name = annotation_filename.split('.', 3)[0]
    name1 = "..\\pic\\" + name + '.png'
    name2 = name + '.png'
    coco_output["imagePath"] = name1

    image = Image.open(os.path.join(IMAGE_DIR, name2))
    imageData = img_tobyte(image)
    coco_output["imageData"] = imageData

    # Load the mask as a 0/1 array and trace its outlines as polygons.
    binary_mask = np.asarray(
        Image.open(os.path.join(ANNOTATION_DIR, annotation_filename)).convert('1')
    ).astype(np.uint8)
    segmentation = pycococreatortools.binary_mask_to_polygon(binary_mask, tolerance=3)

    for item in segmentation:
        # Each item is a flat list [x0, y0, x1, y1, ...]; skip polygons with 5 points or fewer.
        if len(item) > 10:
            list1 = []
            for i in range(0, len(item), 2):
                list1.append([item[i], item[i + 1]])
            seg_info = {'points': list1, "fill_color": 'null', "line_color": 'null',
                        "label": "Landslide", "shape_type": "polygon", "flags": {}}
            coco_output["shapes"].append(seg_info)

    coco_output["imageHeight"] = binary_mask.shape[0]
    coco_output["imageWidth"] = binary_mask.shape[1]

    # One JSON file per mask, written next to the image and mask folders.
    full_path = os.path.join(ROOT_DIR, name + '.json')
    with open(full_path, 'w') as output_json_file:
        json.dump(coco_output, output_json_file)

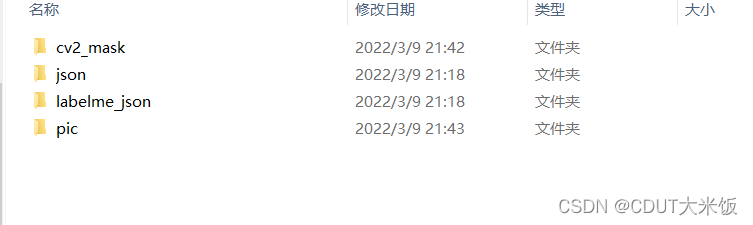

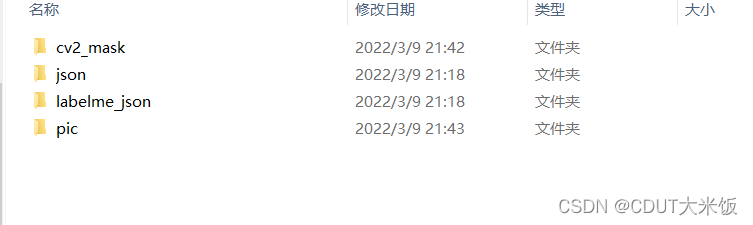

Batch-generating the JSON, YAML, and other files from the previous step's output

import os


def jsonMutiMS():
    # labelme_json_to_dataset.exe lives in the Anaconda Scripts directory.
    os.chdir(r'C:\Users\zhaoz\anaconda3\Scripts')
    json_dir = r'D:\zhaozheng\Public\ML\segementation\Mask_R_cnn\train_data\json'
    for file_name in os.listdir(json_dir):
        path = os.path.join(json_dir, file_name)
        if os.path.isfile(path):
            # Quote the path so that spaces do not split the argument.
            v = os.system('labelme_json_to_dataset.exe "%s"' % path)
            print(v)  # exit status of the command


jsonMutiMS()

Renaming label.png from the previous step to the matching image name (.png)

import os

for root, dirs, names in os.walk(r'../labelme_json'):
    for dr in dirs:
        file_dir = os.path.join(root, dr)
        file = os.path.join(file_dir, 'label.png')
        # Keep the part of the folder name before the first underscore.
        new_name = dr.split('_')[0] + '.png'
        new_file_name = os.path.join(file_dir, new_name)
        # Skip folders that contain no label.png.
        if os.path.exists(file):
            os.rename(file, new_file_name)
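Note that `dr.split('_')[0]` keeps only the text before the first underscore, so an image named `img_001` would be renamed to `img.png`. If the dataset folders are named `<image name>_json` (an assumption about the output of `labelme_json_to_dataset`), stripping just that suffix preserves underscores in the image name. A sketch with a hypothetical helper, `image_name_from_dir`:

```python
def image_name_from_dir(dir_name, suffix='_json'):
    """Strip a trailing '_json' from a dataset folder name, keeping inner underscores."""
    if dir_name.endswith(suffix):
        return dir_name[:-len(suffix)]
    return dir_name
```

In the loop above, `new_name = image_name_from_dir(dr) + '.png'` would then replace the `split('_')` line.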

Batch-copying the generated PNG files into the mask folder

import os
from shutil import copyfile

tar_root = r'../cv2_mask'
os.makedirs(tar_root, exist_ok=True)

for root, dirs, names in os.walk(r'../labelme_json'):
    for dr in dirs:
        file_dir = os.path.join(root, dr)
        print(dr)
        new_name = dr.split('_')[0] + '.png'
        # The previous step renamed label.png to <image name>.png inside each folder.
        new_file_name = os.path.join(file_dir, new_name)
        print(new_file_name)
        tar_file = os.path.join(tar_root, new_name)
        copyfile(new_file_name, tar_file)
