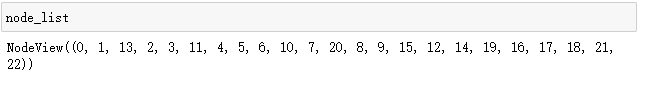

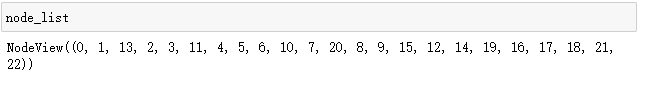

node_list: list of the graph's nodes

node_color_dict: dictionary mapping each node to its color

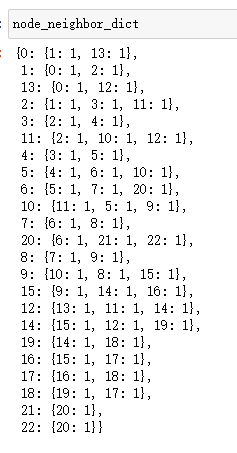

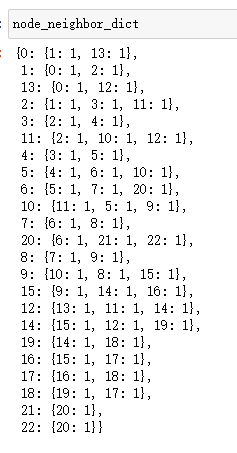

node_neighbor_dict: dictionary mapping each node to its neighbors

self.max_iter: maximum number of iterations
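The following is a minimal sketch of how these four fields might fit together. It assumes they belong to an iterative label-propagation routine in which a node's "color" is its community label. That is an assumption, since the original code is not shown here. The class name `LabelPropagation`, the `edges` and `seed` parameters, and the `run` method are all hypothetical.

```python
import random
from collections import Counter


class LabelPropagation:
    """Hypothetical sketch (not the original post's code): asynchronous label
    propagation where each node's 'color' is its community label."""

    def __init__(self, edges, max_iter=20, seed=0):
        # node_neighbor_dict: node -> set of adjacent nodes (undirected graph)
        self.node_neighbor_dict = {}
        for u, v in edges:
            self.node_neighbor_dict.setdefault(u, set()).add(v)
            self.node_neighbor_dict.setdefault(v, set()).add(u)
        # node_list: every node that appears in the edge list
        self.node_list = list(self.node_neighbor_dict)
        # node_color_dict: node -> current color; each node starts with a unique color
        self.node_color_dict = {node: i for i, node in enumerate(self.node_list)}
        self.max_iter = max_iter  # maximum number of iterations
        self.rng = random.Random(seed)

    def run(self):
        for _ in range(self.max_iter):
            changed = False
            order = self.node_list[:]
            self.rng.shuffle(order)  # visit nodes in a random order each pass
            for node in order:
                # Count how many neighbors carry each color.
                counts = Counter(
                    self.node_color_dict[n] for n in self.node_neighbor_dict[node]
                )
                if not counts:
                    continue
                best = max(counts.values())
                candidates = [c for c, k in counts.items() if k == best]
                if self.node_color_dict[node] in candidates:
                    continue  # keep the current color on ties
                self.node_color_dict[node] = self.rng.choice(candidates)
                changed = True
            if not changed:
                break  # no node changed color: converged before max_iter
        return self.node_color_dict
```

Usage: with two disjoint triangles, `LabelPropagation([(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]).run()` gives the three nodes of each triangle one shared color, and the two triangles end up with different colors. Keeping a node's current color on ties is a common variant that helps the loop settle. Plain label propagation breaks ties uniformly at random instead.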
