Consider setting_obj.load_run_save_evaluate() in script_3_fine_tuning.py.

The MethodGraphBertNodeClassification class is defined with the following attributes:

learning_record_dict = {}

lr = 0.001

weight_decay = 5e-4

max_epoch = 500

spy_tag = True

load_pretrained_path = ''

save_pretrained_path = ''

Its input is a GraphBertConfig, namely:

{

"attention_probs_dropout_prob": 0.3,

"finetuning_task": null,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.5,

"hidden_size": 32,

"initializer_range": 0.02,

"intermediate_size": 32,

"is_decoder": false,

"k": 7,

"layer_norm_eps": 1e-12,

"max_hop_dis_index": 100,

"max_inti_pos_index": 100,

"max_wl_role_index": 100,

"num_attention_heads": 2,

"num_hidden_layers": 2,

"num_labels": 2,

"output_attentions": false,

"output_hidden_states": false,

"output_past": true,

"pruned_heads": {},

"residual_type": "graph_raw",

"torchscript": false,

"use_bfloat16": false,

"x_size": 1433,

"y_size": 7

}

# This layer takes an input of size 1433 (x_size) and outputs size 32 (hidden_size)

self.res_h = torch.nn.Linear(config.x_size, config.hidden_size)

# Input size 1433 (x_size), output size 7 (y_size)

self.res_y = torch.nn.Linear(config.x_size, config.y_size)

# Input size 32 (hidden_size), output size 7 (y_size)

self.cls_y = torch.nn.Linear(config.hidden_size, config.y_size)
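To make the three layer shapes concrete, here is a minimal sketch that rebuilds them outside the class, using the sizes from the config above. The batch of 140 nodes and the random tensors are illustrative assumptions, not data from the original script.

```python
import torch

# Sizes copied from the GraphBertConfig shown above.
x_size, hidden_size, y_size = 1433, 32, 7

res_h = torch.nn.Linear(x_size, hidden_size)  # raw features -> hidden-space residual
res_y = torch.nn.Linear(x_size, y_size)       # raw features -> label-space residual
cls_y = torch.nn.Linear(hidden_size, y_size)  # hidden representation -> class scores

# Hypothetical inputs: 140 nodes with random values, for shape checking only.
x = torch.randn(140, x_size)
h = torch.randn(140, hidden_size)

shape_res_h = tuple(res_h(x).shape)
shape_res_y = tuple(res_y(x).shape)
shape_cls_y = tuple(cls_y(h).shape)
print(shape_res_h, shape_res_y, shape_cls_y)
```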

————————

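The forward pass (forward)

Inputs:

the feature set raw_embeddings, the WL roles wl_embedding, the intimacy-based neighbor positions int_embeddings, the hop distances hop_embeddings, and the training set indices idx_train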

residual_h, residual_y = self.residual_term()

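This is equivalent to passing the features X through the res_h layer and the res_y layer separately, then left-multiplying each result by an adjacency matrix A (the "graph_raw" residual type in the config).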
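The residual computation described here can be sketched as follows. This is an assumption-laden illustration rather than the repository's exact code: the function signature, the toy 4-node adjacency matrix, and the random features are all hypothetical, and only the "linear layer first, then left-multiply by A" order comes from the text.

```python
import torch

def residual_term(A, X, res_h, res_y):
    """Return (A @ res_h(X), A @ res_y(X)) for a sparse adjacency matrix A."""
    return torch.sparse.mm(A, res_h(X)), torch.sparse.mm(A, res_y(X))

torch.manual_seed(0)
n, x_size, hidden_size, y_size = 4, 1433, 32, 7

# Hypothetical toy graph: self-loops plus one undirected edge between nodes 0 and 1.
A_dense = torch.eye(n)
A_dense[0, 1] = A_dense[1, 0] = 1.0
A = A_dense.to_sparse()

X = torch.randn(n, x_size)  # hypothetical node features
res_h = torch.nn.Linear(x_size, hidden_size)
res_y = torch.nn.Linear(x_size, y_size)

residual_h, residual_y = residual_term(A, X, res_h, res_y)
print(residual_h.shape, residual_y.shape)
```

Because A is applied after the linear layers, each node's residual mixes in the projected features of its neighbors as well as its own.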

MethodGraphBert(

(embeddings): BertEmbeddings(

(raw_feature_embeddings): Linear(in_features=1433, out_features=32, bias=True)

(wl_role_embeddings): Embedding(100, 32)

(inti_pos_embeddings): Embedding(100, 32)

(hop_dis_embeddings): Embedding(100, 32)

(LayerNorm): LayerNorm((32,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.5, inplace=False)

)

(encoder): BertEncoder(

(layer): ModuleList(

(0): BertLayer(

(attention): BertAttention(

(self): BertSelfAttention(

(query): Linear(in_features=32, out_features=32, bias=True)

(key): Linear(in_features=32, out_features=32, bias=True)

(value): Linear(in_features=32, out_features=32, bias=True)

(dropout): Dropout(p=0.3, inplace=False)

)

(output): BertSelfOutput(

(dense): Linear(in_features=32, out_features=32, bias=True)

(LayerNorm): LayerNorm((32,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.5, inplace=False)

)

)

(intermediate): BertIntermediate(

(dense): Linear(in_features=32, out_features=32, bias=True)

)

(output): BertOutput(

(dense): Linear(in_features=32, out_features=32, bias=True)

(LayerNorm): LayerNorm((32,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.5, inplace=False)

)

)

(1): BertLayer(

(attention): BertAttention(

(self): BertSelfAttention(

(query): Linear(in_features=32, out_features=32, bias=True)

(key): Linear(in_features=32, out_features=32, bias=True)

(value): Linear(in_features=32, out_features=32, bias=True)

(dropout): Dropout(p=0.3, inplace=False)

)

(output): BertSelfOutput(

(dense): Linear(in_features=32, out_features=32, bias=True)

(LayerNorm): LayerNorm((32,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.5, inplace=False)

)

)

(intermediate): BertIntermediate(

(dense): Linear(in_features=32, out_features=32, bias=True)

)

(output): BertOutput(

(dense): Linear(in_features=32, out_features=32, bias=True)

(LayerNorm): LayerNorm((32,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.5, inplace=False)

)

)

)

)

(pooler): BertPooler(

(dense): Linear(in_features=32, out_features=32, bias=True)

(activation): Tanh()

)

)

raw_features[idx], wl_role_ids[idx], init_pos_ids[idx], hop_dis_ids[idx], and residual_h are then fed through the Graph-Bert model printed above.

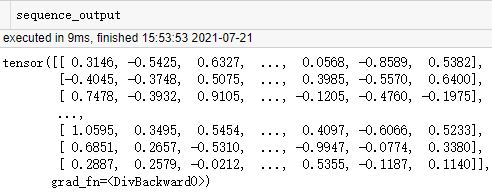

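This yields outputs, a tuple of two tensors:

outputs[0] has size [140, 8, 32], which matches 140 indexed nodes, k+1 = 8 sequence positions, and hidden_size = 32

outputs[1] has size [140, 32], the output of the pooler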

residual_y = residual_y[idx]

sequence_output = 0

for i in range(self.config.k+1):

    sequence_output += outputs[0][:,i,:]

sequence_output /= float(self.config.k+1)

labels = self.cls_y(sequence_output)

labels += residual_y

The output is F.log_softmax(labels, dim=1), of size [140, 7].
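The loop over the k+1 sequence positions is an average along dimension 1, so it can also be written as a single mean. The sketch below checks that equivalence and reproduces the output shape. The random tensors stand in for outputs[0] and residual_y and are assumptions for illustration only.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
k, hidden_size, y_size, n = 7, 32, 7, 140

hidden_states = torch.randn(n, k + 1, hidden_size)  # stands in for outputs[0]
residual_y = torch.randn(n, y_size)                 # stands in for residual_y[idx]
cls_y = torch.nn.Linear(hidden_size, y_size)

# Loop form, as in the text: sum the k+1 positions, then divide.
sequence_output = 0
for i in range(k + 1):
    sequence_output = sequence_output + hidden_states[:, i, :]
sequence_output = sequence_output / float(k + 1)

# Equivalent vectorized form: mean over the sequence dimension.
pooled = hidden_states.mean(dim=1)
same = torch.allclose(sequence_output, pooled, atol=1e-5)

labels = cls_y(pooled) + residual_y       # class scores plus label-space residual
log_probs = F.log_softmax(labels, dim=1)  # one log-probability row per node
print(same, log_probs.shape)
```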

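After each such training epoch, the cross-entropy loss and the accuracy are computed, followed by:

Backpropagation

An Adam optimization step

Validation

Testing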

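(Here the model is tested while it trains, once per epoch. Is there any meaningful difference between this and testing only after the final model has been trained?)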
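The per-epoch steps above can be sketched as a small loop. This is only an illustration under stated assumptions: a single linear layer stands in for the Graph-Bert classifier, the data and the train/validation/test splits are random and hypothetical, and the loop runs 5 epochs instead of max_epoch = 500. The lr and weight_decay values are the ones listed at the top of these notes.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical toy data and index splits (not the real dataset).
n, x_size, y_size = 60, 16, 3
X = torch.randn(n, x_size)
y = torch.randint(0, y_size, (n,))
idx_train = torch.arange(0, 30)
idx_val = torch.arange(30, 45)
idx_test = torch.arange(45, 60)

model = torch.nn.Linear(x_size, y_size)  # stand-in for the Graph-Bert classifier
optimizer = torch.optim.Adam(model.parameters(), lr=0.001, weight_decay=5e-4)

def accuracy(log_probs, targets):
    """Fraction of rows whose arg-max class equals the target."""
    return (log_probs.argmax(dim=1) == targets).float().mean().item()

learning_record_dict = {}
for epoch in range(5):
    # Training step: forward, loss, backpropagation, Adam update.
    model.train()
    optimizer.zero_grad()
    output = F.log_softmax(model(X[idx_train]), dim=1)
    loss_train = F.nll_loss(output, y[idx_train])  # NLL on log-probs = cross-entropy
    loss_train.backward()
    optimizer.step()

    # Validation and testing: no gradients, so the weights are not changed.
    model.eval()
    with torch.no_grad():
        output = F.log_softmax(model(X), dim=1)
        learning_record_dict[epoch] = {
            "loss_train": loss_train.item(),
            "acc_val": accuracy(output[idx_val], y[idx_val]),
            "acc_test": accuracy(output[idx_test], y[idx_test]),
        }

print(learning_record_dict[4])
```

In a loop of this shape, the test pass runs without gradients and never calls the optimizer, so it only records a learning curve. The final-epoch test score is the same as testing once after training, provided the test metrics are not used to choose the stopping epoch or the hyperparameters.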
