OpenCV SVM

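The machine-learning workflow for images:

- Preprocessing: remove lighting variation and noise, apply filtering, blurring, and so on.

- Segmentation: extract the regions of interest from the image and isolate each region as a single object of interest.

- Feature extraction: compute features for each object, usually collected into a feature vector. Features describe the object and can be its area, contour, texture pattern, pixel values, and so on.

- Training: choose a suitable learning algorithm and train it.

- Prediction: use the trained model (its learned parameters) to classify unknown feature vectors.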

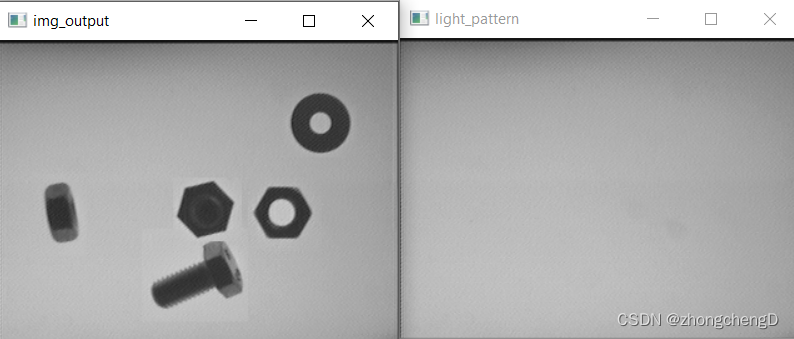

Final result:

Preprocessing the image

// Load the image to process as grayscale (flag 0)

Mat img = imread(img_file, 0);

// Check the load before using the image; imshow fails on an empty Mat

if(img.empty()){

cout << "Error loading image "<< img_file << endl;

return 0;

}

cv::imshow("img_file",img);

std::cout<<"img_rows: "<<img.rows<<" img_cols: "<<img.cols<<std::endl;

std::cout<<img.size<<std::endl;

// waitKey(0);

Mat img_output= img.clone();

cvtColor(img_output, img_output, COLOR_GRAY2BGR);

imshow("img_output",img_output);

std::cout<<"img_output_rows: "<<img_output.rows<<" img_output_cols: "<<img_output.cols<<std::endl;

std::cout<<img_output.size<<std::endl;

//waitKey(0);

// Load the light pattern (background) image as grayscale

light_pattern= imread(light_pattern_file, 0);

// Check the load before displaying the pattern

if(light_pattern.empty()){

cout << "ERROR: No light pattern loaded" << endl;

return 0;

}

imshow("light_pattern",light_pattern);

waitKey(0);

medianBlur(light_pattern, light_pattern, 3);

Load the images, then apply a median blur to the light pattern.
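A median blur replaces each pixel with the median of its neighborhood, which suppresses isolated noise without smearing edges as much as an averaging blur. The following is a minimal plain-C++ sketch of a 3x3 median filter, for illustration only. `GrayImage` and `medianFilter3x3` are hypothetical names, and the replicated-border handling is an assumption of this sketch, not a statement about how `medianBlur` is implemented.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical grayscale image, stored row-major:
// pixel (r, c) lives at data[r * cols + c].
struct GrayImage {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;
};

// 3x3 median filter. Pixels outside the image are taken from the nearest
// edge pixel (replicated border); that border rule is an assumption.
GrayImage medianFilter3x3(const GrayImage& src) {
    assert(src.rows > 0 && src.cols > 0);
    assert(src.data.size() == static_cast<std::size_t>(src.rows) * src.cols);

    GrayImage dst{src.rows, src.cols,
                  std::vector<std::uint8_t>(src.data.size())};
    for (int r = 0; r < src.rows; ++r) {
        for (int c = 0; c < src.cols; ++c) {
            std::array<std::uint8_t, 9> window{};
            int k = 0;
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    // Clamp neighbor coordinates to the image bounds.
                    int rr = std::max(0, std::min(r + dr, src.rows - 1));
                    int cc = std::max(0, std::min(c + dc, src.cols - 1));
                    window[k++] = src.data[rr * src.cols + cc];
                }
            }
            // The median of 9 values is the 5th smallest (index 4).
            std::nth_element(window.begin(), window.begin() + 4, window.end());
            dst.data[r * src.cols + c] = window[4];
        }
    }
    return dst;
}
```

Applied to a uniform 3x3 image with a single bright outlier in the center, every output pixel takes the uniform value, which is the noise-removal behavior the preprocessing step relies on.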

Extracting features

bool readFolderAndExtractFeatures(string folder, int label, int num_for_test,

vector<float> &trainingData, vector<int> &responsesData,

vector<float> &testData, vector<float> &testResponsesData)

{

VideoCapture images;

std::cout<<"folder Path: "<<folder<<std::endl;

if(images.open(folder)==false){

cout << "Can not open the folder images" << endl;

return false;

}

Mat frame;

int img_index=0;

while( images.read(frame) ){

// Preprocess image

Mat pre= preprocessImage(frame);

// Extract features

vector< vector<float> > features= ExtractFeatures(pre);

for(int i=0; i< features.size(); i++){

if(img_index >= num_for_test){

trainingData.push_back(features[i][0]);

trainingData.push_back(features[i][1]);

responsesData.push_back(label);

}else{

testData.push_back(features[i][0]);

testData.push_back(features[i][1]);

testResponsesData.push_back((float)label);

}

}

img_index++;

}

return true;

}

vector< vector<float> > ExtractFeatures(Mat img, vector<int>* left=NULL, vector<int>* top=NULL)

{

vector< vector<float> > output;

vector<vector<Point> > contours;

Mat input= img.clone();

vector<Vec4i> hierarchy;

findContours(input, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

// Check the number of objects detected

if(contours.size() == 0 ){

return output;

}

RNG rng( 0xFFFFFFFF );

for(int i=0; i<contours.size(); i++){

Mat mask= Mat::zeros(img.rows, img.cols, CV_8UC1);

drawContours(mask, contours, i, Scalar(1), FILLED, LINE_8, hierarchy, 1);

Scalar area_s= sum(mask);

float area= area_s[0];

if(area>500){ // keep only regions larger than the minimum area

RotatedRect r= minAreaRect(contours[i]);

float width= r.size.width;

float height= r.size.height;

float ar=(width<height)?height/width:width/height;

vector<float> row;

row.push_back(area);

row.push_back(ar);

output.push_back(row);

if(left!=NULL){

left->push_back((int)r.center.x);

}

if(top!=NULL){

top->push_back((int)r.center.y);

}

miw->addImage("Extract Features", mask*255);

miw->render();

waitKey(10);

}

}

return output;

}

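The loop reads the images of the dataset one by one and preprocesses each of them: the background is removed using the light pattern, and the result is thresholded. VideoCapture serves as an image-sequence reader here, so the folder argument is expected to be a numbered filename pattern (for example img_%02d.jpg) rather than a bare directory path.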

while( images.read(frame) ){

// Preprocess image

imshow("frame",frame);

Mat pre= preprocessImage(frame);

imshow("pre",pre);

waitKey(0);

// Extract features

vector< vector<float> > features= ExtractFeatures(pre);

for(int i=0; i< features.size(); i++){

if(img_index >= num_for_test){

trainingData.push_back(features[i][0]);

trainingData.push_back(features[i][1]);

responsesData.push_back(label);

}else{

testData.push_back(features[i][0]);

testData.push_back(features[i][1]);

testResponsesData.push_back((float)label);

}

}

img_index++;

}
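The body of preprocessImage() is not listed in this post. As a rough illustration of the two steps named above (background removal with the light pattern, then thresholding), here is a plain-C++ sketch. It assumes difference-based removal (light pattern minus image), objects darker than the background, and a caller-supplied threshold; `removeLightAndThreshold` is a hypothetical name, not the author's function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Subtract the image from the light pattern (the empty background),
// saturating at 0, then binarize the difference.
// Assumption: objects are darker than the background, so they produce
// large positive differences.
std::vector<std::uint8_t> removeLightAndThreshold(
        const std::vector<std::uint8_t>& image,
        const std::vector<std::uint8_t>& lightPattern,
        std::uint8_t thresholdValue) {
    assert(image.size() == lightPattern.size());

    std::vector<std::uint8_t> binary(image.size());
    for (std::size_t i = 0; i < image.size(); ++i) {
        int diff = static_cast<int>(lightPattern[i]) - static_cast<int>(image[i]);
        if (diff < 0) diff = 0;  // saturate instead of wrapping around
        // Pixels that differ strongly from the background become foreground.
        binary[i] = (diff > thresholdValue) ? 255 : 0;
    }
    return binary;
}
```

The binary image produced this way is the kind of input that findContours() expects in ExtractFeatures(): foreground objects in white on a black background.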

The features are extracted by the ExtractFeatures() function listed above.

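Walkthrough of the code:

- The preprocessed image is passed to ExtractFeatures().

- findContours() locates the outline of each object.

- Two features are computed for each object: area and aspect ratio.

- The features are stored.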

if(area>500){ // keep only regions larger than the minimum area

RotatedRect r= minAreaRect(contours[i]);

float width= r.size.width;

float height= r.size.height;

float ar=(width<height)?height/width:width/height;

vector<float> row;

row.push_back(area);

row.push_back(ar);

output.push_back(row);

if(left!=NULL){

left->push_back((int)r.center.x);

}

if(top!=NULL){

top->push_back((int)r.center.y);

}

miw->addImage("Extract Features", mask*255);

miw->render();

waitKey(10);

}

}

The area>500 test discards small regions that are not needed and keeps only the regions of interest.

Each row has the form [area1, ar1], and output is the list of these rows, one per retained object:

[[area1, ar1], [area2, ar2], ...]
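The filtering and the aspect-ratio computation can be tried without OpenCV. The sketch below is a simplified stand-in: `Blob` and `buildFeatureRows` are hypothetical names, and the area and rectangle sides are passed in directly instead of being measured from a contour mask and minAreaRect().

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical measurements for one detected region.
struct Blob {
    float area;    // number of foreground pixels in the region
    float width;   // sides of the minimum-area bounding rectangle
    float height;
};

// Build one [area, aspect ratio] row per region whose area exceeds minArea.
// The aspect ratio is long side / short side, so it is always >= 1.
std::vector<std::vector<float>> buildFeatureRows(const std::vector<Blob>& blobs,
                                                 float minArea) {
    assert(minArea >= 0.0f);
    std::vector<std::vector<float>> output;
    for (const Blob& b : blobs) {
        if (b.area <= minArea) continue;      // too small: noise or debris
        float shortSide = std::min(b.width, b.height);
        float longSide = std::max(b.width, b.height);
        if (shortSide <= 0.0f) continue;      // guard against division by zero
        output.push_back({b.area, longSide / shortSide});
    }
    return output;
}
```

Dividing the longer side by the shorter one makes the ratio independent of the object's orientation, which matters because minAreaRect() may report width and height in either order.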

Every preprocessed image is handled this way and its features are appended to trainingData; features is the output returned by ExtractFeatures():

trainingData.push_back(features[i][0]);

trainingData.push_back(features[i][1]);

Once all images have been processed, trainingData is a flat sequence of this form:

{

area1,

ar1,

area2,

ar2,

area3,

ar3,

.

.

.

areaN,

arN

}

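Because area and ar are two different features of the same sample, each consecutive pair has to be grouped into one row. This is done by wrapping the flat vector in a two-column Mat (shown here for testData; the training data is wrapped the same way):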

Mat testDataMat(testData.size()/2, 2, CV_32FC1, &testData[0]);

After grouping, the data looks like this:

{

{area1,ar1},

{area2,ar2},

{area3,ar3},

{area4,ar4},

.

.

.

{areaN,arN}

}
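The Mat constructor that takes a data pointer does not copy anything: it reinterprets the existing buffer as rows of two floats, so the vector must stay alive and must not be resized while the Mat is in use. The indexing it implies can be sketched in plain C++ as follows; `TwoColumnView` and `makeView` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Read-only two-column view over a flat buffer. It neither copies nor owns
// the data, so the underlying vector must outlive the view.
struct TwoColumnView {
    const float* data;
    std::size_t rows;

    // Row-major layout: element (r, c) sits at index r * 2 + c.
    float at(std::size_t r, std::size_t c) const {
        assert(r < rows && c < 2);
        return data[r * 2 + c];
    }
};

// Interpret {area1, ar1, area2, ar2, ...} as rows of [area, ar].
TwoColumnView makeView(const std::vector<float>& flat) {
    assert(flat.size() % 2 == 0);  // every sample needs both features
    return TwoColumnView{flat.data(), flat.size() / 2};
}
```

With a flat vector of six values, the view has three rows, and at(1, 0) returns the third stored value, that is, the area of the second sample.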

Part of the printed testDataMat:

[1215, 1.1219512;

1185, 1.0952381;

1194, 1.1049821;

1221, 1.1016949;

1220, 1.1038963;

1187, 1.1016949;

1204, 1.0952381;

1203, 1.1219512;

1203, 1.1264825;

963, 2.2802894;

1157, 1.1000001;

1023, 2.1187501;

1182, 1.1099707;

1037, 2.0476189;

1120, 1.0714285;

995, 2.1994822;

1191, 1.1042652;

1100, 1.8478874;

1185, 1.1020036;

971, 2.1699345;

1174, 1.0952381;

1003, 2.1633165;

1139, 1.1206896;]

Training

- Create the training data

- Initialize the SVM

- Train the SVM

Ptr<TrainData> tdata= TrainData::create(trainingDataMat, ROW_SAMPLE, responses);

svm = cv::ml::SVM::create();

svm->setType(cv::ml::SVM::C_SVC);

svm->setNu(0.05);

svm->setKernel(cv::ml::SVM::CHI2);

svm->setDegree(1.0);

svm->setGamma(2.0);

svm->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, 100, 1e-6));

svm->train(tdata);
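The CHI2 kernel selected above is documented by OpenCV as an exponential chi-square kernel, K(x, y) = exp(-gamma * chi2(x, y)) with chi2 built from the terms (x_k - y_k)^2 / (x_k + y_k). The sketch below evaluates that formula in plain C++ to show what gamma controls; it is an illustration of the documented formula, not OpenCV's implementation, and `expChi2Kernel` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Exponential chi-square kernel:
//   K(x, y) = exp(-gamma * sum_k (x_k - y_k)^2 / (x_k + y_k))
// Components whose sum is zero are skipped to avoid division by zero.
double expChi2Kernel(const std::vector<float>& x,
                     const std::vector<float>& y,
                     double gamma) {
    assert(x.size() == y.size());
    double chi2 = 0.0;
    for (std::size_t k = 0; k < x.size(); ++k) {
        double diff = static_cast<double>(x[k]) - static_cast<double>(y[k]);
        double sum = static_cast<double>(x[k]) + static_cast<double>(y[k]);
        if (sum != 0.0) chi2 += diff * diff / sum;
    }
    return std::exp(-gamma * chi2);
}
```

Identical samples give a kernel value of 1, and the value decays toward 0 as samples move apart, faster for larger gamma. According to the OpenCV documentation, nu is used by the NU_SVC, ONE_CLASS and NU_SVR types and degree by the POLY kernel, so with C_SVC and CHI2 the kernel setting that takes effect in the listing above is gamma.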

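Prediction

Before predicting, the input image must be preprocessed and the features of each object extracted in the same way as for training. Each feature vector is then classified with svm->predict.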

for(int i=0; i< features.size(); i++){

cout << "Data Area AR: " << features[i][0] << " " << features[i][1] << endl;

Mat trainingDataMat(1, 2, CV_32FC1, &features[i][0]);

cout << "Features to predict: " << trainingDataMat << endl;

float result= svm->predict(trainingDataMat);

cout << result << endl;

stringstream ss;

Scalar color;

if(result==0){

color= green; // NUT

ss << "NUT";

}

else if(result==1){

color= blue; // RING

ss << "RING" ;

}

else if(result==2){

color= red; // SCREW

ss << "SCREW";

}

putText(img_output,

ss.str(),

Point2d(pos_left[i], pos_top[i]),

FONT_HERSHEY_SIMPLEX,

0.4,

color);

}
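The samples set aside in testData and testResponsesData are not used in the listings shown in this post. One plausible use is to estimate the classifier's error on data it was not trained on. The sketch below assumes the predicted labels have already been collected into a vector (for example, one svm->predict call per test row); `errorPercent` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Percentage of test samples whose predicted label differs from the
// expected label. Labels are stored as float, matching testResponsesData.
double errorPercent(const std::vector<float>& predicted,
                    const std::vector<float>& expected) {
    assert(predicted.size() == expected.size());
    assert(!expected.empty());

    std::size_t wrong = 0;
    for (std::size_t i = 0; i < expected.size(); ++i) {
        if (predicted[i] != expected[i]) ++wrong;
    }
    return 100.0 * static_cast<double>(wrong) /
           static_cast<double>(expected.size());
}
```

With labels 0 (NUT), 1 (RING) and 2 (SCREW), one wrong prediction out of four test samples yields an error of 25 percent.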

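This article describes how to use the OpenCV library for image preprocessing, including filtering and contour detection, and then extract features such as area and aspect ratio. An SVM (support vector machine) is trained on these features and used for prediction in an image-recognition task. The article emphasizes the selection of regions of interest and the role of feature vectors in training and prediction.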
