1 Title

KAN: Kolmogorov–Arnold Networks (Ziming Liu, Yixuan Wang, Sachin Vaidya, Fabian Ruehle, James Halverson, Marin Soljačić, Thomas Y. Hou, Max Tegmark) [2024]

2 Conclusion

Inspired by the Kolmogorov-Arnold representation theorem, this study proposes Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). MLPs have fixed activation functions on nodes ("neurons"), whereas KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all: every weight parameter is replaced by a univariate function parametrized as a spline. In terms of accuracy, much smaller KANs can match or outperform much larger MLPs in data fitting and PDE solving. Both theoretically and empirically, KANs exhibit faster neural scaling laws than MLPs.
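To make "every weight is a univariate function parametrized as a spline" concrete, here is a minimal NumPy sketch of a single learnable edge function. It follows the residual form described in the KAN paper as I understand it: φ(x) = w_b·silu(x) + w_s·Σ c_i B_i(x), with B-spline bases on a grid of G intervals and spline order k. The helper names (`make_grid`, `bspline_basis`, `edge_function`) and the choices G = 5, k = 3 on [-1, 1] are my own for illustration. This is not the authors' implementation.

```python
import numpy as np

def make_grid(a, b, G, k):
    """Uniform knots for G intervals on [a, b], extended by k knots on each side."""
    h = (b - a) / G
    return a + h * np.arange(-k, G + k + 1)

def bspline_basis(x, grid, k):
    """Evaluate all order-k B-spline bases at x via the Cox-de Boor recursion.

    Returns shape (len(x), len(grid) - k - 1), i.e. G + k bases for make_grid.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))[:, None]
    # order 0: indicator of each half-open knot interval [t_i, t_{i+1})
    B = ((x >= grid[:-1]) & (x < grid[1:])).astype(float)
    for p in range(1, k + 1):
        left = (x - grid[:-(p + 1)]) / (grid[p:-1] - grid[:-(p + 1)]) * B[:, :-1]
        right = (grid[p + 1:] - x) / (grid[p + 1:] - grid[1:-p]) * B[:, 1:]
        B = left + right
    return B

def silu(x):
    return x / (1.0 + np.exp(-x))

def edge_function(x, coef, grid, k, w_b=1.0, w_s=1.0):
    """One learnable 'weight': phi(x) = w_b * silu(x) + w_s * sum_i coef[i] * B_i(x)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    return w_b * silu(x) + w_s * (bspline_basis(x, grid, k) @ coef)

G, k = 5, 3
grid = make_grid(-1.0, 1.0, G, k)
coef = np.zeros(G + k)            # G + k = 8 trainable coefficients for this edge
x = np.linspace(-1.0, 0.9, 5)
print(bspline_basis(x, grid, k).shape)                         # (5, 8)
print(np.allclose(edge_function(x, coef, grid, k), silu(x)))  # True: spline part is zero
```

Training a KAN means fitting `coef` (and `w_b`, `w_s`) for every edge. This is why a KAN edge holds G + k numbers where an MLP edge holds one.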

3 Good Sentences

1. For interpretability, KANs can be intuitively visualized and can easily interact with human users. Through two examples in mathematics and physics, KANs are shown to be useful "collaborators" that help scientists (re)discover mathematical and physical laws. In summary, KANs are promising alternatives to MLPs, opening opportunities for further improving today's deep learning models, which rely heavily on MLPs. (The significance of KAN's contribution)

2. However, are MLPs the best nonlinear regressors we can build? Despite their prevalent use, MLPs have significant drawbacks. In transformers, for example, MLPs consume almost all non-embedding parameters and are typically less interpretable (relative to attention layers) without post-analysis tools. (Disadvantages of MLPs)

3. Despite their elegant mathematical interpretation, KANs are nothing more than combinations of splines and MLPs, leveraging their respective strengths and avoiding their respective weaknesses. Splines are accurate for low-dimensional functions, easy to adjust locally, and able to switch between different resolutions. However, splines suffer from a serious curse of dimensionality (COD) because they cannot exploit compositional structures. (How splines and MLPs differ, and what each contributes to KANs)
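Two of the spline properties named above can be seen numerically in a short sketch. The first is local adjustability. A piecewise-linear spline (evaluated with `np.interp`) is defined by its values at the knots, and changing one of those values only moves the curve on the two neighbouring grid intervals. The second is the curse of dimensionality. A tensor-product spline on a d-dimensional grid needs one coefficient per grid node, so its size grows as n_knots ** d. The test function sin(πx), the 11-knot grid, and the helper `tensor_spline_coeffs` are hypothetical choices for illustration. The paper itself uses cubic B-splines.

```python
import numpy as np

# Local adjustment: a piecewise-linear spline given by its values at the knots
knots = np.linspace(-1.0, 1.0, 11)          # 10 intervals of width 0.2
values = np.sin(np.pi * knots)               # coefficients = values at the knots
x = np.linspace(-1.0, 1.0, 401)

before = np.interp(x, knots, values)
edited = values.copy()
edited[5] += 0.5                             # nudge only the coefficient at knot 0.0
after = np.interp(x, knots, edited)

changed = x[~np.isclose(before, after)]
print(changed.min(), changed.max())          # all changes lie strictly inside (-0.2, 0.2)

# Curse of dimensionality: a tensor-product grid needs n_knots ** d coefficients
def tensor_spline_coeffs(d, n_knots=11):
    return n_knots ** d

for d in (1, 2, 5, 10):
    print(d, tensor_spline_coeffs(d))        # 11, 121, 161051, 25937424601
```

KANs sidestep the second problem by using only one-dimensional splines on edges and leaving the composition across dimensions to the MLP-like network structure.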

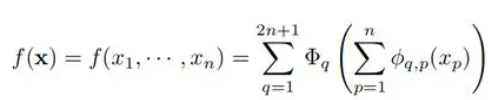

This method originates from the Kolmogorov-Arnold representation formula:
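The formula itself did not survive in this copy (it was likely an image). For reference, below is the standard statement of the Kolmogorov-Arnold representation theorem, written in the notation the KAN paper uses as far as I recall it. It is followed by the KAN layer rule, which generalizes the inner sum to arbitrary widths and depths.

```latex
% Kolmogorov-Arnold representation theorem: any continuous f : [0,1]^n \to \mathbb{R}
% can be written using only continuous univariate functions and addition
f(x_1, \dots, x_n) = \sum_{q=1}^{2n+1} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right),
\qquad \phi_{q,p} : [0,1] \to \mathbb{R}, \quad \Phi_q : \mathbb{R} \to \mathbb{R}

% KAN layer: node j of layer l+1 sums the learnable edge functions
% applied to every node i of layer l (n_l nodes in layer l)
x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i})
```

Read as a network, the theorem is a two-layer KAN with shape [n, 2n+1, 1]. KANs keep this "univariate functions plus sums" structure but stack more and wider layers.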

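If the formula is hard to follow, see the Zhihu article "KAN: Kolmogorov–Arnold Networks背后的原理" ("The principles behind KAN: Kolmogorov–Arnold Networks"). I don't really understand the mathematical side myself.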

The idea behind KAN: learn activation functions instead of weights

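Let the machine learn the best activation function for each edge (each connection between neurons) instead of having humans decide which activation function to use. For people who build models, this simply means taking an MLP and replacing its weights with spline functions.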
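To make "replace the MLP's weights with spline functions" concrete, here is a minimal sketch contrasting one MLP layer with one KAN layer. The names `mlp_layer` and `kan_layer`, the 3→2 layer size, the tanh activation, and the use of piecewise-linear splines via `np.interp` are my own simplifications for illustration. The paper uses cubic B-splines plus a SiLU residual term.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out = 3, 2

# MLP layer: one scalar weight per edge, fixed nonlinearity (tanh here) on nodes
W = rng.normal(size=(n_out, n_in))
b = np.zeros(n_out)

def mlp_layer(x):
    return np.tanh(W @ x + b)

# KAN layer: one learnable univariate function phi_{j,i} per edge, nodes just sum.
# Simplification: each phi_{j,i} is a piecewise-linear spline stored as its
# values on a shared grid over [-1, 1].
grid = np.linspace(-1.0, 1.0, 6)
values = rng.normal(size=(n_out, n_in, grid.size))   # learnable coefficients

def kan_layer(x, values):
    out = np.zeros(values.shape[0])
    for j in range(values.shape[0]):
        for i in range(values.shape[1]):
            out[j] += np.interp(x[i], grid, values[j, i])   # phi_{j,i}(x_i)
    return out

x = np.array([0.1, -0.5, 0.7])
print(mlp_layer(x).shape, kan_layer(x, values).shape)   # (2,) (2,)
print(W.size + b.size, values.size)                     # 8 36

# A linear weight is a special case: phi_{j,i}(x) = W[j, i] * x gives back W @ x
print(np.allclose(kan_layer(x, W[:, :, None] * grid), W @ x))   # True
```

The last line shows that an ordinary linear weight is just one particular edge function a KAN can learn. The price of this flexibility is more parameters per edge (here 6 instead of 1).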
