PyTorch version

import torch.nn as nn


def Conv3x3BNReLU(in_channels, out_channels, stride, groups):
    # 3x3 convolution -> BatchNorm -> ReLU6; groups == in_channels makes it depthwise
    return nn.Sequential(
        nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3, stride=stride, padding=1, groups=groups),
        nn.BatchNorm2d(out_channels),
        nn.ReLU6(inplace=True)
    )

def Conv1x1BNReLU(in_channels, out_channels):
    # 1x1 (pointwise) convolution -> BatchNorm -> ReLU6
    return nn.Sequential(
        nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1),
        nn.BatchNorm2d(out_channels),
        nn.ReLU6(inplace=True)
    )

def Conv1x1BN(in_channels, out_channels):
    # 1x1 (pointwise) convolution -> BatchNorm, with no activation (linear projection)
    return nn.Sequential(
        nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1),
        nn.BatchNorm2d(out_channels)
    )
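The `groups` argument of `Conv3x3BNReLU` decides whether the 3x3 convolution is a standard or a depthwise one. The difference in weight count can be worked out by hand. The following is a minimal sketch that ignores bias and BatchNorm parameters; `conv_weight_count` is a hypothetical helper introduced only for this illustration, and 192 channels is an assumed example width (32 channels expanded by a factor of 6).

```python
def conv_weight_count(in_channels, out_channels, kernel_size, groups=1):
    """Number of weights in a 2D convolution, ignoring bias (hypothetical helper)."""
    # Each output filter only sees in_channels // groups input channels.
    return out_channels * (in_channels // groups) * kernel_size * kernel_size


channels = 192  # assumed example: 32 input channels expanded by a factor of 6

standard = conv_weight_count(channels, channels, 3, groups=1)
depthwise = conv_weight_count(channels, channels, 3, groups=channels)

print(standard)   # 331776
print(depthwise)  # 1728
```

With `groups` equal to the channel count, each filter covers a single input channel, so the 3x3 layer here needs 192 times fewer weights than a standard convolution of the same width.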

class InvertedResidual(nn.Module):
    def __init__(self, in_channels, out_channels, stride, expansion_factor=6):
        super(InvertedResidual, self).__init__()
        self.stride = stride
        mid_channels = in_channels * expansion_factor

        # Expand (1x1) -> depthwise (3x3) -> linear projection (1x1, no activation)
        self.bottleneck = nn.Sequential(
            Conv1x1BNReLU(in_channels, mid_channels),
            Conv3x3BNReLU(mid_channels, mid_channels, stride, groups=mid_channels),
            Conv1x1BN(mid_channels, out_channels)
        )

        # The shortcut branch exists only when the spatial size is preserved
        if self.stride == 1:
            self.shortcut = Conv1x1BN(in_channels, out_channels)

    def forward(self, x):
        out = self.bottleneck(x)
        out = (out + self.shortcut(x)) if self.stride == 1 else out
        return out
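The shortcut is added only when `stride == 1` because a stride of 2 halves the spatial size of the bottleneck output, which then no longer matches the input. The sketch below checks this with the standard convolution output-size formula. `conv_output_size` is a hypothetical helper, and the 56x56 input size is an assumed example.

```python
def conv_output_size(size, kernel_size, stride, padding):
    """Spatial output size of a convolution along one dimension (floor division)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# kernel_size=3 and padding=1 match Conv3x3BNReLU; 56 is an illustrative input size
same_size = conv_output_size(56, kernel_size=3, stride=1, padding=1)
half_size = conv_output_size(56, kernel_size=3, stride=2, padding=1)

print(same_size)  # 56: matches the input, so out + shortcut(x) is well defined
print(half_size)  # 28: differs from the 56x56 input, so the shortcut is skipped
```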

Keras version

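# Assumption: the tf.keras API is used; the original does not show its imports
from tensorflow.keras import backend as K
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D, DepthwiseConv2D, ZeroPadding2D


def relu6(x):
    # ReLU clipped at 6
    return K.relu(x, max_value=6)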

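# Ensure the number of feature channels is a multiple of 8
def make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    # Round v to the nearest multiple of divisor (// is floor division)
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Do not let rounding reduce v by more than 10%
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v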
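A few worked values make the rounding rule easier to follow. The sketch below repeats `make_divisible` so that it runs on its own; the input values are arbitrary examples chosen to hit each branch.

```python
def make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


print(make_divisible(24, 8))  # 24: already a multiple of 8
print(make_divisible(30, 8))  # 32: rounded to the nearest multiple of 8
print(make_divisible(10, 8))  # 16: rounding to 8 would lose more than 10%, so one divisor is added
print(make_divisible(3, 8))   # 8: never smaller than min_value
```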

def pad_size(inputs, kernel_size):
    # Explicit zero padding for a stride-2 convolution, given as
    # ((top, bottom), (left, right)); assumes channels-last input (N, H, W, C)
    input_size = inputs.shape[1:3]
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)
    if input_size[0] is None:
        adjust = (1, 1)
    else:
        adjust = (1 - input_size[0] % 2, 1 - input_size[1] % 2)
    correct = (kernel_size[0] // 2, kernel_size[1] // 2)
    return ((correct[0] - adjust[0], correct[0]),
            (correct[1] - adjust[1], correct[1]))
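The padding arithmetic can be checked without Keras. This sketch applies the same formulas to plain integers and then computes the size produced by a stride-2 `'valid'` convolution on the padded input. `pad_for_shape` and `valid_conv_output` are hypothetical helpers, and the 224 and 113 input sizes are assumed examples of an even and an odd dimension.

```python
def pad_for_shape(height, width, kernel_size):
    """Same arithmetic as pad_size, but on plain integers (hypothetical helper)."""
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)
    adjust = (1 - height % 2, 1 - width % 2)
    correct = (kernel_size[0] // 2, kernel_size[1] // 2)
    return ((correct[0] - adjust[0], correct[0]),
            (correct[1] - adjust[1], correct[1]))


def valid_conv_output(size, pad, kernel_size, stride):
    """Output size of a 'valid' convolution applied after explicit zero padding."""
    return (size + pad[0] + pad[1] - kernel_size) // stride + 1


even_pad = pad_for_shape(224, 224, 3)
odd_pad = pad_for_shape(113, 113, 3)

print(even_pad)  # ((0, 1), (0, 1))
print(odd_pad)   # ((1, 1), (1, 1))
print(valid_conv_output(224, even_pad[0], 3, 2))  # 112
print(valid_conv_output(113, odd_pad[0], 3, 2))   # 57
```

In both cases the output size equals the input size divided by 2 and rounded up, so even inputs get one pixel less padding on the top and left than odd inputs.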

def conv_block(x, nb_filter, kernel=(1, 1), stride=(1, 1), name=None):
    # Convolution -> BatchNorm -> ReLU6; name is used as a layer-name prefix
    x = Conv2D(nb_filter, kernel, strides=stride, padding='same', use_bias=False, name=name + '_expand')(x)
    x = BatchNormalization(axis=3, name=name + '_expand_BN')(x)
    x = Activation(relu6, name=name + '_expand_relu')(x)
    return x

def depthwise_res_block(x, nb_filter, kernel, stride, t, alpha, resdiual=False, name=None):
    input_tensor = x
    exp_channels = x.shape[-1] * t  # expanded channel count
    alpha_channels = int(nb_filter * alpha)  # channel count after width scaling (compression)

    # 1x1 expansion
    x = conv_block(x, exp_channels, (1, 1), (1, 1), name=name)

    # For stride 2, pad explicitly and use a 'valid' depthwise convolution
    if stride[0] == 2:
        x = ZeroPadding2D(padding=pad_size(x, 3), name=name + '_pad')(x)

    x = DepthwiseConv2D(kernel, padding='same' if stride[0] == 1 else 'valid', strides=stride, depth_multiplier=1, use_bias=False, name=name + '_depthwise')(x)
    x = BatchNormalization(axis=3, name=name + '_depthwise_BN')(x)
    x = Activation(relu6, name=name + '_depthwise_relu')(x)

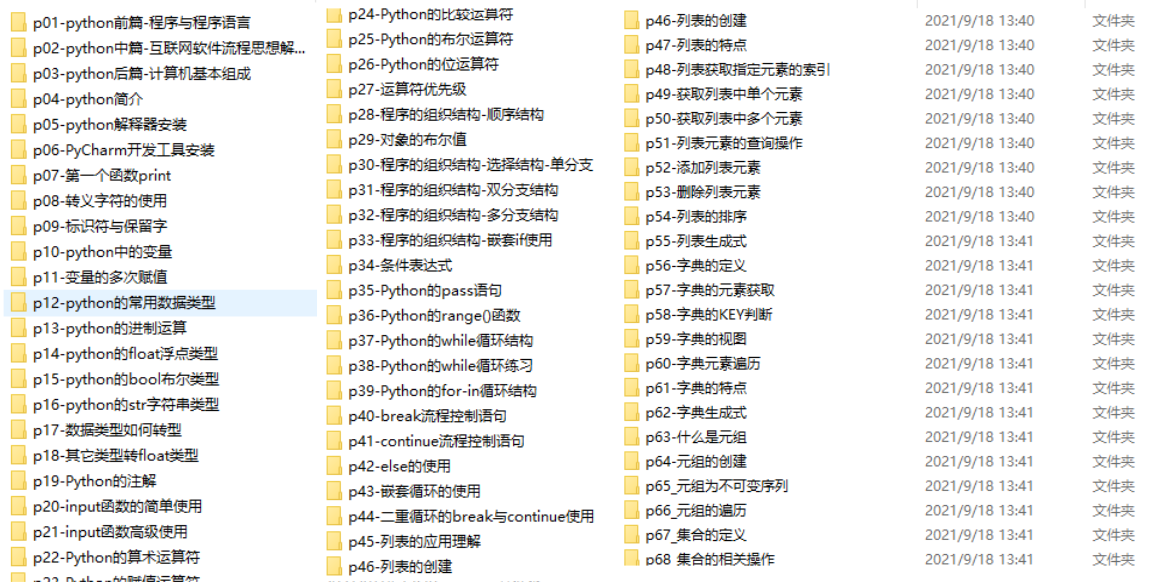
