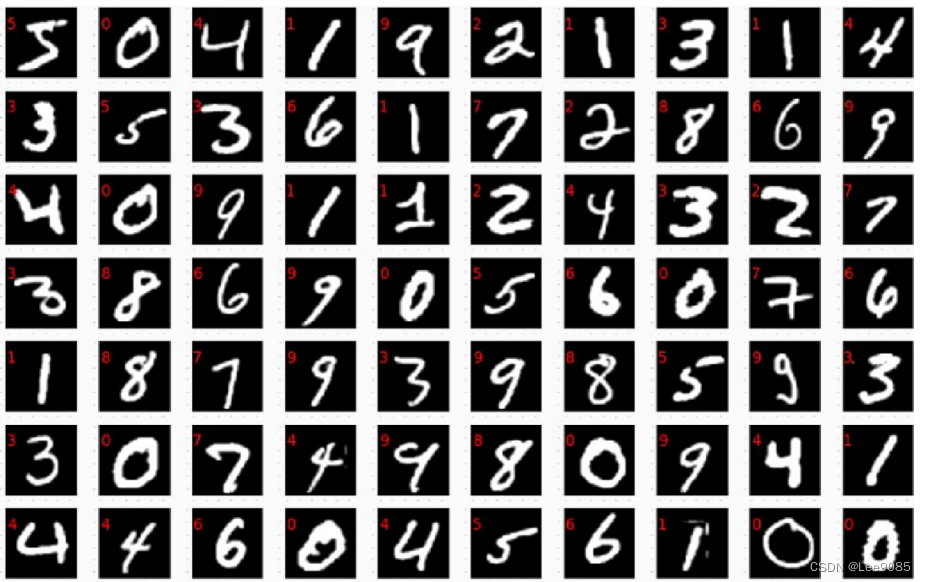

Introduction to the MNIST dataset

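The MNIST dataset used here contains 60,000 images of handwritten digits in 10 classes (the digits 0 to 9). Each image is 28×28 pixels. Some of the handwritten digit images are shown below:

Of these, 50,000 images (5,000 per class) are used as the training set and the remaining 10,000 images as the test set.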

Dataset link: https://pan.quark.cn/s/03a502977f21
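The 50,000/10,000 split comes from taking a fixed number of images out of every class folder. The following is a minimal sketch of that mechanism. It does not use the real MNIST files: it assumes a tiny synthetic dataset (3 classes with 8 random images each) written to a hypothetical scratch folder `toy_digits` under `tempdir`.

```matlab
% Build a tiny synthetic dataset so the sketch runs without the MNIST download.
% The folder name 'toy_digits' and the random images are illustrative assumptions.
rootDir = fullfile(tempdir, 'toy_digits');
if exist(rootDir, 'dir')
    rmdir(rootDir, 's');                   % start from a clean folder
end
classNames = {'0', '1', '2'};
imagesPerClass = 8;
for c = 1:numel(classNames)
    classDir = fullfile(rootDir, classNames{c});
    mkdir(classDir);
    for k = 1:imagesPerClass
        img = uint8(255 * rand(28, 28));   % random 28x28 grayscale image
        imwrite(img, fullfile(classDir, sprintf('%02d.png', k)));
    end
end

% Label each image by the name of the folder it sits in.
imds = imageDatastore(rootDir, 'IncludeSubfolders', true, 'LabelSource', 'foldernames');

% Take 5 images per class for training; the remainder goes to validation.
numTrainFiles = 5;
[imdsTrain, imdsValidation] = splitEachLabel(imds, numTrainFiles, 'randomize');

trainCounts = countEachLabel(imdsTrain);
valCounts = countEachLabel(imdsValidation);
disp(trainCounts);
disp(valCounts);
```

With the real dataset, `numTrainFiles = 5000` over 10 classes gives 50,000 training images, and whatever is left in each class folder goes to the validation set. The training set is balanced by construction. The validation set is balanced only if every class folder holds the same number of images.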

Building a multilayer perceptron model

Code:

clear

clc

% Import the dataset (one subfolder per digit class)

datasetRoot = './mnist_train_jpg_60000';

digitDatasetPath = fullfile(datasetRoot);

imds = imageDatastore(digitDatasetPath,'IncludeSubfolders',true,'LabelSource','foldernames');

% Split the dataset: 5000 images per class for training, the rest for validation

numTrainFiles = 5000;

[imdsTrain,imdsValidation] = splitEachLabel(imds,numTrainFiles,'randomize');

% Create the model

layers = [

imageInputLayer([28 28 1],"Name","imageinput")

fullyConnectedLayer(128,'Name',"fc")

batchNormalizationLayer("Name","batchnorm")

reluLayer("Name","relu")

fullyConnectedLayer(64,'Name',"fc_1")

batchNormalizationLayer("Name","batchnorm_1")

reluLayer("Name","relu_1")

fullyConnectedLayer(32,'Name',"fc_2")

batchNormalizationLayer("Name","batchnorm_2")

reluLayer("Name","relu_2")

fullyConnectedLayer(10,"Name","fc_3")

softmaxLayer("Name","softmax")

classificationLayer("Name","classoutput")];

% Training options

options = trainingOptions('sgdm', ...

'InitialLearnRate',0.01, ...

'MaxEpochs',4, ...

'Shuffle','every-epoch', ...

'ValidationData',imdsValidation, ...

'ValidationFrequency',30, ...

'Verbose',false, ...

'Plots','training-progress');

% Train the network and print the final validation accuracy

net = trainNetwork(imdsTrain,layers,options);

YPred = classify(net,imdsValidation);

YValidation = imdsValidation.Labels;

accuracy = sum(YPred == YValidation)/numel(YValidation);

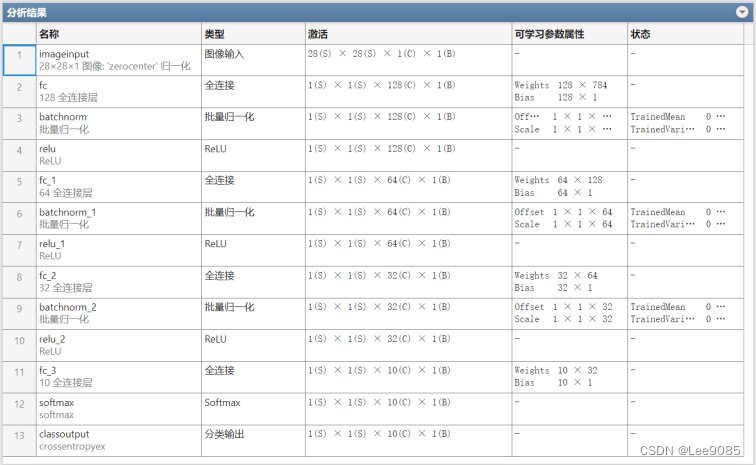

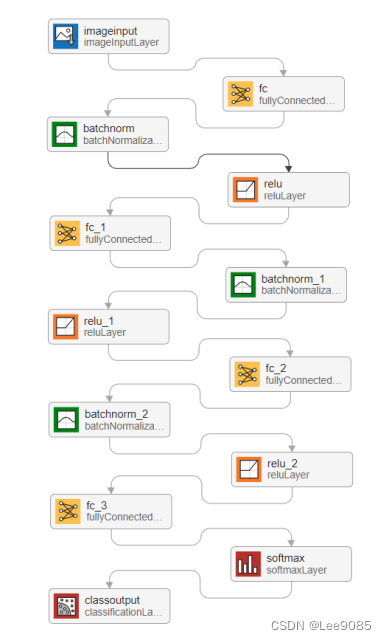

disp(accuracy);模型结构:

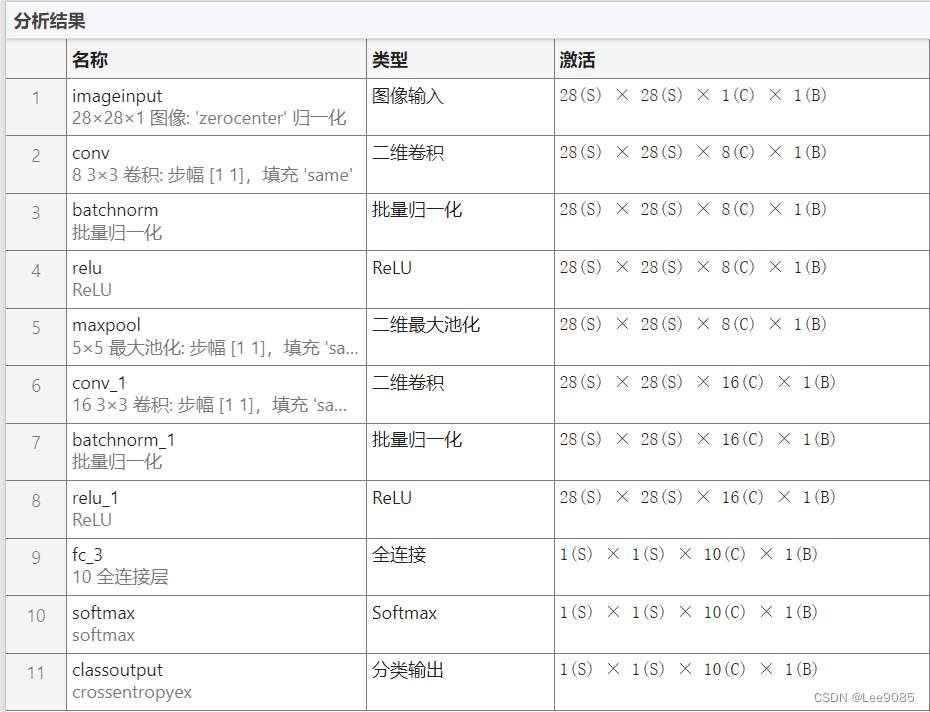

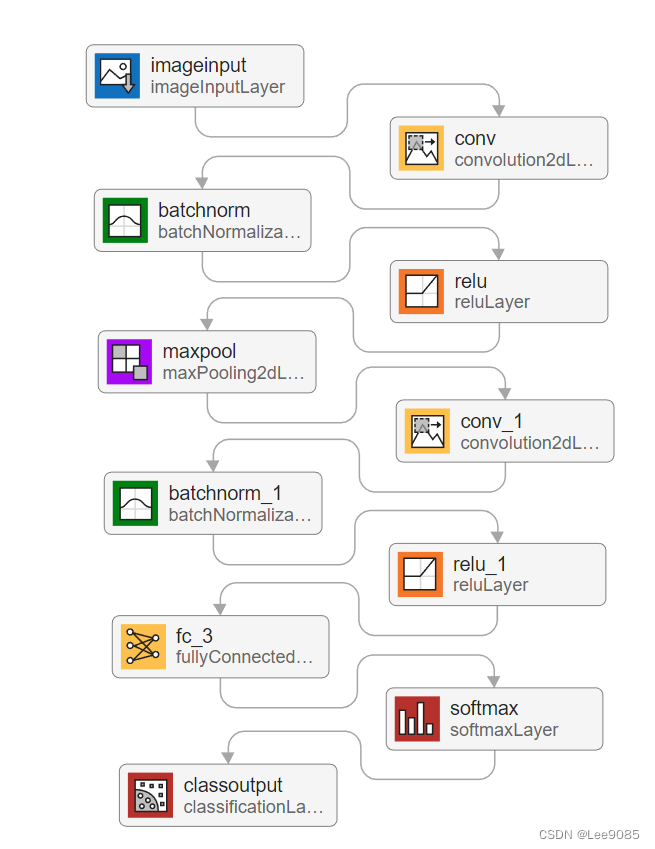

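The resulting model takes a 28×28×1 image as input and has ten outputs, one for each digit class. It consists of an input layer (imageInputLayer), fully connected layers (fullyConnectedLayer), batch normalization layers (batchNormalizationLayer), activation functions (reluLayer), a softmax layer (softmaxLayer), and a classification layer (classificationLayer). The details of each layer and the order in which they are connected are shown below: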

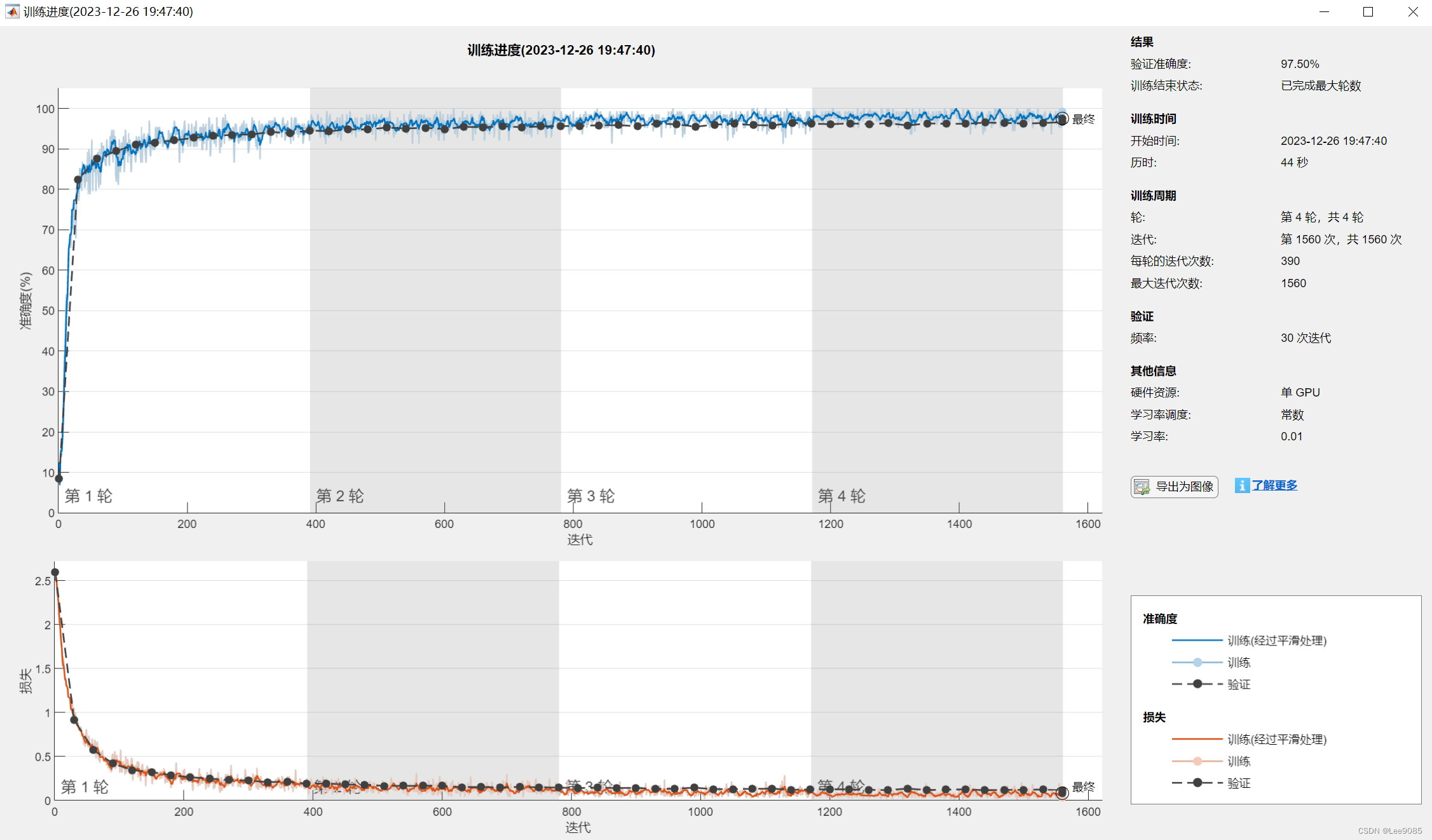
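To make the layer sizes concrete, the learnable parameters of this perceptron can be counted by hand. The sketch below is plain arithmetic. It assumes that each fully connected layer has one weight per input-output pair plus one bias per output, and that each batch normalization layer has one scale and one offset per channel. The variable names are illustrative.

```matlab
% Count learnable parameters of the 784-128-64-32-10 perceptron by hand.
inputSize = 28 * 28 * 1;                    % flattened input image
widths = [128 64 32 10];                    % outputs of fc, fc_1, fc_2, fc_3
hasBatchNorm = [true true true false];      % fc_3 feeds softmax directly

fanIn = inputSize;
totalParams = 0;
for i = 1:numel(widths)
    fcParams = fanIn * widths(i) + widths(i);     % weights + biases
    bnParams = 2 * widths(i) * hasBatchNorm(i);   % scale + offset, if present
    fprintf('layer %d: fc = %d, batchnorm = %d\n', i, fcParams, bnParams);
    totalParams = totalParams + fcParams + bnParams;
    fanIn = widths(i);                            % next layer's input width
end
fprintf('total learnable parameters: %d\n', totalParams);
```

Most of the parameters sit in the first fully connected layer, because it is the only one connected to all 784 input pixels.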

Results:
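When reading the training-progress plot, it helps to know how many iterations the run contains. The sketch below is a rough estimate, not output from the author's run. It assumes the default mini-batch size of 128 (the script does not set `MiniBatchSize`) and that an incomplete final mini-batch in each epoch is dropped.

```matlab
% Rough training-schedule arithmetic under the stated assumptions.
numTrainImages = 5000 * 10;      % numTrainFiles per class x 10 classes
miniBatchSize = 128;             % assumed default, not set in the script
maxEpochs = 4;
validationFrequency = 30;

iterationsPerEpoch = floor(numTrainImages / miniBatchSize);
totalIterations = iterationsPerEpoch * maxEpochs;
periodicValidations = floor(totalIterations / validationFrequency);

fprintf('iterations per epoch: %d\n', iterationsPerEpoch);
fprintf('total iterations:     %d\n', totalIterations);
fprintf('periodic validations: %d\n', periodicValidations);
```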

Building a convolutional neural network:

Code:

clear

clc

datasetRoot = './mnist_train_jpg_60000';

digitDatasetPath = fullfile(datasetRoot);

imds = imageDatastore(digitDatasetPath,'IncludeSubfolders',true,'LabelSource','foldernames');

numTrainFiles = 5000;

[imdsTrain,imdsValidation] = splitEachLabel(imds,numTrainFiles,'randomize');

layers = [

imageInputLayer([28 28 1],"Name","imageinput")

convolution2dLayer([3 3],8,"Name","conv","Padding","same")

batchNormalizationLayer("Name","batchnorm")

reluLayer("Name","relu")

maxPooling2dLayer([5 5],"Name","maxpool","Padding","same")

convolution2dLayer([3 3],16,"Name","conv_1","Padding","same")

batchNormalizationLayer("Name","batchnorm_1")

reluLayer("Name","relu_1")

fullyConnectedLayer(10,"Name","fc_3")

softmaxLayer("Name","softmax")

classificationLayer("Name","classoutput")];

options = trainingOptions('sgdm', ...

'InitialLearnRate',0.01, ...

'MaxEpochs',4, ...

'Shuffle','every-epoch', ...

'ValidationData',imdsValidation, ...

'ValidationFrequency',30, ...

'Verbose',false, ...

'Plots','training-progress');

net = trainNetwork(imdsTrain,layers,options);

YPred = classify(net,imdsValidation);

YValidation = imdsValidation.Labels;

accuracy = sum(YPred == YValidation)/numel(YValidation);

disp(accuracy);

Model structure:

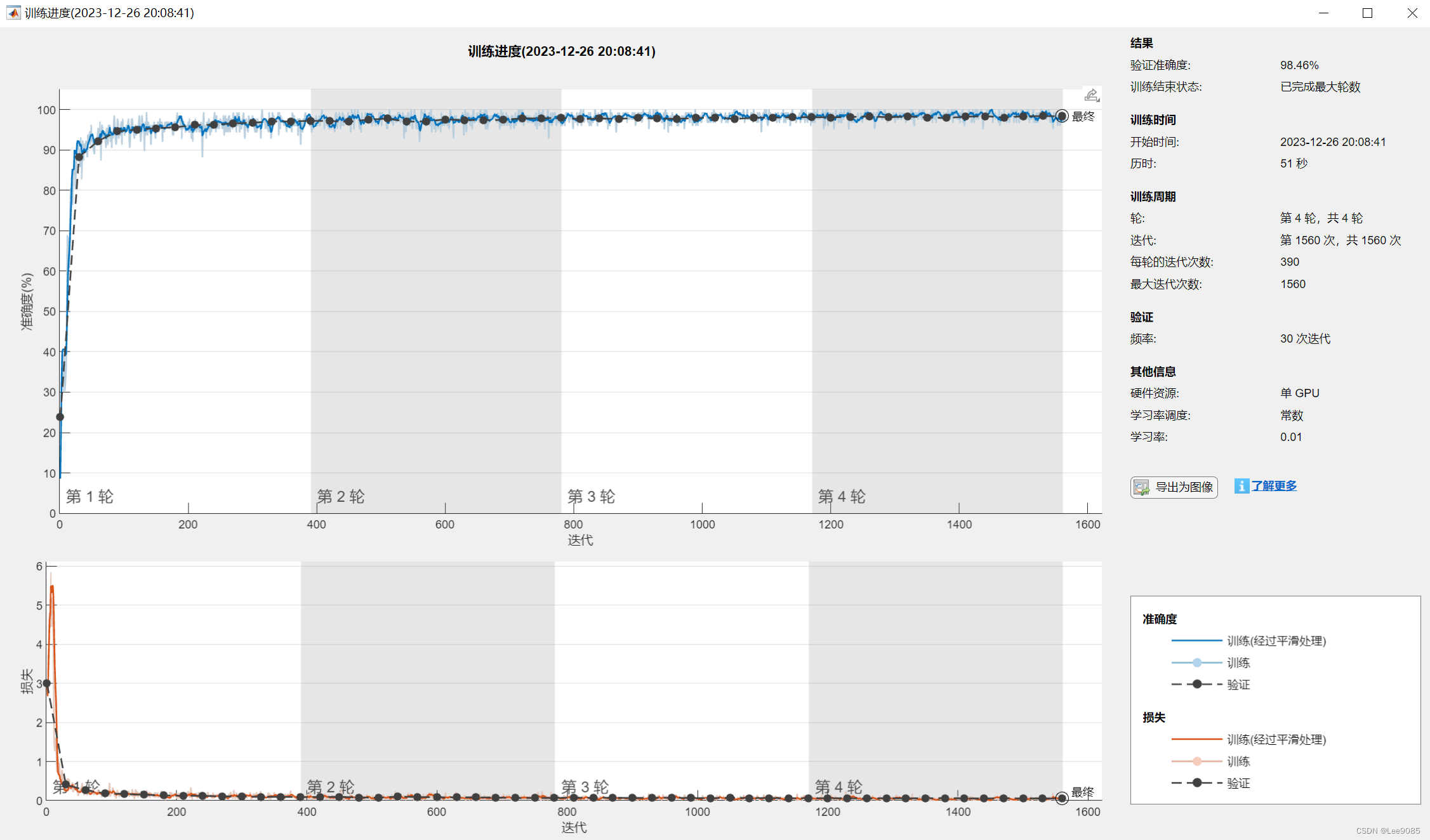
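The feature-map sizes and parameter counts of this network can also be traced by hand. The sketch below assumes stride 1 for both convolutions and for the pooling layer, since the script sets no `Stride`. With `'same'` padding the spatial size then stays at 28×28 throughout. Variable names are illustrative.

```matlab
% Trace feature-map size and learnable parameters through the CNN by hand.
h = 28; w = 28; c = 1;                 % input image: 28x28x1
totalParams = 0;

% conv: 3x3 kernels, 8 filters, 'same' padding, stride 1 -> size unchanged
convParams = 3 * 3 * c * 8 + 8;        % weights + biases
c = 8;
bnParams = 2 * c;                      % scale + offset per channel
totalParams = totalParams + convParams + bnParams;

% maxpool: 5x5 window, 'same' padding, stride assumed to be 1
poolStride = 1;
h = ceil(h / poolStride);
w = ceil(w / poolStride);              % no learnable parameters here

% conv_1: 3x3 kernels, 16 filters, 'same' padding, stride 1
conv1Params = 3 * 3 * c * 16 + 16;
c = 16;
bn1Params = 2 * c;
totalParams = totalParams + conv1Params + bn1Params;

% fc_3: every activation of the last feature map connects to 10 outputs
fcInputs = h * w * c;
fcParams = fcInputs * 10 + 10;
totalParams = totalParams + fcParams;

fprintf('feature map before fc_3: %dx%dx%d\n', h, w, c);
fprintf('conv = %d, conv_1 = %d, fc_3 = %d\n', convParams, conv1Params, fcParams);
fprintf('total learnable parameters: %d\n', totalParams);
```

Under these assumptions the pooling layer does not shrink the feature maps, so the final fully connected layer holds nearly all of the parameters.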

Results:
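Both scripts report a single overall accuracy. A per-class breakdown can show which digits are confused most often. The sketch below uses the same `YPred == YValidation` comparison, but on a handful of made-up labels. These values are purely illustrative and are not results from the networks above.

```matlab
% Made-up labels for illustration only (not output of the trained networks).
YValidation = categorical([0 0 1 1 2 2 2]');
YPred       = categorical([0 1 1 1 2 2 0]');

% Overall accuracy, computed as in the scripts.
accuracy = sum(YPred == YValidation) / numel(YValidation);

% Per-class accuracy: fraction of each true class that was predicted correctly.
classNames = categories(YValidation);
perClassAccuracy = zeros(numel(classNames), 1);
for i = 1:numel(classNames)
    isClass = (YValidation == classNames{i});
    perClassAccuracy(i) = sum(YPred(isClass) == classNames{i}) / sum(isClass);
end

fprintf('overall accuracy: %.4f\n', accuracy);
disp(table(classNames, perClassAccuracy));
```

With real predictions, `YValidation` and `YPred` would come from `imdsValidation.Labels` and `classify(net,imdsValidation)` as in the scripts.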
