★★★ This article is based on a featured project from the AI Studio community.

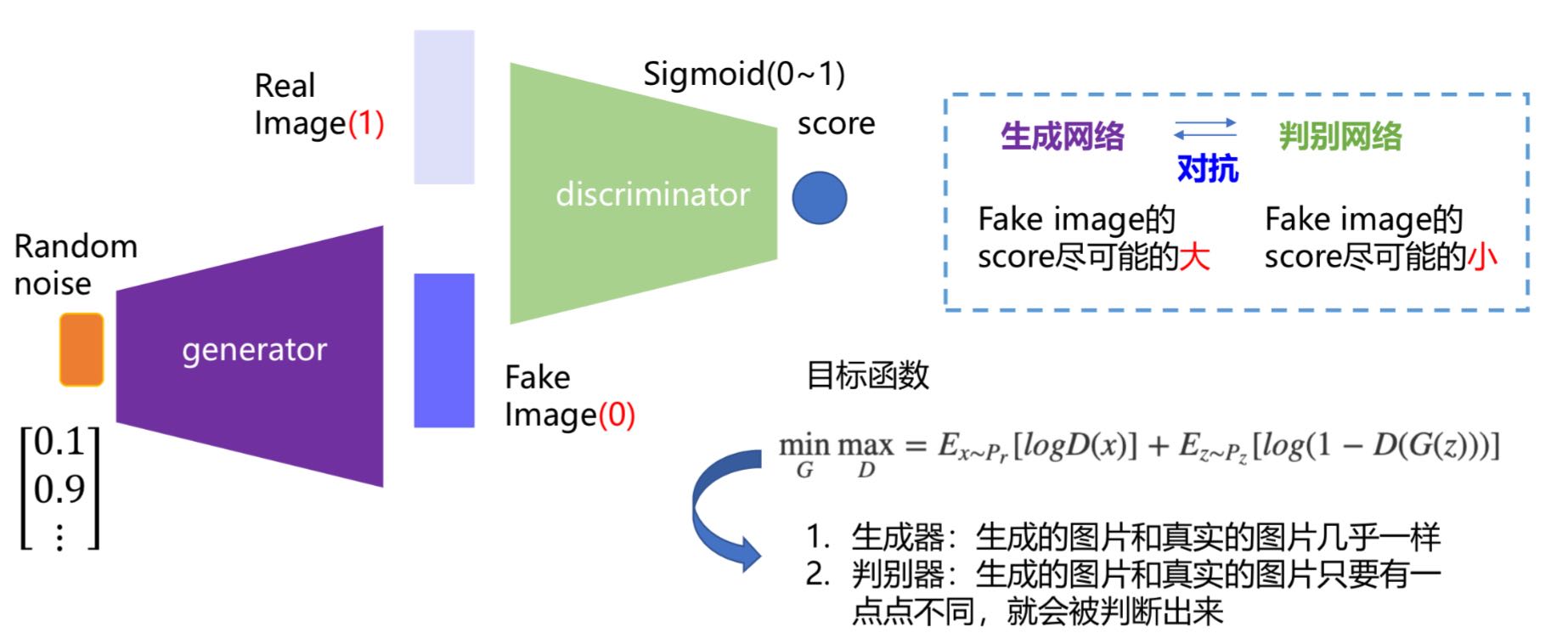

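I. Generative Adversarial Networks for Image Generation

Generative Adversarial Networks (GANs) are now widely used for image and video generation and have achieved very good results.

A GAN consists of two parts: a discriminator network and a generator network.

The discriminator judges whether its input is a fake image produced by the generator or a real image from the prepared dataset, while the generator produces samples that try to fool the discriminator. The two are trained alternately so that each pushes the other to improve, until we obtain a generator whose images can pass for real ones.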
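The alternating training described above is usually written as the minimax objective of the original GAN formulation, where $D(x)$ is the probability the discriminator assigns to $x$ being real and $G(z)$ is a sample generated from noise $z$:

$$
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

In practice the generator is often trained to maximize $\log D(G(z))$ instead (the "non-saturating" variant), which is what the comment `maximize log(D(G(z)))` in this project's training loop refers to.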

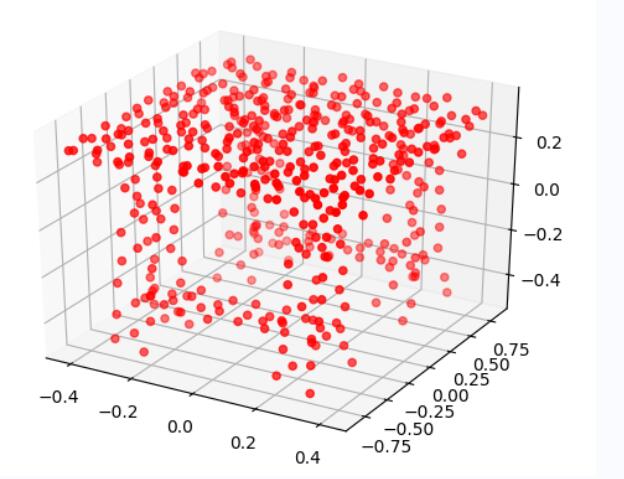

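II. Generative Adversarial Networks for 3D Model Generation

Since GANs can generate 2D images, can they also generate 3D models? Below, I apply the same approach to generate 3D point clouds.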

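1.1 Dataset: ModelNet10

This dataset contains 10 categories of CAD furniture models, such as bathtubs, beds, chairs, and tables.

Because the models in this dataset are described by mesh vertex coordinates (which tend to be sparse and concentrated at corners and edges), it is less suitable for training here, and its point plots do not show the shapes clearly.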

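3D object files are commonly stored as .off files; OFF is short for Object File Format. The file layout is:

OFF
vertex_count face_count edge_count
(vertex coordinates follow, one vertex per line)
x y z
x y z
…
(then one face per line: its vertex count, its vertex indices, and an optional RGB color)
n index_of_vertex_1 index_of_vertex_2 … index_of_vertex_n R G B
…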
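As a minimal sketch of reading this layout with only the Python standard library (`parse_off` is a hypothetical helper, not part of the project), the function below returns the vertex list and the per-face vertex indices, ignoring `#` comments and any trailing face colors. It also tolerates headers where the counts follow `OFF` on the same line, which some ModelNet files reportedly use.

```python
def parse_off(text):
    """Parse OFF text into (vertices, faces).

    Minimal sketch: assumes well-formed input, strips '#' comments and
    ignores any color values that follow a face's vertex indices.
    """
    lines = [ln.split("#", 1)[0].strip() for ln in text.splitlines()]
    lines = [ln for ln in lines if ln]
    if not lines or not lines[0].startswith("OFF"):
        raise ValueError("missing OFF header")
    # Counts are normally on the next line, but may follow "OFF" directly.
    rest = lines[0][3:].strip()
    if rest:
        counts, body = rest, lines[1:]
    else:
        counts, body = lines[1], lines[2:]
    n_vertices, n_faces, _n_edges = (int(t) for t in counts.split()[:3])
    vertices = [tuple(float(t) for t in body[i].split()[:3]) for i in range(n_vertices)]
    faces = []
    for line in body[n_vertices:n_vertices + n_faces]:
        parts = line.split()
        n = int(parts[0])  # number of vertices in this face
        faces.append(tuple(int(t) for t in parts[1:1 + n]))  # drop optional RGB values
    return vertices, faces


sample = """OFF
4 2 0
0 0 0
1 0 0
0 1 0
0 0 1
3 0 1 2
3 0 1 3 255 0 0
"""
vertices, faces = parse_off(sample)
print(len(vertices), faces)  # 4 [(0, 1, 2), (0, 1, 3)]
```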

# Import the packages needed by the project
import os
import zipfile

import numpy as np
from mpl_toolkits import mplot3d  # needed by older Matplotlib versions for projection='3d'
%matplotlib inline
import matplotlib.pyplot as plt

import paddle
from paddle.io import Dataset

# Extract the data
with zipfile.ZipFile('data/data108288/ModelNet10.zip') as zip_file:
    zip_file.extractall('data/data108288')

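1.2 Dataset: modelnet40_normal_resampled

This dataset contains 40 categories of 3D point-cloud models, such as bathtubs, beds, chairs, and tables.

It was produced by sampling points on the model surfaces, so it visualizes well and is better suited for training; it is the dataset used for the training below.

Each file stores one point per line as six comma-separated values:

x, y, z, nx, ny, nz (point coordinates followed by the surface normal)

…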
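A minimal standard-library sketch of parsing such a file, assuming each line holds six comma-separated values: the coordinates, then the surface normal (the "normal" in the dataset name). `parse_resampled` is a hypothetical helper; the project's own loader below keeps only the first three values.

```python
def parse_resampled(text):
    """Split each 'x,y,z,nx,ny,nz' line into a point and its normal."""
    points, normals = [], []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        values = [float(v) for v in line.split(",")]
        points.append(tuple(values[:3]))
        normals.append(tuple(values[3:6]))
    return points, normals


sample = "0.1,0.2,0.3,0.0,0.0,1.0\n-0.5,0.0,0.25,1.0,0.0,0.0\n"
points, normals = parse_resampled(sample)
print(points[1], normals[1])  # (-0.5, 0.0, 0.25) (1.0, 0.0, 0.0)
```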

# Extract the data
with zipfile.ZipFile('data/data50045/modelnet40_normal_resampled.zip') as zip_file:
    zip_file.extractall('data/data50045')

2. Data loading

The dataset contains 40 categories, and each category is trained separately; the table category is used as the example here.

# Build the file list for the table category
with open("data/data50045/trainList.txt", "w") as trainList:
    for root, dirs, files in os.walk("data/data50045/modelnet40_normal_resampled/table"):
        for file in files:
            if file.endswith(".txt"):
                trainList.write(os.path.join(root, file) + "\n")

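# Data processing: turn every file in the list into a [3, num_points] array
def ThreeDFolder2(DataList, num_points=None):
    with open(DataList, "r") as filenameList:
        filenames = [line.strip() for line in filenameList if line.strip()]
    # Number of points kept per file: by default, as many as there are files in
    # the list (this is the count the project uses); pass num_points to set it.
    if num_points is None:
        num_points = len(filenames)
    AllData = []
    for filename in filenames:
        view_data = []  # coordinates of the points read from this file
        with open(filename, 'r') as f:
            for _ in range(num_points):
                view_data.append(list(map(float, f.readline().split(','))))
        # Store the x, y and z coordinates of the points separately
        xdata = np.array([point[0] for point in view_data])
        ydata = np.array([point[1] for point in view_data])
        zdata = np.array([point[2] for point in view_data])
        data = np.array([xdata, ydata, zdata])  # shape [3, num_points]
        label = np.array(1)  # every real sample gets label 1
        AllData.append([data, label])
    return AllData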
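`ThreeDFolder2` turns each file into a `[3, N]` array, one row per coordinate axis and one column per point. This is the `[channels, length]` layout that `paddle.nn.Conv1D` expects by default (`[batch, 3, N]` after batching). A standard-library sketch of that transposition, using a hypothetical `to_channel_first` helper that keeps the first `num_points` rows as the loader does:

```python
def to_channel_first(rows, num_points):
    """Keep the first num_points [x, y, z, ...] rows and transpose them to 3 x N."""
    kept = [row[:3] for row in rows[:num_points]]  # drop any extra columns (e.g. normals)
    xs, ys, zs = (list(axis) for axis in zip(*kept))
    return [xs, ys, zs]


rows = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]]
print(to_channel_first(rows, 2))  # [[0.0, 3.0], [1.0, 4.0], [2.0, 5.0]]
```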

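# Point-cloud visualization
def show_3D(views):
    # views: array or tensor of shape [1, 3, N]; iterating yields the [3, N] cloud
    zdata = []
    xdata = []
    ydata = []
    for point in views:
        xdata.append(point[0])
        # swap y and z, presumably so the model's vertical axis is drawn upright
        ydata.append(point[2])
        zdata.append(point[1])
    xdata = np.array(xdata)
    ydata = np.array(ydata)
    zdata = np.array(zdata)
    # 3D scatter plot of the point cloud
    ax = plt.axes(projection='3d')
    ax.scatter3D(xdata, ydata, zdata, c='r')
    plt.show()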

Visualization example:

3. Dataset definition

# Build the Dataset class
class ModelNetDataset(Dataset):
    """
    Step 1: inherit from paddle.io.Dataset
    """
    def __init__(self, mode="Train"):
        """
        Step 2: implement the constructor, defining how data is read and how
        the training and test sets are split
        """
        super(ModelNetDataset, self).__init__()
        # train_dir = 'data/data108288/trainList.txt'
        train_dir = 'data/data50045/trainList.txt'
        self.mode = mode  # stored but unused: only a training list is built
        train_data_folder = ThreeDFolder2(DataList=train_dir)
        self.data = train_data_folder

    def __getitem__(self, index):
        """
        Step 3: implement __getitem__, defining how to fetch the sample at a
        given index and returning a single (data, label) pair
        """
        data = np.array(self.data[index][0]).astype('float32')
        label = np.array([self.data[index][1]]).astype('int64')
        return data, label

    def __len__(self):
        """
        Step 4: implement __len__, returning the total number of samples
        """
        return len(self.data)

train_dataset = ModelNetDataset(mode="Train")


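4. Building the discriminator

The discriminator is a fully convolutional network (only convolutions and adaptive pooling, no fully connected layers), so point clouds with different numbers of points can be fed in.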
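The size independence comes from `AdaptiveAvgPool1D`/`AdaptiveMaxPool1D`, which map any input length to a fixed `output_size`. The sketch below reimplements in plain Python the window rule commonly documented for adaptive average pooling (window `i` spans `floor(i*L/out)` to `ceil((i+1)*L/out)`); treat it as an illustration of the idea, not Paddle's exact kernel. It also upsamples when the input is shorter than `output_size`, as happens when `Block1024` receives the 988-long output of `conv11` for a 1000-point cloud.

```python
def adaptive_avg_pool1d(values, output_size):
    """Average each adaptive window so any input length maps to output_size values."""
    length = len(values)
    out = []
    for i in range(output_size):
        start = (i * length) // output_size               # floor(i * L / out)
        end = -((-(i + 1) * length) // output_size)       # ceil((i + 1) * L / out)
        window = values[start:end]
        out.append(sum(window) / len(window))
    return out


print(adaptive_avg_pool1d([1.0, 2.0, 3.0, 4.0], 2))  # [1.5, 3.5]
print(adaptive_avg_pool1d([1.0, 2.0], 4))            # [1.0, 1.0, 2.0, 2.0]
```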

# Build the discriminator network
import paddle
import paddle.nn.functional as F

# Blocks 1024/512/256: sigmoid, then adaptive average pooling to a fixed length
class Block1024(paddle.nn.Layer):
    def __init__(self):
        super(Block1024, self).__init__()
        self.pool = paddle.nn.AdaptiveAvgPool1D(output_size=1024)

    def forward(self, inputs):
        x = F.sigmoid(inputs)
        x = self.pool(x)
        return x

class Block512(paddle.nn.Layer):
    def __init__(self):
        super(Block512, self).__init__()
        self.pool = paddle.nn.AdaptiveAvgPool1D(output_size=512)

    def forward(self, inputs):
        x = F.sigmoid(inputs)
        x = self.pool(x)
        return x

class Block256(paddle.nn.Layer):
    def __init__(self):
        super(Block256, self).__init__()
        self.pool = paddle.nn.AdaptiveAvgPool1D(output_size=256)

    def forward(self, inputs):
        x = F.sigmoid(inputs)
        x = self.pool(x)
        return x

# Despite its name, Block128 max-pools to a length of 10
class Block128(paddle.nn.Layer):
    def __init__(self):
        super(Block128, self).__init__()
        self.pool = paddle.nn.AdaptiveMaxPool1D(output_size=10)

    def forward(self, inputs):
        x = F.sigmoid(inputs)
        x = self.pool(x)
        return x

# Output head: 10 -> 1 channel, sigmoid, then average to one score in [0, 1]
class BlockOut(paddle.nn.Layer):
    def __init__(self):
        super(BlockOut, self).__init__()
        self.conv = paddle.nn.Conv1D(in_channels=10, out_channels=1, kernel_size=3)
        self.pool = paddle.nn.AdaptiveAvgPool1D(output_size=1)

    def forward(self, inputs):
        x = self.conv(inputs)
        x = F.sigmoid(x)
        x = self.pool(x)
        return x

# Build the network by subclassing paddle.nn.Layer
class netD(paddle.nn.Layer):
    def __init__(self):
        super(netD, self).__init__()
        self.Block1024 = Block1024()
        self.Block512 = Block512()
        self.Block256 = Block256()
        self.Block128 = Block128()
        self.conv11 = paddle.nn.Conv1D(in_channels=3, out_channels=33, kernel_size=13)
        self.conv7a = paddle.nn.Conv1D(in_channels=33, out_channels=99, kernel_size=11)
        self.conv7b = paddle.nn.Conv1D(in_channels=33, out_channels=99, kernel_size=11)
        self.conv5a = paddle.nn.Conv1D(in_channels=99, out_channels=297, kernel_size=7)
        self.conv5b = paddle.nn.Conv1D(in_channels=99, out_channels=297, kernel_size=7)
        self.conv5c = paddle.nn.Conv1D(in_channels=99, out_channels=297, kernel_size=7)
        self.conv5d = paddle.nn.Conv1D(in_channels=99, out_channels=297, kernel_size=7)
        self.conv5e = paddle.nn.Conv1D(in_channels=99, out_channels=297, kernel_size=7)
        self.conv3a = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3b = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3c = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3d = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3e = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3f = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3g = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.conv3h = paddle.nn.Conv1D(in_channels=297, out_channels=10, kernel_size=5)
        self.BlockOut = BlockOut()

    def forward(self, inputs):
        x11 = self.conv11(inputs)
        a = self.Block1024(x11)
        x7 = self.conv7a(x11)
        b1 = self.Block512(x7)
        b2 = self.Block512(self.conv7b(a))
        xb = paddle.concat(x=[b1, b2], axis=-1)  # concatenate along the length axis
        x5 = self.conv5a(x7)
        xb5 = self.conv5b(xb)
        c1 = self.Block256(self.conv5c(b1))
        c2 = self.Block256(self.conv5d(b2))
        c3 = self.Block256(self.conv5e(xb))
        c4 = self.Block256(x5)
        c5 = self.Block256(xb5)
        xc = paddle.concat(x=[c1, c2, c3, c4, c5], axis=-1)
        x3 = self.conv3a(x5)
        xbc3 = self.conv3b(xb5)
        xc3 = self.conv3c(xc)
        d1 = self.Block128(self.conv3d(c1))
        d2 = self.Block128(self.conv3e(c2))
        d3 = self.Block128(self.conv3f(c3))
        d4 = self.Block128(self.conv3g(xc))
        d5 = self.Block128(self.conv3h(xb5))
        d6 = self.Block128(x3)
        d7 = self.Block128(xbc3)
        d8 = self.Block128(xc3)
        xd = paddle.concat(x=[d1, d2, d3, d4, d5, d6, d7, d8], axis=-1)
        output = self.BlockOut(xd)  # shape [batch, 1, 1]
        return output
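To see why the discriminator always ends in a single score, one can trace only the sequence lengths. With stride 1 and no padding, `Conv1D` outputs `L - k + 1`, and every adaptive pool resets the length to its `output_size`. Only the chain `conv11 → conv7a → conv5a → conv3a` depends on the input length; every other branch passes through a pool first. The hypothetical `trace_netD` below (plain Python, not Paddle code) follows that chain; for 1000 points its lengths match the `paddle.summary` output further down, and the concatenated input of `BlockOut` is always 8 × 10 = 80 long. By this arithmetic the input needs at least 33 points.

```python
def conv(length, k):
    """Output length of a stride-1, unpadded Conv1D with kernel size k."""
    out = length - k + 1
    if out < 1:
        raise ValueError("input too short for this kernel")
    return out


def trace_netD(n):
    """Lengths along the only branch of netD.forward that depends on n."""
    x11 = conv(n, 13)    # conv11
    x7 = conv(x11, 11)   # conv7a
    x5 = conv(x7, 7)     # conv5a
    x3 = conv(x5, 5)     # conv3a, then pooled to 10 by Block128
    xd = 8 * 10          # eight Block128 outputs of length 10, concatenated
    out = conv(xd, 3)    # BlockOut's Conv1D; AdaptiveAvgPool1D(1) then gives 1
    return [x11, x7, x5, x3, xd, out]


print(trace_netD(1000))  # [988, 978, 972, 968, 80, 78]
print(trace_netD(33))    # [21, 11, 5, 1, 80, 78]
```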

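5. Building the generator

A fairly conventional generator is used here (transposed convolutions, each followed by batch normalization and ReLU, with tanh at the output), extended to seven layers.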

# Build the generator
# noise shape: [1, 3, 100] (batch, channels, length)
class Layer1(paddle.nn.Layer):
    def __init__(self):
        super(Layer1, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=3, out_channels=33, kernel_size=7)
        self.bn = paddle.nn.BatchNorm1D(33)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

class Layer2(paddle.nn.Layer):
    def __init__(self):
        super(Layer2, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=33, out_channels=100, kernel_size=13)
        self.bn = paddle.nn.BatchNorm1D(100)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

class Layer3(paddle.nn.Layer):
    def __init__(self):
        super(Layer3, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=100, out_channels=300, kernel_size=17)
        self.bn = paddle.nn.BatchNorm1D(300)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

class Layer4(paddle.nn.Layer):
    def __init__(self):
        super(Layer4, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=300, out_channels=100, kernel_size=17)
        self.bn = paddle.nn.BatchNorm1D(100)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

class Layer5(paddle.nn.Layer):
    def __init__(self):
        super(Layer5, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=100, out_channels=33, kernel_size=13)
        self.bn = paddle.nn.BatchNorm1D(33)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

class Layer6(paddle.nn.Layer):
    def __init__(self):
        super(Layer6, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=33, out_channels=3, kernel_size=7)
        self.bn = paddle.nn.BatchNorm1D(3)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = self.bn(data)
        data = F.relu(data)
        return data

# Output layer: tanh keeps the generated coordinates in [-1, 1]
class Layer7(paddle.nn.Layer):
    def __init__(self):
        super(Layer7, self).__init__()
        self.convt = paddle.nn.Conv1DTranspose(in_channels=3, out_channels=3, kernel_size=5)

    def forward(self, inputs):
        data = self.convt(inputs)
        data = F.tanh(data)
        return data

class netG(paddle.nn.Layer):
    def __init__(self):
        super(netG, self).__init__()
        self.layer1 = Layer1()
        self.layer2 = Layer2()
        self.layer3 = Layer3()
        self.layer4 = Layer4()
        self.layer5 = Layer5()
        self.layer6 = Layer6()
        self.layer7 = Layer7()

    def forward(self, inputs):
        data = self.layer1(inputs)
        data = self.layer2(data)
        data = self.layer3(data)
        data = self.layer4(data)
        data = self.layer5(data)
        data = self.layer6(data)
        data = self.layer7(data)
        return data
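The generator grows the sequence instead: a stride-1, unpadded `Conv1DTranspose` outputs `L + k - 1`, so the seven kernel sizes (7, 13, 17, 17, 13, 7, 5) add 72 positions in total. A small sketch of that arithmetic (plain Python with a hypothetical `generated_points` helper, not Paddle code) shows how many points `netG` produces for the noise lengths used in this project:

```python
GENERATOR_KERNELS = [7, 13, 17, 17, 13, 7, 5]  # Layer1 ... Layer7


def generated_points(noise_length, kernels=GENERATOR_KERNELS):
    """Length after stride-1, unpadded transposed convolutions: L + k - 1 per layer."""
    length = noise_length
    for k in kernels:
        length += k - 1
    return length


for n in (100, 500, 1000):
    print(n, "->", generated_points(n))
# 100 -> 172
# 500 -> 572
# 1000 -> 1072
```

So the training loop, which samples noise of shape `[1, 3, 100]`, produces 172-point clouds, the test section's `[1, 3, 500]` noise yields 572 points, and the 1000-long summary input gives the 1072 shown by `paddle.summary`.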

D = netD()

G = netG()

paddle.summary(D, (1, 3, 1000))

paddle.summary(G, (1, 3, 1000))


-------------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===============================================================================

Conv1D-1 [[1, 3, 1000]] [1, 33, 988] 1,320

AdaptiveAvgPool1D-1 [[1, 33, 988]] [1, 33, 1024] 0

Block1024-1 [[1, 33, 988]] [1, 33, 1024] 0

Conv1D-2 [[1, 33, 988]] [1, 99, 978] 36,036

AdaptiveAvgPool1D-2 [[1, 99, 1014]] [1, 99, 512] 0

Block512-1 [[1, 99, 1014]] [1, 99, 512] 0

Conv1D-3 [[1, 33, 1024]] [1, 99, 1014] 36,036

Conv1D-4 [[1, 99, 978]] [1, 297, 972] 206,118

Conv1D-5 [[1, 99, 1024]] [1, 297, 1018] 206,118

Conv1D-6 [[1, 99, 512]] [1, 297, 506] 206,118

AdaptiveAvgPool1D-3 [[1, 297, 1018]] [1, 297, 256] 0

Block256-1 [[1, 297, 1018]] [1, 297, 256] 0

Conv1D-7 [[1, 99, 512]] [1, 297, 506] 206,118

Conv1D-8 [[1, 99, 1024]] [1, 297, 1018] 206,118

Conv1D-9 [[1, 297, 972]] [1, 10, 968] 14,860

Conv1D-10 [[1, 297, 1018]] [1, 10, 1014] 14,860

Conv1D-11 [[1, 297, 1280]] [1, 10, 1276] 14,860

Conv1D-12 [[1, 297, 256]] [1, 10, 252] 14,860

AdaptiveMaxPool1D-1 [[1, 10, 1276]] [1, 10, 10] 0

Block128-1 [[1, 10, 1276]] [1, 10, 10] 0

Conv1D-13 [[1, 297, 256]] [1, 10, 252] 14,860

Conv1D-14 [[1, 297, 256]] [1, 10, 252] 14,860

Conv1D-15 [[1, 297, 1280]] [1, 10, 1276] 14,860

Conv1D-16 [[1, 297, 1018]] [1, 10, 1014] 14,860

Conv1D-17 [[1, 10, 80]] [1, 1, 78] 31

AdaptiveAvgPool1D-4 [[1, 1, 78]] [1, 1, 1] 0

BlockOut-1 [[1, 10, 80]] [1, 1, 1] 0

===============================================================================

Total params: 1,222,893

Trainable params: 1,222,893

Non-trainable params: 0

-------------------------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 13.79

Params size (MB): 4.66

Estimated Total Size (MB): 18.47

-------------------------------------------------------------------------------

-----------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

=============================================================================

Conv1DTranspose-1 [[1, 3, 1000]] [1, 33, 1006] 726

BatchNorm1D-1 [[1, 33, 1006]] [1, 33, 1006] 132

Layer1-1 [[1, 3, 1000]] [1, 33, 1006] 0

Conv1DTranspose-2 [[1, 33, 1006]] [1, 100, 1018] 43,000

BatchNorm1D-2 [[1, 100, 1018]] [1, 100, 1018] 400

Layer2-1 [[1, 33, 1006]] [1, 100, 1018] 0

Conv1DTranspose-3 [[1, 100, 1018]] [1, 300, 1034] 510,300

BatchNorm1D-3 [[1, 300, 1034]] [1, 300, 1034] 1,200

Layer3-1 [[1, 100, 1018]] [1, 300, 1034] 0

Conv1DTranspose-4 [[1, 300, 1034]] [1, 100, 1050] 510,100

BatchNorm1D-4 [[1, 100, 1050]] [1, 100, 1050] 400

Layer4-1 [[1, 300, 1034]] [1, 100, 1050] 0

Conv1DTranspose-5 [[1, 100, 1050]] [1, 33, 1062] 42,933

BatchNorm1D-5 [[1, 33, 1062]] [1, 33, 1062] 132

Layer5-1 [[1, 100, 1050]] [1, 33, 1062] 0

Conv1DTranspose-6 [[1, 33, 1062]] [1, 3, 1068] 696

BatchNorm1D-6 [[1, 3, 1068]] [1, 3, 1068] 12

Layer6-1 [[1, 33, 1062]] [1, 3, 1068] 0

Conv1DTranspose-7 [[1, 3, 1068]] [1, 3, 1072] 48

Layer7-1 [[1, 3, 1068]] [1, 3, 1072] 0

=============================================================================

Total params: 1,110,079

Trainable params: 1,108,941

Non-trainable params: 1,138

-----------------------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 13.52

Params size (MB): 4.23

Estimated Total Size (MB): 17.76

-----------------------------------------------------------------------------

{'total_params': 1110079, 'trainable_params': 1108941}

6. Setting up the loss function and optimizers

train_loader = paddle.io.DataLoader(train_dataset, batch_size=1, shuffle=True)
loss = paddle.nn.BCELoss()
# Noise with the same [1, 3, 100] shape the training loop samples (not used below)
fixed_noise = paddle.randn([1, 3, 100], dtype='float32')
real_label = 1.
fake_label = 0.
optimizerD = paddle.optimizer.Adam(parameters=D.parameters(), learning_rate=0.0002, beta1=0.5, beta2=0.999)
optimizerG = paddle.optimizer.Adam(parameters=G.parameters(), learning_rate=0.0002, beta1=0.5, beta2=0.999)
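`BCELoss` expects probabilities, which is why `netD` ends in a sigmoid followed by average pooling. As a standard-library sketch of what the training loop computes (the scores are made-up numbers, `bce` is a hypothetical helper, and for simplicity one fake score is reused in both terms), the discriminator loss sums a real-label term and a fake-label term, while the generator loss applies the *real* label to the fake score:

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


real_label, fake_label = 1.0, 0.0
d_real, d_fake = 0.8, 0.3  # hypothetical discriminator outputs
errD = bce(d_real, real_label) + bce(d_fake, fake_label)
errG = bce(d_fake, real_label)  # the generator wants D(G(z)) to approach 1
print(round(errD, 4), round(errG, 4))  # 0.5798 1.204
```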

7. Training

The current generated point cloud is plotted every 100 batches.

losses = [[], []]  # losses[0]: discriminator, losses[1]: generator
#plt.ion()
for pass_id in range(17):
    for batch_id, data in enumerate(train_loader):
        ############################
        # (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))
        ###########################
        optimizerD.clear_grad()
        real_img = data[0]  # real point cloud, shape [1, 3, N]
        # show_3D(real_img)
        real_out = D(real_img)
        label = paddle.full((1, 1, 1), real_label, dtype='float32')
        errD_real = loss(real_out, label)
        errD_real.backward()
        noise = paddle.randn([1, 3, 100], 'float32')
        fake_img = G(noise)
        # show_3D(fake_img)
        fake_out = D(fake_img.detach())  # detach so this step does not update G
        label = paddle.full((1, 1, 1), fake_label, dtype='float32')
        errD_fake = loss(fake_out, label)
        errD_fake.backward()
        optimizerD.step()
        optimizerD.clear_grad()
        errD = errD_real + errD_fake
        losses[0].append(errD.item())  # .item() works for shape-[1] and 0-D losses
        ############################
        # (2) Update G network: maximize log(D(G(z)))
        ###########################
        optimizerG.clear_grad()
        noise = paddle.randn([1, 3, 100], 'float32')
        fake = G(noise)
        output = D(fake)
        label = paddle.full((1, 1, 1), real_label, dtype='float32')
        errG = loss(output, label)
        errG.backward()
        optimizerG.step()
        optimizerG.clear_grad()
        losses[1].append(errG.item())
        # plot the current generated point cloud every 100 batches
        if batch_id % 100 == 0:
            show_3D(fake)

![generated point cloud during training 1](main_files/main_22_0.png)
![generated point cloud during training 2](main_files/main_22_1.png)
![generated point cloud during training 3](main_files/main_22_2.png)
![generated point cloud during training 4](main_files/main_22_3.png)
![generated point cloud during training 5](main_files/main_22_4.png)
![generated point cloud during training 6](main_files/main_22_5.png)
![generated point cloud during training 7](main_files/main_22_6.png)
![generated point cloud during training 8](main_files/main_22_7.png)
![generated point cloud during training 9](main_files/main_22_8.png)
![generated point cloud during training 10](main_files/main_22_9.png)
![generated point cloud during training 11](main_files/main_22_10.png)
![generated point cloud during training 12](main_files/main_22_11.png)
![generated point cloud during training 13](main_files/main_22_12.png)
![generated point cloud during training 14](main_files/main_22_13.png)
![generated point cloud during training 15](main_files/main_22_14.png)

8. Plotting the loss curves

plt.plot(losses[0])

plt.show()

plt.plot(losses[1])

plt.show()
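With `batch_size=1` the per-step losses are very noisy, so trends can be hard to read from the raw curves. One optional approach, not part of the original project, is to also plot a simple moving average; a standard-library sketch with a hypothetical `moving_average` helper:

```python
def moving_average(values, window):
    """Mean of each run of `window` consecutive values (length: len(values) - window + 1)."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    total = sum(values[:window])
    out = [total / window]
    for i in range(window, len(values)):
        total += values[i] - values[i - window]  # slide the window by one step
        out.append(total / window)
    return out


print(moving_average([1.0, 3.0, 2.0, 4.0, 6.0], 2))  # [2.0, 2.5, 3.0, 5.0]
```

For example, `plt.plot(moving_average(losses[0], 50))` would draw a smoothed discriminator curve; the window size of 50 is an arbitrary illustrative choice.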

![discriminator loss](main_files/main_24_0.png)

![generator loss](main_files/main_24_1.png)

9. Saving the model

Each category is saved to its own model file.

paddle.save(G.state_dict(), "work/table17_generator.params")

10. Loading the model and testing generation

# Test

testG = netG()

testG.set_state_dict(paddle.load("work/table17_generator.params"))

noise = paddle.randn([1, 3, 500], 'float32')

g_img = testG(noise)

show_3D(g_img)

![point cloud generated by the loaded model](main_files/main_28_0.png)

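III. Project Summary

The network structure of this project is fairly simple, and the generated point clouds only roughly show the shape of the object. The project could be improved in the following directions:

- Choose a more suitable network architecture

- Enlarge the dataset

- Use a conditional GAN (CGAN) to control which kind of object is generated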


This article is a repost of the original project.
