
💡💡💡 All programs in this column have been tested and run successfully 💡💡💡


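MobileNetV2 is an efficient convolutional neural network architecture designed for the compute budgets of mobile and embedded devices. By introducing inverted residuals and linear bottleneck layers, it reduces both computation and parameter count while keeping good accuracy. Despite its low complexity, the network performs strongly on image classification, object detection, and semantic segmentation. After covering the main principles, this article walks step by step through adding and modifying the module code, and shares the complete modified code at the end so that you can run it directly; beginners can follow along too. The aim is to help you learn the YOLO family of deep-learning object detectors.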


1. Principle

Paper: MobileNetV2: Inverted Residuals and Linear Bottlenecks

Official code: official code repository

The principle section below is adapted from @太阳花的小绿豆.

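The network structure table of MobileNet v1 shows that the network is a straight stack, much like VGG, without shortcut connections such as those in ResNet. Some users have also reported that the depthwise (DW) convolutions in MobileNet v1 easily degenerate during training, so the results are not as good as hoped. So let us move on to MobileNet v2.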

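MobileNet v2 was proposed by the Google team in 2018. Compared with MobileNet v1, it is more accurate and smaller. As noted above, the highlight of MobileNet v1 is DW convolution; the highlight of MobileNet v2 is the inverted residual block. In the figure comparing the two, the left side is the residual structure from ResNet and the right side is the inverted residual structure from MobileNet v2. The residual structure goes 1x1 convolution (reduce channels) -> 3x3 convolution -> 1x1 convolution (expand channels). The inverted residual structure does the opposite: 1x1 convolution (expand channels) -> 3x3 DW convolution -> 1x1 convolution (reduce channels). Why? The paper's explanation is that high-dimensional features lose less information when passed through ReLU. Note that the inverted residual structure mostly uses the ReLU6 activation, but the final 1x1 convolution uses a linear activation.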
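To make the expand -> depthwise -> project order concrete, here is a minimal PyTorch sketch of one inverted residual block. The class name `InvertedResidual` and its argument names are illustrative assumptions, not the paper's or YOLOv5's code, and the shortcut connection is left out to keep the three stages visible.

```python
import torch
from torch import nn


class InvertedResidual(nn.Module):
    """Illustrative block: 1x1 expand -> 3x3 depthwise -> 1x1 linear projection."""

    def __init__(self, c_in: int, c_out: int, stride: int = 1, expand_ratio: int = 6):
        super().__init__()
        hidden = c_in * expand_ratio
        self.block = nn.Sequential(
            # 1x1 pointwise convolution raises the channel count
            nn.Conv2d(c_in, hidden, kernel_size=1, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # 3x3 depthwise convolution: one filter per channel (groups == channels)
            nn.Conv2d(hidden, hidden, kernel_size=3, stride=stride, padding=1,
                      groups=hidden, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # 1x1 pointwise projection back down, with no activation (linear bottleneck)
            nn.Conv2d(hidden, c_out, kernel_size=1, bias=False),
            nn.BatchNorm2d(c_out),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.block(x)


x = torch.randn(1, 16, 32, 32)
y = InvertedResidual(16, 24, stride=2)(x)
print(tuple(y.shape))  # (1, 24, 16, 16)
```

The nonlinearity is applied only in the expanded (96-channel) space; the narrow 24-channel output is left linear, which is the point of the paper's argument about ReLU.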

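When using the inverted residual structure, note that not every block has a shortcut connection. The shortcut exists only when stride=1 and the input and output feature maps have the same shape. Two tensors can only be added when their shapes match, and stride=1 alone does not guarantee that the input channel count equals the output channel count.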

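The structure table of MobileNet v2 uses four columns: t is the expansion factor (of the first 1x1 convolution in the inverted residual block), c is the number of output channels, n is the number of times the block is repeated, and s is the stride. Note that the stride applies only to the first of the n repeated blocks; the rest use stride 1.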
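The rules for s and for the shortcut can be checked by unrolling the table into one entry per block. The sketch below uses the (t, c, n, s) rows of the bottleneck stages as listed in the MobileNetV2 paper; the names `BlockCfg`, `STAGES`, and `expand_stages` are made up for this illustration.

```python
from typing import List, NamedTuple


class BlockCfg(NamedTuple):
    c_in: int
    c_out: int
    stride: int
    expand: int
    shortcut: bool


# (t, c, n, s) rows of the bottleneck stages in the MobileNetV2 paper
STAGES = [
    (1, 16, 1, 1),
    (6, 24, 2, 2),
    (6, 32, 3, 2),
    (6, 64, 4, 2),
    (6, 96, 3, 1),
    (6, 160, 3, 2),
    (6, 320, 1, 1),
]


def expand_stages(stages, c_in: int = 32) -> List[BlockCfg]:
    """Unroll (t, c, n, s) rows into one entry per block."""
    blocks = []
    for t, c, n, s in stages:
        for i in range(n):
            stride = s if i == 0 else 1  # only the first repeat uses stride s
            shortcut = stride == 1 and c_in == c  # shapes must match for the addition
            blocks.append(BlockCfg(c_in, c, stride, t, shortcut))
            c_in = c
    return blocks


blocks = expand_stages(STAGES)
for b in blocks:
    print(b)
print(len(blocks), "blocks,", sum(b.shortcut for b in blocks), "with a shortcut")
```

Under these rows, the first block of every stage has no shortcut, either because it downsamples or because it changes the channel count.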

2. Adding MobileNet v2 to YOLOv5

2.1 Code implementation of MobileNet v2

Key step 1: add the code below to yolov5/models/common.py

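class conv_bn_relu_maxpool(nn.Module):
    """Stem: 3x3 stride-2 Conv (conv + BN + ReLU) followed by a 3x3 stride-2 max-pool."""

    def __init__(self, c1, c2):  # ch_in, ch_out
        super(conv_bn_relu_maxpool, self).__init__()
        # Pass an nn.Module as act: the upstream YOLOv5 Conv turns any other
        # non-True value (including the string 'ReLU') into nn.Identity()
        self.conv = Conv(c1, c2, k=3, s=2, p=1, g=1, act=nn.ReLU())
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

    def forward(self, x):
        return self.maxpool(self.conv(x))

    def fuse(self):
        # Assumes the Conv in your common.py defines fuse(); the upstream class does not
        self.conv.fuse()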

# Note: RepVGGBlock relies on numpy (import numpy as np) and on conv_bn, SEBlock
# and CBAM, none of which are defined in this snippet.
class RepVGGBlock(nn.Module):

    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, use_se=False, use_cbam=False,
                 padding=1, dilation=1, groups=1, padding_mode='zeros', deploy=False):
        super(RepVGGBlock, self).__init__()
        self.deploy = deploy
        self.groups = groups
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.kernel_size = kernel_size
        self.stride = stride
        self.padding = padding
        self.dilation = dilation
        self.padding_mode = padding_mode

        padding_11 = padding - kernel_size // 2

        # self.nonlinearity = nn.SiLU()
        self.nonlinearity = nn.ReLU()

        if use_se or use_cbam:
            if use_se:
                self.se = SEBlock(out_channels, internal_neurons=out_channels // 16)
            if use_cbam:
                self.se = CBAM(out_channels, internal_neurons=out_channels // 16)
        else:
            self.se = nn.Identity()

        if deploy:
            self.rbr_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                         stride=stride,
                                         padding=padding, dilation=dilation, groups=groups, bias=True,
                                         padding_mode=padding_mode)
        else:
            self.rbr_identity = nn.BatchNorm2d(
                num_features=in_channels) if out_channels == in_channels and stride == 1 else None
            self.rbr_dense = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                     stride=stride, padding=padding, groups=groups)
            self.rbr_1x1 = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=stride,
                                   padding=padding_11, groups=groups)
            # print('RepVGG Block, identity = ', self.rbr_identity)

    def get_equivalent_kernel_bias(self):
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
        return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        if branch is None:
            return 0, 0
        if isinstance(branch, nn.Sequential):
            kernel = branch.conv.weight
            running_mean = branch.bn.running_mean
            running_var = branch.bn.running_var
            gamma = branch.bn.weight
            beta = branch.bn.bias
            eps = branch.bn.eps
        else:
            assert isinstance(branch, (nn.BatchNorm2d, nn.SyncBatchNorm))
            if not hasattr(self, 'id_tensor'):
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def forward(self, inputs):
        if hasattr(self, 'rbr_reparam'):
            return self.nonlinearity(self.se(self.rbr_reparam(inputs)))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        return self.nonlinearity(self.se(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out))

    # RepVGGBlock(in_channels=self.in_planes, out_channels=planes, kernel_size=3,
    #             stride=stride, padding=1, groups=1, deploy=self.deploy, use_se=self.use_se))

    def fuse(self):
        if not self.deploy:
            self.rbr_reparam = nn.Conv2d(in_channels=self.in_channels, out_channels=self.out_channels,
                                         kernel_size=self.kernel_size,
                                         stride=self.stride,
                                         padding=self.padding, dilation=self.dilation, groups=self.groups, bias=True,
                                         padding_mode=self.padding_mode).requires_grad_(False).to(
                self.rbr_dense.conv.weight.device)
            self.deploy = True
            kernel, bias = self.get_equivalent_kernel_bias()
            self.rbr_reparam.weight.data = kernel
            self.rbr_reparam.bias.data = bias
            self.forward = self.fusevggforward
            self.__delattr__('rbr_identity')
            self.rbr_dense.__delattr__('conv')
            self.rbr_dense.__delattr__('bn')
            self.rbr_1x1.__delattr__('conv')
            self.rbr_1x1.__delattr__('bn')
            del self._modules['rbr_dense']
            del self._modules['rbr_1x1']

    def fusevggforward(self, inputs):
        return self.nonlinearity(self.se(self.rbr_reparam(inputs)))

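class MobileNetV2_Block(nn.Module):
    """Inverted residual block: 1x1 expansion -> 3x3 depthwise -> 1x1 linear projection."""

    def __init__(self, inp, oup, stride=1, expand_ratio=1):
        super(MobileNetV2_Block, self).__init__()
        assert stride in [1, 2]
        self.stride = stride
        # The shortcut is used only when input and output shapes match
        self.identity = stride == 1 and inp == oup
        hidden_dim = int(round(inp * expand_ratio))
        # act is passed as an nn.Module: the upstream YOLOv5 Conv turns any other
        # non-True value (including the string 'ReLU') into nn.Identity()
        if expand_ratio != 1:
            self.conv = nn.Sequential(
                Conv(inp, hidden_dim, k=1, s=1, p=0, act=nn.ReLU()),  # 1x1 expansion
                DWConv(hidden_dim, hidden_dim, k=3, s=stride, act=nn.ReLU()),  # 3x3 depthwise
                Conv(hidden_dim, oup, k=1, s=1, p=0, act=False),  # 1x1 linear projection
            )
        else:
            # No expansion layer when expand_ratio == 1 (hidden_dim == inp)
            self.conv = nn.Sequential(
                DWConv(hidden_dim, hidden_dim, k=3, s=stride, act=nn.ReLU()),
                Conv(hidden_dim, oup, k=1, s=1, p=0, act=False),
            )

    def forward(self, x):
        y = self.conv(x)
        if self.identity:
            return x + y
        else:
            return y

    def fuse(self):
        # Assumes Conv/DWConv in your common.py define fuse(); the upstream classes
        # do not, and Model.fuse() in yolo.py performs conv + BN fusion there
        for m in self.conv:
            if isinstance(m, (Conv, DWConv, RepVGGBlock)):
                m.fuse()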
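As a sanity check on MobileNetV2_Block, its parameter count can be worked out by hand and compared with the params column of the model summary printed in section 2.4. The sketch below assumes that Conv and DWConv are a bias-free convolution followed by BatchNorm (as in upstream YOLOv5) and that DWConv uses one group per channel; the helper names are illustrative.

```python
def conv_bn_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Learnable parameters of a bias-free convolution followed by BatchNorm."""
    conv = c_out * (c_in // groups) * k * k
    bn = 2 * c_out  # BatchNorm weight and bias
    return conv + bn


def mobilenetv2_block_params(inp: int, oup: int, expand_ratio: int) -> int:
    """Parameter count of one block; the stride does not affect it."""
    hidden = int(round(inp * expand_ratio))
    total = 0
    if expand_ratio != 1:
        total += conv_bn_params(inp, hidden, k=1)  # 1x1 expansion
    total += conv_bn_params(hidden, hidden, k=3, groups=hidden)  # 3x3 depthwise
    total += conv_bn_params(hidden, oup, k=1)  # 1x1 linear projection
    return total


print(mobilenetv2_block_params(32, 16, 1))      # 896
print(mobilenetv2_block_params(16, 24, 6))      # 5136
print(mobilenetv2_block_params(24, 24, 6))      # 8832
print(2 * mobilenetv2_block_params(32, 32, 6))  # 29696 (block repeated twice)
```

These values agree with rows 1, 2, 3 and 5 of the summary, which suggests the block is built as intended.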

2.2 Adding the yaml file

Key step 2: create a new file yolov5_MobileNetv2.yaml under /yolov5/models and copy the code below into it

- Object detection yaml file

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# MobileNetV2 backbone
# MobileNetV2_Block args: [out_ch, stride, expand_ratio]
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [32, 3, 2]],  # 0-P1/2
   [-1, 1, MobileNetV2_Block, [16, 1, 1]],  # 1
   [-1, 1, MobileNetV2_Block, [24, 2, 6]],  # 2-P2/4
   [-1, 1, MobileNetV2_Block, [24, 1, 6]],  # 3
   [-1, 1, MobileNetV2_Block, [32, 2, 6]],  # 4-P3/8
   [-1, 2, MobileNetV2_Block, [32, 1, 6]],  # 5
   [-1, 1, MobileNetV2_Block, [64, 2, 6]],  # 6-P4/16
   [-1, 3, MobileNetV2_Block, [64, 1, 6]],  # 7
   [-1, 1, MobileNetV2_Block, [96, 1, 6]],  # 8
   [-1, 2, MobileNetV2_Block, [96, 1, 6]],  # 9
   [-1, 1, MobileNetV2_Block, [160, 2, 6]],  # 10-P5/32
   [-1, 2, MobileNetV2_Block, [160, 1, 6]],  # 11
   [-1, 1, MobileNetV2_Block, [320, 1, 6]],  # 12
   [-1, 1, SPPF, [1024, 5]],  # 13
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [256, 1, 1]],  # 14
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 9], 1, Concat, [1]],  # cat backbone P4
   [-1, 1, C3, [256, False]],  # 17

   [-1, 1, Conv, [128, 1, 1]],  # 18
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 5], 1, Concat, [1]],  # cat backbone P3
   [-1, 1, C3, [128, False]],  # 21 (P3/8-small)

   [-1, 1, Conv, [128, 3, 2]],
   [[-1, 18], 1, Concat, [1]],  # cat head P4
   [-1, 1, C3, [256, False]],  # 24 (P4/16-medium)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P5
   [-1, 1, C3, [512, False]],  # 27 (P5/32-large)

   [[21, 24, 27], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]

- Segmentation yaml file

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# MobileNetV2 backbone
# MobileNetV2_Block args: [out_ch, stride, expand_ratio]
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [32, 3, 2]],  # 0-P1/2
   [-1, 1, MobileNetV2_Block, [16, 1, 1]],  # 1
   [-1, 1, MobileNetV2_Block, [24, 2, 6]],  # 2-P2/4
   [-1, 1, MobileNetV2_Block, [24, 1, 6]],  # 3
   [-1, 1, MobileNetV2_Block, [32, 2, 6]],  # 4-P3/8
   [-1, 2, MobileNetV2_Block, [32, 1, 6]],  # 5
   [-1, 1, MobileNetV2_Block, [64, 2, 6]],  # 6-P4/16
   [-1, 3, MobileNetV2_Block, [64, 1, 6]],  # 7
   [-1, 1, MobileNetV2_Block, [96, 1, 6]],  # 8
   [-1, 2, MobileNetV2_Block, [96, 1, 6]],  # 9
   [-1, 1, MobileNetV2_Block, [160, 2, 6]],  # 10-P5/32
   [-1, 2, MobileNetV2_Block, [160, 1, 6]],  # 11
   [-1, 1, MobileNetV2_Block, [320, 1, 6]],  # 12
   [-1, 1, SPPF, [1024, 5]],  # 13
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [256, 1, 1]],  # 14
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 9], 1, Concat, [1]],  # cat backbone P4
   [-1, 1, C3, [256, False]],  # 17

   [-1, 1, Conv, [128, 1, 1]],  # 18
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 5], 1, Concat, [1]],  # cat backbone P3
   [-1, 1, C3, [128, False]],  # 21 (P3/8-small)

   [-1, 1, Conv, [128, 3, 2]],
   [[-1, 18], 1, Concat, [1]],  # cat head P4
   [-1, 1, C3, [256, False]],  # 24 (P4/16-medium)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P5
   [-1, 1, C3, [512, False]],  # 27 (P5/32-large)

   [[21, 24, 27], 1, Segment, [nc, anchors, 32, 256]],  # Segment(P3, P4, P5)
  ]

Note: this article adds the module to the base YOLOv5 configuration only. To apply it to YOLOv5n/s/m/l/x, just set the corresponding depth_multiple and width_multiple.

# YOLOv5n
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.25  # layer channel multiple

# YOLOv5s
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# YOLOv5l
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple

# YOLOv5m
depth_multiple: 0.67  # model depth multiple
width_multiple: 0.75  # layer channel multiple

# YOLOv5x
depth_multiple: 1.33  # model depth multiple
width_multiple: 1.25  # layer channel multiple

2.3 Registering the module

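Key step 3: register the module in the parse_model function of yolo.py by adding "MobileNetV2_Block" (the class name defined in common.py) to the list of modules handled there.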
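Registration matters because parse_model rewrites the yaml arguments of registered modules before constructing them. The sketch below is a simplified, standalone imitation of that step, not the upstream code: `resolve_entry` is a hypothetical helper, and it assumes the usual YOLOv5 behaviour of scaling the first argument by width_multiple, rounding it up to a multiple of 8, and prepending the input channel count.

```python
import math


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def resolve_entry(c_in: int, number: int, yaml_args: list,
                  depth_multiple: float, width_multiple: float):
    """Return (repeat count, constructor args) for one yaml row of a registered module."""
    n = max(round(number * depth_multiple), 1) if number > 1 else number
    c_out = make_divisible(yaml_args[0] * width_multiple, 8)
    return n, [c_in, c_out, *yaml_args[1:]]


# Row 2 of the backbone with the multiples used in this article (1.0 / 1.0)
print(resolve_entry(16, 1, [24, 2, 6], 1.0, 1.0))    # (1, [16, 24, 2, 6])
# Row 7 with the YOLOv5s multiples, assuming the previous layer outputs 32 channels
print(resolve_entry(32, 3, [64, 1, 6], 0.33, 0.50))  # (1, [32, 32, 1, 6])
```

The first result matches the arguments column of the model summary in section 2.4; without registration the block would receive only [24, 2, 6] and be built with the wrong channel counts.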

2.4 Running the program

In train.py, set the cfg argument to the path of yolov5_MobileNetv2.yaml.

An absolute path is recommended, so that the file is sure to be found.
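One way to avoid path mistakes is to build the absolute path in code and pass it through the --cfg option of train.py. This is a small sketch; `build_train_command` is a hypothetical helper and the repository location "yolov5" is an assumption to adjust for your machine.

```python
import sys
from pathlib import Path


def build_train_command(yolov5_root: str, cfg_name: str = "yolov5_MobileNetv2.yaml") -> list:
    """Return an argv list that launches train.py with an absolute --cfg path."""
    root = Path(yolov5_root).resolve()  # resolve() yields an absolute path
    cfg = root / "models" / cfg_name
    return [sys.executable, str(root / "train.py"), "--cfg", str(cfg)]


cmd = build_train_command("yolov5")
print(" ".join(cmd))
# To start training: subprocess.run(cmd, check=True)
```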

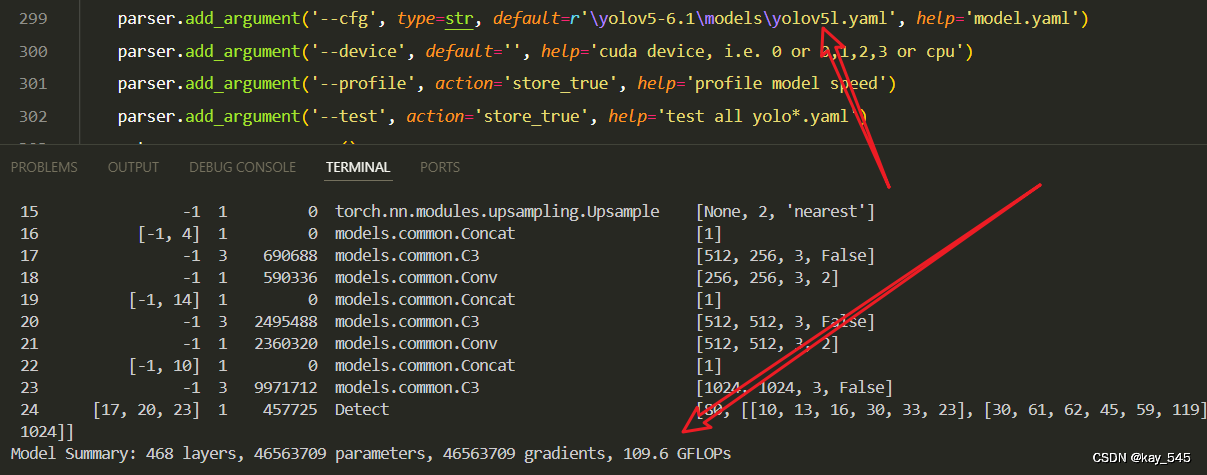

🚀 Run the program; if output like the following appears, the module was added successfully 🚀

                 from  n    params  module                                  arguments
  0                -1  1       928  models.common.Conv                      [3, 32, 3, 2]
  1                -1  1       896  models.common.MobileNetV2_Block         [32, 16, 1, 1]
  2                -1  1      5136  models.common.MobileNetV2_Block         [16, 24, 2, 6]
  3                -1  1      8832  models.common.MobileNetV2_Block         [24, 24, 1, 6]
  4                -1  1     10000  models.common.MobileNetV2_Block         [24, 32, 2, 6]
  5                -1  2     29696  models.common.MobileNetV2_Block         [32, 32, 1, 6]
  6                -1  1     21056  models.common.MobileNetV2_Block         [32, 64, 2, 6]
  7                -1  3    162816  models.common.MobileNetV2_Block         [64, 64, 1, 6]
  8                -1  1     66624  models.common.MobileNetV2_Block         [64, 96, 1, 6]
  9                -1  2    236544  models.common.MobileNetV2_Block         [96, 96, 1, 6]
 10                -1  1    155264  models.common.MobileNetV2_Block         [96, 160, 2, 6]
 11                -1  2    640000  models.common.MobileNetV2_Block         [160, 160, 1, 6]
 12                -1  1    473920  models.common.MobileNetV2_Block         [160, 320, 1, 6]
 13                -1  1    708928  models.common.SPPF                      [320, 1024, 5]
 14                -1  1    262656  models.common.Conv                      [1024, 256, 1, 1]
 15                -1  1         0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
 16           [-1, 9]  1         0  models.common.Concat                    [1]
 17                -1  1    321024  models.common.C3                        [352, 256, 1, False]
 18                -1  1     33024  models.common.Conv                      [256, 128, 1, 1]
 19                -1  1         0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
 20           [-1, 5]  1         0  models.common.Concat                    [1]
 21                -1  1     78592  models.common.C3                        [160, 128, 1, False]
 22                -1  1    147712  models.common.Conv                      [128, 128, 3, 2]
 23          [-1, 18]  1         0  models.common.Concat                    [1]
 24                -1  1    296448  models.common.C3                        [256, 256, 1, False]
 25                -1  1    590336  models.common.Conv                      [256, 256, 3, 2]
 26          [-1, 14]  1         0  models.common.Concat                    [1]
 27                -1  1   1182720  models.common.C3                        [512, 512, 1, False]
 28      [21, 24, 27]  1    229245  Detect                                  [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

YOLOv5 summary: 347 layers, 5662397 parameters, 5662397 gradients, 11.5 GFLOPs

3. Complete code

https://pan.baidu.com/s/1uxmTHtaXpeL-hWyP1-me1w?pwd=r6hg (extraction code: r6hg)

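4. GFLOPs

For how GFLOPs are computed, see: 100 Interview Questions for Algorithm Engineers | Convolution Basics (Convolution)

GFLOPs before the modification

GFLOPs after the modification

I do not have a GPU available at the moment; I will fill this in once I do. Readers who need the numbers can measure them themselves.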
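Until measured numbers are available, the cost of a single convolution can be estimated by hand. This is a rough sketch that counts one multiply-accumulate (MAC) per weight per output position and assumes FLOPs = 2 × MACs; bias, BatchNorm and activations are ignored, and the helper names are illustrative.

```python
def conv_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int, groups: int = 1) -> int:
    """Multiply-accumulate operations of one k x k convolution."""
    return (c_in // groups) * c_out * k * k * h_out * w_out


def gflops(macs: int) -> float:
    """Convert MACs to GFLOPs under the 2 x MACs convention."""
    return 2 * macs / 1e9


# First layer of the backbone, Conv [3, 32, 3, 2], on a 640x640 input (320x320 output)
stem = conv_macs(3, 32, 3, 320, 320)
print(stem, round(gflops(stem), 3))  # 88473600 0.177

# A standard 3x3 convolution versus a depthwise one on 96 channels at 80x80
standard = conv_macs(96, 96, 3, 80, 80)
depthwise = conv_macs(96, 96, 3, 80, 80, groups=96)
print(standard // depthwise)  # 96
```

The second comparison shows where the savings come from: a depthwise 3x3 convolution costs a factor of the channel count less than a standard one of the same width.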

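5. Going further

The module can be combined with changes to the loss function or to the convolution modules for multiple improvements.

YOLOv5 Improvements | Loss Functions | EIoU, SIoU, WIoU, DIoU, FocuSIoU and other loss functions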

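6. Summary

MobileNetV2 is an efficient convolutional neural network architecture proposed by Google in 2018 and designed for mobile and embedded devices. By introducing inverted residuals and linear bottleneck layers, it improves computational efficiency and markedly reduces computation and parameter count. MobileNetV2 performs well on image classification, object detection, and semantic segmentation, and on resource-constrained devices it can deliver accuracy close to that of larger models.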
